It's a start of something great, but it needs more functions! For example:

- VM controls (start/stop)

- VM Information

- VM Import/Export

- Add/remove storage

- SR Information

- Insert/remove DVD

- Toolstack restart

- Warnings (low memory/storage space)

@gawlo If you can reboot, then you can try that, but if the process starts again after the reboot, it will still block your mountpoint.

And the mountpoint is given by the output of this command:

xe pbd-param-get param-name=device-config uuid=44e9e1b7-5a7e-8e95-c5f1-edeebbc6863c

@technot When you say performance was a bit on the low end when dom0 handled the drive, how low was it compared to when the controller was passed through?

ZFS is still good with a small number of disks, but its performance won't exceed RAID10 until you have more vdevs (i.e. striping across multiple vdevs). You do need to pass the raw disks through to dom0, so you'll have to destroy the RAID as you mentioned. Also, the more RAM the better for ZFS: usually around 1 GB per 1 TB of storage for good caching performance.

One thing to note: you can't use ZFS for dom0 yet, so you still need a separate drive for XCP-ng itself.
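To turn the "1 GB of RAM per 1 TB of storage" rule of thumb into a number for a given pool, a tiny helper is enough. This is a sketch: `zfs_ram_hint_gb` is a hypothetical name, it treats 1 TB as 10^12 bytes, and the result is only the caching guideline from this post, not a hard ZFS requirement.

```shell
#!/usr/bin/env bash
# Rough RAM suggestion (in GB) for a ZFS pool, based on the
# "~1 GB of RAM per 1 TB of storage" rule of thumb (a guideline only).
# $1: pool size in bytes, e.g. from: zpool list -Hp -o size <pool>
zfs_ram_hint_gb() {
    local bytes=$1
    local tb=1000000000000   # 1 TB = 10^12 bytes (decimal, as disks are sold)
    # Round up, so a 4.5 TB pool suggests 5 GB rather than 4 GB.
    echo $(( (bytes + tb - 1) / tb ))
}
```

For example, `zfs_ram_hint_gb "$(zpool list -Hp -o size tank)"`, where `tank` is a placeholder pool name; `-H` drops the header and `-p` prints the exact size in bytes.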

@gawlo To check what is using the mountpoint, as Olivier suggested:

lsof +D /mountpoint

@gawlo Have you tried restarting the host? Maybe also check whether the xapi service is running:

systemctl status xapi.service

Machine type? Are you talking about the QEMU settings? If so, those only apply to emulation. XCP-ng does virtualization, so your VM gets the same hardware as the physical hardware on your host.

You can use the following command to display all the PCI devices currently hidden for passthrough, then set them up again without the one you want to remove:

/opt/xensource/libexec/xen-cmdline --get-dom0 xen-pciback.hide
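Rebuilding the list by hand is easy to get wrong when several devices are hidden, so here is a small sketch that drops one address from the `xen-pciback.hide=(...)(...)` value. It assumes the value looks like `(0000:04:00.0)(0000:05:00.0)`, with or without the `xen-pciback.hide=` prefix; `pci_hide_without` is a hypothetical helper and only manipulates the string, it does not touch the host.

```shell
#!/usr/bin/env bash
# Remove one PCI address from a xen-pciback.hide value such as
#   xen-pciback.hide=(0000:04:00.0)(0000:05:00.0)
# $1: current value (the "xen-pciback.hide=" prefix is optional)
# $2: PCI address to drop, e.g. 0000:04:00.0
# Prints the remaining "(...)(...)" list, which may be empty.
pci_hide_without() {
    local close=')'
    local rest=${1#xen-pciback.hide=}
    local drop="($2)"
    local result="" entry
    while [ -n "$rest" ]; do
        # Stop if what is left has no closing ")", to avoid looping forever.
        if [[ $rest != *"$close"* ]]; then
            break
        fi
        entry=${rest%%"$close"*}$close   # first entry, e.g. "(0000:04:00.0)"
        rest=${rest#*"$close"}           # everything after that entry
        if [ "$entry" != "$drop" ]; then
            result+=$entry
        fi
    done
    printf '%s\n' "$result"
}
```

Usage idea: `pci_hide_without "$(/opt/xensource/libexec/xen-cmdline --get-dom0 xen-pciback.hide)" 0000:04:00.0`, then write the result back with `/opt/xensource/libexec/xen-cmdline --set-dom0 "xen-pciback.hide=<result>"` (the form used in the XCP-ng PCI passthrough docs, as far as I recall) and reboot the host. If nothing is left, removing the parameter entirely with `--delete-dom0 xen-pciback.hide` should be cleaner, but please double-check that against the docs for your version.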

The second option basically forces the static IP through XCP-ng. If you have SSH access to your XOA VM, you can also use option 3 (hint: XOA is based on Debian 10).

The steps for option 2 are:

xe vif-list vm-uuid=<UUID of your XOA VM>

xe vif-configure-ipv4 uuid=<UUID of your XOA VM VIF> mode=static address=<IP address/Subnet mask> gateway=<Gateway address>
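As far as I know, `address=` expects CIDR notation (e.g. `192.168.1.50/24`), not a dotted subnet mask like `255.255.255.0`. If you only have the dotted mask, this sketch converts it to a prefix length; `mask_to_prefix` is a hypothetical helper, and it rejects non-contiguous masks but does not validate octets above 255.

```shell
#!/usr/bin/env bash
# Convert a dotted IPv4 netmask (e.g. 255.255.255.0) to a CIDR prefix (24).
# Returns non-zero for non-contiguous masks such as 255.0.255.0.
mask_to_prefix() {
    local IFS=.
    local a b c d
    read -r a b c d <<< "$1"
    # Pack the four octets into one 32-bit integer.
    local n=$(( (a << 24) | (b << 16) | (c << 8) | d ))
    local prefix=0
    # Count leading 1 bits.
    while (( n & 0x80000000 )); do
        prefix=$(( prefix + 1 ))
        n=$(( (n << 1) & 0xFFFFFFFF ))
    done
    # Any 1 bit left over means the mask was not contiguous.
    if (( n != 0 )); then
        echo "non-contiguous mask: $1" >&2
        return 1
    fi
    echo "$prefix"
}
```

For example, `address=192.168.1.50/$(mask_to_prefix 255.255.255.0)` expands to `address=192.168.1.50/24` (the IP here is just a placeholder).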

There is only so much you can do through a web browser; I think your best bet is to click "SSH" or "SSH as..." to open PuTTY instead.

While we're at it: most CPU icons have "legs" on 4 sides and RAM icons have "legs" on 2 sides, so the current CPU icon looks like a RAM chip instead.

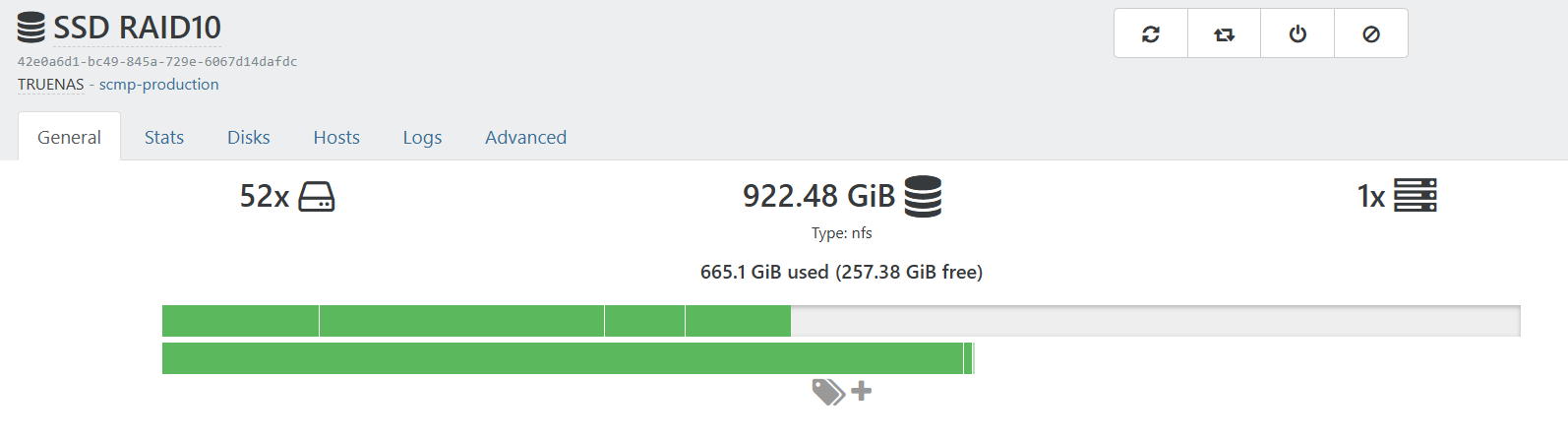

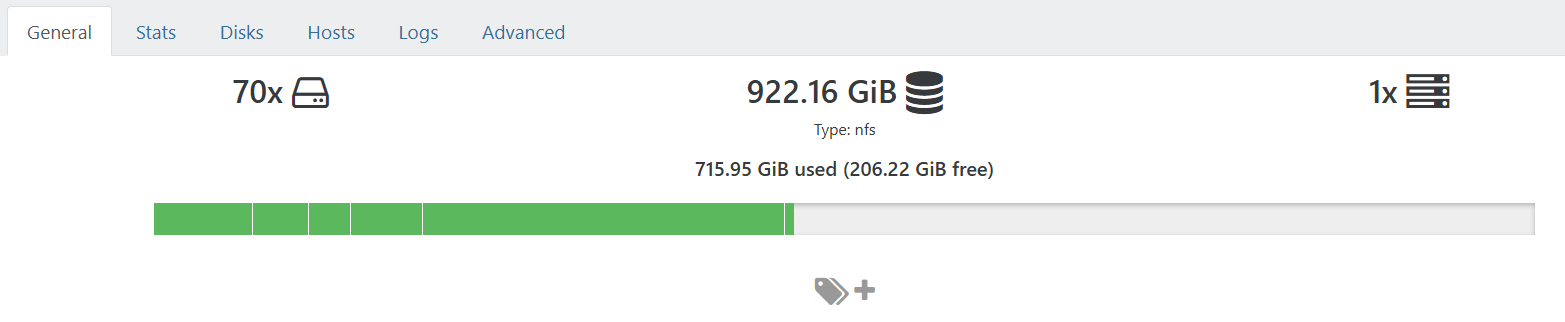

I have another weird one. The numeric representation is correct, but the bar graph is unintuitive.

@olivierlambert But in my case, I have nothing in that NFS share except XCP-ng VHDs (a single SR folder with nothing outside it). I just checked: du -h on my NFS share gives 572G, and df -h shows about the same usage, 572.1G. If I add up the total allocated disk space of all VMs using this NFS share, it is only 450G, so there are over 100G (572G - 450G = 122G) of extra file system usage (from snapshots?).
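One way to reproduce the "allocated vs. actually used" comparison per SR is to sum the VDI sizes that xe reports. This is a sketch under an assumption: that `xe vdi-list sr-uuid=<SR UUID> params=virtual-size --minimal` prints the values as one comma-separated list of byte counts (that is how `--minimal` usually behaves, but please verify on your version). `sum_bytes_gib` is a hypothetical helper.

```shell
#!/usr/bin/env bash
# Sum a comma-separated list of byte counts (e.g. from `xe ... --minimal`)
# and print the total in GiB with two decimals (truncated, not rounded).
sum_bytes_gib() {
    local total=0 v
    local IFS=,
    for v in $1; do            # unquoted on purpose: split on commas via IFS
        total=$(( total + v ))
    done
    local gib=1073741824       # 1 GiB = 2^30 bytes
    printf '%d.%02d\n' $(( total / gib )) $(( (total % gib) * 100 / gib ))
}
```

Running it once with `params=virtual-size` and once with `params=physical-utilisation` for the same SR should show how much of the du/df figure comes from VDIs (including snapshots) rather than from the disks the VMs see.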

I now understand what the numbers represent (the actual physical disk usage, not VM disk usage), but the bar graph still doesn't show the same thing. So just to clarify, does the bar graph actually show VM disk usage rather than physical disk usage?

@olivierlambert Thank you for replying. It is indeed what the NFS share reports as free, but why is there such a discrepancy between the NFS file size and the actual VM disk size? Is it to do with VDI coalescing?

@peder I think the bar is correct: after adding up all the allocated disks I get 450 GiB, but the 715.95 GiB used figure is incorrect. I can't figure out where this number comes from; maybe it also counts backups? But I don't store backups on this NFS share.

Hello, does anyone else have this problem, or is it just my configuration?

The number says 200 GiB free, which should be around 20% free, but the graph clearly shows more than 50% free.
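To check which percentage is right, you can ask the filesystem itself from a machine that mounts the share. This sketch uses `df -P`, whose POSIX output has one line per filesystem with size and available space in 1024-byte blocks; `pct_free` is a hypothetical helper, and the path you pass (e.g. the SR mountpoint) is up to you.

```shell
#!/usr/bin/env bash
# Print the percentage of a filesystem that is available, as reported by df.
# $1: any path on the filesystem (e.g. the SR mountpoint).
pct_free() {
    # df -P columns: Filesystem 1024-blocks Used Available Capacity Mounted-on
    # Row 2 is the filesystem; print Available * 100 / Size, truncated.
    df -P "$1" | awk 'NR == 2 { printf "%d\n", $4 * 100 / $2 }'
}
```

Note that reserved blocks can make Used + Available smaller than the total size, so this can read slightly lower than 100 minus the used percentage.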

OK, I figured it out in the end. Running xe pool-list on the existing pool showed me the hosts in the pool, and one of them had been taken offline without being detached in XO. So I ran xe host-forget uuid=<host uuid>, because xe pool-eject doesn't work on an offline host. Now I can add the new host just fine. Thanks everyone for helping!
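If you need to spot such a stale host, one option is to list hosts together with their `host-metrics-live` field and pick out the ones reporting `false`. This sketch assumes xe prints each record as `field ( RO): value` lines with the `uuid` line first (the usual `xe host-list` layout, but check yours); `offline_host_uuids` is a hypothetical helper that only reads text.

```shell
#!/usr/bin/env bash
# Print UUIDs of hosts whose host-metrics-live is "false", reading the
# output of: xe host-list params=uuid,host-metrics-live
# ASSUMPTION: each record starts with a "uuid ( RO) : <uuid>" line.
offline_host_uuids() {
    awk -F': ' '
        { value = $2; sub(/[ \t\r]+$/, "", value) }     # trim trailing spaces
        $1 ~ /^uuid/ { uuid = value }                     # start of a host record
        $1 ~ /host-metrics-live/ && value == "false" { print uuid }
    '
}
```

Usage: `xe host-list params=uuid,host-metrics-live | offline_host_uuids`. Treat the output as a candidate list only: host-forget cannot be undone, so make sure the host is really gone for good before running it.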

Updating the BIOS of the new host made no difference. So I tried adding it to my test pool and, what do you know, it works without any issue. The test pool's master has an even older CPU, so I don't think it's a compatibility issue.

There must be something wrong with my main pool that I'm trying to add the new host to.

@nick-lloyd The new host has a newer CPU; the old host already in the pool has an older CPU. I will try updating the BIOS of the new host and see if it helps. I don't think the old host will have any updates.

@nick-lloyd They are both Xeons, but around 4 generations apart. How do I check whether they are compatible? I have successfully added a non-Xeon and a Xeon to the same pool before (there were warnings that some features would be disabled), so I thought this should be fine too, but please let me know otherwise.

More information: both are XCP-ng 8.2.1. I ran the host-all-editions command and both return just xcp-ng.