Hi,

I was migrating one of our important VMs from a problematic pool to a new "clean" one, but the migration failed halfway through. I believe this is the relevant log entry:

vm.migrate
{
  "vm": "b60762de-c222-2911-e18b-488c56e21646",
  "mapVifsNetworks": {
    "bc94ab3c-20ed-b188-5010-a4a9ea7c9196": "c0bd86ce-faaa-4fd9-a702-c093c3f5c326"
  },
  "migrationNetwork": "c0bd86ce-faaa-4fd9-a702-c093c3f5c326",
  "sr": "cb379d8c-0226-49aa-327f-00d12f63f43e",
  "targetHost": "cebfed0d-8a78-4d69-8817-2b9a4c81c59b"
}
{
  "code": 21,
  "data": {
    "objectId": "b60762de-c222-2911-e18b-488c56e21646",
    "code": "MIRROR_FAILED"
  },
  "message": "operation failed",
  "name": "XoError",
  "stack": "XoError: operation failed
    at operationFailed (/usr/local/lib/node_modules/xo-server/node_modules/xo-common/src/api-errors.js:21:32)
    at file:///usr/local/lib/node_modules/xo-server/src/api/vm.mjs:561:15
    at Xo.migrate (file:///usr/local/lib/node_modules/xo-server/src/api/vm.mjs:547:3)
    at Api.#callApiMethod (file:///usr/local/lib/node_modules/xo-server/src/xo-mixins/api.mjs:401:20)"
}

After that, the VM locked up. I restarted it, but it failed to boot with the following error:

vm.start
{
  "id": "b60762de-c222-2911-e18b-488c56e21646",
  "bypassMacAddressesCheck": false,
  "force": false
}
{
  "code": "SR_BACKEND_FAILURE_46",
  "params": [
    "",
    "The VDI is not available [opterr=VDI 28aaf3f0-447c-44a2-ad8e-e7c159b41ef8 not detached cleanly]",
    ""
  ],
  "call": {
    "method": "VM.start",
    "params": [
      "OpaqueRef:aee5c672-049e-4533-81a2-7ac0163d818c",
      false,
      false
    ]
  },
  "message": "SR_BACKEND_FAILURE_46(, The VDI is not available [opterr=VDI 28aaf3f0-447c-44a2-ad8e-e7c159b41ef8 not detached cleanly], )",
  "name": "XapiError",
  "stack": "XapiError: SR_BACKEND_FAILURE_46(, The VDI is not available [opterr=VDI 28aaf3f0-447c-44a2-ad8e-e7c159b41ef8 not detached cleanly], )
    at Function.wrap (/usr/local/lib/node_modules/xo-server/node_modules/xen-api/src/_XapiError.js:16:12)
    at /usr/local/lib/node_modules/xo-server/node_modules/xen-api/src/transports/json-rpc.js:35:21"
}
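To confirm which disk XAPI is complaining about (for example, to match it against the disks shown in XOA), you can read the VDI UUID directly from the `params` of the `SR_BACKEND_FAILURE_46` error. This is a minimal Python sketch that assumes the error JSON has been copied out of the XO log as shown above. The regex and the `failing_vdis` helper are illustrative only and are not part of XO or XAPI:

```python
import json
import re
from typing import List

# Error payload copied from the XO vm.start log above, trimmed to the fields used here.
ERROR_JSON = """
{
  "code": "SR_BACKEND_FAILURE_46",
  "params": [
    "",
    "The VDI is not available [opterr=VDI 28aaf3f0-447c-44a2-ad8e-e7c159b41ef8 not detached cleanly]",
    ""
  ]
}
"""

# Hypothetical pattern: "VDI " followed by a lowercase 8-4-4-4-12 hex UUID.
VDI_UUID_RE = re.compile(
    r"VDI ([0-9a-f]{8}-[0-9a-f]{4}-[0-9a-f]{4}-[0-9a-f]{4}-[0-9a-f]{12})"
)


def failing_vdis(error: dict) -> List[str]:
    """Return every VDI UUID mentioned in the error's params, in order."""
    found = []
    for param in error.get("params", []):
        found.extend(VDI_UUID_RE.findall(param))
    return found


if __name__ == "__main__":
    err = json.loads(ERROR_JSON)
    print(err["code"], failing_vdis(err))
```

Running it prints `SR_BACKEND_FAILURE_46 ['28aaf3f0-447c-44a2-ad8e-e7c159b41ef8']`, which matches the VDI queried with `xe vdi-list` further down.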

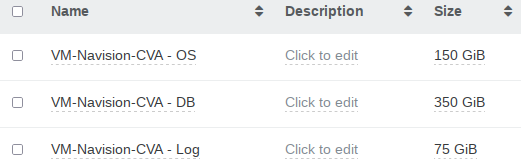

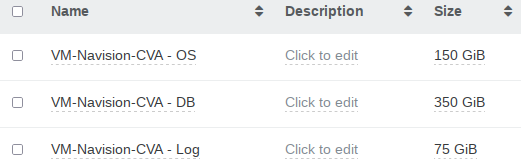

XOA still lists this disk as present, along with the other two disks.

I found this post describing a similar problem, but I don't want to make things worse: https://xcp-ng.org/forum/topic/4614/help-with-vdi-cant-start-a-vm-the-vdi-is-not-available-not-detached-cleanly/5?_=1684488720911

VM details:

[11:34 xcp-ng-hv04 ~]# xe vm-list name-label=VM-Navision
uuid ( RO) : b60762de-c222-2911-e18b-488c56e21646
name-label ( RW): VM-Navision
power-state ( RO): running

[11:35 xcp-ng-hv04 ~]# xe vdi-list uuid=28aaf3f0-447c-44a2-ad8e-e7c159b41ef8
uuid ( RO) : 28aaf3f0-447c-44a2-ad8e-e7c159b41ef8
name-label ( RW): VM-Navision-CVA - OS
name-description ( RW):
sr-uuid ( RO): 4da984e7-8c03-875a-0990-30a29863ee0a
virtual-size ( RO): 161061273600
sharable ( RO): false
read-only ( RO): false
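For reference, `virtual-size` is reported in bytes, so this VDI is provisioned at 150 GiB. That is the size the guest sees, not the space the VDI occupies on the SR. On its own, it therefore doesn't tell you whether this is a small snapshot. As far as I know, `physical-utilisation` (shown by `xe vdi-param-list`) is the field to compare for that, but treat this as an assumption to verify. Here is a quick conversion in Python. The helper names are illustrative:

```python
# Byte counts from `xe vdi-list` converted to binary units (1 GiB = 1024**3 bytes).
VIRTUAL_SIZE_BYTES = 161061273600  # virtual-size of VDI 28aaf3f0-... from the output above


def to_gib(n_bytes: int) -> float:
    """Bytes -> GiB."""
    return n_bytes / 1024**3


def to_mib(n_bytes: int) -> float:
    """Bytes -> MiB, handy for comparing against a 'few hundred MB' figure."""
    return n_bytes / 1024**2


if __name__ == "__main__":
    print(f"virtual-size: {to_gib(VIRTUAL_SIZE_BYTES):.1f} GiB")
```

`to_gib(161061273600)` returns exactly `150.0`.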

I was considering running the following:

xe vdi-forget uuid=28aaf3f0-447c-44a2-ad8e-e7c159b41ef8 force=true
xe sr-scan uuid=4da984e7-8c03-875a-0990-30a29863ee0a
xe vbd-create device=0 vm-uuid=b60762de-c222-2911-e18b-488c56e21646 vdi-uuid=28aaf3f0-447c-44a2-ad8e-e7c159b41ef8
xe sr-scan uuid=4da984e7-8c03-875a-0990-30a29863ee0a
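Before running anything, it may help to wrap the plan above in a script that only prints the commands, so the UUIDs can be reviewed once more. This minimal Python sketch reproduces the four commands exactly as written above, including `force=true`, which I haven't checked against the `xe vdi-forget` help. `recovery_plan` and `run` are hypothetical helpers, and nothing is executed unless `dry_run=False` is passed:

```python
import shlex
import subprocess
from typing import List

# UUIDs taken from the post; double-check them before running anything for real.
VM_UUID = "b60762de-c222-2911-e18b-488c56e21646"
VDI_UUID = "28aaf3f0-447c-44a2-ad8e-e7c159b41ef8"
SR_UUID = "4da984e7-8c03-875a-0990-30a29863ee0a"


def recovery_plan(vm: str, vdi: str, sr: str) -> List[List[str]]:
    """Hypothetical helper: the xe commands from the plan, in order, as argv lists."""
    return [
        ["xe", "vdi-forget", f"uuid={vdi}", "force=true"],
        ["xe", "sr-scan", f"uuid={sr}"],
        ["xe", "vbd-create", "device=0", f"vm-uuid={vm}", f"vdi-uuid={vdi}"],
        ["xe", "sr-scan", f"uuid={sr}"],
    ]


def run(plan: List[List[str]], dry_run: bool = True) -> None:
    """Print each command; only execute it when dry_run is False."""
    for argv in plan:
        print(" ".join(shlex.quote(a) for a in argv))
        if not dry_run:
            # check=True raises CalledProcessError and skips the remaining steps.
            subprocess.run(argv, check=True)


if __name__ == "__main__":
    run(recovery_plan(VM_UUID, VDI_UUID, SR_UUID))  # dry run by default
```

Because of `check=True`, a failing command stops the sequence. For example, a failed `sr-scan` does not lead straight into `vbd-create`.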

We're running the latest versions of XOA and XCP-ng (xo-server 5.111.1, xo-web 5.114.0, XCP-ng 8.2.1).

Edit: I forgot to mention that this disk UUID is probably the snapshot from the last backup, since it's only a few hundred MB in size.

Thanks

I tried running `uuidgen` on my Linux machine and also on an XCP-ng server, in case there was a difference between them. From what I've seen, `uuidgen -r` should be all that's needed to get a compatible UUID.
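`uuidgen -r` requests a random (version 4) UUID. Python's standard `uuid` module can produce one independently of the machine's `uuidgen` and can also check the format of whatever was generated. This sketch only checks the lowercase, hyphenated RFC 4122 version-4 layout. Whether XCP-ng needs anything beyond a well-formed UUID for this use is an assumption not covered here. The helper names are illustrative:

```python
import re
import uuid

# Lowercase 8-4-4-4-12 layout, version nibble 4, RFC 4122 variant nibble 8/9/a/b.
UUID4_RE = re.compile(
    r"[0-9a-f]{8}-[0-9a-f]{4}-4[0-9a-f]{3}-[89ab][0-9a-f]{3}-[0-9a-f]{12}"
)


def new_uuid() -> str:
    """Random (version 4) UUID, the same kind `uuidgen -r` produces."""
    return str(uuid.uuid4())


def is_random_uuid(s: str) -> bool:
    """True if s is a lowercase, hyphenated version-4 RFC 4122 UUID."""
    return UUID4_RE.fullmatch(s) is not None


if __name__ == "__main__":
    u = new_uuid()
    print(u, is_random_uuid(u))
```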
