There are no other jobs.

I've now spun up a completely separate, fresh install of XCP-ng 8.3 to test the symptoms mentioned in the OP.

Steps taken:

- Installed XCP-ng 8.3
- Text console over SSH
  - `xe host-disable`
  - `xe host-evacuate` (not needed yet, of course, since it's a brand-new install)
  - `yum update`
- Reboot
- Text console over SSH again
  - Created local ISO SR
    - `xe sr-create name-label="Local ISO" type=iso device-config:location=/opt/var/iso_repository device-config:legacy_mode=true content-type=iso`
    - `cd /opt/var/iso_repository`
    - `wget # ISO for Ubuntu Server`
    - `xe sr-scan uuid=07dcbf24-761d-1332-9cd3-d7d67de1aa22`
- XO Lite
  - New VM
  - Booted from ISO, installed Server
- Text console to VM over SSH
  - apt update/upgrade
  - Installed xe-guest-utilities
  - Installed XO from source (ronivay script)
- XO
  - Imported ISO for Ubuntu Mate
  - New VM
    - Booted from ISO, installed Mate
    - apt update/upgrade
    - Installed xe-guest-utilities
  - New CR backup job
    - Nightly
    - VMs: 1 (Mate)
    - Retention: 15
    - Full: every 7
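For anyone who wants to reproduce this, the dom0 side of the steps above can be collected into a small shell sketch. It assumes a standalone XCP-ng 8.3 host (single-host pool), keeps my ISO path, and takes the ISO URL as an argument since I haven't listed the one I used. The function names are made up for this post, and the `mkdir -p` is my own addition in case the directory doesn't exist yet.

```shell
# Sketch of the dom0 steps above. Function names are hypothetical;
# assumes a standalone XCP-ng 8.3 host (single-host pool).
ISO_DIR=/opt/var/iso_repository

# Maintenance mode, evacuate (nothing to move on a fresh host), then update.
update_host() {
    xe host-disable
    xe host-evacuate
    yum update -y    # -y answers the confirmation prompt
}

# Create the local ISO SR with the same parameters as in the list.
# xe sr-create prints the new SR's UUID on stdout.
create_iso_sr() {
    mkdir -p "$ISO_DIR"    # assumption: harmless if it already exists
    xe sr-create name-label="Local ISO" type=iso \
        device-config:location="$ISO_DIR" \
        device-config:legacy_mode=true \
        content-type=iso
}

# Download an ISO into the SR directory, then rescan so it shows up in the SR.
# $1 = SR UUID, $2 = ISO URL (not given in this post).
add_iso() {
    sr_uuid=$1
    iso_url=$2
    (cd "$ISO_DIR" && wget "$iso_url") || return 1
    xe sr-scan uuid="$sr_uuid"
}
```

Usage would be `update_host`, a reboot, then `sr=$(create_iso_sr)` and `add_iso "$sr" "$ISO_URL"`. The SR UUID on a fresh install will differ from the one in my list, which is why it's captured rather than hard-coded.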

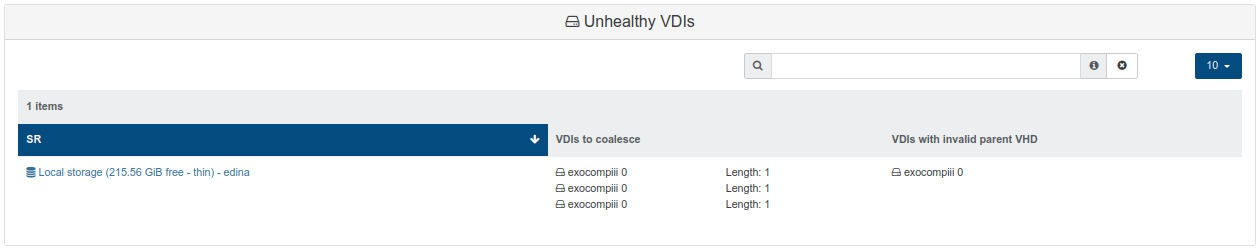

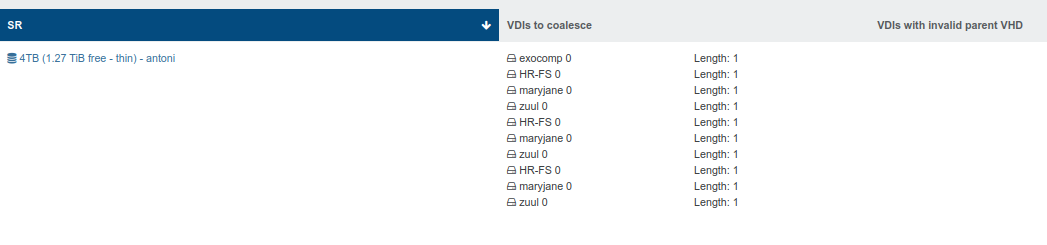

Exact same behaviour. After the first (full) CR job run, each additional (incremental) run results in one more 'unhealthy VDI'.

I've engaged in no other shenanigans. Plain vanilla XCP-ng and XO. There are only two VMs on this host: the XO-from-source VM and a desktop OS VM, which is the only target of the CR job. There are zero exceptions in SMlog.
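For reference, the SMlog check amounts to roughly the following. It's a sketch that assumes the default dom0 log path `/var/log/SMlog` and only looks at the current file, not rotated copies; the function name is hypothetical.

```shell
# Hypothetical helper: count SMlog lines mentioning "exception" (case-insensitive).
# Defaults to dom0's /var/log/SMlog; rotated files (SMlog.1, ...) are not checked.
# Note: grep -c still prints 0 when nothing matches, but exits with status 1.
count_smlog_exceptions() {
    grep -ci 'exception' "${1:-/var/log/SMlog}"
}
```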

What do you need to see?