xe pool-enable-tls-verification was exactly what I needed, thanks! It worked after that.

Posts

-

RE: Attempting to add new host fail on xoa and on server, worked on xcp-ng center

-

RE: Attempting to add new host fail on xoa and on server, worked on xcp-ng center

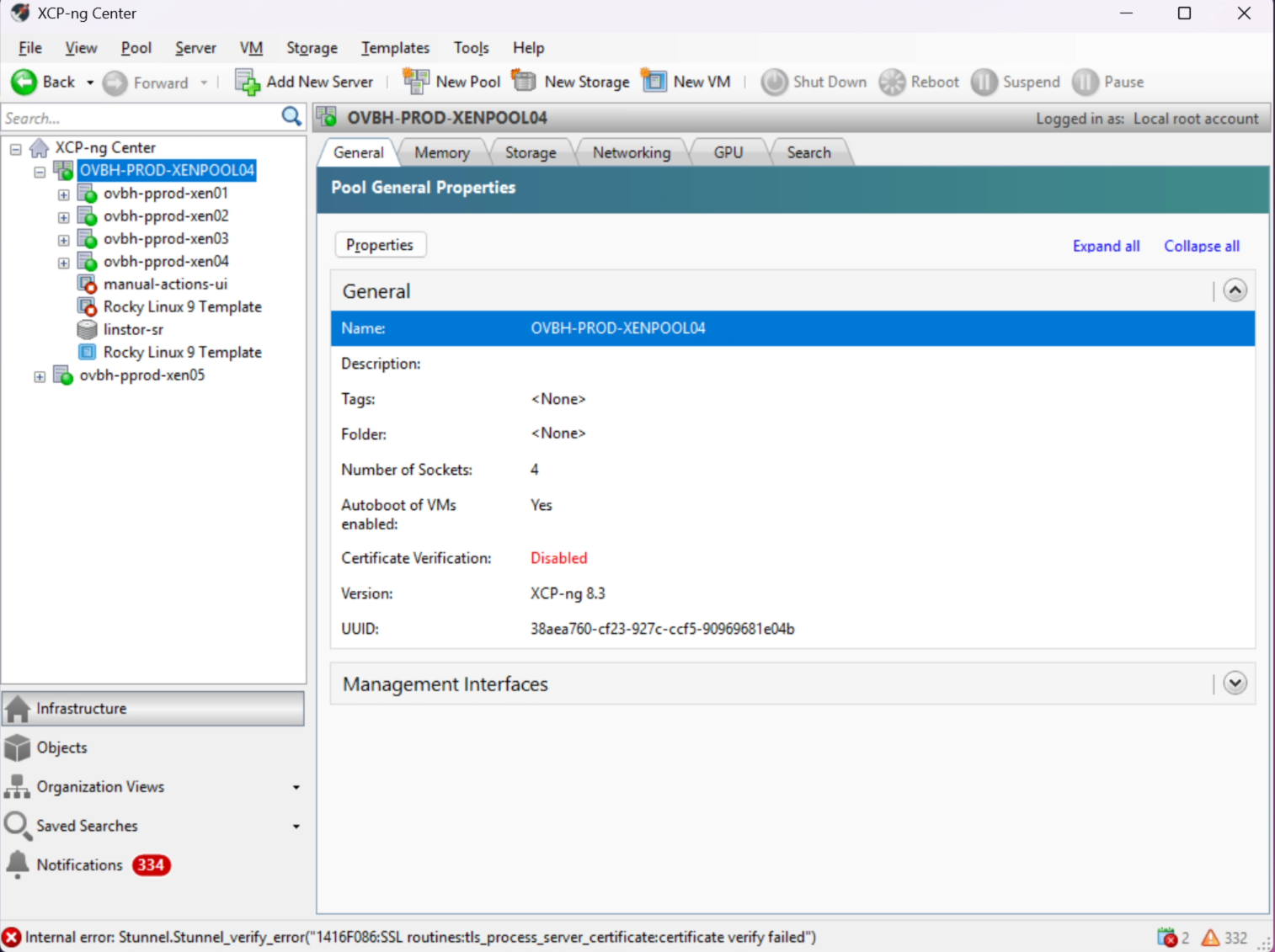

@psafont Sorry, I was swamped with other things. As listed above, I get the same error, forced or not, from XCP-ng Center, the XCP-ng host, or XOA.

TLS verification has always been off, and in the past we have not had any issue with adding a new host to the pool. I have taken no other actions since my last posting.

-

RE: Attempting to add new host fail on xoa and on server, worked on xcp-ng center

I see, it also says

name ( RO): sdn-controller-ca.pem

host ( RO): <not in database>

Like in the issue, but the file exists.

[11:28 ovbh-pprod-xen05 ~]# xe certificate-list
uuid ( RO) : afdd9c8e-dcae-17c7-c35c-0fd7cebd387a
type ( RO): host
name ( RO):
host ( RO): f0cec10f-ad05-48e4-893c-414b3a3e15be
not-before ( RO): 20251110T23:15:51Z
not-after ( RO): 20351108T23:15:51Z
fingerprint ( RO): BF:83:23:BB:7B:E9:30:DE:86:EA:9D:AF:DF:F8:BA:34:39:D0:81:AD:34:E5:C6:AB:0C:49:41:7B:4A:3C:8B:9E

uuid ( RO) : b8dcd1f0-ef65-e762-f189-46bb78766c6b
type ( RO): ca
name ( RO): sdn-controller-ca.pem
host ( RO): <not in database>
not-before ( RO): 20200416T00:17:31Z
not-after ( RO): 20470901T00:17:31Z
fingerprint ( RO): 63:1F:89:3F:0E:1F:86:52:34:95:3C:6C:3F:9C:C8:B3:5A:61:6B:4D:EE:8F:A7:11:F0:BA:79:8B:C7:15:A0:E0

uuid ( RO) : e7daedf2-7f35-ba40-093a-e0c011d91633
type ( RO): host_internal
name ( RO):
host ( RO): f0cec10f-ad05-48e4-893c-414b3a3e15be
not-before ( RO): 20251110T23:15:46Z
not-after ( RO): 20351108T23:15:46Z
fingerprint ( RO): 71:41:B0:25:88:AA:E4:56:EE:F7:A9:8E:0A:A9:FE:C5:6A:0D:D5:37:30:BF:C8:81:C2:D7:B8:20:7A:6C:7F:B7

[13:50 ovbh-pprod-xen05 ~]# ll /etc/stunnel/certs/sdn-controller-ca.pem
-rw-r--r-- 1 root root 1907 Nov 12 09:45 /etc/stunnel/certs/sdn-controller-ca.pem

Removing it did not help, same error:

[13:54 ovbh-pprod-xen05 ~]# xe certificate-list
uuid ( RO) : afdd9c8e-dcae-17c7-c35c-0fd7cebd387a
type ( RO): host
name ( RO):
host ( RO): f0cec10f-ad05-48e4-893c-414b3a3e15be
not-before ( RO): 20251110T23:15:51Z
not-after ( RO): 20351108T23:15:51Z
fingerprint ( RO): BF:83:23:BB:7B:E9:30:DE:86:EA:9D:AF:DF:F8:BA:34:39:D0:81:AD:34:E5:C6:AB:0C:49:41:7B:4A:3C:8B:9E

uuid ( RO) : e7daedf2-7f35-ba40-093a-e0c011d91633
type ( RO): host_internal
name ( RO):
host ( RO): f0cec10f-ad05-48e4-893c-414b3a3e15be
not-before ( RO): 20251110T23:15:46Z
not-after ( RO): 20351108T23:15:46Z
fingerprint ( RO): 71:41:B0:25:88:AA:E4:56:EE:F7:A9:8E:0A:A9:FE:C5:6A:0D:D5:37:30:BF:C8:81:C2:D7:B8:20:7A:6C:7F:B7

I also confirmed that all the certs for the hosts are current and not expired.
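For anyone who wants to script the "current and not expired" check instead of eyeballing the dates, here is a rough Python sketch. It assumes the `xe certificate-list` output has been saved as text with one field per line (as xe prints it), and the helper names `parse_xe_records` and `outside_validity` are made up for this example, not part of any XCP-ng tooling.

```python
import re
from datetime import datetime, timezone

# Matches lines such as "not-after ( RO): 20351108T23:15:51Z" or "uuid ( RO) : afdd..."
FIELD = re.compile(r"^([\w-]+)\s*\(\s*[A-Z]+\s*\)\s*:\s*(.*)$")


def parse_xe_records(text):
    """Split xe list-style output into one dict per record (hypothetical helper)."""
    records, current = [], {}
    for raw in text.splitlines():
        match = FIELD.match(raw.strip())
        if not match:
            continue  # blank lines, shell prompts, anything that is not a field
        key, value = match.group(1), match.group(2).strip()
        if key == "uuid" and current:
            # A new uuid line starts the next record.
            records.append(current)
            current = {}
        current[key] = value
    if current:
        records.append(current)
    return records


def _parse_time(stamp):
    # Timestamps in the listing look like 20251110T23:15:51Z (UTC).
    return datetime.strptime(stamp, "%Y%m%dT%H:%M:%SZ").replace(tzinfo=timezone.utc)


def outside_validity(records, now=None):
    """Return the uuids of certificates that are not yet valid or already expired."""
    now = now or datetime.now(timezone.utc)
    bad = []
    for rec in records:
        if not (_parse_time(rec["not-before"]) <= now <= _parse_time(rec["not-after"])):
            bad.append(rec["uuid"])
    return bad
```

Feeding it the listing above with the current date should return an empty list, which matches what I saw by hand. It only checks the validity window; it says nothing about whether the pool actually trusts the certificate.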

-

RE: Attempting to add new host fail on xoa and on server, worked on xcp-ng center

After installing packages: https://docs.xcp-ng.org/xostor/#how-to-add-a-new-host-or-fix-a-badly-configured-host

Now I am getting the following on the official XOA:

pool.mergeInto
{
  "sources": [
    "e4cf2039-3547-6574-0e10-96f9d91316f0"
  ],
  "target": "38aea760-cf23-927c-ccf5-90969681e04b",
  "force": true
}
{
  "code": "INTERNAL_ERROR",
  "params": [
    "Stunnel.Stunnel_verify_error(\"1416F086:SSL routines:tls_process_server_certificate:certificate verify failed\")"
  ],
  "call": {
    "duration": 3104,
    "method": "pool.join_force",
    "params": [
      "* session id *",
      "10.2.0.10",
      "root",
      "* obfuscated *"
    ]
  },
  "message": "INTERNAL_ERROR(Stunnel.Stunnel_verify_error(\"1416F086:SSL routines:tls_process_server_certificate:certificate verify failed\"))",
  "name": "XapiError",
  "stack": "XapiError: INTERNAL_ERROR(Stunnel.Stunnel_verify_error(\"1416F086:SSL routines:tls_process_server_certificate:certificate verify failed\")) at Function.wrap (file:///usr/local/lib/node_modules/xo-server/node_modules/xen-api/_XapiError.mjs:16:12) at file:///usr/local/lib/node_modules/xo-server/node_modules/xen-api/transports/json-rpc.mjs:38:21 at runNextTicks (node:internal/process/task_queues:60:5) at processImmediate (node:internal/timers:454:9) at process.callbackTrampoline (node:internal/async_hooks:130:17)"
}

And still getting this on source install:

pool.mergeInto
{
  "sources": [
    "e4cf2039-3547-6574-0e10-96f9d91316f0"
  ],
  "target": "38aea760-cf23-927c-ccf5-90969681e04b",
  "force": true
}
{
  "message": "app.getLicenses is not a function",
  "name": "TypeError",
  "stack": "TypeError: app.getLicenses is not a function at enforceHostsHaveLicense (file:///opt/xen-orchestra/packages/xo-server/src/xo-mixins/pool.mjs:15:30) at Pools.apply (file:///opt/xen-orchestra/packages/xo-server/src/xo-mixins/pool.mjs:80:13) at Pools.mergeInto (/opt/xen-orchestra/node_modules/golike-defer/src/index.js:85:19) at Xo.mergeInto (file:///opt/xen-orchestra/packages/xo-server/src/api/pool.mjs:314:15) at Task.runInside (/opt/xen-orchestra/@vates/task/index.js:175:22) at Task.run (/opt/xen-orchestra/@vates/task/index.js:159:20) at Api.#callApiMethod (file:///opt/xen-orchestra/packages/xo-server/src/xo-mixins/api.mjs:469:18)"
}

-

RE: Attempting to add new host fail on xoa and on server, worked on xcp-ng center

Just tried after doing a force clean install, still getting same error. Going to look into it more if there is not any

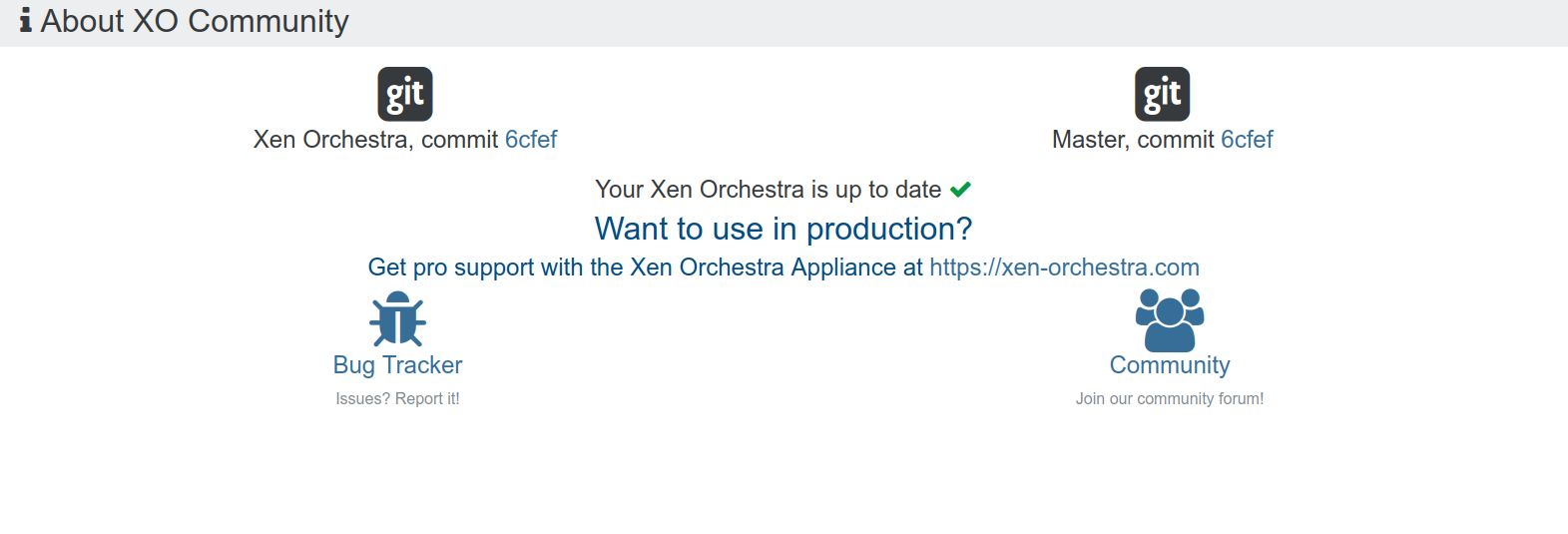

root@xoa:/home/fpcuser# sudo curl https://raw.githubusercontent.com/Jarli01/xenorchestra_updater/master/xo-update.sh | bash -s -- -f | tee xenrebuild.log
% Total % Received % Xferd Average Speed Time Time Time Current
Dload Upload Total Spent Left Speed
100 6896 100 6896 0 0 39116 0 --:--:-- --:--:-- --:--:-- 39181
installed : v24.11.1 (with npm 11.6.2)
Stopping xo-server...
Checking for Yarn package...
Checking for Yarn update...
E: Malformed entry 1 in list file /etc/apt/sources.list.d/yarn.list (URI parse)
E: The list of sources could not be read.
E: Malformed entry 1 in list file /etc/apt/sources.list.d/yarn.list (URI parse)
E: The list of sources could not be read.
Checking for missing dependencies...
Checking for Repo change...
Checking xen-orchestra...
Current branch master
Current version 5.192.1 / 5.189.0
Current commit 6cfefc91e47db7fb264705bc2def1f1b70bc537b 2025-11-12 18:01:41 +0100
0 updates available
Updating from source...
No local changes to save
No stash entries found.
Already up to date.
Clearing directories...
Installing...
yarn install v1.22.22
(node:1226553) [DEP0169] DeprecationWarning: `url.parse()` behavior is not standardized and prone to errors that have security implications. Use the WHATWG URL API instead. CVEs are not issued for `url.parse()` vulnerabilities.
(Use `node --trace-deprecation ...` to show where the warning was created)
[1/5] Validating package.json...
[2/5] Resolving packages...
success Already up-to-date.
$ husky install
husky - Git hooks installed
Done in 1.57s.
yarn run v1.22.22
$ TURBO_TELEMETRY_DISABLED=1 turbo run build --filter xo-server --filter xo-server-'*' --filter xo-web
turbo 2.5.8
• Packages in scope: xo-server, xo-server-audit, xo-server-auth-github, xo-server-auth-google, xo-server-auth-ldap, xo-server-auth-oidc, xo-server-auth-saml, xo-server-backup-reports, xo-server-load-balancer, xo-server-netbox, xo-server-perf-alert, xo-server-sdn-controller, xo-server-test-plugin, xo-server-transport-email, xo-server-transport-icinga2, xo-server-transport-nagios, xo-server-transport-slack, xo-server-transport-xmpp, xo-server-usage-report, xo-server-web-hooks, xo-web
• Running build in 21 packages
• Remote caching disabled
Tasks: 30 successful, 30 total
Cached: 30 cached, 30 total
Time: 1.347s >>> FULL TURBO
Done in 1.55s.
Updated version 5.192.1 / 5.189.0
Updated commit 6cfefc91e47db7fb264705bc2def1f1b70bc537b 2025-11-12 18:01:41 +0100
Checking plugins...
Ignoring xo-server-test plugin
Cleanup plugins...
Restarting xo-server...

So then I updated our separate VM for XOA that we have used in the past for requests like this, and I am getting this behavior:

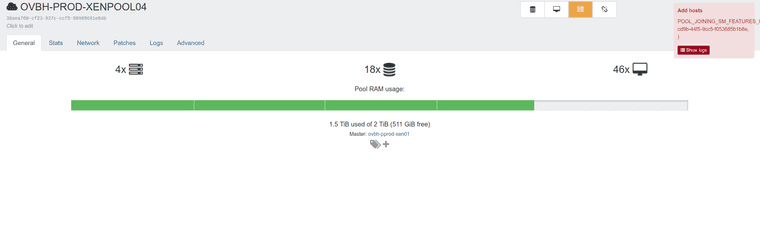

pool.mergeInto
{
  "sources": [
    "e4cf2039-3547-6574-0e10-96f9d91316f0"
  ],
  "target": "38aea760-cf23-927c-ccf5-90969681e04b",
  "force": true
}
{
  "code": "POOL_JOINING_SM_FEATURES_INCOMPATIBLE",
  "params": [
    "OpaqueRef:151858ec-cd9b-44f5-9cc5-f053685b1b8e",
    ""
  ],
  "call": {
    "duration": 2049,
    "method": "pool.join_force",
    "params": [
      "* session id *",
      "10.2.0.10",
      "root",
      "* obfuscated *"
    ]
  },
  "message": "POOL_JOINING_SM_FEATURES_INCOMPATIBLE(OpaqueRef:151858ec-cd9b-44f5-9cc5-f053685b1b8e, )",
  "name": "XapiError",
  "stack": "XapiError: POOL_JOINING_SM_FEATURES_INCOMPATIBLE(OpaqueRef:151858ec-cd9b-44f5-9cc5-f053685b1b8e, ) at Function.wrap (file:///usr/local/lib/node_modules/xo-server/node_modules/xen-api/_XapiError.mjs:16:12) at file:///usr/local/lib/node_modules/xo-server/node_modules/xen-api/transports/json-rpc.mjs:38:21 at runNextTicks (node:internal/process/task_queues:60:5) at processImmediate (node:internal/timers:454:9) at process.callbackTrampoline (node:internal/async_hooks:130:17)"
}
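Since the error objects in this thread come in two shapes, a small Python sketch may help when sorting through them. My reading of the stack traces (an assumption, not something confirmed by the XO developers here) is that objects with a "code" field are XAPI errors relayed from the host, while the plain TypeError is raised inside xo-server itself. The function name `summarize_xo_error` and the returned keys are invented for this example.

```python
import json


def summarize_xo_error(payload):
    """Reduce an error object copied from the XO log to its key fields.

    `payload` may be a JSON string or an already-parsed dict.
    """
    err = json.loads(payload) if isinstance(payload, str) else payload
    code = err.get("code")
    if code is None:
        # No XAPI error code: assume the exception came from xo-server code.
        return {
            "origin": "xo-server",
            "code": err.get("name"),
            "method": None,
            "detail": err.get("message"),
        }
    # XAPI-style error: keep the code, the XAPI method, and any non-empty params.
    return {
        "origin": "xapi",
        "code": code,
        "method": err.get("call", {}).get("method"),
        "detail": ", ".join(p for p in err.get("params", []) if p),
    }
```

Run against the three payloads posted so far, this would separate the Stunnel verify failure and the SM features error (both from pool.join_force) from the app.getLicenses TypeError, which never reaches the host at all.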

-

RE: Attempting to add new host fail on xoa and on server, worked on xcp-ng center

Looks like it was installed with

curl https://raw.githubusercontent.com/Jarli01/xenorchestra_installer/master/xo_install.sh

This was a few years ago at least. And it gets updated with

sudo curl https://raw.githubusercontent.com/Jarli01/xenorchestra_updater/master/xo-update.sh | bash | tee xenupgrade##.log

root@xoa:/home/fpcuser# cat xenupgrade23.log
installed : v22.20.0 (with npm 10.9.3)
Stopping xo-server...
Checking for Yarn package...
Checking for Yarn update...
Checking for missing dependencies...
Checking for Repo change...
Checking xen-orchestra...
Current branch master
Current version 5.189.0 / 5.186.0
Current commit a0046fa19fa5f17344061e5c7709c1e02140dca4 2025-09-25 16:15:05 +0200
95 updates available
Updating from source...
No local changes to save
Updating a0046fa19..d829816f6
Fast-forward
@vates/async-each/index.js | 28 +- @vates/async-each/index.test.js | 14 + @vates/async-each/package.json | 2 +- @vates/fuse-vhd/package.json | 2 +- @vates/generator-toolbox/src/throttle.mts | 6 +- @vates/generator-toolbox/tsconfig.json | 1 + @vates/nbd-client/package.json | 2 +- @vates/types/package.json | 2 +- @vates/types/src/lib/xen-orchestra-xapi.mts | 5 + @vates/types/src/xen-api.mts | 28 +- @vates/types/src/xo.mts | 15 +- @vates/types/tsconfig.json | 3 +- @xen-orchestra/backups-cli/package.json | 4 +- @xen-orchestra/backups/_runners/VmsRemote.mjs | 7 +- @xen-orchestra/backups/_runners/VmsXapi.mjs | 5 +- .../backups/_runners/_vmRunners/FullRemote.mjs | 2 +- .../backups/_runners/_vmRunners/FullXapi.mjs | 6 +- .../_runners/_vmRunners/IncrementalRemote.mjs | 5 +- .../_runners/_vmRunners/IncrementalXapi.mjs | 7 +- .../_runners/_vmRunners/_AbstractRemote.mjs | 2 + .../backups/_runners/_vmRunners/_AbstractXapi.mjs | 2 + .../_runners/_writers/IncrementalXapiWriter.mjs | 11 +- @xen-orchestra/backups/package.json | 4 +- @xen-orchestra/disk-transform/src/Throttled.mts | 26 + @xen-orchestra/disk-transform/src/Timeout.mts | 16 + @xen-orchestra/disk-transform/src/index.mts | 2 + @xen-orchestra/disk-transform/tsconfig.json | 1 + @xen-orchestra/fs/package.json | 4 +- @xen-orchestra/fs/src/abstract.js | 53 +- .../immutable-backups/liftProtection.mjs | 4 +-
@xen-orchestra/immutable-backups/package.json | 2 +- @xen-orchestra/lite/CHANGELOG.md | 3 + @xen-orchestra/lite/docs/modals.md | 111 - @xen-orchestra/lite/package.json | 6 +- @xen-orchestra/lite/src/App.vue | 6 +- .../lite/src/components/CollectionFilter.vue | 33 +- .../lite/src/components/CollectionFilterRow.vue | 53 +- .../lite/src/components/CollectionSorter.vue | 20 +- .../components/component-story/StoryPropParams.vue | 10 +- .../lite/src/components/form/FormJson.vue | 18 +- .../src/components/modals/CodeHighlightModal.vue | 11 +- .../components/modals/CollectionFilterModal.vue | 90 +- .../components/modals/CollectionSorterModal.vue | 51 +- .../src/components/modals/InvalidFieldModal.vue | 33 +- .../lite/src/components/modals/JsonEditorModal.vue | 62 +- .../components/modals/UnreachableHostsModal.vue | 58 +- .../lite/src/components/modals/VmDeleteModal.vue | 70 +- .../components/modals/VmExportBlockedUrlsModal.vue | 38 +- .../lite/src/components/modals/VmExportModal.vue | 59 +- .../lite/src/components/modals/VmMigrateModal.vue | 64 +- .../components/ui/modals/ModalApproveButton.vue | 13 - .../src/components/ui/modals/ModalCloseIcon.vue | 22 - .../src/components/ui/modals/ModalContainer.vue | 74 - .../components/ui/modals/ModalDeclineButton.vue | 16 - .../lite/src/components/ui/modals/ModalList.vue | 10 - .../src/components/ui/modals/ModalListItem.vue | 15 - .../lite/src/components/ui/modals/UiModal.vue | 55 - .../ui/modals/layouts/BasicModalLayout.vue | 26 - .../ui/modals/layouts/ConfirmModalLayout.vue | 76 - .../ui/modals/layouts/FormModalLayout.vue | 63 - .../vm/VmActionItems/VmActionDeleteItem.vue | 18 +- .../vm/VmActionItems/VmActionExportItem.vue | 23 +- .../vm/VmActionItems/VmActionMigrateItem.vue | 34 +- .../lite/src/composables/context.composable.ts | 5 +- .../lite/src/composables/modal.composable.ts | 4 - .../composables/unreachable-hosts.composable.ts | 23 +- @xen-orchestra/lite/src/jobs/vm-export.job.ts | 5 +- 
.../src/libs/xen-api/operations/vm-operations.ts | 14 +- @xen-orchestra/lite/src/pages/settings.vue | 305 +- @xen-orchestra/lite/src/pages/xoa-deploy.vue | 18 +- @xen-orchestra/lite/src/stores/modal.store.ts | 69 - .../modals/layouts/basic-modal-layout.story.md | 7 - .../modals/layouts/basic-modal-layout.story.vue | 17 - .../modals/layouts/confirm-modal-layout.story.md | 18 - .../modals/layouts/confirm-modal-layout.story.vue | 30 - .../modals/layouts/form-modal-layout.story.md | 30 - .../modals/layouts/form-modal-layout.story.vue | 50 - .../src/stories/modals/modal-container.story.md | 17 - .../src/stories/modals/modal-container.story.vue | 37 - @xen-orchestra/lite/src/types/index.ts | 9 - @xen-orchestra/lite/src/types/injection-keys.ts | 7 - @xen-orchestra/lite/typed-router.d.ts | 4 - @xen-orchestra/proxy/package.json | 4 +- @xen-orchestra/qcow2/tsconfig.json | 1 + @xen-orchestra/rest-api/package.json | 6 +- .../src/abstract-classes/base-controller.mts | 31 +- .../src/abstract-classes/xapi-xo-controller.mts | 17 +- .../rest-api/src/groups/group.controller.mts | 27 +- .../rest-api/src/helpers/helper.type.mts | 11 + .../rest-api/src/hosts/host.controller.mts | 88 +- @xen-orchestra/rest-api/src/index.mts | 7 +- @xen-orchestra/rest-api/src/ioc/ioc.mts | 6 - .../rest-api/src/messages/message.controller.mts | 2 +- .../src/middlewares/authentication.middleware.mts | 83 +- .../rest-api/src/networks/network.controller.mts | 78 +- .../src/open-api/oa-examples/pbd.oa-example.mts | 43 + .../src/open-api/oa-examples/user.oa-example.mts | 12 + .../rest-api/src/pbds/pbd.controller.mts | 50 + .../rest-api/src/pifs/pif.controller.mts | 52 +- .../rest-api/src/pools/pool.controller.mts | 78 +- .../rest-api/src/rest-api/rest-api.type.mts | 13 +- .../rest-api/src/servers/server.controller.mts | 28 +- @xen-orchestra/rest-api/src/srs/sr.controller.mts | 100 +- @xen-orchestra/rest-api/src/tasks/task.service.mts | 32 - .../rest-api/src/users/user.controller.mts | 139 +- 
.../rest-api/src/users/user.middleware.mts | 23 + .../rest-api/src/vbds/vbd.controller.mts | 51 +- .../src/vdi-snapshots/vdi-snapshot.controller.mts | 79 +- .../rest-api/src/vdis/vdi.controller.mts | 112 +- .../rest-api/src/vifs/vif.controller.mts | 52 +- .../src/vm-controller/vm-controller.controller.mts | 80 +- .../src/vm-snapshots/vm-snapshot.controller.mts | 61 +- .../src/vm-templates/vm-template.controller.mts | 78 +- @xen-orchestra/rest-api/src/vms/vm.controller.mts | 45 +- @xen-orchestra/rest-api/tsconfig.json | 1 + @xen-orchestra/rest-api/tsoa.json | 19 + @xen-orchestra/vmware-explorer/esxi.mjs | 2 +- @xen-orchestra/vmware-explorer/package.json | 2 +- @xen-orchestra/web-core/docs/index.md | 2 + @xen-orchestra/web-core/docs/modals.md | 115 + .../web-core/lib/assets/css/_colors.pcss | 8 + .../lib/components/backdrop/VtsBackdrop.vue | 10 +- .../lib/components/backup-state/VtsBackupState.vue | 3 +- .../lib/components/button-group/VtsButtonGroup.vue | 6 +- .../web-core/lib/components/menu/MenuList.vue | 1 + .../web-core/lib/components/modal/VtsModal.vue | 82 + .../lib/components/modal/VtsModalButton.vue | 36 + .../lib/components/modal/VtsModalCancelButton.vue | 37 + .../lib/components/modal/VtsModalConfirmButton.vue | 21 + .../web-core/lib/components/modal/VtsModalList.vue | 34 + .../lib/components/task/VtsQuickTaskButton.vue | 5 +- .../lib/components/task/VtsQuickTaskList.vue | 22 +- .../web-core/lib/components/tree/VtsTreeItem.vue | 4 +- .../web-core/lib/components/ui/button/UiButton.vue | 80 +- .../ui/collapsible-list/UiCollapsibleList.vue | 4 + .../web-core/lib/components/ui/modal/UiModal.vue | 164 + .../web-core/lib/components/ui/panel/UiPanel.vue | 2 +- .../ui/quick-task-item/UiQuickTaskItem.vue | 4 +- .../ui/table-pagination/UiTablePagination.vue | 4 +- .../web-core/lib/composables/context.composable.ts | 8 +- .../lib/composables/link-component.composable.ts | 5 +- .../lib/composables/pagination.composable.ts | 5 +- 
@xen-orchestra/web-core/lib/locales/cs.json | 82 +- @xen-orchestra/web-core/lib/locales/en.json | 36 +- @xen-orchestra/web-core/lib/locales/es.json | 82 +- @xen-orchestra/web-core/lib/locales/fr.json | 40 +- @xen-orchestra/web-core/lib/locales/it.json | 11 +- @xen-orchestra/web-core/lib/locales/nl.json | 48 +- @xen-orchestra/web-core/lib/locales/pt_BR.json | 34 +- @xen-orchestra/web-core/lib/locales/ru.json | 84 +- @xen-orchestra/web-core/lib/locales/uk.json | 340 +- .../lib/packages/collection/use-collection.ts | 5 +- .../form-select/use-form-option-controller.ts | 5 +- .../lib/packages/form-select/use-form-select.ts | 15 +- .../web-core/lib/packages/menu/action.ts | 7 +- @xen-orchestra/web-core/lib/packages/menu/link.ts | 9 +- .../web-core/lib/packages/menu/router-link.ts | 5 +- .../web-core/lib/packages/menu/toggle-target.ts | 5 +- .../web-core/lib/packages/modal/ModalProvider.vue | 17 + .../web-core/lib/packages/modal/README.md | 253 ++ .../lib/packages/modal/create-modal-opener.ts | 103 + .../web-core/lib/packages/modal/modal.store.ts | 22 + .../web-core/lib/packages/modal/types.ts | 92 + .../web-core/lib/packages/modal/use-modal.ts | 53 + .../web-core/lib/packages/progress/use-progress.ts | 7 +- .../web-core/lib/packages/table/README.md | 336 ++ .../lib/packages/table/apply-extensions.ts | 26 + .../web-core/lib/packages/table/define-columns.ts | 62 + .../define-renderer/define-table-cell-renderer.ts | 27 + .../table/define-renderer/define-table-renderer.ts | 47 + .../define-renderer/define-table-row-renderer.ts | 29 + .../define-table-section-renderer.ts | 29 + .../define-table/define-multi-source-table.ts | 39 + .../packages/table/define-table/define-table.ts | 13 + .../table/define-table/define-typed-table.ts | 18 + .../web-core/lib/packages/table/index.ts | 11 + .../lib/packages/table/transform-sources.ts | 13 + .../lib/packages/table/types/extensions.ts | 16 + .../web-core/lib/packages/table/types/index.ts | 47 + .../lib/packages/table/types/table-cell.ts 
| 18 + .../web-core/lib/packages/table/types/table-row.ts | 20 + .../lib/packages/table/types/table-section.ts | 19 + .../web-core/lib/packages/table/types/table.ts | 28 + .../lib/packages/threshold/use-threshold.ts | 7 +- .../web-core/lib/types/value-matcher.d.ts | 3 + .../web-core/lib/types/vue-virtual-scroller.d.ts | 101 + .../web-core/lib/utils/injection-keys.util.ts | 3 + @xen-orchestra/web-core/lib/utils/progress.util.ts | 3 +- @xen-orchestra/web-core/lib/utils/speed.util.ts | 12 + @xen-orchestra/web-core/lib/utils/time.util.ts | 57 + .../web-core/lib/utils/to-computed.util.ts | 15 + @xen-orchestra/web-core/package.json | 5 +- @xen-orchestra/web/env.d.ts | 1 + @xen-orchestra/web/package.json | 12 +- @xen-orchestra/web/src/App.vue | 2 + .../{pool/dashboard => }/alarms/AlarmLink.vue | 0 .../web/src/components/alarms/DashboardAlarms.vue | 77 + .../components/backups/jobs/BackupJobsTable.vue | 16 +- .../backups/jobs/panel/BackupJobsSidePanel.vue | 112 + .../panel/cards-items/BackupJobsSmartModePools.vue | 23 + .../panel/cards-items/BackupJobsSmartModeTags.vue | 23 + .../panel/cards-items/BackupJobsTargetsBrItem.vue | 19 + .../panel/cards-items/BackupJobsTargetsSection.vue | 41 + .../panel/cards-items/BackupJobsTargetsSrItem.vue | 16 + .../jobs/panel/cards-items/BackupRunItem.vue | 85 + .../jobs/panel/cards/BackupJobInfosCard.vue | 69 + .../backups/jobs/panel/cards/BackupJobLogsCard.vue | 41 + .../jobs/panel/cards/BackupJobSchedulesCard.vue | 92 + .../jobs/panel/cards/BackupJobSettingsCard.vue | 225 ++ .../panel/cards/BackupJobsBackedUpPoolsCard.vue | 39 + .../jobs/panel/cards/BackupJobsBackedUpVmsCard.vue | 173 + .../jobs/panel/cards/BackupJobsTargetsCard.vue | 48 + .../panel/cards/BackupSourceRepositoryCard.vue | 42 + .../host/dashboard/HostDashboardPatches.vue | 111 + .../pool/dashboard/PoolDashboardStatus.vue | 8 +- .../pool/dashboard/alarms/PoolDashboardAlarms.vue | 85 - .../components/site/backups/BackupJobsTable.vue | 238 -- 
.../src/components/site/dashboard/AlarmLink.vue | 83 - .../web/src/components/site/dashboard/Alarms.vue | 80 - .../web/src/components/tree/SiteTreeList.vue | 24 +- @xen-orchestra/web/src/main.ts | 1 + @xen-orchestra/web/src/pages/(site)/backups.vue | 32 +- @xen-orchestra/web/src/pages/(site)/dashboard.vue | 7 +- .../web/src/pages/host/[id]/dashboard.vue | 24 + .../web/src/pages/pool/[id]/dashboard.vue | 21 +- @xen-orchestra/web/src/pages/vm/[id]/backups.vue | 29 +- @xen-orchestra/web/src/pages/vm/[id]/dashboard.vue | 17 + @xen-orchestra/web/src/pages/vm/new.vue | 6 +- .../remote-resources/use-xo-alarm-collection.ts | 3 +- .../use-xo-backup-job-collection.ts | 57 +- .../use-xo-backup-log-collection.ts | 2 +- .../src/remote-resources/use-xo-br-collection.ts | 13 + .../use-xo-host-alarms-collection.ts | 18 + .../use-xo-host-missing-patches-collection.ts | 11 + .../use-xo-metadata-backup-job-collection.ts | 13 - .../use-xo-mirror-backup-job-collection.ts | 13 - .../remote-resources/use-xo-proxy-collection.ts | 13 + .../remote-resources/use-xo-schedule-collection.ts | 2 +- .../src/remote-resources/use-xo-sr-collection.ts | 2 +- .../use-xo-vm-alarms-collection.ts | 18 + .../use-xo-vm-backup-job-collection.ts | 19 - .../src/remote-resources/use-xo-vm-collection.ts | 2 +- @xen-orchestra/web/src/types/xo/backup-log.type.ts | 2 + @xen-orchestra/web/src/types/xo/br.type.ts | 11 + @xen-orchestra/web/src/types/xo/index.ts | 4 + .../web/src/types/xo/metadata-backup-job.type.ts | 33 +- .../web/src/types/xo/mirror-backup-job.type.ts | 33 +- @xen-orchestra/web/src/types/xo/new-vm.type.ts | 2 +- @xen-orchestra/web/src/types/xo/proxy.type.ts | 13 + .../web/src/types/xo/vm-backup-job.type.ts | 37 +- @xen-orchestra/web/src/utils/pattern.util.ts | 42 + @xen-orchestra/web/vite.config.ts | 8 + @xen-orchestra/xapi/disks/Xapi.mjs | 23 +- @xen-orchestra/xapi/package.json | 2 +- CHANGELOG.md | 83 +- CHANGELOG.unreleased.md | 85 + docs/docs/manage_infrastructure.md | 78 +- 
docs/docs/object-storage-support.md | 37 + docs/docs/users.md | 11 +- docs/docs/xoa.md | 2 +- docs/sidebars.ts | 1 + packages/vhd-cli/package.json | 2 +- .../disk-consumer/DiskConsumerVhdDirectory.mjs | 4 +- packages/vhd-lib/package.json | 6 +- packages/xo-server-auth-saml/package.json | 4 +- packages/xo-server-auth-saml/src/index.js | 20 +- packages/xo-server-sdn-controller/package.json | 4 +- packages/xo-server-sdn-controller/src/index.js | 165 +- .../src/openflow-controller.js | 16 + .../src/openflow-plugin.js | 115 + .../src/protocol/openflow-channel.js | 4 +- packages/xo-server/config.toml | 3 + packages/xo-server/package.json | 12 +- packages/xo-server/src/index.mjs | 2 +- packages/xo-server/src/xo-mixins/rest-api.mjs | 910 +---- packages/xo-server/src/xo-mixins/subjects.mjs | 11 +- packages/xo-server/src/xo-mixins/vmware/index.mjs | 5 +- packages/xo-web/package.json | 2 +- packages/xo-web/src/common/copiable/index.css | 2 +- packages/xo-web/src/common/copiable/index.js | 16 +- packages/xo-web/src/common/form/toggle.js | 10 +- packages/xo-web/src/common/intl/messages.js | 5 + .../xo-web/src/xo-app/hub/recipes/recipe-ev.js | 4 + .../src/xo-app/hub/recipes/recipe-form-ev.js | 31 +- packages/xo-web/src/xo-app/new-vm/index.js | 3 +- packages/xo-web/src/xo-app/pool/tab-advanced.js | 66 +- packages/xo-web/src/xo-app/vm/action-bar.js | 12 +- packages/xo-web/src/xo-app/vm/tab-network.js | 2 +- yarn.lock | 3606 ++++++++++---------- 289 files changed, 9224 insertions(+), 5148 deletions(-) create mode 100644 @xen-orchestra/disk-transform/src/Throttled.mts create mode 100644 @xen-orchestra/disk-transform/src/Timeout.mts delete mode 100644 @xen-orchestra/lite/docs/modals.md delete mode 100644 @xen-orchestra/lite/src/components/ui/modals/ModalApproveButton.vue delete mode 100644 @xen-orchestra/lite/src/components/ui/modals/ModalCloseIcon.vue delete mode 100644 @xen-orchestra/lite/src/components/ui/modals/ModalContainer.vue delete mode 100644 
@xen-orchestra/lite/src/components/ui/modals/ModalDeclineButton.vue delete mode 100644 @xen-orchestra/lite/src/components/ui/modals/ModalList.vue delete mode 100644 @xen-orchestra/lite/src/components/ui/modals/ModalListItem.vue delete mode 100644 @xen-orchestra/lite/src/components/ui/modals/UiModal.vue delete mode 100644 @xen-orchestra/lite/src/components/ui/modals/layouts/BasicModalLayout.vue delete mode 100644 @xen-orchestra/lite/src/components/ui/modals/layouts/ConfirmModalLayout.vue delete mode 100644 @xen-orchestra/lite/src/components/ui/modals/layouts/FormModalLayout.vue delete mode 100644 @xen-orchestra/lite/src/composables/modal.composable.ts delete mode 100644 @xen-orchestra/lite/src/stores/modal.store.ts delete mode 100644 @xen-orchestra/lite/src/stories/modals/layouts/basic-modal-layout.story.md delete mode 100644 @xen-orchestra/lite/src/stories/modals/layouts/basic-modal-layout.story.vue delete mode 100644 @xen-orchestra/lite/src/stories/modals/layouts/confirm-modal-layout.story.md delete mode 100644 @xen-orchestra/lite/src/stories/modals/layouts/confirm-modal-layout.story.vue delete mode 100644 @xen-orchestra/lite/src/stories/modals/layouts/form-modal-layout.story.md delete mode 100644 @xen-orchestra/lite/src/stories/modals/layouts/form-modal-layout.story.vue delete mode 100644 @xen-orchestra/lite/src/stories/modals/modal-container.story.md delete mode 100644 @xen-orchestra/lite/src/stories/modals/modal-container.story.vue create mode 100644 @xen-orchestra/rest-api/src/open-api/oa-examples/pbd.oa-example.mts create mode 100644 @xen-orchestra/rest-api/src/pbds/pbd.controller.mts delete mode 100644 @xen-orchestra/rest-api/src/tasks/task.service.mts create mode 100644 @xen-orchestra/rest-api/src/users/user.middleware.mts create mode 100644 @xen-orchestra/web-core/docs/modals.md create mode 100644 @xen-orchestra/web-core/lib/components/modal/VtsModal.vue create mode 100644 @xen-orchestra/web-core/lib/components/modal/VtsModalButton.vue create mode 100644 
@xen-orchestra/web-core/lib/components/modal/VtsModalCancelButton.vue create mode 100644 @xen-orchestra/web-core/lib/components/modal/VtsModalConfirmButton.vue create mode 100644 @xen-orchestra/web-core/lib/components/modal/VtsModalList.vue create mode 100644 @xen-orchestra/web-core/lib/components/ui/modal/UiModal.vue create mode 100644 @xen-orchestra/web-core/lib/packages/modal/ModalProvider.vue create mode 100644 @xen-orchestra/web-core/lib/packages/modal/README.md create mode 100644 @xen-orchestra/web-core/lib/packages/modal/create-modal-opener.ts create mode 100644 @xen-orchestra/web-core/lib/packages/modal/modal.store.ts create mode 100644 @xen-orchestra/web-core/lib/packages/modal/types.ts create mode 100644 @xen-orchestra/web-core/lib/packages/modal/use-modal.ts create mode 100644 @xen-orchestra/web-core/lib/packages/table/README.md create mode 100644 @xen-orchestra/web-core/lib/packages/table/apply-extensions.ts create mode 100644 @xen-orchestra/web-core/lib/packages/table/define-columns.ts create mode 100644 @xen-orchestra/web-core/lib/packages/table/define-renderer/define-table-cell-renderer.ts create mode 100644 @xen-orchestra/web-core/lib/packages/table/define-renderer/define-table-renderer.ts create mode 100644 @xen-orchestra/web-core/lib/packages/table/define-renderer/define-table-row-renderer.ts create mode 100644 @xen-orchestra/web-core/lib/packages/table/define-renderer/define-table-section-renderer.ts create mode 100644 @xen-orchestra/web-core/lib/packages/table/define-table/define-multi-source-table.ts create mode 100644 @xen-orchestra/web-core/lib/packages/table/define-table/define-table.ts create mode 100644 @xen-orchestra/web-core/lib/packages/table/define-table/define-typed-table.ts create mode 100644 @xen-orchestra/web-core/lib/packages/table/index.ts create mode 100644 @xen-orchestra/web-core/lib/packages/table/transform-sources.ts create mode 100644 @xen-orchestra/web-core/lib/packages/table/types/extensions.ts create mode 100644 
@xen-orchestra/web-core/lib/packages/table/types/index.ts create mode 100644 @xen-orchestra/web-core/lib/packages/table/types/table-cell.ts create mode 100644 @xen-orchestra/web-core/lib/packages/table/types/table-row.ts create mode 100644 @xen-orchestra/web-core/lib/packages/table/types/table-section.ts create mode 100644 @xen-orchestra/web-core/lib/packages/table/types/table.ts create mode 100644 @xen-orchestra/web-core/lib/types/value-matcher.d.ts create mode 100644 @xen-orchestra/web-core/lib/types/vue-virtual-scroller.d.ts create mode 100644 @xen-orchestra/web-core/lib/utils/speed.util.ts create mode 100644 @xen-orchestra/web-core/lib/utils/to-computed.util.ts rename @xen-orchestra/web/src/components/{pool/dashboard => }/alarms/AlarmLink.vue (100%) create mode 100644 @xen-orchestra/web/src/components/alarms/DashboardAlarms.vue create mode 100644 @xen-orchestra/web/src/components/backups/jobs/panel/BackupJobsSidePanel.vue create mode 100644 @xen-orchestra/web/src/components/backups/jobs/panel/cards-items/BackupJobsSmartModePools.vue create mode 100644 @xen-orchestra/web/src/components/backups/jobs/panel/cards-items/BackupJobsSmartModeTags.vue create mode 100644 @xen-orchestra/web/src/components/backups/jobs/panel/cards-items/BackupJobsTargetsBrItem.vue create mode 100644 @xen-orchestra/web/src/components/backups/jobs/panel/cards-items/BackupJobsTargetsSection.vue create mode 100644 @xen-orchestra/web/src/components/backups/jobs/panel/cards-items/BackupJobsTargetsSrItem.vue create mode 100644 @xen-orchestra/web/src/components/backups/jobs/panel/cards-items/BackupRunItem.vue create mode 100644 @xen-orchestra/web/src/components/backups/jobs/panel/cards/BackupJobInfosCard.vue create mode 100644 @xen-orchestra/web/src/components/backups/jobs/panel/cards/BackupJobLogsCard.vue create mode 100644 @xen-orchestra/web/src/components/backups/jobs/panel/cards/BackupJobSchedulesCard.vue create mode 100644 
@xen-orchestra/web/src/components/backups/jobs/panel/cards/BackupJobSettingsCard.vue create mode 100644 @xen-orchestra/web/src/components/backups/jobs/panel/cards/BackupJobsBackedUpPoolsCard.vue create mode 100644 @xen-orchestra/web/src/components/backups/jobs/panel/cards/BackupJobsBackedUpVmsCard.vue create mode 100644 @xen-orchestra/web/src/components/backups/jobs/panel/cards/BackupJobsTargetsCard.vue create mode 100644 @xen-orchestra/web/src/components/backups/jobs/panel/cards/BackupSourceRepositoryCard.vue create mode 100644 @xen-orchestra/web/src/components/host/dashboard/HostDashboardPatches.vue delete mode 100644 @xen-orchestra/web/src/components/pool/dashboard/alarms/PoolDashboardAlarms.vue delete mode 100644 @xen-orchestra/web/src/components/site/backups/BackupJobsTable.vue delete mode 100644 @xen-orchestra/web/src/components/site/dashboard/AlarmLink.vue delete mode 100644 @xen-orchestra/web/src/components/site/dashboard/Alarms.vue create mode 100644 @xen-orchestra/web/src/remote-resources/use-xo-br-collection.ts create mode 100644 @xen-orchestra/web/src/remote-resources/use-xo-host-alarms-collection.ts create mode 100644 @xen-orchestra/web/src/remote-resources/use-xo-host-missing-patches-collection.ts delete mode 100644 @xen-orchestra/web/src/remote-resources/use-xo-metadata-backup-job-collection.ts delete mode 100644 @xen-orchestra/web/src/remote-resources/use-xo-mirror-backup-job-collection.ts create mode 100644 @xen-orchestra/web/src/remote-resources/use-xo-proxy-collection.ts create mode 100644 @xen-orchestra/web/src/remote-resources/use-xo-vm-alarms-collection.ts delete mode 100644 @xen-orchestra/web/src/remote-resources/use-xo-vm-backup-job-collection.ts create mode 100644 @xen-orchestra/web/src/types/xo/br.type.ts create mode 100644 @xen-orchestra/web/src/types/xo/proxy.type.ts create mode 100644 @xen-orchestra/web/src/utils/pattern.util.ts create mode 100644 docs/docs/object-storage-support.md create mode 100644 
packages/xo-server-sdn-controller/src/openflow-controller.js create mode 100644 packages/xo-server-sdn-controller/src/openflow-plugin.js Clearing directories... Installing... yarn install v1.22.22 [1/5] Validating package.json... [2/5] Resolving packages... [3/5] Fetching packages... [4/5] Linking dependencies... [5/5] Building fresh packages... $ husky install husky - Git hooks installed Done in 40.48s. yarn run v1.22.22 $ TURBO_TELEMETRY_DISABLED=1 turbo run build --filter xo-server --filter xo-server-'*' --filter xo-web • Packages in scope: xo-server, xo-server-audit, xo-server-auth-github, xo-server-auth-google, xo-server-auth-ldap, xo-server-auth-oidc, xo-server-auth-saml, xo-server-backup-reports, xo-server-load-balancer, xo-server-netbox, xo-server-perf-alert, xo-server-sdn-controller, xo-server-test-plugin, xo-server-transport-email, xo-server-transport-icinga2, xo-server-transport-nagios, xo-server-transport-slack, xo-server-transport-xmpp, xo-server-usage-report, xo-server-web-hooks, xo-web • Running build in 21 packages • Remote caching disabled Tasks: 30 successful, 30 total Cached: 0 cached, 30 total Time: 1m25.652s Done in 86.03s. Updated version 5.190.1 / 5.187.0 Updated commit d829816f62bffe16b19d8d0cfc3f08841aed10df 2025-10-16 16:47:01 +0200 Checking plugins... Ignoring xo-server-test plugin Cleanup plugins... Restarting xo-server... -

RE: Attempting to add new host fail on xoa and on server, worked on xcp-ng center

Guess who's back!

Adding another host to the pool. Tested before and after updating xoa again.

    pool.mergeInto
    {
      "sources": [
        "e4cf2039-3547-6574-0e10-96f9d91316f0"
      ],
      "target": "38aea760-cf23-927c-ccf5-90969681e04b",
      "force": true
    }
    {
      "message": "app.getLicenses is not a function",
      "name": "TypeError",
      "stack": "TypeError: app.getLicenses is not a function
        at enforceHostsHaveLicense (file:///opt/xen-orchestra/packages/xo-server/src/xo-mixins/pool.mjs:15:30)
        at Pools.apply (file:///opt/xen-orchestra/packages/xo-server/src/xo-mixins/pool.mjs:80:13)
        at Pools.mergeInto (/opt/xen-orchestra/node_modules/golike-defer/src/index.js:85:19)
        at Xo.mergeInto (file:///opt/xen-orchestra/packages/xo-server/src/api/pool.mjs:314:15)
        at Task.runInside (/opt/xen-orchestra/@vates/task/index.js:175:22)
        at Task.run (/opt/xen-orchestra/@vates/task/index.js:159:20)
        at Api.#callApiMethod (file:///opt/xen-orchestra/packages/xo-server/src/xo-mixins/api.mjs:469:18)"
    }

    Uncaught Object { code: -32000, data: {…}, stack: "", … } index.js:171:1258316
        default log-error.js:10
        (Async: setTimeout handler)
        default log-error.js:5
        e action-button.js:130
        InterpretGeneratorResume self-hosted:1332
        throw self-hosted:1279
        m action-button.js:10
        s action-button.js:10
        (Async: promise callback)
        m action-button.js:10
        i action-button.js:10
        _execute action-button.js:10
        _execute action-button.js:10
        _execute action-button.js:10
        React 5
        forEach self-hosted:157
        React 9
        (Async: EventListener.handleEvent)
        listen EventListener.js:29
        React 29

I also checked

    journalctl -u xo-server.service

    Nov 12 17:46:52 xoa xo-server[1223513]: 2025-11-12T17:46:52.761Z xo:api WARN jonathon@floatplanemedia.com | pool.mergeInto(...) [32ms] =!> TypeError: app.getLicenses is not a function
    Nov 12 17:49:14 xoa xo-server[1223513]: 2025-11-12T17:49:14.414Z xo:api WARN jonathon@floatplanemedia.com | pool.mergeInto(...) [2ms] =!> TypeError: app.getLicenses is not a function

-

RE: XOSTOR hyperconvergence preview

I have amazing news!

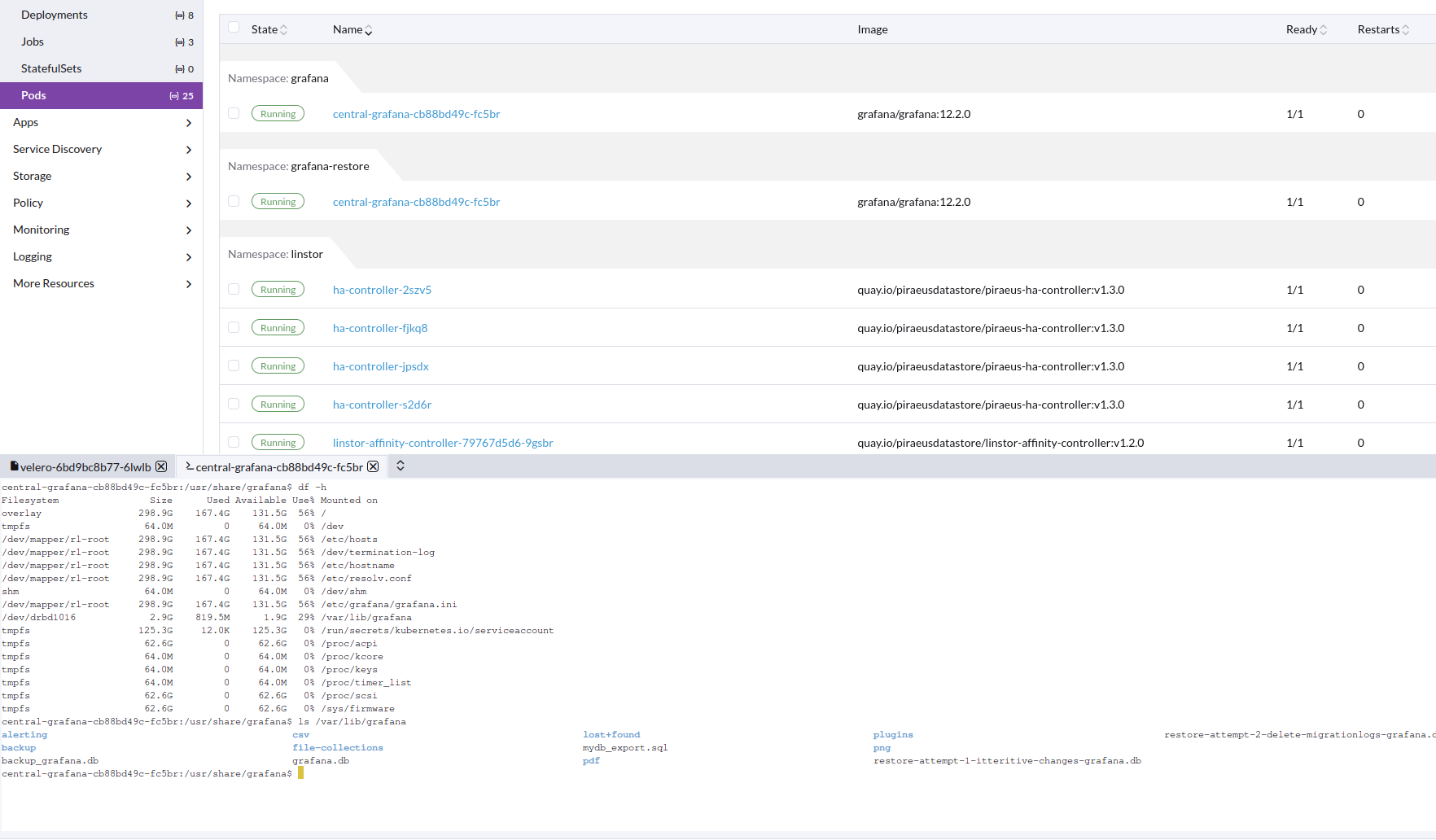

After the upgrade to xcp-ng 8.3, I retested velero backup, and it all just works

Completed Backup

jonathon@jonathon-framework:~$ velero --kubeconfig k8s_configs/production.yaml backup describe grafana-test Name: grafana-test Namespace: velero Labels: objectset.rio.cattle.io/hash=c2b5f500ab5d9b8ffe14f2c70bf3742291df565c velero.io/storage-location=default Annotations: objectset.rio.cattle.io/applied=H4sIAAAAAAAA/4SSQW/bPgzFvwvPtv9OajeJj/8N22HdBqxFL0MPlEQlWmTRkOhgQ5HvPsixE2yH7iji8ffIJ74CDu6ZYnIcoIMTeYpcOf7vtIICji4Y6OB/1MdxgAJ6EjQoCN0rYAgsKI5Dyk9WP0hLIqmi40qjiKfMcRlAq7pBY+py26qmbEi15a5p78vtaqe0oqbVVsO5AI+K/Ju4A6YDdKDXqrVtXaNqzU5traVVY9d6Uyt7t2nW693K2Pa+naABe4IO9hEtBiyFksClmgbUdN06a9NAOtvr5B4DDunA8uR64lGgg7u6rxMUYMji6OWZ/dhTeuIPaQ6os+gTFUA/tR8NmXd+TELxUfNA5hslHqOmBN13OF16ZwvNQShIqpZClYQj7qk6blPlGF5uzC/L3P+kvok7MB9z0OcCXPiLPLHmuLLWCfVfB4rTZ9/iaA5zHovNZz7R++k6JI50q89BXcuXYR5YT0DolkChABEPHWzW9cK+rPQx8jgsH/KQj+QT/frzXCdduc/Ca9u1Y7aaFvMu5Ang5Xz+HQAA//8X7Fu+/QIAAA objectset.rio.cattle.io/id=e104add0-85b4-4eb5-9456-819bcbe45cfc velero.io/resource-timeout=10m0s velero.io/source-cluster-k8s-gitversion=v1.33.4+rke2r1 velero.io/source-cluster-k8s-major-version=1 velero.io/source-cluster-k8s-minor-version=33 Phase: Completed Namespaces: Included: grafana Excluded: <none> Resources: Included cluster-scoped: <none> Excluded cluster-scoped: volumesnapshotcontents.snapshot.storage.k8s.io Included namespace-scoped: * Excluded namespace-scoped: volumesnapshots.snapshot.storage.k8s.io Label selector: <none> Or label selector: <none> Storage Location: default Velero-Native Snapshot PVs: true Snapshot Move Data: true Data Mover: velero TTL: 720h0m0s CSISnapshotTimeout: 30m0s ItemOperationTimeout: 4h0m0s Hooks: <none> Backup Format Version: 1.1.0 Started: 2025-10-15 15:29:52 -0700 PDT Completed: 2025-10-15 15:31:25 -0700 PDT Expiration: 2025-11-14 14:29:52 -0800 PST Total items to be backed up: 35 Items backed up: 35 Backup Item Operations: 1 of 1 completed successfully, 0 failed (specify --details for more information) Backup Volumes: Velero-Native Snapshots: <none included> CSI 
Snapshots: grafana/central-grafana: Data Movement: included, specify --details for more information Pod Volume Backups: <none included> HooksAttempted: 0 HooksFailed: 0

Completed Restore

jonathon@jonathon-framework:~$ velero --kubeconfig k8s_configs/production.yaml restore describe restore-grafana-test --details Name: restore-grafana-test Namespace: velero Labels: objectset.rio.cattle.io/hash=252addb3ed156c52d9fa9b8c045b47a55d66c0af Annotations: objectset.rio.cattle.io/applied=H4sIAAAAAAAA/3yRTW7zIBBA7zJrO5/j35gzfE2rtsomymIM45jGBgTjbKLcvaKJm6qL7kDwnt7ABdDpHfmgrQEBZxrJ25W2/85rSOCkjQIBrxTYeoIEJmJUyAjiAmiMZWRtTYhb232Q5EC88tquJDKPFEU6GlpUG5UVZdpUdZ6WZZ+niOtNWtR1SypvqC8buCYwYkfjn7oBwwAC8ipHpbqC1LqqZZWrtse228isrLqywapSdS0z7KPU4EQgwN+mSI8eezSYMgWG22lwKOl7/MgERzJmdChPs9veDL9IGfSbQRcGy+96IjszCCiyCRLQRo6zIrVd5AHEfuHhkIBmmp4d+a/3e9Dl8LPoCZ3T5hg7FvQRcR8nxt6XL7sAgv1MCZztOE+01P23cvmnPYzaxNtwuF4/AwAA//8k6OwC/QEAAA objectset.rio.cattle.io/id=9ad8d034-7562-44f2-aa18-3669ed27ef47 Phase: Completed Total items to be restored: 33 Items restored: 33 Started: 2025-10-15 15:35:26 -0700 PDT Completed: 2025-10-15 15:36:34 -0700 PDT Warnings: Velero: <none> Cluster: <none> Namespaces: grafana-restore: could not restore, ConfigMap:elasticsearch-es-transport-ca-internal already exists. Warning: the in-cluster version is different than the backed-up version could not restore, ConfigMap:kube-root-ca.crt already exists. 
Warning: the in-cluster version is different than the backed-up version Backup: grafana-test Namespaces: Included: grafana Excluded: <none> Resources: Included: * Excluded: nodes, events, events.events.k8s.io, backups.velero.io, restores.velero.io, resticrepositories.velero.io, csinodes.storage.k8s.io, volumeattachments.storage.k8s.io, backuprepositories.velero.io Cluster-scoped: auto Namespace mappings: grafana=grafana-restore Label selector: <none> Or label selector: <none> Restore PVs: true CSI Snapshot Restores: grafana-restore/central-grafana: Data Movement: Operation ID: dd-ffa56e1c-9fd0-44b4-a8bb-8163f40a49e9.330b82fc-ca6a-423217ee5 Data Mover: velero Uploader Type: kopia Existing Resource Policy: <none> ItemOperationTimeout: 4h0m0s Preserve Service NodePorts: auto Restore Item Operations: Operation for persistentvolumeclaims grafana-restore/central-grafana: Restore Item Action Plugin: velero.io/csi-pvc-restorer Operation ID: dd-ffa56e1c-9fd0-44b4-a8bb-8163f40a49e9.330b82fc-ca6a-423217ee5 Phase: Completed Progress: 856284762 of 856284762 complete (Bytes) Progress description: Completed Created: 2025-10-15 15:35:28 -0700 PDT Started: 2025-10-15 15:36:06 -0700 PDT Updated: 2025-10-15 15:36:26 -0700 PDT HooksAttempted: 0 HooksFailed: 0 Resource List: apps/v1/Deployment: - grafana-restore/central-grafana(created) - grafana-restore/grafana-debug(created) apps/v1/ReplicaSet: - grafana-restore/central-grafana-5448b9f65(created) - grafana-restore/central-grafana-56887c6cb6(created) - grafana-restore/central-grafana-56ddd4f497(created) - grafana-restore/central-grafana-5f4757844b(created) - grafana-restore/central-grafana-5f69f86c85(created) - grafana-restore/central-grafana-64545dcdc(created) - grafana-restore/central-grafana-69c66c54d9(created) - grafana-restore/central-grafana-6c8d6f65b8(created) - grafana-restore/central-grafana-7b479f79ff(created) - grafana-restore/central-grafana-bc7d96cdd(created) - grafana-restore/central-grafana-cb88bd49c(created) - 
grafana-restore/grafana-debug-556845ff7b(created) - grafana-restore/grafana-debug-6fb594cb5f(created) - grafana-restore/grafana-debug-8f66bfbf6(created) discovery.k8s.io/v1/EndpointSlice: - grafana-restore/central-grafana-hkgd5(created) networking.k8s.io/v1/Ingress: - grafana-restore/central-grafana(created) rbac.authorization.k8s.io/v1/Role: - grafana-restore/central-grafana(created) rbac.authorization.k8s.io/v1/RoleBinding: - grafana-restore/central-grafana(created) v1/ConfigMap: - grafana-restore/central-grafana(created) - grafana-restore/elasticsearch-es-transport-ca-internal(failed) - grafana-restore/kube-root-ca.crt(failed) v1/Endpoints: - grafana-restore/central-grafana(created) v1/PersistentVolume: - pvc-e3f6578f-08b2-4e79-85f0-76bbf8985b55(skipped) v1/PersistentVolumeClaim: - grafana-restore/central-grafana(created) v1/Pod: - grafana-restore/central-grafana-cb88bd49c-fc5br(created) v1/Secret: - grafana-restore/fpinfra-net-cf-cert(created) - grafana-restore/grafana(created) v1/Service: - grafana-restore/central-grafana(created) v1/ServiceAccount: - grafana-restore/central-grafana(created) - grafana-restore/default(skipped) velero.io/v2alpha1/DataUpload: - velero/grafana-test-nw7zj(skipped)

Image of working restore pod, with correct data in PV
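If you want to check a backup or restore from a script rather than by eye, here is a minimal Python sketch that pulls the phase and item counters out of `velero ... describe` text. It assumes the output has one field per line (the paste above lost its line breaks), and the function names `parse_describe` and `is_complete` are made up for this example; they are not part of Velero.

```python
import re


def parse_describe(text):
    """Extract the phase and item counters from `velero backup/restore describe` text.

    Assumes one "Key: value" field per line; returns None for missing fields.
    """
    def find(pattern):
        match = re.search(pattern, text, re.MULTILINE)
        return match.group(1) if match else None

    phase = find(r"^\s*Phase:\s+(\S+)")
    total = find(r"^\s*Total items to be (?:backed up|restored):\s+(\d+)")
    done = find(r"^\s*Items (?:backed up|restored):\s+(\d+)")
    return {
        "phase": phase,
        "total": int(total) if total is not None else None,
        "done": int(done) if done is not None else None,
    }


def is_complete(summary):
    """True when the phase is Completed and every item was processed."""
    return (
        summary["phase"] == "Completed"
        and summary["total"] is not None
        and summary["total"] == summary["done"]
    )


# Values taken from the backup and restore shown in this post.
backup_text = """Name: grafana-test
Phase: Completed
Total items to be backed up: 35
Items backed up: 35
"""
restore_text = """Name: restore-grafana-test
Phase: Completed
Total items to be restored: 33
Items restored: 33
"""

if __name__ == "__main__":
    for label, text in (("backup", backup_text), ("restore", restore_text)):
        print(label, parse_describe(text), is_complete(parse_describe(text)))
```

The text would normally come from running the `velero ... describe` commands shown above and capturing stdout; that part is left out so the sketch runs without a cluster.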

Velero installed from helm: https://vmware-tanzu.github.io/helm-charts

Version: velero:11.1.0

Values

    ---
    image:
      repository: velero/velero
      tag: v1.17.0

    # Whether to deploy the restic daemonset.
    deployNodeAgent: true

    initContainers:
      - name: velero-plugin-for-aws
        image: velero/velero-plugin-for-aws:latest
        imagePullPolicy: IfNotPresent
        volumeMounts:
          - mountPath: /target
            name: plugins

    configuration:
      defaultItemOperationTimeout: 2h
      features: EnableCSI
      defaultSnapshotMoveData: true

      backupStorageLocation:
        - name: default
          provider: aws
          bucket: velero
          config:
            region: us-east-1
            s3ForcePathStyle: true
            s3Url: https://s3.location

      # Destination VSL points to LINSTOR snapshot class
      volumeSnapshotLocation:
        - name: linstor
          provider: velero.io/csi
          config:
            snapshotClass: linstor-vsc

    credentials:
      useSecret: true
      existingSecret: velero-user

    metrics:
      enabled: true

      serviceMonitor:
        enabled: true

      prometheusRule:
        enabled: true
        # Additional labels to add to deployed PrometheusRule
        additionalLabels: {}
        # PrometheusRule namespace. Defaults to Velero namespace.
        # namespace: ""
        # Rules to be deployed
        spec:
          - alert: VeleroBackupPartialFailures
            annotations:
              message: Velero backup {{ $labels.schedule }} has {{ $value | humanizePercentage }} partialy failed backups.
            expr: |-
              velero_backup_partial_failure_total{schedule!=""} / velero_backup_attempt_total{schedule!=""} > 0.25
            for: 15m
            labels:
              severity: warning
          - alert: VeleroBackupFailures
            annotations:
              message: Velero backup {{ $labels.schedule }} has {{ $value | humanizePercentage }} failed backups.
            expr: |-
              velero_backup_failure_total{schedule!=""} / velero_backup_attempt_total{schedule!=""} > 0.25
            for: 15m
            labels:
              severity: warning

Also create the following.

    apiVersion: snapshot.storage.k8s.io/v1
    kind: VolumeSnapshotClass
    metadata:
      name: linstor-vsc
      labels:
        velero.io/csi-volumesnapshot-class: "true"
    driver: linstor.csi.linbit.com
    deletionPolicy: Delete

We are using Piraeus operator to use xostor in k8s

https://github.com/piraeusdatastore/piraeus-operator.git

Version: v2.9.1

Values:

    ---
    operator:
      resources:
        requests:
          cpu: 250m
          memory: 500Mi
        limits:
          memory: 1Gi
    installCRDs: true
    imageConfigOverride:
      - base: quay.io/piraeusdatastore
        components:
          linstor-satellite:
            image: piraeus-server
            tag: v1.29.0
    tls:
      certManagerIssuerRef:
        name: step-issuer
        kind: StepClusterIssuer
        group: certmanager.step.sm

Then we just connect to the xostor cluster like external linstor controller.

-

RE: Attempting to add new host fail on xoa and on server, worked on xcp-ng center

@Danp Sorry, due to the host being added and me continuing on with my other tasks, it is not. I could have made that more clear.

-

RE: Attempting to add new host fail on xoa and on server, worked on xcp-ng center

It does not matter now, the host has been added. I just found the error interesting and thought someone would want to know about it.

-

RE: Attempting to add new host fail on xoa and on server, worked on xcp-ng center

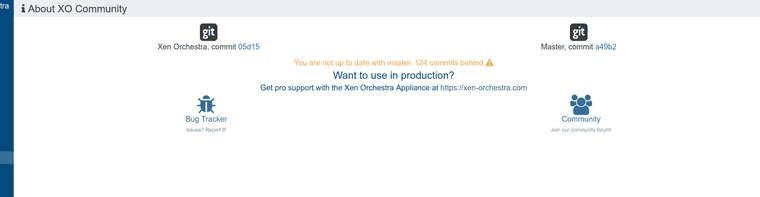

I ran the upgrade yesterday. And this is the state that it gave me

installing : node-v22.15.1 mkdir : /usr/local/n/versions/node/22.15.1 fetch : https://nodejs.org/dist/v22.15.1/node-v22.15.1-linux-x64.tar.xz installed : v22.15.1 (with npm 10.9.2) Stopping xo-server... Checking for Yarn package... Checking for Yarn update... Reading package lists... ... yarn install v1.22.22 [1/5] Validating package.json... [2/5] Resolving packages... [3/5] Fetching packages... [4/5] Linking dependencies... [5/5] Building fresh packages... $ husky install husky - Git hooks installed Done in 46.08s. yarn run v1.22.22 $ TURBO_TELEMETRY_DISABLED=1 turbo run build --filter xo-server --filter xo-server-'*' --filter xo-web • Packages in scope: xo-server, xo-server-audit, xo-server-auth-github, xo-server-auth-google, xo-server-auth-ldap, xo-server-auth-oidc, xo-server-auth-saml, xo-server-backup-reports, xo-server-load-balancer, xo-server-netbox, xo-server-perf-alert, xo-server-sdn-controller, xo-server-test, xo-server-test-plugin, xo-server-transport-email, xo-server-transport-icinga2, xo-server-transport-nagios, xo-server-transport-slack, xo-server-transport-xmpp, xo-server-usage-report, xo-server-web-hooks, xo-web • Running build in 22 packages • Remote caching disabled Tasks: 29 successful, 29 total Cached: 0 cached, 29 total Time: 1m18.85s Done in 79.13s. Updated version 5.177.0 / 5.173.2 Updated commit a49b27bff7d325f704957b8aac3055ad0407bd40 2025-05-20 16:42:02 +0200 Checking plugins... Ignoring xo-server-test plugin Cleanup plugins... Restarting xo-server... -

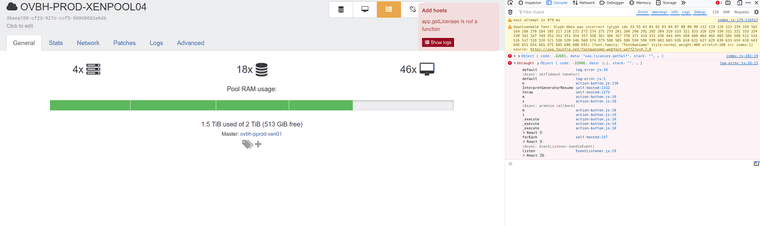

Attempting to add new host fail on xoa and on server, worked on xcp-ng center

I got the following when trying to add a new host to an existing pool

    pool.mergeInto
    {
      "sources": [
        "0af9d764-44e6-1393-4eea-6cb59b934f2a"
      ],
      "target": "38aea760-cf23-927c-ccf5-90969681e04b",
      "force": true
    }
    {
      "message": "app.getLicenses is not a function",
      "name": "TypeError",
      "stack": "TypeError: app.getLicenses is not a function
        at enforceHostsHaveLicense (file:///opt/xen-orchestra/packages/xo-server/src/xo-mixins/pool.mjs:15:30)
        at Pools.apply (file:///opt/xen-orchestra/packages/xo-server/src/xo-mixins/pool.mjs:80:13)
        at Pools.mergeInto (/opt/xen-orchestra/node_modules/golike-defer/src/index.js:85:19)
        at Xo.mergeInto (file:///opt/xen-orchestra/packages/xo-server/src/api/pool.mjs:311:15)
        at Task.runInside (/opt/xen-orchestra/@vates/task/index.js:175:22)
        at Task.run (/opt/xen-orchestra/@vates/task/index.js:159:20)
        at Api.#callApiMethod (file:///opt/xen-orchestra/packages/xo-server/src/xo-mixins/api.mjs:469:18)"
    }

I had no idea what was up with this error, so I updated the xoa vm and installed updates, but I still got the same error.

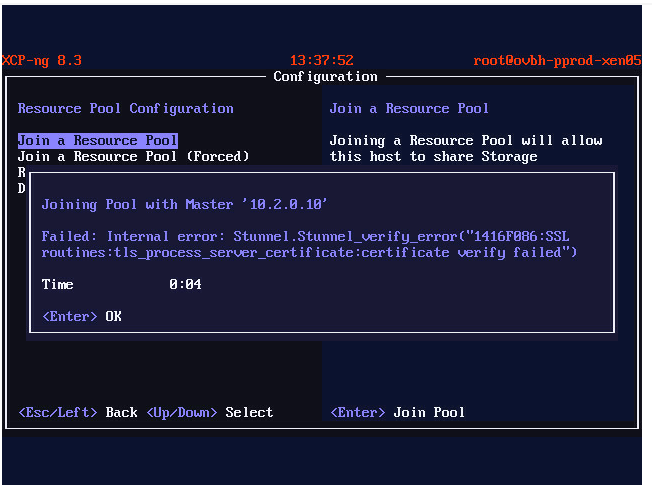

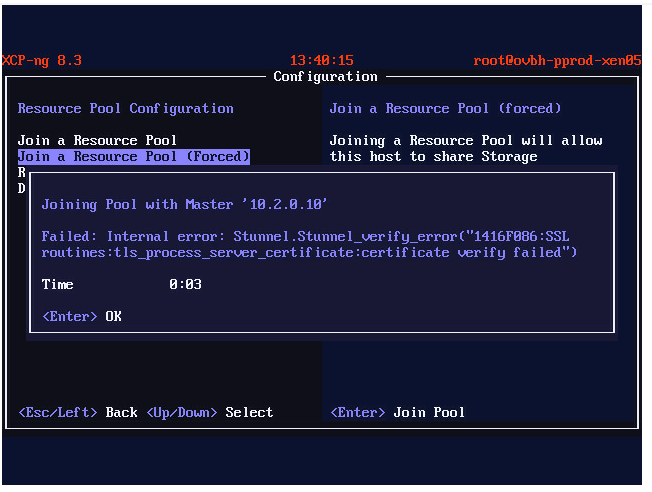

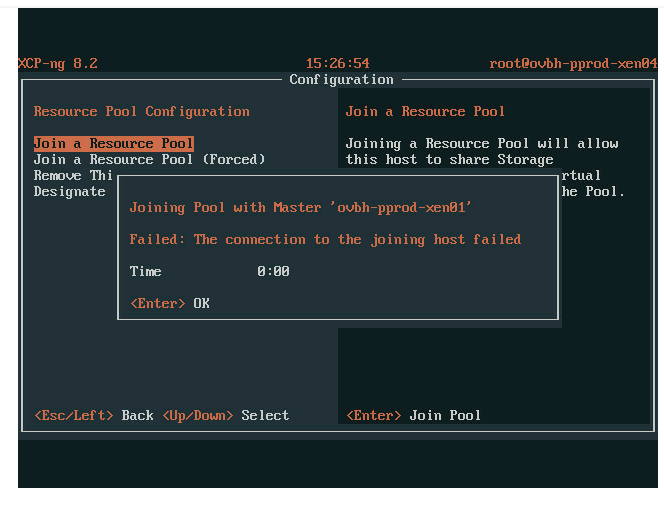

I then tried adding it from the server itself; that also failed.

/home/jonathon/Pictures/Screenshots/Screenshot from 2025-05-20 15-26-59.png

Just to see if it would work, I then tried with the latest xcp-ng center, and that worked.

So I do not need anything now, but thought it was interesting and worth posting.

-

RE: DevOps Megathread: what you need and how we can help!

@andrewperry I myself migrated our rancher management cluster from the original rke to a new rke2 cluster using this plan not too long ago, so you should not have much trouble. Feel free to ask questions

-

RE: DevOps Megathread: what you need and how we can help!

@nathanael-h Nice

If you have any questions let me know, I have been using this for all our on prem clusters for a while now.

-

RE: DevOps Megathread: what you need and how we can help!

I do not have any asks ATM, but I thought I would just share my plan that I use to create k8s clusters that we have been using for a while now.

It has grown over time and may be a bit messy, but I figured better than nothing. We use this for rke2 rancher k8s clusters deployed onto our xcp-ng cluster. We use xostor for drives, and the vlan5 network is for piraeus operator to use for pv. We also use IPVS. We are using a rocky linux 9 vm template.

If these are useful to anyone and they have questions I will do my best to answer.
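As a reading aid for the plan in this post: every per-node map is keyed by the strings "1" to "9", and each VM resource reaches its slice with `count.index + offset + 1` (offset 0 for masters, 3 for workers, 6 for smtp workers). The Python sketch below only models that arithmetic and converts the byte values to GiB. The names `GROUPS`, `node_keys`, and `layout` are made up for illustration and are not part of the Terraform provider.

```python
GIB = 1024 ** 3

# (resource name, offset into the "1".."9" key space, count, memory bytes, disk bytes)
# Values copied from the xenorchestra_vm resources in the plan.
GROUPS = [
    ("master", 0, 3, 8589934592, 107374182400),
    ("worker", 3, 3, 68719476736, 322122547200),
    ("smtp", 6, 3, 8589934592, 53687091200),
]


def node_keys(offset, count):
    """Map keys selected by lookup(var.x, count.index + offset + 1)."""
    return [str(index + offset + 1) for index in range(count)]


def to_gib(size_bytes):
    """Convert a byte count to whole GiB."""
    return size_bytes // GIB


def layout():
    """One row per VM: (map key, resource name, memory GiB, disk GiB)."""
    rows = []
    for name, offset, count, memory, disk in GROUPS:
        for key in node_keys(offset, count):
            rows.append((key, name, to_gib(memory), to_gib(disk)))
    return rows


if __name__ == "__main__":
    for row in layout():
        print(row)
```

So keys 1 to 3 are the 8 GiB / 100 GiB masters, 4 to 6 the 64 GiB / 300 GiB workers, and 7 to 9 the 8 GiB / 50 GiB smtp workers. If you change a `count`, the offsets in the other resources have to move with it.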

    variable "pool" {
      default = "OVBH-PROD-XENPOOL04"
    }

    variable "network0" {
      default = "Native vRack"
    }

    variable "network1" {
      default = "VLAN80"
    }

    variable "network2" {
      default = "VLAN5"
    }

    variable "cluster_name" {
      default = "Production K8s Cluster"
    }

    variable "enrollment_command" {
      default = "curl -fL https://rancher.<redacted>.net/system-agent-install.sh | sudo sh -s - --server https://rancher.<redacted>.net --label 'cattle.io/os=linux' --token <redacted>"
    }

    variable "node_type" {
      description = "Node type flag"
      default = {
        "1" = "--etcd --controlplane",
        "2" = "--etcd --controlplane",
        "3" = "--etcd --controlplane",
        "4" = "--worker",
        "5" = "--worker",
        "6" = "--worker",
        "7" = "--worker --taints smtp=true:NoSchedule",
        "8" = "--worker --taints smtp=true:NoSchedule",
        "9" = "--worker --taints smtp=true:NoSchedule"
      }
    }

    variable "node_networks" {
      description = "Node network flag"
      default = {
        "1" = "--internal-address 10.1.8.100 --address <redacted>",
        "2" = "--internal-address 10.1.8.101 --address <redacted>",
        "3" = "--internal-address 10.1.8.102 --address <redacted>",
        "4" = "--internal-address 10.1.8.103 --address <redacted>",
        "5" = "--internal-address 10.1.8.104 --address <redacted>",
        "6" = "--internal-address 10.1.8.105 --address <redacted>",
        "7" = "--internal-address 10.1.8.106 --address <redacted>",
        "8" = "--internal-address 10.1.8.107 --address <redacted>",
        "9" = "--internal-address 10.1.8.108 --address <redacted>"
      }
    }

    variable "vm_name" {
      description = "Node type flag"
      default = {
        "1" = "OVBH-VPROD-K8S01-MASTER01",
        "2" = "OVBH-VPROD-K8S01-MASTER02",
        "3" = "OVBH-VPROD-K8S01-MASTER03",
        "4" = "OVBH-VPROD-K8S01-WORKER01",
        "5" = "OVBH-VPROD-K8S01-WORKER02",
        "6" = "OVBH-VPROD-K8S01-WORKER03",
        "7" = "OVBH-VPROD-K8S01-WORKER04",
        "8" = "OVBH-VPROD-K8S01-WORKER05",
        "9" = "OVBH-VPROD-K8S01-WORKER06"
      }
    }

    variable "preferred_host" {
      default = {
        "1" = "85838113-e4b8-4520-9f6d-8f3cf554c8f1",
        "2" = "783c27ac-2dcb-4798-9ca8-27f5f30791f6",
        "3" = "c03e1a45-4c4c-46f5-a2a1-d8de2e22a866",
        "4" = "85838113-e4b8-4520-9f6d-8f3cf554c8f1",
        "5" = "783c27ac-2dcb-4798-9ca8-27f5f30791f6",
        "6" = "c03e1a45-4c4c-46f5-a2a1-d8de2e22a866",
        "7" = "85838113-e4b8-4520-9f6d-8f3cf554c8f1",
        "8" = "783c27ac-2dcb-4798-9ca8-27f5f30791f6",
        "9" = "c03e1a45-4c4c-46f5-a2a1-d8de2e22a866"
      }
    }

    variable "xoa_admin_password" {
    }

    variable "host_count" {
      description = "All drives go to xostor"
      default = {
        "1" = "479ca676-20a1-4051-7189-a4a9ca47e00d",
        "2" = "479ca676-20a1-4051-7189-a4a9ca47e00d",
        "3" = "479ca676-20a1-4051-7189-a4a9ca47e00d",
        "4" = "479ca676-20a1-4051-7189-a4a9ca47e00d",
        "5" = "479ca676-20a1-4051-7189-a4a9ca47e00d",
        "6" = "479ca676-20a1-4051-7189-a4a9ca47e00d",
        "7" = "479ca676-20a1-4051-7189-a4a9ca47e00d",
        "8" = "479ca676-20a1-4051-7189-a4a9ca47e00d",
        "9" = "479ca676-20a1-4051-7189-a4a9ca47e00d"
      }
    }

    variable "network1_ip_mapping" {
      description = "Mapping for network1 ips, vlan80"
      default = {
        "1" = "10.1.8.100",
        "2" = "10.1.8.101",
        "3" = "10.1.8.102",
        "4" = "10.1.8.103",
        "5" = "10.1.8.104",
        "6" = "10.1.8.105",
        "7" = "10.1.8.106",
        "8" = "10.1.8.107",
        "9" = "10.1.8.108"
      }
    }

    variable "network1_gateway" {
      description = "Mapping for public ip gateways, from hosts"
      default = "10.1.8.1"
    }

    variable "network1_prefix" {
      description = "Prefix for the network used"
      default = "22"
    }

    variable "network2_ip_mapping" {
      description = "Mapping for network2 ips, VLAN5"
      default = {
        "1" = "10.2.5.30",
        "2" = "10.2.5.31",
        "3" = "10.2.5.32",
        "4" = "10.2.5.33",
        "5" = "10.2.5.34",
        "6" = "10.2.5.35",
        "7" = "10.2.5.36",
        "8" = "10.2.5.37",
        "9" = "10.2.5.38"
      }
    }

    variable "network2_prefix" {
      description = "Prefix for the network used"
      default = "22"
    }

    variable "network0_ip_mapping" {
      description = "Mapping for network0 ips, public"
      default = {
        <redacted>
      }
    }

    variable "network0_gateway" {
      description = "Mapping for public ip gateways, from hosts"
      default = {
        <redacted>
      }
    }

    variable "network0_prefix" {
      description = "Prefix for the network used"
      default = {
        <redacted>
      }
    }

    # Instruct terraform to download the provider on `terraform init`
    terraform {
      required_providers {
        xenorchestra = {
          source = "vatesfr/xenorchestra"
          version = "~> 0.29.0"
        }
      }
    }

    # Configure the XenServer Provider
    provider "xenorchestra" {
      # Must be ws or wss
      url = "ws://10.2.0.5" # Or set XOA_URL environment variable
      username = "admin@admin.net" # Or set XOA_USER environment variable
      password = var.xoa_admin_password # Or set XOA_PASSWORD environment variable
    }

    data "xenorchestra_pool" "pool" {
      name_label = var.pool
    }

    data "xenorchestra_template" "template" {
      name_label = "Rocky Linux 9 Template"
      pool_id = data.xenorchestra_pool.pool.id
    }

    data "xenorchestra_network" "net1" {
      name_label = var.network1
      pool_id = data.xenorchestra_pool.pool.id
    }

    data "xenorchestra_network" "net2" {
      name_label = var.network2
      pool_id = data.xenorchestra_pool.pool.id
    }

    data "xenorchestra_network" "net0" {
      name_label = var.network0
      pool_id = data.xenorchestra_pool.pool.id
    }

    resource "xenorchestra_cloud_config" "node" {
      count = 9
      name = "${lower(lookup(var.vm_name, count.index + 1))}_cloud_config"
      template = <<EOF
    #cloud-config
    ssh_authorized_keys:
      - ssh-rsa <redacted>

    write_files:
      - path: /etc/NetworkManager/conf.d/rke2-canal.conf
        permissions: '0755'
        owner: root
        content: |
          [keyfile]
          unmanaged-devices=interface-name:cali*;interface-name:flannel*
      - path: /tmp/selinux_kmod_drbd.log
        permissions: '0640'
        owner: root
        content: |
          type=AVC msg=audit(1661803314.183:778): avc: denied { module_load } for pid=148256 comm="insmod" path="/tmp/ko/drbd.ko" dev="overlay" ino=101839829 scontext=system_u:system_r:unconfined_service_t:s0 tcontext=system_u:object_r:var_lib_t:s0 tclass=system permissive=0
          type=AVC msg=audit(1661803314.185:779): avc: denied { module_load } for pid=148257 comm="insmod" path="/tmp/ko/drbd_transport_tcp.ko" dev="overlay" ino=101839831 scontext=system_u:system_r:unconfined_service_t:s0 tcontext=system_u:object_r:var_lib_t:s0 tclass=system permissive=0
      - path: /etc/sysconfig/modules/ipvs.modules
        permissions: 0755
        owner: root
        content: |
          #!/bin/bash
          modprobe -- ip_vs
          modprobe -- ip_vs_rr
          modprobe -- ip_vs_wrr
          modprobe -- ip_vs_sh
          modprobe -- nf_conntrack
      - path: /etc/modules-load.d/ipvs.conf
        permissions: 0755
        owner: root
        content: |
          ip_vs
          ip_vs_rr
          ip_vs_wrr
          ip_vs_sh
          nf_conntrack

    #cloud-init
    runcmd:
      - sudo hostnamectl set-hostname --static ${lower(lookup(var.vm_name, count.index + 1))}.<redacted>.com
      - sudo hostnamectl set-hostname ${lower(lookup(var.vm_name, count.index + 1))}.<redacted>.com
      - nmcli -t -f NAME con show | xargs -d '\n' -I {} nmcli con delete "{}"
      - nmcli con add type ethernet con-name public ifname enX0
      - nmcli con mod public ipv4.address '${lookup(var.network0_ip_mapping, count.index + 1)}/${lookup(var.network0_prefix, count.index + 1)}'
      - nmcli con mod public ipv4.method manual
      - nmcli con mod public ipv4.ignore-auto-dns yes
      - nmcli con mod public ipv4.gateway '${lookup(var.network0_gateway, count.index + 1)}'
      - nmcli con mod public ipv4.dns "8.8.8.8 8.8.4.4"
      - nmcli con mod public connection.autoconnect true
      - nmcli con up public
      - nmcli con add type ethernet con-name vlan80 ifname enX1
      - nmcli con mod vlan80 ipv4.address '${lookup(var.network1_ip_mapping, count.index + 1)}/${var.network1_prefix}'
      - nmcli con mod vlan80 ipv4.method manual
      - nmcli con mod vlan80 ipv4.ignore-auto-dns yes
      - nmcli con mod vlan80 ipv4.ignore-auto-routes yes
      - nmcli con mod vlan80 ipv4.gateway '${var.network1_gateway}'
      - nmcli con mod vlan80 ipv4.dns "${var.network1_gateway}"
      - nmcli con mod vlan80 connection.autoconnect true
      - nmcli con mod vlan80 ipv4.never-default true
      - nmcli con mod vlan80 ipv6.never-default true
      - nmcli con mod vlan80 ipv4.routes "10.0.0.0/8 ${var.network1_gateway}"
      - nmcli con up vlan80
      - nmcli con add type ethernet con-name vlan5 ifname enX2
      - nmcli con mod vlan5 ipv4.address '${lookup(var.network2_ip_mapping, count.index + 1)}/${var.network2_prefix}'
      - nmcli con mod vlan5 ipv4.method manual
      - nmcli con mod vlan5 ipv4.ignore-auto-dns yes
      - nmcli con mod vlan5 ipv4.ignore-auto-routes yes
      - nmcli con mod vlan5 connection.autoconnect true
      - nmcli con mod vlan5 ipv4.never-default true
      - nmcli con mod vlan5 ipv6.never-default true
      - nmcli con up vlan5
      - systemctl restart NetworkManager
      - dnf upgrade -y
      - dnf install ipset ipvsadm -y
      - bash /etc/sysconfig/modules/ipvs.modules
      - dnf install chrony -y
      - sudo systemctl enable --now chronyd
      - yum install kernel-devel kernel-headers -y
      - yum install elfutils-libelf-devel -y
      - swapoff -a
      - modprobe -- ip_tables
      - systemctl disable --now firewalld.service
      - systemctl disable --now rngd
      - dnf config-manager --add-repo=https://download.docker.com/linux/centos/docker-ce.repo
      - dnf install containerd.io tar -y
      - dnf install policycoreutils-python-utils -y
      - cat /tmp/selinux_kmod_drbd.log | sudo audit2allow -M insmoddrbd
      - sudo semodule -i insmoddrbd.pp
      - ${var.enrollment_command} ${lookup(var.node_type, count.index + 1)} ${lookup(var.node_networks, count.index + 1)}

    bootcmd:
      - swapoff -a
      - modprobe -- ip_tables
    EOF
    }

    resource "xenorchestra_vm" "master" {
      count = 3
      cpus = 4
      memory_max = 8589934592
      cloud_config = xenorchestra_cloud_config.node[count.index].template
      name_label = lookup(var.vm_name, count.index + 1)
      name_description = "${var.cluster_name} master"
      template = data.xenorchestra_template.template.id
      auto_poweron = true
      affinity_host = lookup(var.preferred_host, count.index + 1)

      network {
        network_id = data.xenorchestra_network.net0.id
      }

      network {
        network_id = data.xenorchestra_network.net1.id
      }

      network {
        network_id = data.xenorchestra_network.net2.id
      }

      disk {
        sr_id = lookup(var.host_count, count.index + 1)
        name_label = "Terraform_disk_imavo"
        size = 107374182400
      }
    }

    resource "xenorchestra_vm" "worker" {
      count = 3
      cpus = 32
      memory_max = 68719476736
      cloud_config = xenorchestra_cloud_config.node[count.index + 3].template
      name_label = lookup(var.vm_name, count.index + 3 + 1)
      name_description = "${var.cluster_name} worker"
      template = data.xenorchestra_template.template.id
      auto_poweron = true
      affinity_host = lookup(var.preferred_host, count.index + 3 + 1)

      network {
        network_id = data.xenorchestra_network.net0.id
      }

      network {
        network_id = data.xenorchestra_network.net1.id
      }

      network {
        network_id = data.xenorchestra_network.net2.id
      }

      disk {
        sr_id = lookup(var.host_count, count.index + 3 + 1)
        name_label = "Terraform_disk_imavo"
        size = 322122547200
      }
    }

    resource "xenorchestra_vm" "smtp" {
      count = 3
      cpus = 4
      memory_max = 8589934592
      cloud_config = xenorchestra_cloud_config.node[count.index + 6].template
      name_label = lookup(var.vm_name, count.index + 6 + 1)
      name_description = "${var.cluster_name} smtp worker"
      template = data.xenorchestra_template.template.id
      auto_poweron = true
      affinity_host = lookup(var.preferred_host, count.index + 6 + 1)

      network {
        network_id = data.xenorchestra_network.net0.id
      }

      network {
        network_id = data.xenorchestra_network.net1.id
      }

      network {
        network_id = data.xenorchestra_network.net2.id
      }

      disk {
        sr_id = lookup(var.host_count, count.index + 6 + 1)
        name_label = "Terraform_disk_imavo"
        size = 53687091200
      }
    }

-

RE: XOSTOR hyperconvergence preview

OK we have debugged and improved this process, so including it here if it helps anyone else.

How to migrate resources between XOSTOR (linstor) clusters. This also works with piraeus-operator, which we use for k8s.

Manually moving a linstor resource with thin_send_recv

Migration of data

Commands

    # PV: pvc-6408a214-6def-44c4-8d9a-bebb67be5510
    # S: pgdata-snapshot
    # s: 10741612544B

    #get size
    lvs --noheadings --units B -o lv_size linstor_group/pvc-6408a214-6def-44c4-8d9a-bebb67be5510_00000

    #prep
    lvcreate -V 10741612544B --thinpool linstor_group/thin_device -n pvc-6408a214-6def-44c4-8d9a-bebb67be5510_00000 linstor_group

    #create snapshot
    linstor --controller original-xostor-server s create pvc-6408a214-6def-44c4-8d9a-bebb67be5510 pgdata-snapshot

    #send
    thin_send linstor_group/pvc-6408a214-6def-44c4-8d9a-bebb67be5510_00000_pgdata-snapshot 2>/dev/null | ssh root@new-xostor-server-01 thin_recv linstor_group/pvc-6408a214-6def-44c4-8d9a-bebb67be5510_00000 2>/dev/null

Walk-through

Prep migration

    [13:29 original-xostor-server ~]# lvs --noheadings --units B -o lv_size linstor_group/pvc-12aca72c-d94a-4c09-8102-0a6646906f8d_00000
      26851934208B
    [13:53 new-xostor-server-01 ~]# lvcreate -V 26851934208B --thinpool linstor_group/thin_device -n pvc-12aca72c-d94a-4c09-8102-0a6646906f8d_00000 linstor_group
      Logical volume "pvc-12aca72c-d94a-4c09-8102-0a6646906f8d_00000" created.

Create snapshot

    [15:35:03] jonathon@jonathon-framework:~$ linstor --controller original-xostor-server s create pvc-12aca72c-d94a-4c09-8102-0a6646906f8d s_test
    SUCCESS:
    Description:
        New snapshot 's_test' of resource 'pvc-12aca72c-d94a-4c09-8102-0a6646906f8d' registered.
    Details:
        Snapshot 's_test' of resource 'pvc-12aca72c-d94a-4c09-8102-0a6646906f8d' UUID is: 3a07d2fd-6dc3-4994-b13f-8c3a2bb206b8
    SUCCESS:
        Suspended IO of '[pvc-12aca72c-d94a-4c09-8102-0a6646906f8d]' on 'ovbh-vprod-k8s04-worker02' for snapshot
    SUCCESS:
        Suspended IO of '[pvc-12aca72c-d94a-4c09-8102-0a6646906f8d]' on 'original-xostor-server' for snapshot
    SUCCESS:
        Took snapshot of '[pvc-12aca72c-d94a-4c09-8102-0a6646906f8d]' on 'ovbh-vprod-k8s04-worker02'
    SUCCESS:
        Took snapshot of '[pvc-12aca72c-d94a-4c09-8102-0a6646906f8d]' on 'original-xostor-server'
    SUCCESS:
        Resumed IO of '[pvc-12aca72c-d94a-4c09-8102-0a6646906f8d]' on 'ovbh-vprod-k8s04-worker02' after snapshot
    SUCCESS:
        Resumed IO of '[pvc-12aca72c-d94a-4c09-8102-0a6646906f8d]' on 'original-xostor-server' after snapshot

Migration

    [13:53 original-xostor-server ~]# thin_send /dev/linstor_group/pvc-12aca72c-d94a-4c09-8102-0a6646906f8d_00000_s_test 2>/dev/null | ssh root@new-xostor-server-01 thin_recv linstor_group/pvc-12aca72c-d94a-4c09-8102-0a6646906f8d_00000 2>/dev/null

Need to yeet errors (2>/dev/null) on both ends of the command or it will fail.

The setup process is the same for replica-1 and replica-3. For replica-3 you can target new-xostor-server-01 each time; for replica-1, be sure to spread the volumes out across the nodes.
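When doing this for a pile of volumes it is easy to mistype a PV name, so here is a small Python sketch that only builds the four command strings from the migration steps above for a given PV, snapshot name, and size. It does not run anything. The function name `migration_commands` and the "where to run" labels are made up for this example; the `_00000` suffix, `linstor_group`, and `thin_device` defaults are just the values from this post and may differ on another cluster.

```python
def migration_commands(pv, snapshot, size_bytes, src_controller, dst_host,
                       vg="linstor_group", thin_pool="thin_device"):
    """Return (where to run, command) pairs for one volume migration.

    Assumes volume 0 of the resource, i.e. an LV named <pv>_00000, as in the post.
    """
    lv_name = f"{pv}_00000"
    lv_path = f"{vg}/{lv_name}"
    return [
        # Read the exact LV size in bytes on the source node.
        ("source node", f"lvs --noheadings --units B -o lv_size {lv_path}"),
        # Pre-create a thin LV of the same size on the destination node.
        ("destination node",
         f"lvcreate -V {size_bytes}B --thinpool {vg}/{thin_pool} -n {lv_name} {vg}"),
        # Snapshot the resource through the source LINSTOR controller.
        ("any linstor client",
         f"linstor --controller {src_controller} s create {pv} {snapshot}"),
        # Stream the snapshot over ssh; stderr is discarded on both ends.
        ("source node",
         f"thin_send {lv_path}_{snapshot} 2>/dev/null | "
         f"ssh root@{dst_host} thin_recv {lv_path} 2>/dev/null"),
    ]


if __name__ == "__main__":
    steps = migration_commands(
        pv="pvc-6408a214-6def-44c4-8d9a-bebb67be5510",
        snapshot="pgdata-snapshot",
        size_bytes=10741612544,
        src_controller="original-xostor-server",
        dst_host="new-xostor-server-01",
    )
    for where, command in steps:
        print(f"# {where}\n{command}\n")
```

The size still has to come from the `lvs` output on the source node first; the sketch takes it as an argument rather than guessing it.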

Replica-3 Setup

Explanation

thin_send to new-xostor-server-01, then you will need to run commands to force a sync of the data to the replicas.

Commands

    # PV: pvc-96cbebbe-f827-4a47-ae95-38b078e0d584
    # snapshot: snipeit-snapshot
    # size: 21483225088B

    #get size
    lvs --noheadings --units B -o lv_size linstor_group/pvc-96cbebbe-f827-4a47-ae95-38b078e0d584_00000

    #prep
    lvcreate -V 21483225088B --thinpool linstor_group/thin_device -n pvc-96cbebbe-f827-4a47-ae95-38b078e0d584_00000 linstor_group

    #create snapshot
    linstor --controller original-xostor-server s create pvc-96cbebbe-f827-4a47-ae95-38b078e0d584 snipeit-snapshot
    linstor --controller original-xostor-server s l | grep -e 'snipeit-snapshot'

    #send
    thin_send linstor_group/pvc-96cbebbe-f827-4a47-ae95-38b078e0d584_00000_snipeit-snapshot 2>/dev/null | ssh root@new-xostor-server-01 thin_recv linstor_group/pvc-96cbebbe-f827-4a47-ae95-38b078e0d584_00000 2>/dev/null

    #linstor setup
    linstor --controller new-xostor-server-01 resource-definition create pvc-96cbebbe-f827-4a47-ae95-38b078e0d584 --resource-group sc-74e1434b-b435-587e-9dea-fa067deec898
    linstor --controller new-xostor-server-01 volume-definition create pvc-96cbebbe-f827-4a47-ae95-38b078e0d584 21483225088B --storage-pool xcp-sr-linstor_group_thin_device
    linstor --controller new-xostor-server-01 resource create --storage-pool xcp-sr-linstor_group_thin_device --providers LVM_THIN new-xostor-server-01 pvc-96cbebbe-f827-4a47-ae95-38b078e0d584
    linstor --controller new-xostor-server-01 resource create --auto-place +1 pvc-96cbebbe-f827-4a47-ae95-38b078e0d584

    #Run the following on the node with the data. This is the preferred command
    drbdadm invalidate-remote pvc-96cbebbe-f827-4a47-ae95-38b078e0d584

    #Run the following on the node without the data. This is just for reference
    drbdadm invalidate pvc-96cbebbe-f827-4a47-ae95-38b078e0d584

    linstor --controller new-xostor-server-01 r l | grep -e 'pvc-96cbebbe-f827-4a47-ae95-38b078e0d584'

    ---
    apiVersion: v1
    kind: PersistentVolume
    metadata:
      name: pvc-96cbebbe-f827-4a47-ae95-38b078e0d584
      annotations:
        pv.kubernetes.io/provisioned-by: linstor.csi.linbit.com
      finalizers:
        - external-provisioner.volume.kubernetes.io/finalizer
        - kubernetes.io/pv-protection
        - external-attacher/linstor-csi-linbit-com
    spec:
      accessModes:
        - ReadWriteOnce
      capacity:
        storage: 20Gi # Ensure this matches the actual size of the LINSTOR volume
      persistentVolumeReclaimPolicy: Retain
      storageClassName: linstor-replica-three # Adjust to the storage class you want to use
      volumeMode: Filesystem
      csi:
        driver: linstor.csi.linbit.com
        fsType: ext4
        volumeHandle: pvc-96cbebbe-f827-4a47-ae95-38b078e0d584
        volumeAttributes:
          linstor.csi.linbit.com/mount-options: ''
          linstor.csi.linbit.com/post-mount-xfs-opts: ''
          linstor.csi.linbit.com/uses-volume-context: 'true'
          linstor.csi.linbit.com/remote-access-policy: 'true'
    ---
    apiVersion: v1
    kind: PersistentVolumeClaim
    metadata:
      annotations:
        pv.kubernetes.io/bind-completed: 'yes'
        pv.kubernetes.io/bound-by-controller: 'yes'
        volume.beta.kubernetes.io/storage-provisioner: linstor.csi.linbit.com
        volume.kubernetes.io/storage-provisioner: linstor.csi.linbit.com
      finalizers:
        - kubernetes.io/pvc-protection
      name: pp-snipeit-pvc
      namespace: snipe-it
    spec:
      accessModes:
        - ReadWriteOnce
      resources:
        requests:
          storage: 20Gi
      storageClassName: linstor-replica-three
      volumeMode: Filesystem
      volumeName: pvc-96cbebbe-f827-4a47-ae95-38b078e0d584

Walk-through

jonathon@jonathon-framework:~$ linstor --controller new-xostor-server-01 resource-definition create pvc-96cbebbe-f827-4a47-ae95-38b078e0d584 --resource-group sc-74e1434b-b435-587e-9dea-fa067deec898
SUCCESS:
Description:
    New resource definition 'pvc-96cbebbe-f827-4a47-ae95-38b078e0d584' created.
Details:
    Resource definition 'pvc-96cbebbe-f827-4a47-ae95-38b078e0d584' UUID is: 772692e2-3fca-4069-92e9-2bef22c68a6f

jonathon@jonathon-framework:~$ linstor --controller new-xostor-server-01 volume-definition create pvc-96cbebbe-f827-4a47-ae95-38b078e0d584 21483225088B --storage-pool xcp-sr-linstor_group_thin_device
SUCCESS: Successfully set property key(s): StorPoolName
SUCCESS: New volume definition with number '0' of resource definition 'pvc-96cbebbe-f827-4a47-ae95-38b078e0d584' created.

jonathon@jonathon-framework:~$ linstor --controller new-xostor-server-01 resource create --storage-pool xcp-sr-linstor_group_thin_device --providers LVM_THIN new-xostor-server-01 pvc-96cbebbe-f827-4a47-ae95-38b078e0d584
SUCCESS: Successfully set property key(s): StorPoolName
INFO: Updated pvc-96cbebbe-f827-4a47-ae95-38b078e0d584 DRBD auto verify algorithm to 'crct10dif-pclmul'
SUCCESS:
Description:
    New resource 'pvc-96cbebbe-f827-4a47-ae95-38b078e0d584' on node 'new-xostor-server-01' registered.
Details:
    Resource 'pvc-96cbebbe-f827-4a47-ae95-38b078e0d584' on node 'new-xostor-server-01' UUID is: 3072aaae-4a34-453e-bdc6-facb47809b3d
SUCCESS:
Description:
    Volume with number '0' on resource 'pvc-96cbebbe-f827-4a47-ae95-38b078e0d584' on node 'new-xostor-server-01' successfully registered
Details:
    Volume UUID is: 52b11ef6-ec50-42fb-8710-1d3f8c15c657
SUCCESS: Created resource 'pvc-96cbebbe-f827-4a47-ae95-38b078e0d584' on 'new-xostor-server-01'

jonathon@jonathon-framework:~$ linstor --controller new-xostor-server-01 resource create --auto-place +1 pvc-96cbebbe-f827-4a47-ae95-38b078e0d584
SUCCESS: Successfully set property key(s): StorPoolName
SUCCESS: Successfully set property key(s): StorPoolName
SUCCESS:
Description:
    Resource 'pvc-96cbebbe-f827-4a47-ae95-38b078e0d584' successfully autoplaced on 2 nodes
Details:
    Used nodes (storage pool name): 'new-xostor-server-02 (xcp-sr-linstor_group_thin_device)', 'new-xostor-server-03 (xcp-sr-linstor_group_thin_device)'
INFO: Resource-definition property 'DrbdOptions/Resource/quorum' updated from 'off' to 'majority' by auto-quorum
INFO: Resource-definition property 'DrbdOptions/Resource/on-no-quorum' updated from 'off' to 'suspend-io' by auto-quorum
SUCCESS: Added peer(s) 'new-xostor-server-02', 'new-xostor-server-03' to resource 'pvc-96cbebbe-f827-4a47-ae95-38b078e0d584' on 'new-xostor-server-01'
SUCCESS: Created resource 'pvc-96cbebbe-f827-4a47-ae95-38b078e0d584' on 'new-xostor-server-02'
SUCCESS: Created resource 'pvc-96cbebbe-f827-4a47-ae95-38b078e0d584' on 'new-xostor-server-03'
SUCCESS:
Description:
    Resource 'pvc-96cbebbe-f827-4a47-ae95-38b078e0d584' on 'new-xostor-server-03' ready
Details:
    Auto-placing resource: pvc-96cbebbe-f827-4a47-ae95-38b078e0d584
SUCCESS:
Description:
    Resource 'pvc-96cbebbe-f827-4a47-ae95-38b078e0d584' on 'new-xostor-server-02' ready
Details:
    Auto-placing resource: pvc-96cbebbe-f827-4a47-ae95-38b078e0d584

At this point

jonathon@jonathon-framework:~$ linstor --controller new-xostor-server-01 v l | grep -e 'pvc-96cbebbe-f827-4a47-ae95-38b078e0d584'
| new-xostor-server-01 | pvc-96cbebbe-f827-4a47-ae95-38b078e0d584 | xcp-sr-linstor_group_thin_device | 0 | 1032 | /dev/drbd1032 | 9.20 GiB | Unused | UpToDate |
| new-xostor-server-02 | pvc-96cbebbe-f827-4a47-ae95-38b078e0d584 | xcp-sr-linstor_group_thin_device | 0 | 1032 | /dev/drbd1032 | 112.73 MiB | Unused | UpToDate |
| new-xostor-server-03 | pvc-96cbebbe-f827-4a47-ae95-38b078e0d584 | xcp-sr-linstor_group_thin_device | 0 | 1032 | /dev/drbd1032 | 112.73 MiB | Unused | UpToDate |

All three report UpToDate, but only new-xostor-server-01 actually holds the data (9.20 GiB allocated vs 112.73 MiB on the other two). To force the sync, run the following command on the node with the data

drbdadm invalidate-remote pvc-96cbebbe-f827-4a47-ae95-38b078e0d584

This will kick it to get the data re-synced.

[14:51 new-xostor-server-01 ~]# drbdadm invalidate-remote pvc-96cbebbe-f827-4a47-ae95-38b078e0d584
[14:51 new-xostor-server-01 ~]# drbdadm status pvc-96cbebbe-f827-4a47-ae95-38b078e0d584
pvc-96cbebbe-f827-4a47-ae95-38b078e0d584 role:Secondary
  disk:UpToDate
  new-xostor-server-02 role:Secondary
    replication:SyncSource peer-disk:Inconsistent done:1.14
  new-xostor-server-03 role:Secondary
    replication:SyncSource peer-disk:Inconsistent done:1.18

[14:51 new-xostor-server-01 ~]# drbdadm status pvc-96cbebbe-f827-4a47-ae95-38b078e0d584
pvc-96cbebbe-f827-4a47-ae95-38b078e0d584 role:Secondary
  disk:UpToDate
  new-xostor-server-02 role:Secondary
    peer-disk:UpToDate
  new-xostor-server-03 role:Secondary
    peer-disk:UpToDate

See: https://github.com/LINBIT/linstor-server/issues/389
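Rather than re-running drbdadm status by hand until the peers catch up, the check can be scripted. A rough sketch that only looks for the strings visible in the status output above (Inconsistent, replication:Sync..., peer-disk:UpToDate); drbd_synced is a hypothetical helper name and other DRBD states are not handled.

```shell
# Sketch: read `drbdadm status <resource>` output on stdin and succeed only
# when no peer is still syncing. Matching is based on the sample output in
# this post, nothing more.
drbd_synced() {
  out=$(cat)
  # An Inconsistent disk or a replication:Sync* state means the sync is still running
  if printf '%s\n' "$out" | grep -Eq 'Inconsistent|replication:Sync'; then
    return 1
  fi
  # Require at least one peer reported as UpToDate
  printf '%s\n' "$out" | grep -q 'peer-disk:UpToDate'
}

# Example use on the node holding the data (the 5 second interval is arbitrary):
# until drbdadm status "$res" | drbd_synced; do sleep 5; done
```

It only reads text, so it can be tried against saved status output before pointing it at a live resource.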

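On the capacity line in the manifests: the LV size from lvs (21483225088B) is a bit more than the 20Gi written in the PV. A minimal sketch of the arithmetic, assuming the manifest carries the whole-GiB part as it does in this post; the helper names are made up for illustration.

```shell
# Sketch: split a byte count (as printed by `lvs --units B`) into whole GiB
# and the leftover bytes. A trailing "B" is stripped if present.
bytes_to_gib() {
  bytes=${1%B}
  echo $(( bytes / 1073741824 ))
}

bytes_over_gib() {
  bytes=${1%B}
  echo $(( bytes % 1073741824 ))
}

bytes_to_gib 21483225088B     # prints 20
bytes_over_gib 21483225088B   # prints 8388608 (8 MiB)
```

The same split on the replica-1 volume (10741612544B) gives 10 GiB plus 4 MiB.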
Replica-1 Setup

# PV: pvc-6408a214-6def-44c4-8d9a-bebb67be5510
# snapshot: pgdata-snapshot
# size: 10741612544B

#get size
lvs --noheadings --units B -o lv_size linstor_group/pvc-6408a214-6def-44c4-8d9a-bebb67be5510_00000

#prep
lvcreate -V 10741612544B --thinpool linstor_group/thin_device -n pvc-6408a214-6def-44c4-8d9a-bebb67be5510_00000 linstor_group

#create snapshot
linstor --controller original-xostor-server s create pvc-6408a214-6def-44c4-8d9a-bebb67be5510 pgdata-snapshot

#send
thin_send linstor_group/pvc-6408a214-6def-44c4-8d9a-bebb67be5510_00000_pgdata-snapshot 2>/dev/null | ssh root@new-xostor-server-01 thin_recv linstor_group/pvc-6408a214-6def-44c4-8d9a-bebb67be5510_00000 2>/dev/null

# 1
linstor --controller new-xostor-server-01 resource-definition create pvc-6408a214-6def-44c4-8d9a-bebb67be5510 --resource-group sc-b066e430-6206-5588-a490-cc91ecef53d6
linstor --controller new-xostor-server-01 volume-definition create pvc-6408a214-6def-44c4-8d9a-bebb67be5510 10741612544B --storage-pool xcp-sr-linstor_group_thin_device
linstor --controller new-xostor-server-01 resource create new-xostor-server-01 pvc-6408a214-6def-44c4-8d9a-bebb67be5510

---
apiVersion: v1
kind: PersistentVolume
metadata:
  name: pvc-6408a214-6def-44c4-8d9a-bebb67be5510
  annotations:
    pv.kubernetes.io/provisioned-by: linstor.csi.linbit.com
  finalizers:
    - external-provisioner.volume.kubernetes.io/finalizer
    - kubernetes.io/pv-protection
    - external-attacher/linstor-csi-linbit-com
spec:
  accessModes:
    - ReadWriteOnce
  capacity:
    storage: 10Gi # Ensure this matches the actual size of the LINSTOR volume
  persistentVolumeReclaimPolicy: Retain
  storageClassName: linstor-replica-one-local # Adjust to the storage class you want to use
  volumeMode: Filesystem
  csi:
    driver: linstor.csi.linbit.com
    fsType: ext4
    volumeHandle: pvc-6408a214-6def-44c4-8d9a-bebb67be5510
    volumeAttributes:
      linstor.csi.linbit.com/mount-options: ''
      linstor.csi.linbit.com/post-mount-xfs-opts: ''
      linstor.csi.linbit.com/uses-volume-context: 'true'
      linstor.csi.linbit.com/remote-access-policy: |
        - fromSame:
          - xcp-ng/node
  nodeAffinity:
    required:
      nodeSelectorTerms:
        - matchExpressions:
            - key: xcp-ng/node
              operator: In
              values:
                - new-xostor-server-01
---
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  annotations:
    pv.kubernetes.io/bind-completed: 'yes'
    pv.kubernetes.io/bound-by-controller: 'yes'
    volume.beta.kubernetes.io/storage-provisioner: linstor.csi.linbit.com
    volume.kubernetes.io/selected-node: ovbh-vtest-k8s01-worker01
    volume.kubernetes.io/storage-provisioner: linstor.csi.linbit.com
  finalizers:
    - kubernetes.io/pvc-protection
  name: acid-merch-2
  namespace: default
spec:
  accessModes:
    - ReadWriteOnce
  resources:
    requests:
      storage: 10Gi
  storageClassName: linstor-replica-one-local
  volumeMode: Filesystem
  volumeName: pvc-6408a214-6def-44c4-8d9a-bebb67be5510

-

RE: XOSTOR hyperconvergence preview

The reason it may be socat is that the commands fail when I try using it as instructed by https://github.com/LINBIT/thin-send-recv

[13:03 ovbh-pprod-xen11 ~]# thin_send linstor_group/pvc-12aca72c-d94a-4c09-8102-0a6646906f8d_00000_s_test 2>/dev/null | zstd | socat STDIN TCP:10.2.0.10:4321
2024/10/28 13:04:59 socat[25701] E write(5, 0x55da36101da0, 8192): Broken pipe
...
[13:03 ovbh-pprod-xen01 ~]# socat TCP-LISTEN:4321 STDOUT | zstd -d | thin_recv linstor_group/pvc-12aca72c-d94a-4c09-8102-0a6646906f8d_00000 2>/dev/null
2024/10/28 13:04:59 socat[27039] E read(1, 0x560ef6ff4350, 8192): Bad file descriptor

And the same thing happens if I exclude zstd from both commands.

-

RE: XOSTOR hyperconvergence preview

So, did more testing. Looks like thin_send_recv is not the problem, but maybe socat.

I am able to manually migrate resources between XOSTOR (linstor) clusters using thin_send_recv. I have included all steps below so that it can be replicated.

And we know socat is used, because it complains if it is not there.

jonathon@jonathon-framework:~$ linstor --controller 10.2.0.19 backup ship newCluster pvc-086a5817-d813-41fe-86d8-3fac2ae2028f pvc-086a5817-d813-41fe-86d8-3fac2ae2028f
INFO: Cannot use node 'ovbh-pprod-xen10' as it does not support the tool(s): SOCAT
INFO: Cannot use node 'ovbh-pprod-xen12' as it does not support the tool(s): SOCAT
INFO: Cannot use node 'ovbh-pprod-xen13' as it does not support the tool(s): SOCAT
ERROR: Backup shipping of resource 'pvc-086a5817-d813-41fe-86d8-3fac2ae2028f' cannot be started since there is no node available that supports backup shipping.

Using 1.0.1 thin_send_recv.

[16:16 ovbh-pprod-xen11 ~]# thin_send --version
1.0.1
[16:16 ovbh-pprod-xen01 ~]# thin_recv --version
1.0.1

Versions of socat match.

[16:16 ovbh-pprod-xen11 ~]# socat -V
socat by Gerhard Rieger and contributors - see www.dest-unreach.org
socat version 1.7.3.2 on Aug 4 2017 04:57:10
running on Linux version #1 SMP Tue Jan 23 14:12:55 CET 2024, release 4.19.0+1, machine x86_64
features:
#define WITH_STDIO 1
#define WITH_FDNUM 1
#define WITH_FILE 1
#define WITH_CREAT 1
#define WITH_GOPEN 1
#define WITH_TERMIOS 1
#define WITH_PIPE 1
#define WITH_UNIX 1
#define WITH_ABSTRACT_UNIXSOCKET 1
#define WITH_IP4 1
#define WITH_IP6 1
#define WITH_RAWIP 1
#define WITH_GENERICSOCKET 1
#define WITH_INTERFACE 1
#define WITH_TCP 1
#define WITH_UDP 1
#define WITH_SCTP 1
#define WITH_LISTEN 1
#define WITH_SOCKS4 1
#define WITH_SOCKS4A 1
#define WITH_PROXY 1
#define WITH_SYSTEM 1
#define WITH_EXEC 1
#define WITH_READLINE 1
#define WITH_TUN 1
#define WITH_PTY 1
#define WITH_OPENSSL 1
#undef WITH_FIPS
#define WITH_LIBWRAP 1
#define WITH_SYCLS 1
#define WITH_FILAN 1
#define WITH_RETRY 1
#define WITH_MSGLEVEL 0 /*debug*/
...
[16:17 ovbh-pprod-xen01 ~]# socat -V
socat by Gerhard Rieger and contributors - see www.dest-unreach.org
socat version 1.7.3.2 on Aug 4 2017 04:57:10
running on Linux version #1 SMP Tue Jan 23 14:12:55 CET 2024, release 4.19.0+1, machine x86_64
features:
#define WITH_STDIO 1
#define WITH_FDNUM 1
#define WITH_FILE 1
#define WITH_CREAT 1
#define WITH_GOPEN 1
#define WITH_TERMIOS 1
#define WITH_PIPE 1
#define WITH_UNIX 1
#define WITH_ABSTRACT_UNIXSOCKET 1
#define WITH_IP4 1
#define WITH_IP6 1
#define WITH_RAWIP 1
#define WITH_GENERICSOCKET 1
#define WITH_INTERFACE 1
#define WITH_TCP 1
#define WITH_UDP 1
#define WITH_SCTP 1
#define WITH_LISTEN 1
#define WITH_SOCKS4 1
#define WITH_SOCKS4A 1
#define WITH_PROXY 1
#define WITH_SYSTEM 1
#define WITH_EXEC 1
#define WITH_READLINE 1
#define WITH_TUN 1
#define WITH_PTY 1
#define WITH_OPENSSL 1
#undef WITH_FIPS
#define WITH_LIBWRAP 1
#define WITH_SYCLS 1
#define WITH_FILAN 1
#define WITH_RETRY 1
#define WITH_MSGLEVEL 0 /*debug*/

Migrating using only thin_send_recv works.
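Since linstor refused the nodes that had no socat, a quick pre-check on each node can save a failed attempt. A minimal sketch, assuming the tool list (thin_send, thin_recv, socat, zstd) taken from the commands in this thread; missing_tools is a hypothetical helper name, not something linstor provides.

```shell
# Sketch: print the name of every listed tool that is not on PATH on this host.
# Prints nothing when all of them are present.
missing_tools() {
  for tool in "$@"; do
    command -v "$tool" >/dev/null 2>&1 || printf '%s\n' "$tool"
  done
}

# Tool list is an assumption based on the commands used in this thread
missing_tools thin_send thin_recv socat zstd
```

Run it on each node of both clusters; any name it prints is a tool that node is missing.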