@julien-f Hi Julien,

I updated, recreated the backup job and now it works again. Thank you!

At least I think so. I worked with svn for years but that was ages ago and I never got into using git.

root@xo:/opt/xen-orchestra# git rev-parse master

de288a008d83b81ea93d7f45889972028ff9ed6c

root@xo:/opt/xen-orchestra# git branch

* master

root@xo:/opt/xen-orchestra# git fetch --dry-run

remote: Enumerating objects: 5, done.

remote: Counting objects: 100% (5/5), done.

remote: Compressing objects: 100% (2/2), done.

remote: Total 5 (delta 3), reused 5 (delta 3), pack-reused 0

Unpacking objects: 100% (5/5), done.

From https://github.com/vatesfr/xen-orchestra

e2515e531..52c4df4e4 ya_host_update_rev -> origin/ya_host_update_rev
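
For anyone else unsure how to read that `git fetch --dry-run` output, here is a minimal sketch that pulls out the ref-update lines and checks whether `origin/master` is among them. The function name `updated_refs` is made up for illustration, and the regex only covers plain update lines like the one above (not new branches or tags). It only says whether a fetch would move `origin/master`; whether the local `master` matches it still has to be checked separately, for example with `git status` or `git rev-parse master origin/master`.

```python
import re

# Matches ref-update lines such as
#   "e2515e531..52c4df4e4 ya_host_update_rev -> origin/ya_host_update_rev"
# (an optional leading "+" marks a forced update, which uses three dots).
REF_UPDATE = re.compile(
    r"^\s*\+?\s*(?P<old>[0-9a-f]+)\.{2,3}(?P<new>[0-9a-f]+)"
    r"\s+(?P<src>\S+)\s+->\s+(?P<dst>\S+)\s*$"
)


def updated_refs(fetch_output):
    """Return {remote-tracking ref: (old, new)} for every update line found."""
    refs = {}
    for line in fetch_output.splitlines():
        match = REF_UPDATE.match(line)
        if match:
            refs[match.group("dst")] = (match.group("old"), match.group("new"))
    return refs


# Output copied from the post above.
DRY_RUN_OUTPUT = """\
remote: Enumerating objects: 5, done.
remote: Counting objects: 100% (5/5), done.
remote: Compressing objects: 100% (2/2), done.
remote: Total 5 (delta 3), reused 5 (delta 3), pack-reused 0
Unpacking objects: 100% (5/5), done.
From https://github.com/vatesfr/xen-orchestra
e2515e531..52c4df4e4 ya_host_update_rev -> origin/ya_host_update_rev
"""

if __name__ == "__main__":
    refs = updated_refs(DRY_RUN_OUTPUT)
    print("refs a fetch would update:", sorted(refs))
    print("origin/master would change:", "origin/master" in refs)
```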

@olivierlambert Hi Olivier,

I updated both instances, recreated the jobs and while backups on one instance now work again, on the other they still fail:

{

"data": {

"mode": "delta",

"reportWhen": "failure"

},

"id": "1617829260018",

"jobId": "72cb10c2-9949-4b9c-9618-33a2de5b0f79",

"jobName": "customer-weekdays",

"message": "backup",

"scheduleId": "8333dbe9-6525-4f4f-a2da-cc6304254e96",

"start": 1617829260018,

"status": "failure",

"infos": [

{

"data": {

"vms": [

"adbe367b-e33e-66f7-863c-e21ea8fa301a",

"3837691a-c7c2-3ba9-143f-8afd0eb14f94",

"cbee81b4-afb8-2773-a92a-dd4b3f83651f",

"16dd84d4-2455-c293-2529-6311a98ecd46",

"03037a27-bc51-7cfc-ad64-4146992fc95a",

"324aa303-4404-20c1-c8f7-686ace47fd3f",

"5544f33e-5fd9-12e1-ee65-f5849bd7f442",

"d649555a-c134-fe17-aa68-d49b5ba50edf"

]

},

"message": "vms"

}

],

"tasks": [

{

"data": {

"type": "VM",

"id": "adbe367b-e33e-66f7-863c-e21ea8fa301a"

},

"id": "1617829261498:0",

"message": "backup VM",

"start": 1617829261498,

"status": "failure",

"tasks": [

{

"id": "1617829282666",

"message": "snapshot",

"start": 1617829282666,

"status": "success",

"end": 1617829289768,

"result": "4815149b-0fb3-8cf3-8ec0-e32d5f3432fc"

},

{

"id": "1617829289768:0",

"message": "merge",

"start": 1617829289768,

"status": "failure",

"end": 1617829289771,

"result": {

"message": "Cannot read property 'length' of undefined",

"name": "TypeError",

"stack": "TypeError: Cannot read property 'length' of undefined\n at /opt/xen-orchestra/@xen-orchestra/backups/_DeltaBackupWriter.js:114:31\n at Zone.run (/opt/xen-orchestra/node_modules/node-zone/index.js:80:23)\n at /opt/xen-orchestra/node_modules/node-zone/index.js:89:19\n at Function.fromFunction (/opt/xen-orchestra/@xen-orchestra/backups/_syncThenable.js:20:27)\n at SyncThenable.fulfilledThen [as then] (/opt/xen-orchestra/@xen-orchestra/backups/_syncThenable.js:2:50)\n at TaskLogger.run (/opt/xen-orchestra/@xen-orchestra/backups/Task.js:77:10)\n at Object.run (/opt/xen-orchestra/@xen-orchestra/backups/Task.js:157:17)\n at DeltaBackupWriter._deleteOldEntries (/opt/xen-orchestra/@xen-orchestra/backups/_DeltaBackupWriter.js:108:17)\n at Array.<anonymous> (/opt/xen-orchestra/@xen-orchestra/backups/_VmBackup.js:150:70)\n at Function.from (<anonymous>)"

}

}

],

"end": 1617829291965,

"result": {

"message": "Cannot read property 'length' of undefined",

"name": "TypeError",

"stack": "TypeError: Cannot read property 'length' of undefined\n at /opt/xen-orchestra/@xen-orchestra/backups/_DeltaBackupWriter.js:114:31\n at Zone.run (/opt/xen-orchestra/node_modules/node-zone/index.js:80:23)\n at /opt/xen-orchestra/node_modules/node-zone/index.js:89:19\n at Function.fromFunction (/opt/xen-orchestra/@xen-orchestra/backups/_syncThenable.js:20:27)\n at SyncThenable.fulfilledThen [as then] (/opt/xen-orchestra/@xen-orchestra/backups/_syncThenable.js:2:50)\n at TaskLogger.run (/opt/xen-orchestra/@xen-orchestra/backups/Task.js:77:10)\n at Object.run (/opt/xen-orchestra/@xen-orchestra/backups/Task.js:157:17)\n at DeltaBackupWriter._deleteOldEntries (/opt/xen-orchestra/@xen-orchestra/backups/_DeltaBackupWriter.js:108:17)\n at Array.<anonymous> (/opt/xen-orchestra/@xen-orchestra/backups/_VmBackup.js:150:70)\n at Function.from (<anonymous>)"

}

},

{

"data": {

"type": "VM",

"id": "3837691a-c7c2-3ba9-143f-8afd0eb14f94"

},

"id": "1617829291966",

"message": "backup VM",

"start": 1617829291966,

"status": "failure",

"tasks": [

{

"id": "1617829292001",

"message": "snapshot",

"start": 1617829292001,

"status": "success",

"end": 1617829299739,

"result": "e095c928-92c3-c085-1ea0-70584e5300e1"

},

{

"id": "1617829299739:0",

"message": "merge",

"start": 1617829299739,

"status": "failure",

"end": 1617829299739,

"result": {

"message": "Cannot read property 'length' of undefined",

"name": "TypeError",

"stack": "TypeError: Cannot read property 'length' of undefined\n at /opt/xen-orchestra/@xen-orchestra/backups/_DeltaBackupWriter.js:114:31\n at Zone.run (/opt/xen-orchestra/node_modules/node-zone/index.js:80:23)\n at /opt/xen-orchestra/node_modules/node-zone/index.js:89:19\n at Function.fromFunction (/opt/xen-orchestra/@xen-orchestra/backups/_syncThenable.js:20:27)\n at SyncThenable.fulfilledThen [as then] (/opt/xen-orchestra/@xen-orchestra/backups/_syncThenable.js:2:50)\n at TaskLogger.run (/opt/xen-orchestra/@xen-orchestra/backups/Task.js:77:10)\n at Object.run (/opt/xen-orchestra/@xen-orchestra/backups/Task.js:157:17)\n at DeltaBackupWriter._deleteOldEntries (/opt/xen-orchestra/@xen-orchestra/backups/_DeltaBackupWriter.js:108:17)\n at Array.<anonymous> (/opt/xen-orchestra/@xen-orchestra/backups/_VmBackup.js:150:70)\n at Function.from (<anonymous>)"

}

}

],

"end": 1617829301116,

"result": {

"message": "Cannot read property 'length' of undefined",

"name": "TypeError",

"stack": "TypeError: Cannot read property 'length' of undefined\n at /opt/xen-orchestra/@xen-orchestra/backups/_DeltaBackupWriter.js:114:31\n at Zone.run (/opt/xen-orchestra/node_modules/node-zone/index.js:80:23)\n at /opt/xen-orchestra/node_modules/node-zone/index.js:89:19\n at Function.fromFunction (/opt/xen-orchestra/@xen-orchestra/backups/_syncThenable.js:20:27)\n at SyncThenable.fulfilledThen [as then] (/opt/xen-orchestra/@xen-orchestra/backups/_syncThenable.js:2:50)\n at TaskLogger.run (/opt/xen-orchestra/@xen-orchestra/backups/Task.js:77:10)\n at Object.run (/opt/xen-orchestra/@xen-orchestra/backups/Task.js:157:17)\n at DeltaBackupWriter._deleteOldEntries (/opt/xen-orchestra/@xen-orchestra/backups/_DeltaBackupWriter.js:108:17)\n at Array.<anonymous> (/opt/xen-orchestra/@xen-orchestra/backups/_VmBackup.js:150:70)\n at Function.from (<anonymous>)"

}

},

{

"data": {

"type": "VM",

"id": "cbee81b4-afb8-2773-a92a-dd4b3f83651f"

},

"id": "1617829301116:0",

"message": "backup VM",

"start": 1617829301116,

"status": "failure",

"tasks": [

{

"id": "1617829301149",

"message": "snapshot",

"start": 1617829301149,

"status": "success",

"end": 1617829309295,

"result": "b34ab48b-4077-0ad8-ea82-8c7233c82db6"

},

{

"id": "1617829309296",

"message": "merge",

"start": 1617829309296,

"status": "failure",

"end": 1617829309296,

"result": {

"message": "Cannot read property 'length' of undefined",

"name": "TypeError",

"stack": "TypeError: Cannot read property 'length' of undefined\n at /opt/xen-orchestra/@xen-orchestra/backups/_DeltaBackupWriter.js:114:31\n at Zone.run (/opt/xen-orchestra/node_modules/node-zone/index.js:80:23)\n at /opt/xen-orchestra/node_modules/node-zone/index.js:89:19\n at Function.fromFunction (/opt/xen-orchestra/@xen-orchestra/backups/_syncThenable.js:20:27)\n at SyncThenable.fulfilledThen [as then] (/opt/xen-orchestra/@xen-orchestra/backups/_syncThenable.js:2:50)\n at TaskLogger.run (/opt/xen-orchestra/@xen-orchestra/backups/Task.js:77:10)\n at Object.run (/opt/xen-orchestra/@xen-orchestra/backups/Task.js:157:17)\n at DeltaBackupWriter._deleteOldEntries (/opt/xen-orchestra/@xen-orchestra/backups/_DeltaBackupWriter.js:108:17)\n at Array.<anonymous> (/opt/xen-orchestra/@xen-orchestra/backups/_VmBackup.js:150:70)\n at Function.from (<anonymous>)"

}

}

],

"end": 1617829320279,

"result": {

"message": "Cannot read property 'length' of undefined",

"name": "TypeError",

"stack": "TypeError: Cannot read property 'length' of undefined\n at /opt/xen-orchestra/@xen-orchestra/backups/_DeltaBackupWriter.js:114:31\n at Zone.run (/opt/xen-orchestra/node_modules/node-zone/index.js:80:23)\n at /opt/xen-orchestra/node_modules/node-zone/index.js:89:19\n at Function.fromFunction (/opt/xen-orchestra/@xen-orchestra/backups/_syncThenable.js:20:27)\n at SyncThenable.fulfilledThen [as then] (/opt/xen-orchestra/@xen-orchestra/backups/_syncThenable.js:2:50)\n at TaskLogger.run (/opt/xen-orchestra/@xen-orchestra/backups/Task.js:77:10)\n at Object.run (/opt/xen-orchestra/@xen-orchestra/backups/Task.js:157:17)\n at DeltaBackupWriter._deleteOldEntries (/opt/xen-orchestra/@xen-orchestra/backups/_DeltaBackupWriter.js:108:17)\n at Array.<anonymous> (/opt/xen-orchestra/@xen-orchestra/backups/_VmBackup.js:150:70)\n at Function.from (<anonymous>)"

}

},

{

"data": {

"type": "VM",

"id": "16dd84d4-2455-c293-2529-6311a98ecd46"

},

"id": "1617829320279:0",

"message": "backup VM",

"start": 1617829320279,

"status": "failure",

"tasks": [

{

"id": "1617829320312",

"message": "snapshot",

"start": 1617829320312,

"status": "success",

"end": 1617829333295,

"result": "1596e20b-e061-df4c-46d3-b2d5cce00094"

},

{

"id": "1617829333295:0",

"message": "merge",

"start": 1617829333295,

"status": "failure",

"end": 1617829333295,

"result": {

"message": "Cannot read property 'length' of undefined",

"name": "TypeError",

"stack": "TypeError: Cannot read property 'length' of undefined\n at /opt/xen-orchestra/@xen-orchestra/backups/_DeltaBackupWriter.js:114:31\n at Zone.run (/opt/xen-orchestra/node_modules/node-zone/index.js:80:23)\n at /opt/xen-orchestra/node_modules/node-zone/index.js:89:19\n at Function.fromFunction (/opt/xen-orchestra/@xen-orchestra/backups/_syncThenable.js:20:27)\n at SyncThenable.fulfilledThen [as then] (/opt/xen-orchestra/@xen-orchestra/backups/_syncThenable.js:2:50)\n at TaskLogger.run (/opt/xen-orchestra/@xen-orchestra/backups/Task.js:77:10)\n at Object.run (/opt/xen-orchestra/@xen-orchestra/backups/Task.js:157:17)\n at DeltaBackupWriter._deleteOldEntries (/opt/xen-orchestra/@xen-orchestra/backups/_DeltaBackupWriter.js:108:17)\n at Array.<anonymous> (/opt/xen-orchestra/@xen-orchestra/backups/_VmBackup.js:150:70)\n at Function.from (<anonymous>)"

}

}

],

"end": 1617829339414,

"result": {

"message": "Cannot read property 'length' of undefined",

"name": "TypeError",

"stack": "TypeError: Cannot read property 'length' of undefined\n at /opt/xen-orchestra/@xen-orchestra/backups/_DeltaBackupWriter.js:114:31\n at Zone.run (/opt/xen-orchestra/node_modules/node-zone/index.js:80:23)\n at /opt/xen-orchestra/node_modules/node-zone/index.js:89:19\n at Function.fromFunction (/opt/xen-orchestra/@xen-orchestra/backups/_syncThenable.js:20:27)\n at SyncThenable.fulfilledThen [as then] (/opt/xen-orchestra/@xen-orchestra/backups/_syncThenable.js:2:50)\n at TaskLogger.run (/opt/xen-orchestra/@xen-orchestra/backups/Task.js:77:10)\n at Object.run (/opt/xen-orchestra/@xen-orchestra/backups/Task.js:157:17)\n at DeltaBackupWriter._deleteOldEntries (/opt/xen-orchestra/@xen-orchestra/backups/_DeltaBackupWriter.js:108:17)\n at Array.<anonymous> (/opt/xen-orchestra/@xen-orchestra/backups/_VmBackup.js:150:70)\n at Function.from (<anonymous>)"

}

},

{

"data": {

"type": "VM",

"id": "03037a27-bc51-7cfc-ad64-4146992fc95a"

},

"id": "1617829339415",

"message": "backup VM",

"start": 1617829339415,

"status": "failure",

"tasks": [

{

"id": "1617829339440",

"message": "snapshot",

"start": 1617829339440,

"status": "success",

"end": 1617829343670,

"result": "65a5a558-c30a-d0d8-5d02-57056bf6f7fc"

},

{

"id": "1617829343671",

"message": "merge",

"start": 1617829343671,

"status": "failure",

"end": 1617829343671,

"result": {

"message": "Cannot read property 'length' of undefined",

"name": "TypeError",

"stack": "TypeError: Cannot read property 'length' of undefined\n at /opt/xen-orchestra/@xen-orchestra/backups/_DeltaBackupWriter.js:114:31\n at Zone.run (/opt/xen-orchestra/node_modules/node-zone/index.js:80:23)\n at /opt/xen-orchestra/node_modules/node-zone/index.js:89:19\n at Function.fromFunction (/opt/xen-orchestra/@xen-orchestra/backups/_syncThenable.js:20:27)\n at SyncThenable.fulfilledThen [as then] (/opt/xen-orchestra/@xen-orchestra/backups/_syncThenable.js:2:50)\n at TaskLogger.run (/opt/xen-orchestra/@xen-orchestra/backups/Task.js:77:10)\n at Object.run (/opt/xen-orchestra/@xen-orchestra/backups/Task.js:157:17)\n at DeltaBackupWriter._deleteOldEntries (/opt/xen-orchestra/@xen-orchestra/backups/_DeltaBackupWriter.js:108:17)\n at Array.<anonymous> (/opt/xen-orchestra/@xen-orchestra/backups/_VmBackup.js:150:70)\n at Function.from (<anonymous>)"

}

}

],

"end": 1617829344953,

"result": {

"message": "Cannot read property 'length' of undefined",

"name": "TypeError",

"stack": "TypeError: Cannot read property 'length' of undefined\n at /opt/xen-orchestra/@xen-orchestra/backups/_DeltaBackupWriter.js:114:31\n at Zone.run (/opt/xen-orchestra/node_modules/node-zone/index.js:80:23)\n at /opt/xen-orchestra/node_modules/node-zone/index.js:89:19\n at Function.fromFunction (/opt/xen-orchestra/@xen-orchestra/backups/_syncThenable.js:20:27)\n at SyncThenable.fulfilledThen [as then] (/opt/xen-orchestra/@xen-orchestra/backups/_syncThenable.js:2:50)\n at TaskLogger.run (/opt/xen-orchestra/@xen-orchestra/backups/Task.js:77:10)\n at Object.run (/opt/xen-orchestra/@xen-orchestra/backups/Task.js:157:17)\n at DeltaBackupWriter._deleteOldEntries (/opt/xen-orchestra/@xen-orchestra/backups/_DeltaBackupWriter.js:108:17)\n at Array.<anonymous> (/opt/xen-orchestra/@xen-orchestra/backups/_VmBackup.js:150:70)\n at Function.from (<anonymous>)"

}

},

{

"data": {

"type": "VM",

"id": "324aa303-4404-20c1-c8f7-686ace47fd3f"

},

"id": "1617829344954",

"message": "backup VM",

"start": 1617829344954,

"status": "failure",

"tasks": [

{

"id": "1617829344986",

"message": "snapshot",

"start": 1617829344986,

"status": "success",

"end": 1617829352405,

"result": "a0e9ca62-3e76-b6d1-cb0c-a3f0e602aded"

},

{

"id": "1617829352405:0",

"message": "merge",

"start": 1617829352405,

"status": "failure",

"end": 1617829352405,

"result": {

"message": "Cannot read property 'length' of undefined",

"name": "TypeError",

"stack": "TypeError: Cannot read property 'length' of undefined\n at /opt/xen-orchestra/@xen-orchestra/backups/_DeltaBackupWriter.js:114:31\n at Zone.run (/opt/xen-orchestra/node_modules/node-zone/index.js:80:23)\n at /opt/xen-orchestra/node_modules/node-zone/index.js:89:19\n at Function.fromFunction (/opt/xen-orchestra/@xen-orchestra/backups/_syncThenable.js:20:27)\n at SyncThenable.fulfilledThen [as then] (/opt/xen-orchestra/@xen-orchestra/backups/_syncThenable.js:2:50)\n at TaskLogger.run (/opt/xen-orchestra/@xen-orchestra/backups/Task.js:77:10)\n at Object.run (/opt/xen-orchestra/@xen-orchestra/backups/Task.js:157:17)\n at DeltaBackupWriter._deleteOldEntries (/opt/xen-orchestra/@xen-orchestra/backups/_DeltaBackupWriter.js:108:17)\n at Array.<anonymous> (/opt/xen-orchestra/@xen-orchestra/backups/_VmBackup.js:150:70)\n at Function.from (<anonymous>)"

}

}

],

"end": 1617829354244,

"result": {

"message": "Cannot read property 'length' of undefined",

"name": "TypeError",

"stack": "TypeError: Cannot read property 'length' of undefined\n at /opt/xen-orchestra/@xen-orchestra/backups/_DeltaBackupWriter.js:114:31\n at Zone.run (/opt/xen-orchestra/node_modules/node-zone/index.js:80:23)\n at /opt/xen-orchestra/node_modules/node-zone/index.js:89:19\n at Function.fromFunction (/opt/xen-orchestra/@xen-orchestra/backups/_syncThenable.js:20:27)\n at SyncThenable.fulfilledThen [as then] (/opt/xen-orchestra/@xen-orchestra/backups/_syncThenable.js:2:50)\n at TaskLogger.run (/opt/xen-orchestra/@xen-orchestra/backups/Task.js:77:10)\n at Object.run (/opt/xen-orchestra/@xen-orchestra/backups/Task.js:157:17)\n at DeltaBackupWriter._deleteOldEntries (/opt/xen-orchestra/@xen-orchestra/backups/_DeltaBackupWriter.js:108:17)\n at Array.<anonymous> (/opt/xen-orchestra/@xen-orchestra/backups/_VmBackup.js:150:70)\n at Function.from (<anonymous>)"

}

},

{

"data": {

"type": "VM",

"id": "5544f33e-5fd9-12e1-ee65-f5849bd7f442"

},

"id": "1617829354244:0",

"message": "backup VM",

"start": 1617829354244,

"status": "failure",

"tasks": [

{

"id": "1617829354276",

"message": "snapshot",

"start": 1617829354276,

"status": "success",

"end": 1617829358692,

"result": "6f1be8c4-4559-35da-e0b7-efc042d9b174"

},

{

"id": "1617829358692:0",

"message": "merge",

"start": 1617829358692,

"status": "failure",

"end": 1617829358692,

"result": {

"message": "Cannot read property 'length' of undefined",

"name": "TypeError",

"stack": "TypeError: Cannot read property 'length' of undefined\n at /opt/xen-orchestra/@xen-orchestra/backups/_DeltaBackupWriter.js:114:31\n at Zone.run (/opt/xen-orchestra/node_modules/node-zone/index.js:80:23)\n at /opt/xen-orchestra/node_modules/node-zone/index.js:89:19\n at Function.fromFunction (/opt/xen-orchestra/@xen-orchestra/backups/_syncThenable.js:20:27)\n at SyncThenable.fulfilledThen [as then] (/opt/xen-orchestra/@xen-orchestra/backups/_syncThenable.js:2:50)\n at TaskLogger.run (/opt/xen-orchestra/@xen-orchestra/backups/Task.js:77:10)\n at Object.run (/opt/xen-orchestra/@xen-orchestra/backups/Task.js:157:17)\n at DeltaBackupWriter._deleteOldEntries (/opt/xen-orchestra/@xen-orchestra/backups/_DeltaBackupWriter.js:108:17)\n at Array.<anonymous> (/opt/xen-orchestra/@xen-orchestra/backups/_VmBackup.js:150:70)\n at Function.from (<anonymous>)"

}

}

],

"end": 1617829359980,

"result": {

"message": "Cannot read property 'length' of undefined",

"name": "TypeError",

"stack": "TypeError: Cannot read property 'length' of undefined\n at /opt/xen-orchestra/@xen-orchestra/backups/_DeltaBackupWriter.js:114:31\n at Zone.run (/opt/xen-orchestra/node_modules/node-zone/index.js:80:23)\n at /opt/xen-orchestra/node_modules/node-zone/index.js:89:19\n at Function.fromFunction (/opt/xen-orchestra/@xen-orchestra/backups/_syncThenable.js:20:27)\n at SyncThenable.fulfilledThen [as then] (/opt/xen-orchestra/@xen-orchestra/backups/_syncThenable.js:2:50)\n at TaskLogger.run (/opt/xen-orchestra/@xen-orchestra/backups/Task.js:77:10)\n at Object.run (/opt/xen-orchestra/@xen-orchestra/backups/Task.js:157:17)\n at DeltaBackupWriter._deleteOldEntries (/opt/xen-orchestra/@xen-orchestra/backups/_DeltaBackupWriter.js:108:17)\n at Array.<anonymous> (/opt/xen-orchestra/@xen-orchestra/backups/_VmBackup.js:150:70)\n at Function.from (<anonymous>)"

}

},

{

"data": {

"type": "VM",

"id": "d649555a-c134-fe17-aa68-d49b5ba50edf"

},

"id": "1617829359980:0",

"message": "backup VM",

"start": 1617829359980,

"status": "failure",

"tasks": [

{

"id": "1617829360005",

"message": "snapshot",

"start": 1617829360005,

"status": "success",

"end": 1617829365133,

"result": "7b152071-5515-80c0-13fc-e390a13592d4"

},

{

"id": "1617829365133:0",

"message": "merge",

"start": 1617829365133,

"status": "failure",

"end": 1617829365133,

"result": {

"message": "Cannot read property 'length' of undefined",

"name": "TypeError",

"stack": "TypeError: Cannot read property 'length' of undefined\n at /opt/xen-orchestra/@xen-orchestra/backups/_DeltaBackupWriter.js:114:31\n at Zone.run (/opt/xen-orchestra/node_modules/node-zone/index.js:80:23)\n at /opt/xen-orchestra/node_modules/node-zone/index.js:89:19\n at Function.fromFunction (/opt/xen-orchestra/@xen-orchestra/backups/_syncThenable.js:20:27)\n at SyncThenable.fulfilledThen [as then] (/opt/xen-orchestra/@xen-orchestra/backups/_syncThenable.js:2:50)\n at TaskLogger.run (/opt/xen-orchestra/@xen-orchestra/backups/Task.js:77:10)\n at Object.run (/opt/xen-orchestra/@xen-orchestra/backups/Task.js:157:17)\n at DeltaBackupWriter._deleteOldEntries (/opt/xen-orchestra/@xen-orchestra/backups/_DeltaBackupWriter.js:108:17)\n at Array.<anonymous> (/opt/xen-orchestra/@xen-orchestra/backups/_VmBackup.js:150:70)\n at Function.from (<anonymous>)"

}

}

],

"end": 1617829367170,

"result": {

"message": "Cannot read property 'length' of undefined",

"name": "TypeError",

"stack": "TypeError: Cannot read property 'length' of undefined\n at /opt/xen-orchestra/@xen-orchestra/backups/_DeltaBackupWriter.js:114:31\n at Zone.run (/opt/xen-orchestra/node_modules/node-zone/index.js:80:23)\n at /opt/xen-orchestra/node_modules/node-zone/index.js:89:19\n at Function.fromFunction (/opt/xen-orchestra/@xen-orchestra/backups/_syncThenable.js:20:27)\n at SyncThenable.fulfilledThen [as then] (/opt/xen-orchestra/@xen-orchestra/backups/_syncThenable.js:2:50)\n at TaskLogger.run (/opt/xen-orchestra/@xen-orchestra/backups/Task.js:77:10)\n at Object.run (/opt/xen-orchestra/@xen-orchestra/backups/Task.js:157:17)\n at DeltaBackupWriter._deleteOldEntries (/opt/xen-orchestra/@xen-orchestra/backups/_DeltaBackupWriter.js:108:17)\n at Array.<anonymous> (/opt/xen-orchestra/@xen-orchestra/backups/_VmBackup.js:150:70)\n at Function.from (<anonymous>)"

}

}

],

"end": 1617829367170

}
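
Since the log is long and every VM fails the same way, a small script can boil it down. The following is only a sketch: it assumes the log has the shape shown above (nested `tasks` lists, a `status` field, and an error object under `result`), and the helper names `iter_tasks`, `summarize_failures` and `ms_to_utc` are made up for illustration, not part of XO. The embedded sample is trimmed down to the first VM from the log.

```python
import json
from collections import Counter
from datetime import datetime, timedelta, timezone

EPOCH = datetime(1970, 1, 1, tzinfo=timezone.utc)


def ms_to_utc(ms):
    """Convert a millisecond Unix timestamp (as in "start"/"end") to UTC."""
    return EPOCH + timedelta(milliseconds=ms)


def iter_tasks(node, path=()):
    """Yield (path, task) for every nested task below `node`."""
    for task in node.get("tasks", []):
        task_path = path + (task.get("message", "?"),)
        yield task_path, task
        yield from iter_tasks(task, task_path)


def summarize_failures(log):
    """Count failed tasks, grouped by task path and error message."""
    counts = Counter()
    for path, task in iter_tasks(log):
        if task.get("status") == "failure":
            result = task.get("result")
            error = result.get("message") if isinstance(result, dict) else None
            counts[(" > ".join(path), error)] += 1
    return counts


# Trimmed sample: first VM of the log above, stack traces omitted.
SAMPLE = json.loads("""
{
  "jobName": "customer-weekdays",
  "start": 1617829260018,
  "status": "failure",
  "tasks": [
    {
      "data": {"type": "VM", "id": "adbe367b-e33e-66f7-863c-e21ea8fa301a"},
      "message": "backup VM",
      "status": "failure",
      "tasks": [
        {"message": "snapshot", "status": "success"},
        {
          "message": "merge",
          "status": "failure",
          "result": {
            "message": "Cannot read property 'length' of undefined",
            "name": "TypeError"
          }
        }
      ],
      "result": {
        "message": "Cannot read property 'length' of undefined",
        "name": "TypeError"
      }
    }
  ]
}
""")

if __name__ == "__main__":
    print(SAMPLE["jobName"], "started", ms_to_utc(SAMPLE["start"]).isoformat())
    for (path, error), count in summarize_failures(SAMPLE).items():
        print(f"{count}x {path}: {error}")
```

Run against the full log, this should show the snapshot step succeeding and the merge step failing with the same `TypeError` for each VM.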

I can confirm having the same issue on two XO instances since upgrading to 5.78.2 / 5.80.0

P.S.:

I gave my latest "trick" another run:

I did a full copy of the VM within XO to the other host. While that job ran, I started the delta backup, which finished OK. After the copy was done, I deleted it and saw, as last time, that the host was coalescing. Once the coalesce had finished, the sr's advanced tab was empty again and stayed empty.

After the weekend the vm's problem reappeared.

So I'm almost certain it has something to do with the backup jobs, because the problem has now appeared for the third time in a row after a weekend where everything was okay.

A delta backup job runs Monday to Friday at 11 pm, and a full backup job runs on Sunday at 00:01. Both jobs back up all of the pool's vms except xo and the metadata, which get a full backup daily.

The last delta on Friday and the last full backup on Sunday both finished OK.

@olivierlambert said in Delta backup fails for specific vm with VDI chain error:

@mbt so what changed since the situation in your initial problem?

Nothing. No system reboot, no patch installations, no hardware reconfiguration, no XCP-ng server reconfiguration, ...

I just

I wish I had an explanation.

After the backups were done, I saw the usual problem, but this time the system seems to have coalesced correctly. The nightly delta backup finished okay and the problem hasn't reappeared so far.

I did a vm copy today (to have at least some sort of current backup) and while it was copying I was able to also run the delta backup job. Afterwards I found a vdi coalesce chain of 1 and when I checked after a while there were no coalesce jobs queued.

At this moment the weekly full backup job is running, so I expect to have some "regular" backups of the vm today and will monitor what happens on the coalescing side. I'm not getting my hopes too high, though

@olivierlambert Do you think both hosts' sr could suffer from the same bug?

I migrated the disk back to the local lvm sr.

Guess what...

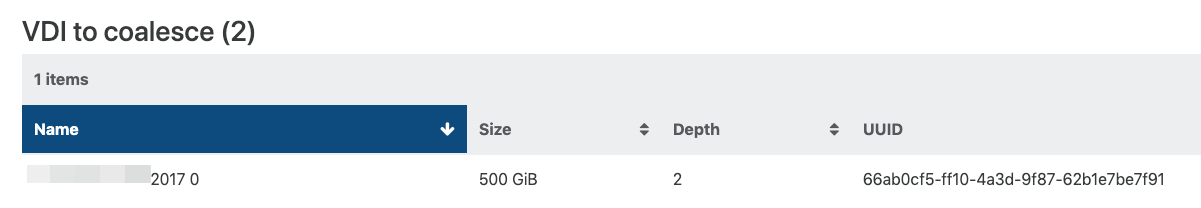

rigel: sr (25 VDIs)

├─┬ customer server 2017 0 - 972ef16c-8d47-43f9-866e-138a6a7693a8 - 500.98 Gi

│ └─┬ customer server 2017 0 - 4e61f49f-6598-4ef8-8ffa-e496f2532d0f - 0.33 Gi

│ └── customer server 2017 0 - 66ab0cf5-ff10-4a3d-9f87-62b1e7be7f91 - 500.98 Gi

But only for this vm; all the others are running (and backing up) fine.

What are my chances that coalesce will work fine again if I migrate the disk back to the local lvm sr?

I now have migrated the VM's disk to NFS.

xapi-explore-sr looks like this (--full not working most of the time):

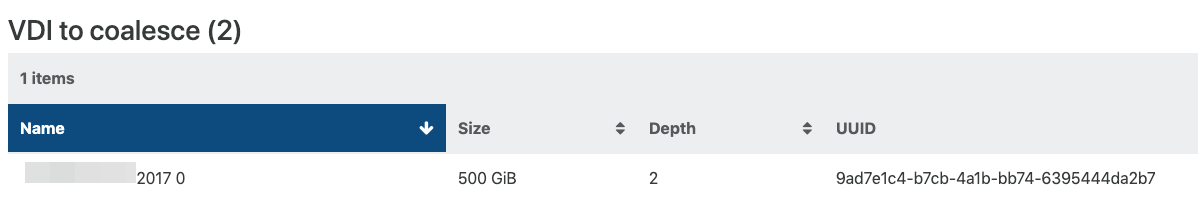

NASa-sr (3 VDIs)

└─┬ customer server 2017 0 - 6d1b49d2-51e1-4ad4-9a3b-95012e356aa3 - 500.94 Gi

└─┬ customer server 2017 0 - f2def08c-cf2e-4a85-bed8-f90bd11dd585 - 43.05 Gi

└── customer server 2017 0 - 9ad7e1c4-b7cb-4a1b-bb74-6395444da2b7 - 43.04 Gi

On the NFS it looks like this:

I'll wait a second for a coalesce job that is currently in progress...

OK, the job has finished. No VDIs to coalesce in the advanced tab.

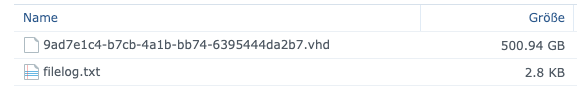

NFS looks like this:

xapi-explore-sr --full says:

NASa-sr (1 VDIs)

└── Customer server 2017 0 - 9ad7e1c4-b7cb-4a1b-bb74-6395444da2b7 - 43.04 Gi

Hi Peg,

believe me, I'd rather not be after your record

Disaster recovery does not work for this vm: "Job canceled to protect the VDI chain"

My guess: as long as XO checks the VDI chain for potential problems before each VM's backup, no backup-ng mechanism will back up this vm.

Migrated the vm to the other host, waited and watched the logs, etc.

The behaviour stays the same. It's constantly coalescing but never gets to an end. Depth in the advanced tab stays at 2.

So I guess the next step will be to set up an additional ext3 sr.

P.S.:

You said "file level sr". So I could also use NFS, right?

Setting up NFS on the 10GE SSD NAS would indeed be easier than adding drives to a host...

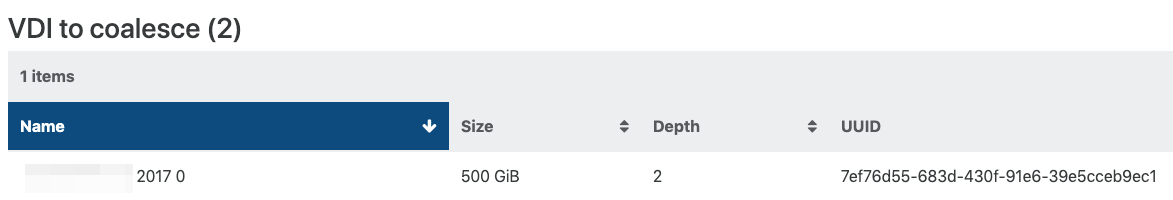

FYI, in the meantime the copy has finished, XO deleted the snapshot and now we're back at the start again:

xapi-explore-sr (--full doesn't work at the moment, failing with a "maximum call stack size exceeded" error):

├─┬ customer server 2017 0 - 43454904-e56b-4375-b2fb-40691ab28e12 - 500.95 Gi

│ └─┬ customer server 2017 0 - 57b0bec0-7491-472b-b9fe-e3a66d48e1b0 - 0.2 Gi

│ └── customer server 2017 0 - 7ef76d55-683d-430f-91e6-39e5cceb9ec1 - 500.98 Gi

P.S.:

Whilst migrating:

Aug 28 13:45:33 rigel SMGC: [5663] No work, exiting

Aug 28 13:45:33 rigel SMGC: [5663] GC process exiting, no work left

Aug 28 13:45:33 rigel SMGC: [5663] SR f951 ('rigel: sr') (25 VDIs in 9 VHD trees): no changes

So, yeah, foobar

Hm.. I could move all vms on one host and add a couple of sas disks to the other, set up a file level sr and see how that's behaving. I just don't think I'll get it done this week.

P.S.: 16f83ba3 shows up only once in xapi-explore-sr, but twice in xapi-explore-sr --full

I tried what I did last week: I made a copy.

So I had the VM with no snapshot, in the state described in my last posts. I triggered a full copy with zstd compression to the other host in XO.

The system created a VM snapshot and is currently in the process of copying.

Meanwhile the gc did some stuff and now says

Aug 28 11:19:27 rigel SMGC: [11997] GC process exiting, no work left

Aug 28 11:19:27 rigel SMGC: [11997] SR f951 ('rigel: sr') (25 VDIs in 9 VHD trees): no changes
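
To keep an eye on these GC messages over time, something like the sketch below could pull the SR summary out of each SMGC line. The regex is written only against the line format pasted above, and `parse_sr_summary` is a hypothetical helper name; other SMGC message variants are not covered.

```python
import re

# Matches lines such as:
#   "... SMGC: [11997] SR f951 ('rigel: sr') (25 VDIs in 9 VHD trees): no changes"
SR_SUMMARY = re.compile(
    r"SMGC: \[(?P<pid>\d+)\] SR (?P<sr>\S+) \('(?P<name>.*?)'\) "
    r"\((?P<vdis>\d+) VDIs in (?P<trees>\d+) VHD trees\): (?P<state>.*)$"
)


def parse_sr_summary(line):
    """Return the SR summary fields of an SMGC log line, or None if absent."""
    match = SR_SUMMARY.search(line)
    if match is None:
        return None
    info = match.groupdict()
    for key in ("pid", "vdis", "trees"):
        info[key] = int(info[key])
    return info


# Lines copied from the post above.
LOG_LINES = [
    "Aug 28 11:19:27 rigel SMGC: [11997] GC process exiting, no work left",
    "Aug 28 11:19:27 rigel SMGC: [11997] SR f951 ('rigel: sr') "
    "(25 VDIs in 9 VHD trees): no changes",
]

if __name__ == "__main__":
    for line in LOG_LINES:
        summary = parse_sr_summary(line)
        if summary:
            print(summary)
```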

xapi-explore-sr says:

rigel: sr (25 VDIs)

├─┬ customer server 2017 0 - 43454904-e56b-4375-b2fb-40691ab28e12 - 500.95 Gi

│ ├── customer server 2017 0 - 16f83ba3-ef58-4ae0-9783-1399bb9dea51 - 0.01 Gi

│ └─┬ customer server 2017 0 - 7ef76d55-683d-430f-91e6-39e5cceb9ec1 - 500.98 Gi

│ └── customer server 2017 0 - 16f83ba3-ef58-4ae0-9783-1399bb9dea51 - 0.01 Gi

Is it okay for 16f83ba3 to appear twice?
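
A quick way to spot such repeats in a pasted tree is to count the UUIDs, as in this sketch. It does not say whether a repeated entry is a problem; it only lists them. The helper name `duplicate_uuids` is made up, and the tree is the one pasted above.

```python
import re
from collections import Counter

UUID = re.compile(
    r"[0-9a-f]{8}-[0-9a-f]{4}-[0-9a-f]{4}-[0-9a-f]{4}-[0-9a-f]{12}"
)


def duplicate_uuids(tree_text):
    """Return {uuid: count} for every VDI UUID listed more than once."""
    counts = Counter(UUID.findall(tree_text))
    return {uuid: n for uuid, n in counts.items() if n > 1}


# Tree copied from the xapi-explore-sr output above.
TREE = """\
rigel: sr (25 VDIs)
├─┬ customer server 2017 0 - 43454904-e56b-4375-b2fb-40691ab28e12 - 500.95 Gi
│ ├── customer server 2017 0 - 16f83ba3-ef58-4ae0-9783-1399bb9dea51 - 0.01 Gi
│ └─┬ customer server 2017 0 - 7ef76d55-683d-430f-91e6-39e5cceb9ec1 - 500.98 Gi
│ └── customer server 2017 0 - 16f83ba3-ef58-4ae0-9783-1399bb9dea51 - 0.01 Gi
"""

if __name__ == "__main__":
    for uuid, count in duplicate_uuids(TREE).items():
        print(f"{uuid} appears {count} times")
```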

The sr's advanced tab in XO is empty.

The bigger number is equal to the configured virtual disk size.

The repair seems to work only if a disk is not in use, i.e. offline:

[10:24 rigel ~]# lvchange -ay /dev/mapper/VG_XenStorage--f951f048--dfcb--8bab--8339--463e9c9b708c-VHD--7ef76d55--683d--430f--91e6--39e5cceb9ec1

[10:26 rigel ~]# vhd-util repair -n /dev/mapper/VG_XenStorage--f951f048--dfcb--8bab--8339--463e9c9b708c-VHD--7ef76d55--683d--430f--91e6--39e5cceb9ec1

[10:27 rigel ~]# lvchange -an /dev/mapper/VG_XenStorage--f951f048--dfcb--8bab--8339--463e9c9b708c-VHD--7ef76d55--683d--430f--91e6--39e5cceb9ec1

Logical volume VG_XenStorage-f951f048-dfcb-8bab-8339-463e9c9b708c/VHD-7ef76d55-683d-430f-91e6-39e5cceb9ec1 in use.
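
A side note on the long `/dev/mapper` paths: device-mapper doubles every hyphen inside the volume group and logical volume names and joins the two with a single hyphen, which is why the path in the commands looks different from the `VG/LV` name in the last message. A small sketch for building the path from the two names (the function name `dm_path` is made up for illustration):

```python
def dm_path(vg_name, lv_name):
    """Build the /dev/mapper path for a logical volume.

    Hyphens inside each name are doubled; a single hyphen separates
    the volume group part from the logical volume part.
    """

    def escape(name):
        return name.replace("-", "--")

    return f"/dev/mapper/{escape(vg_name)}-{escape(lv_name)}"


if __name__ == "__main__":
    # Names taken from the "Logical volume ... in use." message above.
    vg = "VG_XenStorage-f951f048-dfcb-8bab-8339-463e9c9b708c"
    lv = "VHD-7ef76d55-683d-430f-91e6-39e5cceb9ec1"
    print(dm_path(vg, lv))
```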