I was able to find the disk that was causing the issue. Once it was removed, I was able to boot.

Now I'm trying to do a file restore, but I'm getting this error on my test restore:

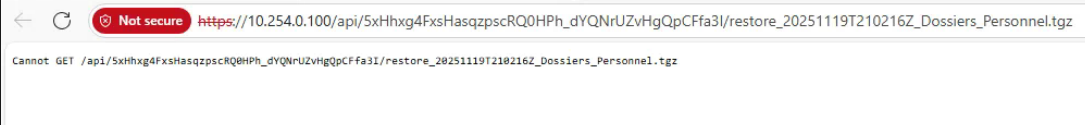

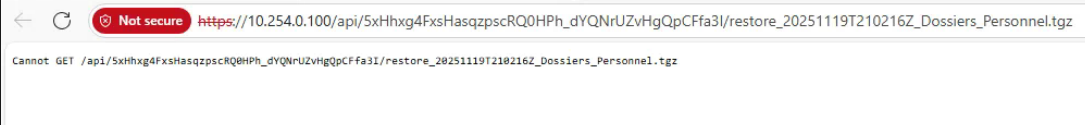

On the one I'm trying to restore for real, I'm getting this:

Host

XCPNG Version 8.3.0

Build Number 8.3.0

XO

Xen Orchestra, commit 1c01f

Command failed: mount --options=loop,ro,norecovery,sizelimit=16777216,offset=105906176 --source=/tmp/q7h26499y0q/vhd0 --target=/tmp/vnp8rqwkr4h

mount: /tmp/vnp8rqwkr4h: wrong fs type, bad option, bad superblock on /dev/loop5, missing codepage or helper program, or other error.

dmesg(1) may have more information after failed mount system call.

2025-11-18 14:06

adminhilo

Command failed: mount --options=loop,ro,norecovery,sizelimit=10485

===============================

backupNg.listFiles

{

"remote": "bc2e9147-522f-4898-854e-55082882fcd3",

"disk": "/xo-vm-backups/ad86ecc3-b752-c88d-3651-303ddc5c78e2/vdis/45ad91fd-6ac6-4642-a015-6273fed9c14c/1a47e837-e03c-4001-b0c3-adececd67c9b/20251113T051349Z.alias.vhd",

"path": "/",

"partition": "3d25f6f1-9ce1-4f0f-8bbf-122a260e6812"

}

{

"code": 32,

"killed": false,

"signal": null,

"cmd": "mount --options=loop,ro,norecovery,sizelimit=16777216,offset=105906176 --source=/tmp/q7h26499y0q/vhd0 --target=/tmp/vnp8rqwkr4h",

"message": "Command failed: mount --options=loop,ro,norecovery,sizelimit=16777216,offset=105906176 --source=/tmp/q7h26499y0q/vhd0 --target=/tmp/vnp8rqwkr4h

mount: /tmp/vnp8rqwkr4h: wrong fs type, bad option, bad superblock on /dev/loop5, missing codepage or helper program, or other error.

dmesg(1) may have more information after failed mount system call.

",

"name": "Error",

"stack": "Error: Command failed: mount --options=loop,ro,norecovery,sizelimit=16777216,offset=105906176 --source=/tmp/q7h26499y0q/vhd0 --target=/tmp/vnp8rqwkr4h

mount: /tmp/vnp8rqwkr4h: wrong fs type, bad option, bad superblock on /dev/loop5, missing codepage or helper program, or other error.

dmesg(1) may have more information after failed mount system call.

at genericNodeError (node:internal/errors:983:15)

at wrappedFn (node:internal/errors:537:14)

at ChildProcess.exithandler (node:child_process:414:12)

at ChildProcess.emit (node:events:518:28)

at ChildProcess.patchedEmit [as emit] (/opt/xen-orchestra/@xen-orchestra/log/configure.js:52:17)

at maybeClose (node:internal/child_process:1101:16)

at Process.ChildProcess._handle.onexit (node:internal/child_process:304:5)

at Process.callbackTrampoline (node:internal/async_hooks:130:17)"

}
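For reference, the `offset` and `sizelimit` values in the failing `mount` command are byte counts, so they can be turned into a partition start and size. The sketch below only does that arithmetic on the command line from the log. The 512-byte sector size is an assumption, and `describe_mount_window` is a hypothetical helper name, not something from Xen Orchestra.

```python
import re

MIB = 1024 * 1024
SECTOR_SIZE = 512  # assumption: 512-byte logical sectors


def describe_mount_window(cmd: str) -> dict:
    """Extract offset/sizelimit (bytes) from a mount command line and convert them."""
    offset = int(re.search(r"offset=(\d+)", cmd).group(1))
    sizelimit = int(re.search(r"sizelimit=(\d+)", cmd).group(1))
    return {
        "offset_bytes": offset,
        "offset_mib": offset / MIB,
        "start_sector": offset // SECTOR_SIZE,
        "size_bytes": sizelimit,
        "size_mib": sizelimit / MIB,
    }


# Command copied from the error above.
cmd = (
    "mount --options=loop,ro,norecovery,sizelimit=16777216,offset=105906176 "
    "--source=/tmp/q7h26499y0q/vhd0 --target=/tmp/vnp8rqwkr4h"
)
info = describe_mount_window(cmd)
print(info)
```

The window works out to 16 MiB starting at 101 MiB. A partition that small may simply not contain a mountable filesystem (on GPT-partitioned Windows disks, for instance, a 16 MiB reserved partition commonly sits in that position), which would be consistent with the "wrong fs type" message. That is a guess from the numbers alone, so `dmesg` is still the place to confirm it.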

Can anyone help?

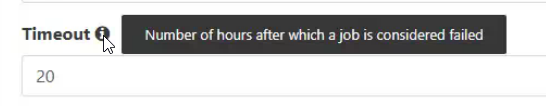

Hello, I'm running a mirror incremental backup with the timeout option set to 20 hours, but even when the backup runs longer than that, it never times out.

Am I misunderstanding that option, or am I doing something wrong?

Thank You.

@florent Sorry for the delay. I was able to make it work with the change you posted. Thank You.

@DustinB When I disable it, I can't connect.

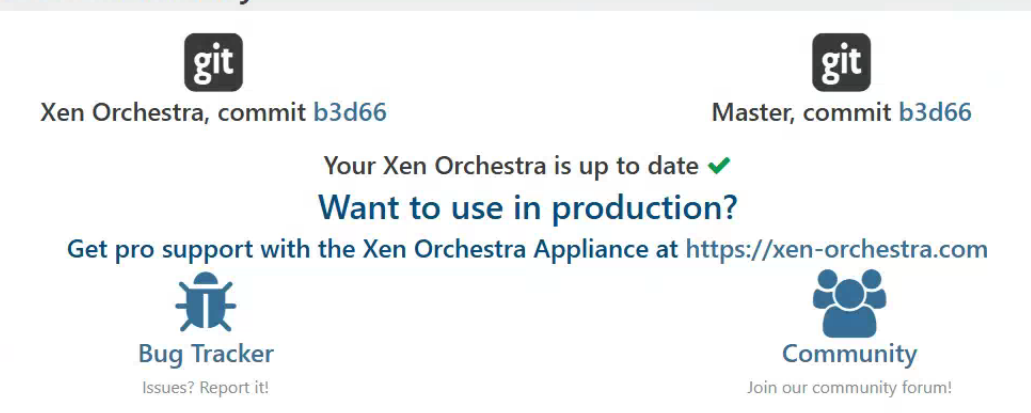

I use the from-source version.

This one works correctly. It belongs to another customer and runs an older version.

Xen Orchestra, commit fa974

Master, commit b3d66

You are not up to date with master. 221 commits behind

@DustinB Yes, the bucket exists. I'm able to connect and create a folder.

When I try to connect to my Backblaze account to configure a remote in Xen Orchestra, I get the following message. I'm able to connect with the same credentials using Cyberduck.

{

"name": "InvalidArgument",

"$fault": "client",

"$metadata": {

"httpStatusCode": 400,

"requestId": "4c022d6f8b1e258a",

"extendedRequestId": "aNHQx6DCwZa1j6jhRM8s3OjQNYk0zvDkM",

"attempts": 1,

"totalRetryDelay": 0

},

"Code": "InvalidArgument",

"message": "Unsupported header 'x-amz-checksum-mode' received for this API call."

}
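The object above has the shape that S3 client libraries typically use for service errors (`$fault`, `$metadata`, `Code`). As a rough triage aid, the sketch below reduces it to the fields that matter here: the HTTP status, whether the request itself was rejected, and which header the endpoint complained about. `summarize_s3_error` is a hypothetical helper, not part of Xen Orchestra or any SDK.

```python
import json
import re


def summarize_s3_error(raw: str) -> dict:
    """Reduce an S3-style error object to the fields useful for triage."""
    err = json.loads(raw)
    # The header name is quoted inside the message, e.g. 'x-amz-checksum-mode'.
    match = re.search(r"header '([^']+)'", err.get("message", ""))
    return {
        "code": err.get("Code") or err.get("name"),
        "http_status": err.get("$metadata", {}).get("httpStatusCode"),
        # "$fault": "client" marks a request-side (4xx) problem.
        "client_fault": err.get("$fault") == "client",
        "header": match.group(1) if match else None,
    }


# Trimmed copy of the error above.
raw = """
{
  "name": "InvalidArgument",
  "$fault": "client",
  "$metadata": {"httpStatusCode": 400, "attempts": 1, "totalRetryDelay": 0},
  "Code": "InvalidArgument",
  "message": "Unsupported header 'x-amz-checksum-mode' received for this API call."
}
"""
summary = summarize_s3_error(raw)
print(summary)
```

Read this way, the endpoint is refusing one request header rather than the credentials or the bucket, which fits with Cyberduck working using the same keys. Which component adds that header, and in which version, is not something this error alone can show.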

Can anyone help me solve this?

Thank You.

Do you know what would cause this issue with a specific VM backup?

{

"data": {

"mode": "delta",

"reportWhen": "failure"

},

"id": "1714518000004",

"jobId": "3c8de440-0a66-4a95-a201-968d1993bd8b",

"jobName": "Main - Delta - BRO-NAS-001 - VM1SQLSRV2012_PROD",

"message": "backup",

"scheduleId": "41094424-a841-4a75-bb74-edd0c5d72423",

"start": 1714518000004,

"status": "failure",

"infos": [

{

"data": {

"vms": [

"a23bacfb-543d-0ed7-cf90-902288f59ed6"

]

},

"message": "vms"

}

],

"tasks": [

{

"data": {

"type": "VM",

"id": "a23bacfb-543d-0ed7-cf90-902288f59ed6",

"name_label": "VM1SQLSRV2012_PROD"

},

"id": "1714518001609",

"message": "backup VM",

"start": 1714518001609,

"status": "failure",

"tasks": [

{

"id": "1714518001617",

"message": "clean-vm",

"start": 1714518001617,

"status": "failure",

"tasks": [

{

"id": "1714518005435",

"message": "merge",

"start": 1714518005435,

"status": "failure",

"end": 1714518010534,

"result": {

"errno": -5,

"code": "Z_BUF_ERROR",

"message": "unexpected end of file",

"name": "Error",

"stack": "Error: unexpected end of file\n at BrotliDecoder.zlibOnError [as onerror] (node:zlib:189:17)\n at BrotliDecoder.callbackTrampoline (node:internal/async_hooks:128:17)"

}

}

],

"end": 1714518010534,

"result": {

"errno": -5,

"code": "Z_BUF_ERROR",

"message": "unexpected end of file",

"name": "Error",

"stack": "Error: unexpected end of file\n at BrotliDecoder.zlibOnError [as onerror] (node:zlib:189:17)\n at BrotliDecoder.callbackTrampoline (node:internal/async_hooks:128:17)"

}

},

{

"id": "1714518010549",

"message": "snapshot",

"start": 1714518010549,

"status": "success",

"end": 1714518014388,

"result": "bfce73a6-ea5b-d498-f3d8-d139b9fe29d5"

},

{

"data": {

"id": "b99d8af6-1fc0-4c49-bbe8-d7c718754070",

"isFull": true,

"type": "remote"

},

"id": "1714518014392",

"message": "export",

"start": 1714518014392,

"status": "success",

"tasks": [

{

"id": "1714518015575",

"message": "transfer",

"start": 1714518015575,

"status": "success",

"end": 1714530612896,

"result": {

"size": 1668042162176

}

},

{

"id": "1714530613148",

"message": "clean-vm",

"start": 1714530613148,

"status": "success",

"end": 1714530617953,

"result": {

"merge": true

}

}

],

"end": 1714530618039

}

],

"end": 1714530618039

}

],

"end": 1714530618040

}
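Because the log nests tasks inside tasks, the step that actually failed is easy to miss. The sketch below walks a log of this shape, lists only the deepest failed tasks, and converts the millisecond timestamps into a duration. The sample is a trimmed copy of the log above; `failed_leaves` and `duration_seconds` are hypothetical helper names.

```python
from typing import Iterator


def failed_leaves(task: dict, path: tuple = ()) -> Iterator[tuple]:
    """Yield (path, error code, error message) for the deepest failed tasks."""
    here = path + (task.get("message", "?"),)
    failed_children = [t for t in task.get("tasks", []) if t.get("status") == "failure"]
    if task.get("status") == "failure" and not failed_children:
        result = task.get("result")
        if not isinstance(result, dict):  # "result" can also be a plain string
            result = {}
        yield " > ".join(here), result.get("code"), result.get("message")
    for child in failed_children:
        yield from failed_leaves(child, here)


def duration_seconds(task: dict) -> float:
    """start/end in this log are Unix timestamps in milliseconds."""
    return (task["end"] - task["start"]) / 1000


# Trimmed copy of the log above (only the fields the sketch needs).
log = {
    "message": "backup",
    "status": "failure",
    "tasks": [{
        "message": "backup VM",
        "status": "failure",
        "tasks": [
            {"message": "clean-vm", "status": "failure", "tasks": [
                {"message": "merge", "status": "failure",
                 "result": {"code": "Z_BUF_ERROR", "message": "unexpected end of file"}},
            ]},
            {"message": "snapshot", "status": "success"},
            {"message": "export", "status": "success", "tasks": [
                {"message": "transfer", "status": "success",
                 "start": 1714518015575, "end": 1714530612896,
                 "result": {"size": 1668042162176}},
            ]},
        ],
    }],
}

failures = list(failed_leaves(log))
transfer = log["tasks"][0]["tasks"][2]["tasks"][0]
secs = duration_seconds(transfer)
print(failures)
print(f"transfer: {secs / 3600:.2f} h, {transfer['result']['size'] / secs / 1e6:.1f} MB/s")
```

On this log the only failed leaf is the `merge` inside the first `clean-vm`, which runs before the snapshot. The full export that follows succeeds and takes roughly three and a half hours, so the job is marked as failed because of the cleanup step rather than the transfer.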

@olivierlambert Any news on this? Thank you.

@olivierlambert I did the update and I'm getting the same error.

Here is the log: 2024-04-11T11_47_11.366Z - backup NG.txt

Since my mirror incremental backup takes a while to copy because of my internet speed, I had to update to another commit this morning. I will retest the backup job, but I'm still getting the error on the latest commit from last week.

@olivierlambert Perfect, I will work on this and come back to you.

Xen Orchestra commit ec166

XCP-NG 8.2.1 (only one host)

Let me know what specific information you need if I'm missing some.

bkp_log.txt

Here is the log.

My mirror incremental backup always gets stuck on one specific VM.

"result": {

"message": "Maximum call stack size exceeded",

"name": "RangeError",

"stack": "RangeError: Maximum call stack size exceeded\n at getUsedChildChainOrDelete (file:///opt/xo/xo-builds/xen-orchestra-202401192117/@xen-orchestra/backups/_cleanVm.mjs:418:7)\n at getUsedChildChainOrDelete (file:///opt/xo/xo-builds/xen-orchestra-202401192117/@xen-orchestra/backups/_cleanVm.mjs:433:23)\n at getUsedChildChainOrDelete (file:///opt/xo/xo-builds/xen-orchestra-202401192117/@xen-orchestra/backups/_cleanVm.mjs:433:23)\n at getUsedChildChainOrDelete (file:///opt/xo/xo-builds/xen-orchestra-202401192117/@xen-orchestra/backups/_cleanVm.mjs:433:23)\n at getUsedChildChainOrDelete (file:///opt/xo/xo-builds/xen-orchestra-202401192117/@xen-orchestra/backups/_cleanVm.mjs:433:23)\n at getUsedChildChainOrDelete (file:///opt/xo/xo-builds/xen-orchestra-202401192117/@xen-orchestra/backups/_cleanVm.mjs:433:23)\n at getUsedChildChainOrDelete (file:///opt/xo/xo-builds/xen-orchestra-202401192117/@xen-orchestra/backups/_cleanVm.mjs:433:23)\n at getUsedChildChainOrDelete (file:///opt/xo/xo-builds/xen-orchestra-202401192117/@xen-orchestra/backups/_cleanVm.mjs:433:23)\n at getUsedChildChainOrDelete (file:///opt/xo/xo-builds/xen-orchestra-202401192117/@xen-orchestra/backups/_cleanVm.mjs:433:23)\n at getUsedChildChainOrDelete (file:///opt/xo/xo-builds/xen-orchestra-202401192117/@xen-orchestra/backups/_cleanVm.mjs:433:23)"
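The stack trace shows `getUsedChildChainOrDelete` calling itself from the same line until the call stack runs out. In general that pattern appears when a recursive walk meets either a very long chain or a loop in the links it follows. How `_cleanVm.mjs` actually builds its chains is not visible from this log, so the sketch below is only an illustration of the idea: it follows a hypothetical child-to-parent mapping (`parent_of`, with made-up file names) iteratively, reporting the depth and any loop instead of recursing.

```python
def walk_chain(start: str, parent_of: dict) -> dict:
    """Follow child -> parent links iteratively; no recursion, so no stack limit."""
    seen = []
    visited = set()
    current = start
    while current is not None:
        if current in visited:
            # We came back to a file already on the chain: the links form a loop.
            return {"depth": len(seen), "cycle_at": current}
        visited.add(current)
        seen.append(current)
        current = parent_of.get(current)  # None once the root is reached
    return {"depth": len(seen), "cycle_at": None}


# Hypothetical data: these file names are made up for illustration.
healthy = {"c.vhd": "b.vhd", "b.vhd": "a.vhd"}
looped = {"c.vhd": "b.vhd", "b.vhd": "c.vhd"}
long_chain = {f"{i}.vhd": f"{i - 1}.vhd" for i in range(1, 50_000)}

print(walk_chain("c.vhd", healthy))
print(walk_chain("c.vhd", looped))
print(walk_chain("49999.vhd", long_chain))
```

A check like this distinguishes the two cases: a loop points at inconsistent parent references on the remote, while a very deep but loop-free chain points at many unmerged incrementals for that one VM. Which of the two applies here would need the actual backup files to tell.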

=============