@olivierlambert I confirm that the wget now works.

@pdonias I am sure the password was correct. It is a randomly generated one from my password manager, so it was cut and paste. But I just tried it again and it is now working.

Thanks all.

Have you tried using xentop to see what the VMs are doing?

I couldn't find any documentation on this either. This is my understanding based on some experimenting. Someone correct me if I am wrong on anything.

The first number is the number of vCPUs that the VM currently has or will be started with. This can be changed while the VM is running; vCPUs will be hot plugged/unplugged. You can run top (not htop) while doing this to see the number of vCPUs changing and/or look at dmesg output. This can be changed manually in XO or by an XO job on a schedule.

The second number is the maximum number of vCPUs that the VM is allowed. This can only be changed when the VM is not running.
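Besides top and dmesg, a Linux guest lists its online CPUs in /sys/devices/system/cpu/online as a range list such as 0-3. Here is a rough sketch that turns that list into a count, so you can check it before and after a hot plug. The helper name count_cpus_in_list is made up for this example.

```shell
#!/bin/sh
# Hypothetical helper: count the CPUs in a kernel range list
# such as "0-3" or "0,2-3".
count_cpus_in_list() {
    list=$1
    total=0
    old_ifs=$IFS
    IFS=,
    for part in $list; do
        case $part in
            *-*)
                # A range "first-last" contributes last - first + 1 CPUs.
                first=${part%-*}
                last=${part#*-}
                total=$((total + last - first + 1))
                ;;
            *)
                # A single CPU number contributes one CPU.
                total=$((total + 1))
                ;;
        esac
    done
    IFS=$old_ifs
    echo "$total"
}

# Inside a Linux guest the kernel exposes the online CPU list here.
if [ -r /sys/devices/system/cpu/online ]; then
    echo "online vCPUs: $(count_cpus_in_list "$(cat /sys/devices/system/cpu/online)")"
fi
```

Running it in the guest before and after changing the first number in XO should show the count change, if my understanding above is right.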

@florent Happy to do what I can to test this.

In my xo-server config.toml I see writeBlockConcurrency, maxMergedDeltasPerRun and vhdDirectoryCompression in a [backups] section.

Does this mean that these settings are system wide?

Shouldn't these be configurable on a per Remote basis?

Also in a previous post you have indicated that writeBlockConcurrency defaults to 8, but in my xo-server config.toml it is 16.

@florent How can the level of compression and parallelism be set?
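For anyone who wants to check what their own install has, here is a small sketch that prints just one table (for example [backups]) from a TOML file. The function name print_toml_section is hypothetical, and the path to config.toml depends on how xo-server was installed, so you pass it in yourself.

```shell
#!/bin/sh
# Hypothetical helper: print one TOML table, header included.
# Usage: print_toml_section backups /path/to/config.toml
print_toml_section() {
    section=$1
    file=$2
    awk -v want="[$section]" '
        # The wanted table header starts the output.
        $0 == want { inside = 1; print; next }
        # Any other table header ends it.
        /^[[:space:]]*\[/ { inside = 0 }
        # Lines in between are printed as they are.
        inside { print }
    ' "$file"
}
```

It only matches a header written exactly as [backups] at the start of a line, which is all I needed here.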

Thanks

@pdonias I am puzzled. What do you see?

To be clear there are two problems.

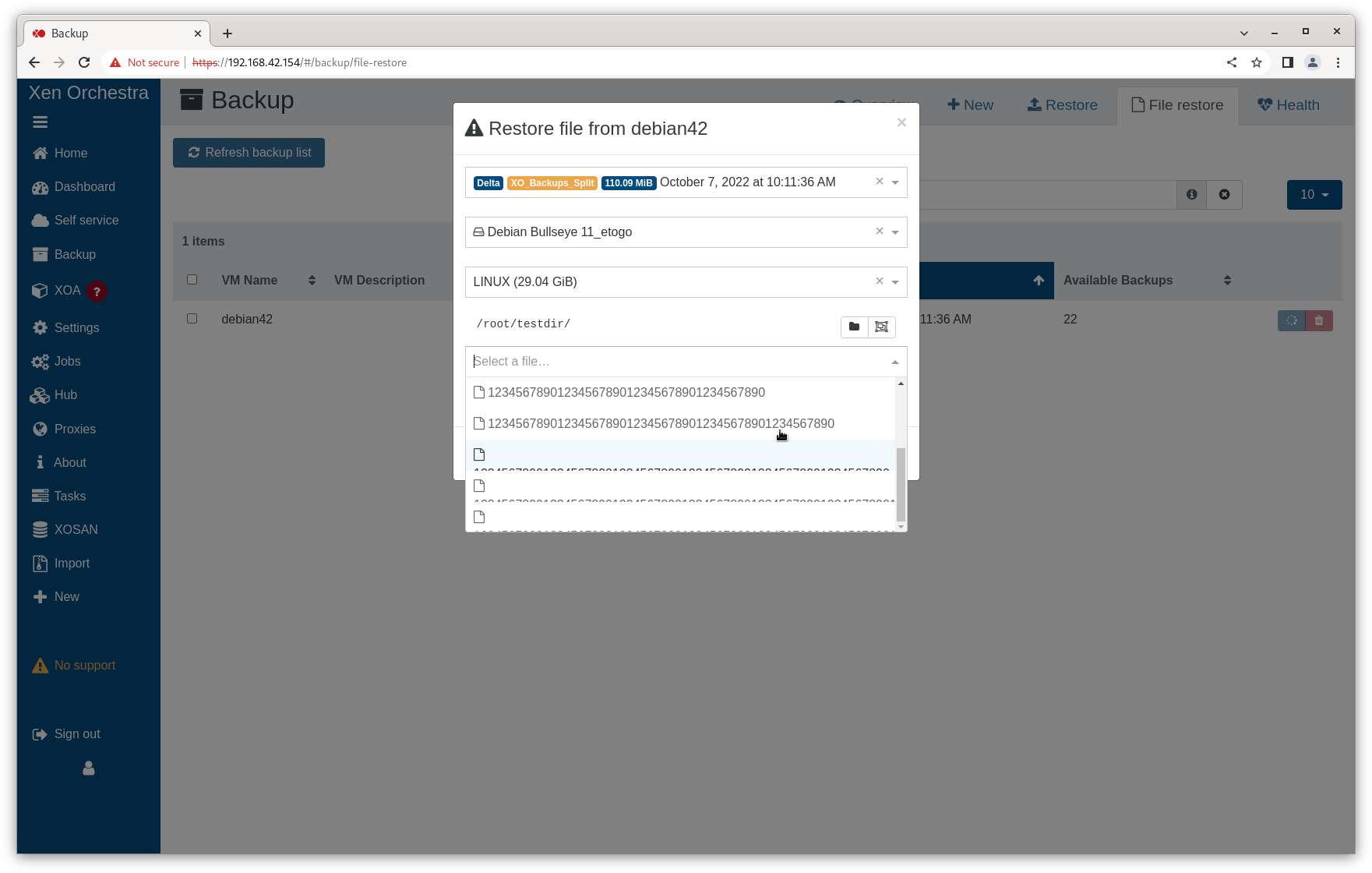

Once a file has been selected, the "Selected files/folders" does show it in a scrollable "widget" that can then be used to see the full file name.

My screenshots are from Chrome on Fedora. I normally use Fedora/Firefox, which has the same issues.

I set up the following as a test. Filename lengths are 10-80 characters long.

    root@debian42:~/testdir# pwd
    /root/testdir
    root@debian42:~/testdir# ls -l
    total 0
    -rw-r--r-- 1 root root 0 Oct 7 09:50 1234567890
    -rw-r--r-- 1 root root 0 Oct 7 09:50 12345678901234567890
    -rw-r--r-- 1 root root 0 Oct 7 09:50 123456789012345678901234567890
    -rw-r--r-- 1 root root 0 Oct 7 09:50 1234567890123456789012345678901234567890
    -rw-r--r-- 1 root root 0 Oct 7 09:50 12345678901234567890123456789012345678901234567890
    -rw-r--r-- 1 root root 0 Oct 7 09:51 123456789012345678901234567890123456789012345678901234567890
    -rw-r--r-- 1 root root 0 Oct 7 09:51 1234567890123456789012345678901234567890123456789012345678901234567890
    -rw-r--r-- 1 root root 0 Oct 7 09:51 12345678901234567890123456789012345678901234567890123456789012345678901234567890

Filename lengths 10-50 display. Filename lengths 60-80 do not.
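If anyone wants to reproduce this, the test directory can be recreated with something like the sketch below. The function names make_name and make_test_files are just ones I picked for this example; the file names follow the same repeating 1234567890 pattern as my listing.

```shell
#!/bin/sh
# Hypothetical helper: build a name of the requested length by
# repeating "1234567890". Lengths are expected to be multiples of 10.
make_name() {
    len=$1
    name=""
    while [ "${#name}" -lt "$len" ]; do
        name="${name}1234567890"
    done
    printf '%s\n' "$name"
}

# Create empty files whose names are 10, 20, ... 80 characters long.
make_test_files() {
    dir=$1
    mkdir -p "$dir" || return 1
    for len in 10 20 30 40 50 60 70 80; do
        : > "$dir/$(make_name "$len")"
    done
}
```

Run make_test_files /root/testdir inside the VM, back it up, and then open the directory in the file restore dialog.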

This isn't actually causing me a problem. It is just something that I came across while doing some testing.

On Linux the maximum file name length is 255 bytes and the maximum length of a full path (directories plus file name) is 4096 bytes, so these should be allowed for in the solution somehow.

Unless it is a really easy fix or it is really causing someone issues, can I suggest leaving this in XO 5, but making sure it is covered in XO 6?

Thanks

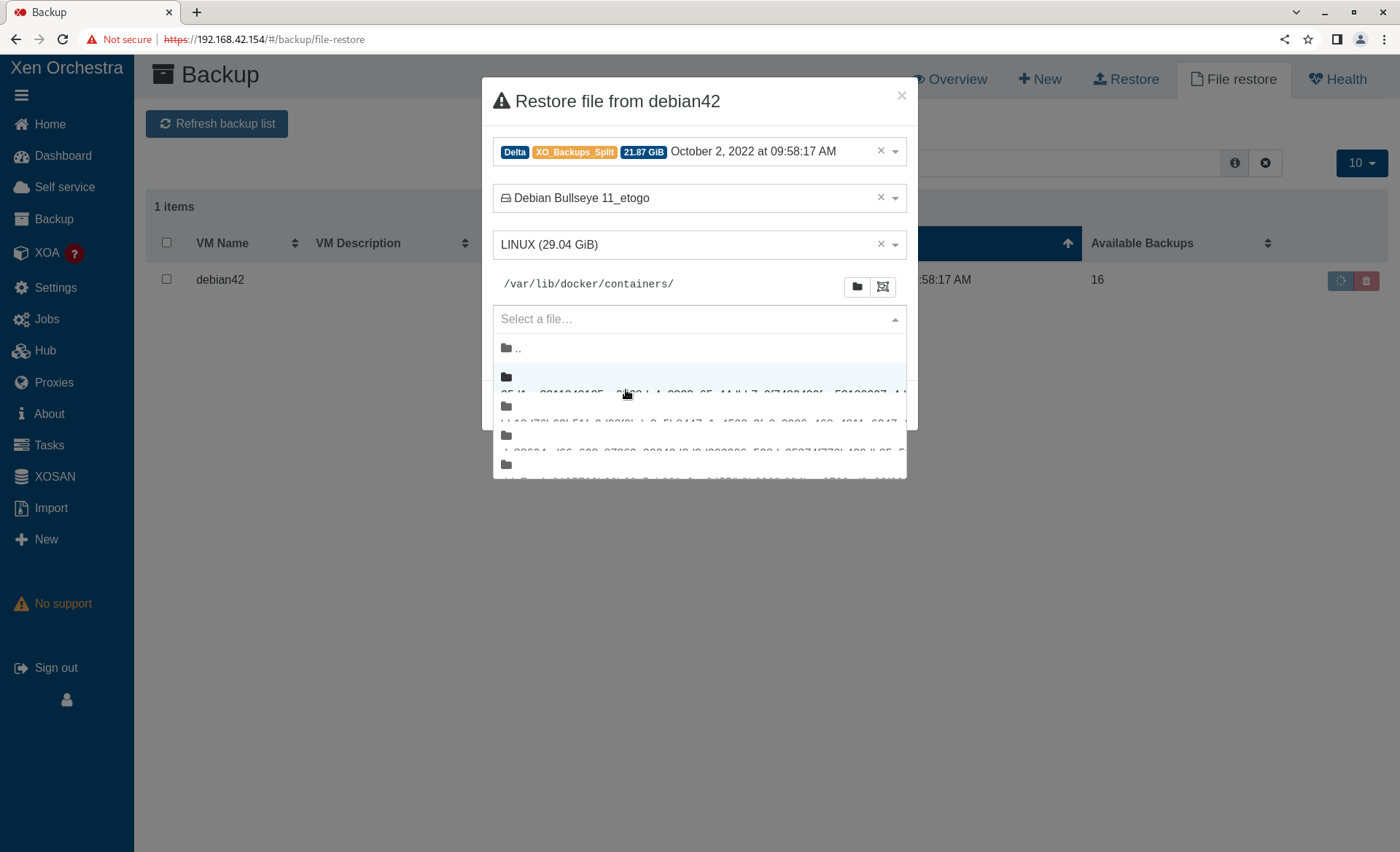

When doing a Restore File, if the file/directory name is longer than the width of the pop-up window, the file/directory name is not viewable.

I added a new NFS share and Remote and kicked the backup off manually rather than waiting for the scheduled job tonight.

To my points above:

XO reports the same amount of data transferred (21.87 GiB) for the old and new jobs, but on the remote the backup is 22GB for the old way and 18GB for the new (as reported by ls and du respectively on the NFS server).

Is there some compression being done? If so, can it be changed somewhere?
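One caveat on my own numbers: ls reports the apparent size of a file, while du by default reports the space actually used on disk, so the two are not strictly like for like. A rough way to get both figures for the same path is sketched below. It assumes GNU du (for --apparent-size), and size_report is a name I made up.

```shell
#!/bin/sh
# Hypothetical helper: report both the apparent size and the on-disk
# usage of a path, in KiB. --apparent-size is a GNU du option.
size_report() {
    path=$1
    apparent=$(du -sk --apparent-size "$path" | cut -f1)
    on_disk=$(du -sk "$path" | cut -f1)
    echo "apparent=${apparent}K on_disk=${on_disk}K"
}
```

Running size_report on the old backup file and on the new backup directory on the NFS server would show whether the 22GB versus 18GB gap is still there when both are measured the same way.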

Thanks

@olivierlambert

That doesn't surprise me, but on https://xen-orchestra.com/blog/xen-orchestra-5-72/ it says

To configure it, edit your current remote (or create a new one) and check "Store backup as multiple data block instead of a whole VHD file":

So I was doing the "edit your current remote" like it said.

I did delete the remote and added a new one in XO, but I pointed it at the same NFS share, which I am assuming is not right either because it had a couple of issues.

I will create a new NFS share and a new Remote for tonight's backups and see how that goes.

This also doesn't change the fact that editing a Remote always fails with the same error when I try to save it, no matter what I change (including changing nothing), and that nothing is logged.

Thanks

With the announcement that file level restore now works with the new mode of backup, I figured that it was time that I tried it out.

So I upgraded my XO from sources (now on commit 42432) and tried to edit my existing remote to enable the "multiple data blocks" mode, but it fails.

This reminded me that I don't think that I have ever been able to edit an existing remote.

I am selecting the "pencil" button to edit the remote. This populates the fields under the "New file system remote" banner with the information for the desired remote. But it does not matter if I change anything or not. When I select the "Save configuration" button I always get an error.

The error appears as a red pop-up with the text "Save configuration E is undefined". Selecting the "Show logs" button in this pop-up takes me to the XO logs, but there is no log entry for this error.
