@olivierlambert @julien-f Any update on this?

Latest posts made by Morpheus0x

-

RE: Get VNC Console Stream via REST API

-

Get VNC Console Stream via REST API

I recently looked into how to have a more secure front-facing management interface for VMs, since I don't think exposing Xen Orchestra to the internet is a good idea. It should probably be fine, I guess, but the thought of allowing access to such a critical part of my infrastructure makes me uneasy.

The current state of the REST API seems to be sufficient for building my own front end, except for one important missing feature: access to the VNC stream for a VM console.

Are there any plans to add this in the near future? As far as I can see, this should be rather straightforward, since the endpoint already exists in Xen Orchestra, but not using the REST API with token authentication: `/api/consoles/<uuid>`.

As an alternative to this, the XCP-ng host itself exposes the `/console?uuid=<uuid>` HTTPS endpoint, but I have been unable to test this properly, since this requires the root user of the host to authenticate via password, which can't be done with any normal VNC client. I found a 5-year-old [reddit post](https://www.reddit.com/r/XenServer/comments/cu61we/xcpng_vnc_stream/) talking about this, which suggests that token authentication is possible. However, I wasn't able to find any documentation supporting this (XCP-ng or XenServer). Either way, having this in the Xen Orchestra REST API would be preferable.

-

RE: Unable to create more than 7 VIFs for a VM / Adding Trunk Network to VM

@Andrew awesome, thank you for your explanation. After reading a bit more about MTU (see this) I get it now. I looked up MTU in OPNsense for the VLAN interfaces and was surprised that it automatically uses 1496 MTU, which explains why it works and means I don't have to adjust my host's MTU settings, which I really didn't want to do.

Now, as far as I understand, keeping it this way could introduce some split packets and therefore potentially limit throughput. To fix this, I would need to increase the MTU to 1504 or larger. However, after some more reading (thanks for the keyword "baby giants"), it seems that today, MTU is just a "guideline" for LAN. I will keep the host MTU at 1500 for now.
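The two numbers in this thread, 1496 and 1504, each differ from 1500 by exactly one 802.1Q VLAN tag, which is 4 bytes. Below is a minimal Python sketch of that arithmetic. It assumes a single tag and that the tag is counted against the parent interface's MTU, as described above for OPNsense; the function names are made up for illustration.

```python
# Size of a single 802.1Q VLAN tag in bytes.
DOT1Q_TAG_BYTES = 4


def vlan_interface_mtu(parent_mtu: int, tags: int = 1) -> int:
    """MTU left for a VLAN interface when the tag must fit inside the parent MTU."""
    return parent_mtu - tags * DOT1Q_TAG_BYTES


def parent_mtu_needed(vlan_mtu: int, tags: int = 1) -> int:
    """Parent MTU required so that the VLAN interface can keep vlan_mtu."""
    return vlan_mtu + tags * DOT1Q_TAG_BYTES


print(vlan_interface_mtu(1500))  # 1496, the value OPNsense picked automatically
print(parent_mtu_needed(1500))   # 1504, the value from the XCP-ng guide
```

With the host left at 1500, the VLAN interfaces end up at 1496, which is why the setup works without touching the host MTU.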

-

RE: Unable to create more than 7 VIFs for a VM / Adding Trunk Network to VM

@Andrew thanks, I know that it is possible to add additional interfaces using the XCP-ng CLI. I was looking for information on whether doing this causes any issues. From the thread you linked, I would assume that the 7 VIF limit exists only because some operating systems have issues with too many virtual Xen network interfaces. Are there any other considerations when adding additional VIFs?

I've got the trunk port working in the firewall VM and since OPNsense is based on FreeBSD, I will use that instead of adding additional VIFs from the CLI.

However, the MTU question still remains. Do I need to change it? It works for now, but I don't know if there could be any issues, e.g. limited network throughput when not changing MTU...

-

Unable to create more than 7 VIFs for a VM / Adding Trunk Network to VM

Hello, I am experiencing an issue with Xen Orchestra (self compiled) not being able to add a new VIF if the VM has a total of 7 VIFs already attached.

I get the following error trying to create the new Network Device:

    could not find an allowed VIF device

This is only after updating Xen Orchestra to the latest commit (771b04a); before that, the error was:

    INTERNAL_ERROR(Server_helpers.Dispatcher_FieldNotFound("device"))

I have tested adding an 8th VIF to multiple VMs using different networks, but nothing makes a difference. The reason I need that "many" is basically only for the firewall VM, since I am adding a VIF for every subnet/VLAN in my network. A workaround would be to just attach the trunk to the firewall VM. However, it would be better if I could attach more than 7 VIFs.

I found this XCP-ng guide stating that 7 VIFs is some limitation in Xen. I think this should be highlighted in the normal Network documentation as well. Can this restriction be changed? Does adding 8+ VIFs via CLI introduce issues like the guide states?

Is there any maximum limitation on how many VIFs are allowed or recommended for a VM in XCP-ng or is this a Xen Orchestra limitation?

The same guide also states that if I want to use the trunk interface inside a VM, I need to change the MTU of the PIF to 1504. Why is that the case? I changed the 7th VIF (#6) of my firewall VM to a network without any VLAN set (trunk) and then created a VLAN interface on that network interface in OPNsense. However, that VLAN interface is down and unable to communicate with a VM using a Xen Orchestra network with the same VLAN set. Is this due to the MTU issues? Where exactly do I need to change the MTU, besides on the network switch? I have an LACP (802.3ad) bond with two SFP+ ports. Do I set 1504 MTU for each of its members, eth0 and eth1, and/or for the bond0 PIF?

Edit: I resolved my last issue, now I can successfully use the trunk port inside OPNsense without having to change the MTU. The issue was me not correctly setting up OPNsense, but now I can ping the firewall from the VM as described in the setup above. Should I still change MTU?

-

RE: GPU passthrough - Windows VM doesn't boot correctly

@l1c said in GPU passthrough - Windows VM doesn't boot correctly:

@toby4213

I don't think since it seems from the link you posted that the latest Xen version resolve the issue.

TBH most of this goes way above my head...

Where do you see this as implemented? The github issue is still open...

@tony said in GPU passthrough - Windows VM doesn't boot correctly:

I had this problem before and XCP-ng won't boot without another graphic card to display its xsconsole. It might have been changed in latest version of XCP-ng but if not you'll have to have at least 1 graphics card for the host (can even be an iGPU) and another PCIe card for the VM.

Also support for GeForce graphics card for VM is still in BETA stage so it might not work 100%, if you want to be sure then try your GRID card which might work better. AMD cards will work for sure even with vGPU.

XCP-ng does boot with the GPU passed through. XCP-ng comes online in XOA.

The problem is the unstable Windows VM.

I ordered a PCI riser, since I don't have a free x16 slot to test with 2 GPUs installed and 1 of them passed through.

-

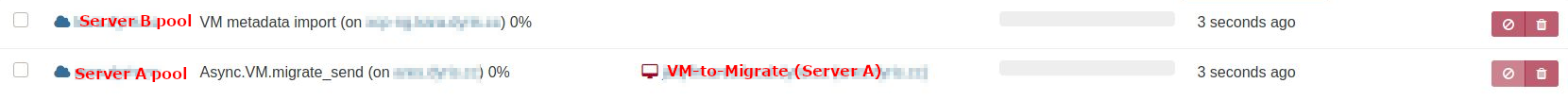

VM Migration stuck at 0% VM metadata import

I am trying to migrate a shut down VM from Server A to Server B.

Server B is new and I deployed the XOA Instance from the Server B web ui.

I also added Server A to the XOA instance. Both servers are separate pools.

Trying to migrate in XOA doesn't work, the process is stuck at 0%:

Server B task list, VM metadata import stuck at pending:

    [14:55 xcp-ng log]# xe task-list
    uuid ( RO)                : <uuid>
              name-label ( RO): VM metadata import
        name-description ( RO):
                  status ( RO): pending
                progress ( RO): 0.000

XCP-ng Center can migrate the VM without a problem.

There I can also see the error message for the failed XOA migration attempt(s):

Message: Migrate VM <VM-to-Migrate> Cannot contact the other host using TLS on the specified address and port; Server/Pool: Server A
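Since the error complains about TLS on "the specified address and port", one quick check is whether a TLS handshake from one host to the other completes at all. Below is a minimal Python sketch of such a probe. It assumes the hosts talk to each other on port 443 (an assumption, not something confirmed in this thread), and `tls_reachable` is a made-up helper name.

```python
import socket
import ssl


def tls_reachable(host: str, port: int = 443, timeout: float = 5.0) -> bool:
    """Return True if a TCP connection and TLS handshake with host:port succeed."""
    context = ssl.create_default_context()
    # Hosts often present self-signed certificates, so this connectivity
    # probe skips certificate verification. Do not reuse it for real traffic.
    context.check_hostname = False
    context.verify_mode = ssl.CERT_NONE
    try:
        with socket.create_connection((host, port), timeout=timeout) as raw:
            with context.wrap_socket(raw, server_hostname=host) as tls:
                # A negotiated protocol version means the handshake completed.
                return tls.version() is not None
    except OSError:
        # Covers refused connections, timeouts and TLS errors alike.
        return False
```

Calling `tls_reachable("<address of Server A>")` from Server B, and the reverse from Server A, would show whether the failure is at the network level or somewhere later in the migration.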

Both servers have public IPs; there is no firewall between the servers.

-

RE: GPU passthrough - Windows VM doesn't boot correctly

@l1c Thanks for the reply and the Qubes OS workaround. I didn't know that Qubes was also using Xen, that's awesome. I will try with less RAM (currently 8GB), hopefully it will work. And if it does I will look into the workaround.

I found this issue on Github:

https://github.com/xcp-ng/xcp/issues/435

Could this be the problem I am having?

I am not at home this weekend and will give an update next week.

-

GPU passthrough - Windows VM doesn't boot correctly

OS: XCP-ng 8.1.0

Guest OS: Windows 10 LTSC 2019, Version 1809, Build 17763.2183

GPU: Nvidia GTX 1660 Super

The GPU worked for the XCP-ng console before I followed the PCIe Passthrough guide from the XCP-ng docs. I enabled RDP and installed Parsec on the Windows VM. XCP-ng Guest Tools are installed. I am unable to install the Nvidia drivers beforehand. I get the following error:

    This NVIDIA graphics driver is not compatible with this version of Windows. This graphics driver could not find compatible graphics hardware.

I tried 465.89 and 471.96, both show the same error. I think this is because the GPU isn't passed through yet. The VM should boot even without those drivers.

The following PCIe devices are listed:

    [23:38 xcp-ng ~]# lspci
    09:00.0 VGA compatible controller: NVIDIA Corporation Device 21c4 (rev a1)
    09:00.1 Audio device: NVIDIA Corporation Device 1aeb (rev a1)
    09:00.2 USB controller: NVIDIA Corporation Device 1aec (rev a1)
    09:00.3 Serial bus controller [0c80]: NVIDIA Corporation Device 1aed (rev a1)
    [23:42 xcp-ng ~]# xl pci-assignable-list
    0000:09:00.0
    0000:09:00.3
    0000:09:00.1
    0000:09:00.2

I assigned all four devices to my Windows VM:

    [23:45 xcp-ng ~]# xe vm-param-set other-config:pci=0/0000:09:00.0,0/0000:09:00.1,0/0000:09:00.2,0/0000:09:00.3 uuid=<uuid>

After that the devices still show up under `xl pci-assignable-list`, is that expected?

Starting the Windows VM works without an error.

    [23:48 xcp-ng ~]# xe vm-start uuid=<uuid>
    [23:48 xcp-ng ~]#

There is no video out on the console, which is expected I think, but the Windows VM never boots correctly. I am unable to ping it or establish an RDP connection. I have also tried connecting a monitor to the GPU while the VM is running and before booting, but get no video out.

This is the only GPU installed in the system, with no integrated graphics (Ryzen 5 3600). This shouldn't be a problem, right? XCP-ng boots normally and the screen goes blank in the middle of the boot process, indicating that the GPU is correctly blacklisted.

Is this a known problem? How do I fix this?

UPDATE:

I got the VM to boot with the GPU passed through by NOT passing through the USB controller (0000:09:00.2). I am now able to install the Nvidia drivers. Maybe this has to do with the USB passthrough problems described here: https://xcp-ng.org/forum/topic/3594/pci-passthrough-usb-passthrough-does-not-work-together/ or maybe it is because my GPU doesn't have a USB-C connector, which makes the USB controller irrelevant.

After restarting to apply the Nvidia drivers I get a black screen on the console and it seems that the VM once again doesn't boot correctly. I am unable to ping it or establish an RDP connection. I forced a shutdown, and on starting the VM again I could see the TianoCore Windows boot screen before I once again got a black screen.
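The `other-config:pci` value used in this post is a comma-separated list of `0/<address>` entries, so leaving out the USB controller just means dropping one entry. Below is a minimal Python sketch that rebuilds the value from a list of addresses. The helper names are made up for illustration, and the `0/` prefix is simply copied from the command shown in this post.

```python
from typing import Iterable, Sequence

# The four functions of the GPU as listed by `xl pci-assignable-list` in the post.
GPU_FUNCTIONS = ["0000:09:00.0", "0000:09:00.1", "0000:09:00.2", "0000:09:00.3"]


def pci_other_config(addresses: Sequence[str], exclude: Iterable[str] = ()) -> str:
    """Build the other-config:pci value, skipping any excluded addresses."""
    skipped = set(exclude)
    return ",".join(f"0/{addr}" for addr in addresses if addr not in skipped)


def vm_param_set_command(vm_uuid: str, addresses: Sequence[str],
                         exclude: Iterable[str] = ()) -> str:
    """Format the xe command line; it is only printed here, not executed."""
    value = pci_other_config(addresses, exclude)
    return f"xe vm-param-set other-config:pci={value} uuid={vm_uuid}"


# Configuration from the first update: everything except the USB controller.
print(vm_param_set_command("<uuid>", GPU_FUNCTIONS, exclude=["0000:09:00.2"]))
```

The printed command has the same shape as the one used earlier in the post, minus the `0/0000:09:00.2` entry.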

UPDATE 2:

I also tried TightVNC but have the same problem that the VM isn't accessible via the network.

UPDATE 3:

I was able to boot the VM with the GPU. The 1660 Super was correctly shown in the Device Manager. After opening GeForce Experience and trying to download the latest update I got the black screen again. During testing I also got a BSOD with error code VIDEO_TDR_FAILURE a couple of times. It seems that every time I try to update the drivers, the VM crashes.

I also have a Grid K2 which I am going to try next.

-

Grid K1/K2 GPU Passthrough

Re: Graphics Cards no longer on Hardware Compatibility List for 8.

Does the Grid K1/K2 show up as 4 or 2 different GPUs respectively?

Can multiple VMs utilise one of those GPUs at the same time?

According to their datasheet they have multiple Kepler based GPUs.

I haven't found any info regarding this.

I know that vGPU won't work.

OS: XCP-ng 8.2

Edit:

Also what performance could I expect from one VM with a passed through GPU?

With passthrough does NVENC work in the VM?