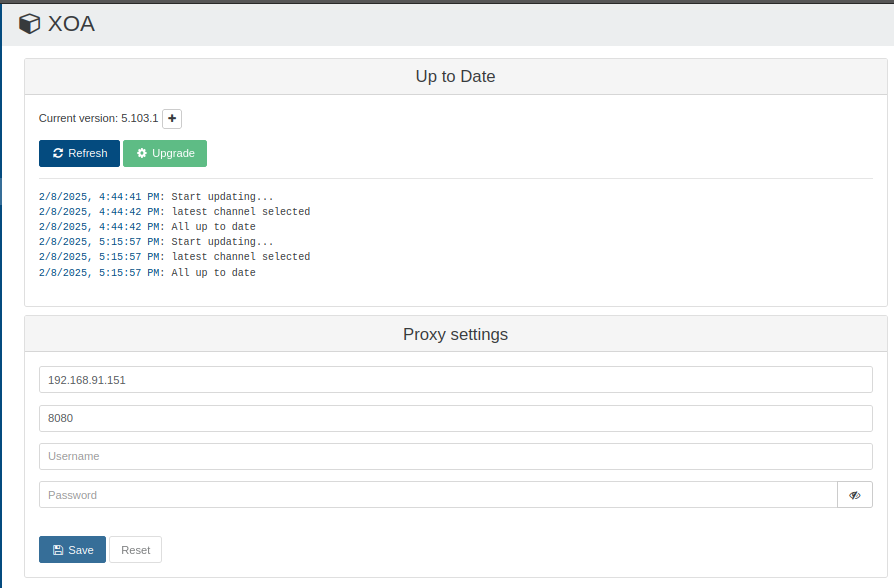

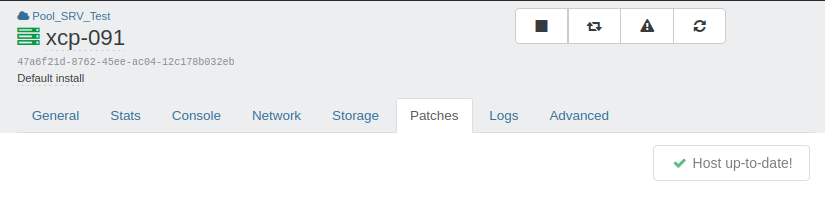

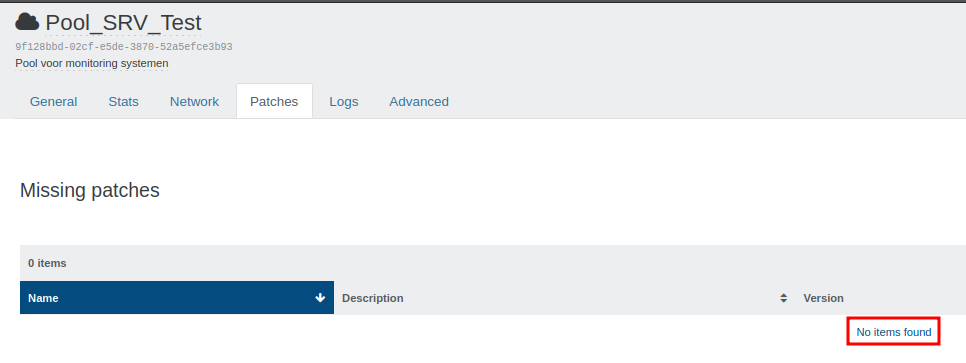

It seems to work on another pool, but how can we get it working in XOA?

XOA:

CLI:

```
[07:38 xcp-102 ~]# xe host-call-plugin host-uuid=a0d83751-159a-4f0b-8618-4984d543f0c8 plugin=updater.py fn=check_update

[{"url": "http://www.xen.org", "version": "24.19.2", "name": "xcp-networkd", "license": "LGPL-2.1-or-later WITH OCaml-LGPL-linking-exception", "changelog": null, "release": "1.9.xcpng8.3", "size": 4647492, "description": "Simple host network management service for the xapi toolstack"}, {"url": "https://github.com/xapi-project/sm", "version": "3.2.3", "name": "sm-fairlock", "license": "LGPL", "changelog": null, "release": "1.15.xcpng8.3", "size": 42552, "description": "Fair locking subsystem"}, {"url": "http://www.xen.org", "version": "24.19.2", "name": "forkexecd", "license": "LGPL-2.1-or-later WITH OCaml-LGPL-linking-exception", "changelog": null, "release": "1.9.xcpng8.3", "size": 2361328, "description": "A subprocess management service"}, {"url": "http://www.xen.org", "version": "24.19.2", "name": "sm-cli", "license": "LGPL-2.1-or-later WITH OCaml-LGPL-linking-exception", "changelog": null, "release": "1.9.xcpng8.3", "size": 1787364, "description": "CLI for xapi toolstack storage managers"}, {"url": null, "version": "4.19.19", "name": "kernel", "license": "GPLv2", "changelog": null, "release": "8.0.37.1.xcpng8.3", "size": 30911672, "description": "The Linux kernel"}, {"url": "http://www.xen.org", "version": "24.19.2", "name": "xapi-storage-script", "license": "LGPL-2.1-or-later WITH OCaml-LGPL-linking-exception", "changelog": null, "release": "1.9.xcpng8.3", "size": 4589220, "description": "Xapi storage script plugin server"}, {"url": "http://www.xen.org", "version": "24.19.2", "name": "xapi-xe", "license": "LGPL-2.1-or-later WITH OCaml-LGPL-linking-exception", "changelog": null, "release": "1.9.xcpng8.3", "size": 1369152, "description": "The xapi toolstack CLI"}, {"url": "https://www.jedsoft.org/slang/", "version": "2.3.2", "name": "slang", "license": "GPLv2+", "changelog": null, "release": "11.xcpng8.3", "size": 367944, "description": "Shared library for the S-Lang extension language"}, {"url": "http://www.xen.org", "version": "24.19.2", "name": "xenopsd-xc", 
"license": "LGPL-2.1-or-later WITH OCaml-LGPL-linking-exception", "changelog": null, "release": "1.9.xcpng8.3", "size": 5163268, "description": "Xenopsd using xc"}, {"url": "https://git.kernel.org/pub/scm/linux/kernel/git/firmware/linux-firmware.git", "version": "20240503", "name": "amd-microcode", "license": "Redistributable", "changelog": null, "release": "1.1.xcpng8.3", "size": 96912, "description": "AMD Microcode"}, {"url": "http://www.xen.org", "version": "24.19.2", "name": "xapi-rrd2csv", "license": "LGPL-2.1-or-later WITH OCaml-LGPL-linking-exception", "changelog": null, "release": "1.9.xcpng8.3", "size": 2924652, "description": "A tool to output RRD values in CSV format"}, {"url": "http://www.xen.org", "version": "24.19.2", "name": "xenopsd-cli", "license": "LGPL-2.1-or-later WITH OCaml-LGPL-linking-exception", "changelog": null, "release": "1.9.xcpng8.3", "size": 1879780, "description": "CLI for xenopsd, the xapi toolstack domain manager"}, {"url": "https://github.com/xapi-project/blktap", "version": "3.54.9", "name": "blktap", "license": "BSD", "changelog": null, "release": "1.2.xcpng8.3", "size": 312948, "description": "blktap user space utilities"}, {"url": null, "version": "2.0.15", "name": "kexec-tools", "license": "GPL", "changelog": null, "release": "20.1.xcpng8.3", "size": 69300, "description": "kexec/kdump userspace tools"}, {"url": "http://www.xen.org", "version": "24.19.2", "name": "wsproxy", "license": "LGPL-2.1-or-later WITH OCaml-LGPL-linking-exception", "changelog": null, "release": "1.9.xcpng8.3", "size": 967004, "description": "Websockets proxy for VNC traffic"}, {"url": "http://www.xen.org", "version": "24.19.2", "name": "vhd-tool", "license": "LGPL-2.1-or-later WITH OCaml-LGPL-linking-exception", "changelog": null, "release": "1.9.xcpng8.3", "size": 4875652, "description": "Command-line tools for manipulating and streaming .vhd format files"}, {"url": "http://www.xen.org", "version": "24.19.2", "name": "xapi-nbd", "license": 
"LGPL-2.1-or-later WITH OCaml-LGPL-linking-exception", "changelog": null, "release": "1.9.xcpng8.3", "size": 2977480, "description": "NBD server that exposes XenServer disks"}, {"url": "http://www.gnu.org/software/grub/", "version": "2.06", "name": "grub-tools", "license": "GPLv3+", "changelog": null, "release": "4.0.2.1.xcpng8.3", "size": 2318216, "description": "Support tools for GRUB."}, {"url": "https://github.com/vatesfr/xen-orchestra", "version": "0.6.0", "name": "xo-lite", "license": "AGPL3-only", "changelog": null, "release": "1.xcpng8.3", "size": 941976, "description": "Xen Orchestra Lite"}, {"url": "http://www.xen.org", "version": "24.19.2", "name": "xapi-core", "license": "LGPL-2.1-or-later WITH OCaml-LGPL-linking-exception", "changelog": null, "release": "1.9.xcpng8.3", "size": 27803496, "description": "The xapi toolstack"}, {"url": "http://www.xen.org", "version": "24.19.2", "name": "xenopsd", "license": "LGPL-2.1-or-later WITH OCaml-LGPL-linking-exception", "changelog": null, "release": "1.9.xcpng8.3", "size": 1378804, "description": "Simple VM manager"}, {"url": "http://www.xen.org", "version": "24.19.2", "name": "rrdd-plugins", "license": "LGPL-2.1-or-later WITH OCaml-LGPL-linking-exception", "changelog": null, "release": "1.9.xcpng8.3", "size": 4977012, "description": "RRDD metrics plugin"}, {"url": null, "version": "5.10.226", "name": "intel-igc", "license": "GPL", "changelog": null, "release": "1.xcpng8.3", "size": 64356, "description": "Intel igc device drivers"}, {"url": "https://xcp-ng.org", "version": "8.3", "name": "xcp-ng-deps", "license": "GPLv2", "changelog": null, "release": "13", "size": 11808, "description": "A meta package pulling all needed dependencies for XCP-ng"}, {"url": "http://www.xen.org", "version": "24.19.2", "name": "xcp-rrdd", "license": "LGPL-2.1-or-later WITH OCaml-LGPL-linking-exception", "changelog": null, "release": "1.9.xcpng8.3", "size": 3640260, "description": "Statistics gathering daemon for the xapi toolstack"}, 
{"url": "http://www.gnu.org/software/grub/", "version": "2.06", "name": "grub-efi", "license": "GPLv3+", "changelog": null, "release": "4.0.2.1.xcpng8.3", "size": 4224468, "description": "GRUB for EFI systems."}, {"url": "http://www.xen.org", "version": "24.19.2", "name": "python2-xapi-storage", "license": "LGPL-2.1-or-later WITH OCaml-LGPL-linking-exception", "changelog": null, "release": "1.9.xcpng8.3", "size": 281168, "description": "Xapi storage interface (Python2)"}, {"url": "http://www.xen.org", "version": "24.19.2", "name": "squeezed", "license": "LGPL-2.1-or-later WITH OCaml-LGPL-linking-exception", "changelog": null, "release": "1.9.xcpng8.3", "size": 1801864, "description": "Memory ballooning daemon for the xapi toolstack"}, {"url": "http://www.gnu.org/software/grub/", "version": "2.06", "name": "grub", "license": "GPLv3+", "changelog": null, "release": "4.0.2.1.xcpng8.3", "size": 1054832, "description": "Bootloader with support for Linux, Multiboot and more"}, {"url": "https://github.com/xapi-project/sm", "version": "3.2.3", "name": "sm", "license": "LGPL", "changelog": null, "release": "1.15.xcpng8.3", "size": 364880, "description": "sm - XCP storage managers"}, {"url": "http://www.xen.org", "version": "24.19.2", "name": "xapi-tests", "license": "LGPL-2.1-or-later WITH OCaml-LGPL-linking-exception", "changelog": null, "release": "1.9.xcpng8.3", "size": 6841292, "description": "Toolstack test programs"}, {"url": "http://www.xen.org", "version": "24.19.2", "name": "message-switch", "license": "ISC", "changelog": null, "release": "1.9.xcpng8.3", "size": 4443828, "description": "A store and forward message switch"}, {"url": "http://www.xen.org", "version": "24.19.2", "name": "varstored-guard", "license": "LGPL-2.1-or-later WITH OCaml-LGPL-linking-exception", "changelog": null, "release": "1.9.xcpng8.3", "size": 4843928, "description": "Deprivileged XAPI socket Daemon for EFI variable storage"}]
```