@lsouai-vates those look great!

@Pilow hey I’m just happy that @olivierlambert officially 100% promised and fully committed to a December 2025 initial release of XO6 on the monthly blog

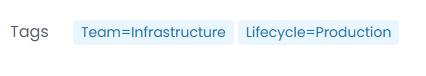

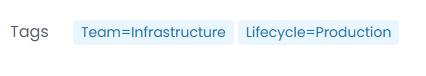

I like how XO5 displays tags with subvalues more explicitly. In XO6, they just show the = sign as a separator, which I think hurts legibility.

So this is simply a request to make XO6 tags display more like XO5 tags do, including color selection.

XO5:

XO6:

I'm not saying match the interface exactly because it is kind of ugly, but keep what's good.
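To make the request concrete, here is a minimal sketch of the split being described: a tag with a subvalue is broken at the first `=` so the two halves can be rendered separately (for example as two colored segments). This is not XO's code; `ParsedTag`, `parse_tag`, and the sample tag names are hypothetical and only illustrate the idea.

```python
from typing import NamedTuple, Optional


class ParsedTag(NamedTuple):
    scope: Optional[str]  # text before the first "=", or None for a plain tag
    value: str            # text after the first "=", or the whole tag


def parse_tag(tag: str) -> ParsedTag:
    """Split a tag like "env=prod" into its scope and subvalue.

    Tags without "=", or with an empty side, are treated as plain tags.
    """
    scope, sep, value = tag.partition("=")
    if not sep or not scope or not value:
        return ParsedTag(None, tag)
    return ParsedTag(scope, value)


if __name__ == "__main__":
    for sample in ("env=prod", "backup", "owner=team=infra"):
        print(sample, "->", parse_tag(sample))
```

Splitting only at the first `=` keeps any later `=` characters inside the subvalue, which seems like the least surprising behavior for display purposes.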

@olivierlambert thanks.

P.S. I know I’m being a little nitpicky with this suggestion, but honestly I can’t wait for XO6. The redesign looks amazing and appears to be a far more cohesive, better user experience.

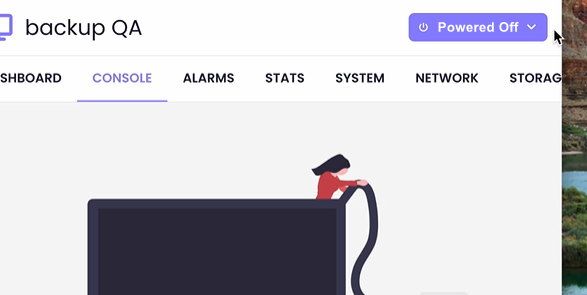

In the most recent blog post for XO 5.85, a preview of the VM console view was shown towards the end. I'd like to suggest that the "Change state" button instead show the current power state of the VM. It'd be great to be able to see from anywhere in the view whether it's rebooting, suspended, etc.

So it'd look like this:

Hopefully this is the right place to post. Thanks for all your work!

@jbamford said in Veeam and XCP-ng:

there is having something faster and there is something that’s going to be reliable

How about fast and reliable, which is Veeam.

I’m not saying that Veeam isn’t going to be reliable but what I am going to say is that you are at more risk of backups not being reliable

If this is a Production environment I would go with the XCP-ng and XO because if something goes wrong

That's a pretty hot take: that Veeam, an industry-leading product whose only business is backup and which is damn good at it, is somehow going to be less reliable than the, in my opinion, half-baked solution that exists in XO. (I do appreciate the work the team is doing to make this better and better; seriously, I love reading the monthly progress updates. But the fact remains.) Additionally, XO requires at least double the provisioned storage of a VM in order to properly coalesce snapshots, a drawback that Veeam doesn't have.

While I agree with the answer someone else gave that this question should be put to Veeam, I think the discussion here is important, as it may drive Vates' interest in working with Veeam. Especially now that a lot of VMware customers who are accustomed to all that Veeam can do are making their way to XO, I'd expect Veeam integration to be at least under consideration.

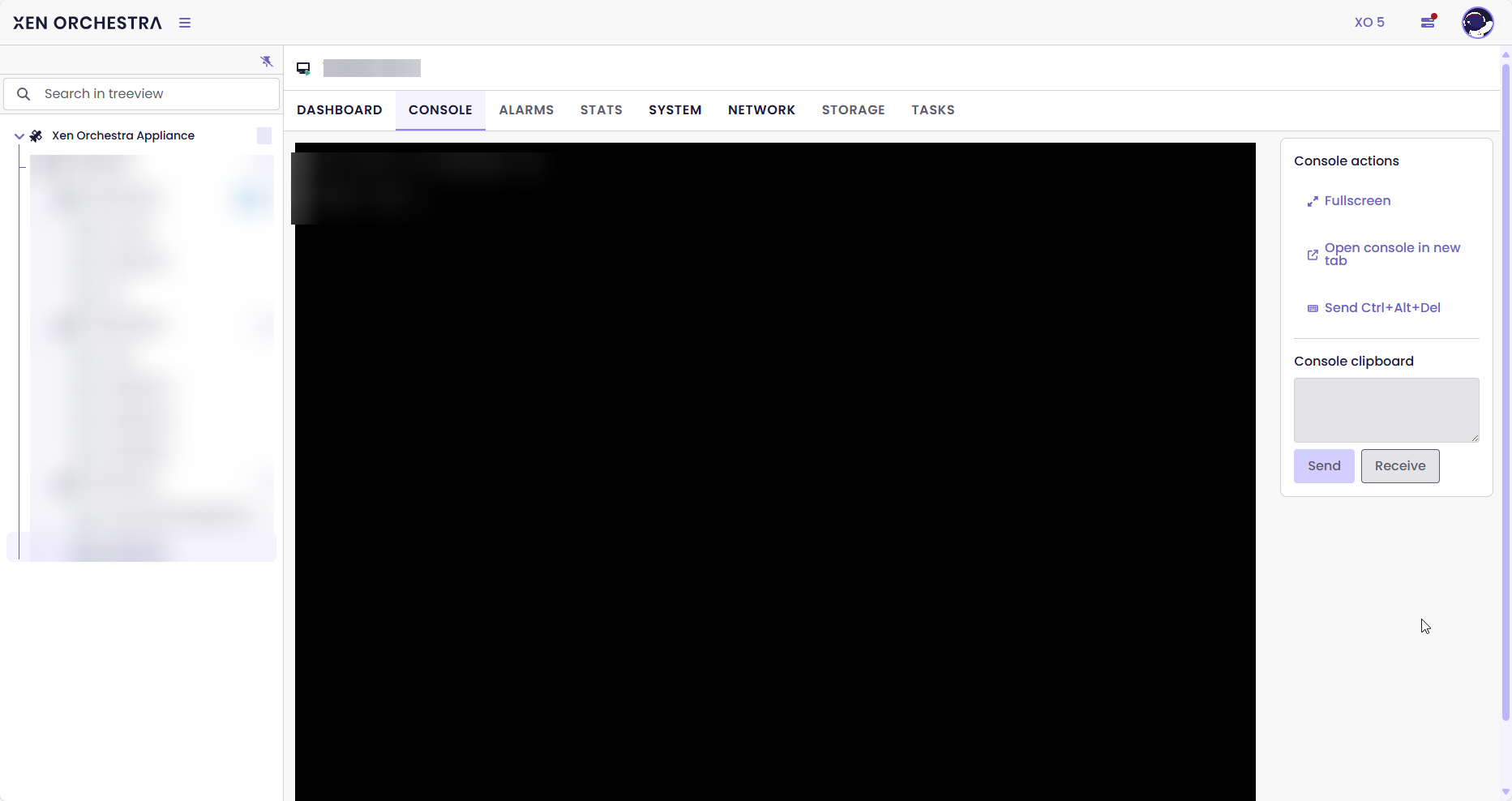

@marcoi XO6 is still a work in progress and many features are not available. Really, it is not usable yet for managing your infra. I think Olivier just meant that any feature requests should be directed at XO6, as they aren't adding features to XO5. You'll be happy to know that the feature you are looking for does exist in XO6, as both are based on the same fundamentals.

XO6 console view:

@KPS said in Veeam and XCP-ng:

- Instant restore "pendant" is missing --> makes restore speed MORE critical

While not entirely the same, depending on the reason you are restoring, you can restore to the most recent snapshot taken by your scheduled backups or set up a separate snapshot schedule independent of your backups.

To add to your list, XO also can't snapshot (and therefore back up) VMs that are configured with USB passthrough due to an XCP-ng 8.2 limitation. Support has told me this "should be solved" in 8.3, but to me "should" does not mean confirmed, and there is no timeframe that I'm aware of for the 8.3 stable release.

@DustinB said in Veeam and XCP-ng:

Vates would have to build (either into or their own) guest utility that supports application awareness, which can be done by using the "Driver Development Kit (DDK) 8.0.0"

And they should if they want to compete with VMware and Hyper-V. I think a native, completely Vates-controlled guest utility should be high on the priority list. Preferably, one that is automatically distributed by Windows Update (admittedly, I don't know the cost for this) or automatically installed on Linux systems, controlled by a setting at the VM and/or Pool level.

Yeah, the trouble is that manually migrating from a different hypervisor platform (Hyper-V) through web-browser downloads and uploads, with my laptop as the middleman, is extremely slow. So I just transferred the disks directly using SCP and then imported them using xe commands.

Okay, yup, that was it. Migration is in progress now. When I manually added these disks to the host, I never cleaned up the original disks after importing from the CLI, so removing them allowed the SR scan to work properly (or something like that). Thanks for the direction, @olivierlambert!

Kind of annoying that totally unrelated disks would cause a migration failure for another VM, but alas. Are there any plans to improve the error reporting in XO?
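For anyone hitting the same thing, here is a rough sketch of how leftover disks like these could be spotted before they break a scan. It assumes the stray disks are `.vhd` files sitting in a file-based SR's mount directory under names that are not UUIDs, which is what a `UUID_INVALID` scan error suggests but is not confirmed here. `find_non_uuid_disks` and the directory argument are hypothetical, and the script only lists files; it does not delete anything.

```python
import uuid
from pathlib import Path
from typing import List


def is_uuid(name: str) -> bool:
    """Return True only if name is a UUID in canonical hyphenated form."""
    try:
        return str(uuid.UUID(name)) == name.lower()
    except ValueError:
        return False


def find_non_uuid_disks(sr_dir: str, suffix: str = ".vhd") -> List[Path]:
    """List disk files in sr_dir whose base name is not a valid UUID."""
    return sorted(
        p
        for p in Path(sr_dir).iterdir()
        if p.is_file() and p.suffix.lower() == suffix and not is_uuid(p.stem)
    )


if __name__ == "__main__":
    import sys

    # Pass the SR mount directory as the first argument (path is up to you).
    for path in find_non_uuid_disks(sys.argv[1]):
        print("not named by UUID:", path)
```

Anything it reports is only a candidate for cleanup; check that nothing still references a file before removing it.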

My assumption is the error is due to these lines:

Aug 22 10:09:06 H2SPH180034 SM: [26118] ***** sr_scan: EXCEPTION <class 'XenAPI.Failure'>, ['UUID_INVALID', 'VDI', 'nwweb1_disk2']

Aug 22 10:09:06 H2SPH180034 SM: [26118] Raising exception [40, The SR scan failed [opterr=['UUID_INVALID', 'VDI', 'nwweb1_disk2']]]
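Since the useful detail is buried in the middle of those lines, here is a small sketch that pulls the exception class and its arguments out of an `sr_scan: EXCEPTION` line. The regular expression is based only on the two lines quoted above, so treat the format as an assumption rather than a documented log layout; `parse_scan_failure` is a hypothetical helper name.

```python
import re
from typing import List, Optional, Tuple

# Matches e.g.: sr_scan: EXCEPTION <class 'XenAPI.Failure'>, ['UUID_INVALID', 'VDI', 'x']
SCAN_FAILURE = re.compile(
    r"sr_scan: EXCEPTION <class '(?P<exc>[^']+)'>, \[(?P<args>[^\]]*)\]"
)


def parse_scan_failure(line: str) -> Optional[Tuple[str, List[str]]]:
    """Return (exception class, argument list) for an sr_scan failure line.

    Returns None for lines that do not match the assumed format.
    """
    match = SCAN_FAILURE.search(line)
    if match is None:
        return None
    args = [part.strip().strip("'") for part in match.group("args").split(",")]
    return match.group("exc"), args


if __name__ == "__main__":
    sample = (
        "Aug 22 10:09:06 H2SPH180034 SM: [26118] ***** sr_scan: EXCEPTION "
        "<class 'XenAPI.Failure'>, ['UUID_INVALID', 'VDI', 'nwweb1_disk2']"
    )
    print(parse_scan_failure(sample))
```

In the line quoted above, the last argument is the name of the disk the scan choked on, which is the part an error message in the UI would most need to surface.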