Too many VDIs/VHDs per VM: how to get rid of unused ones?

-

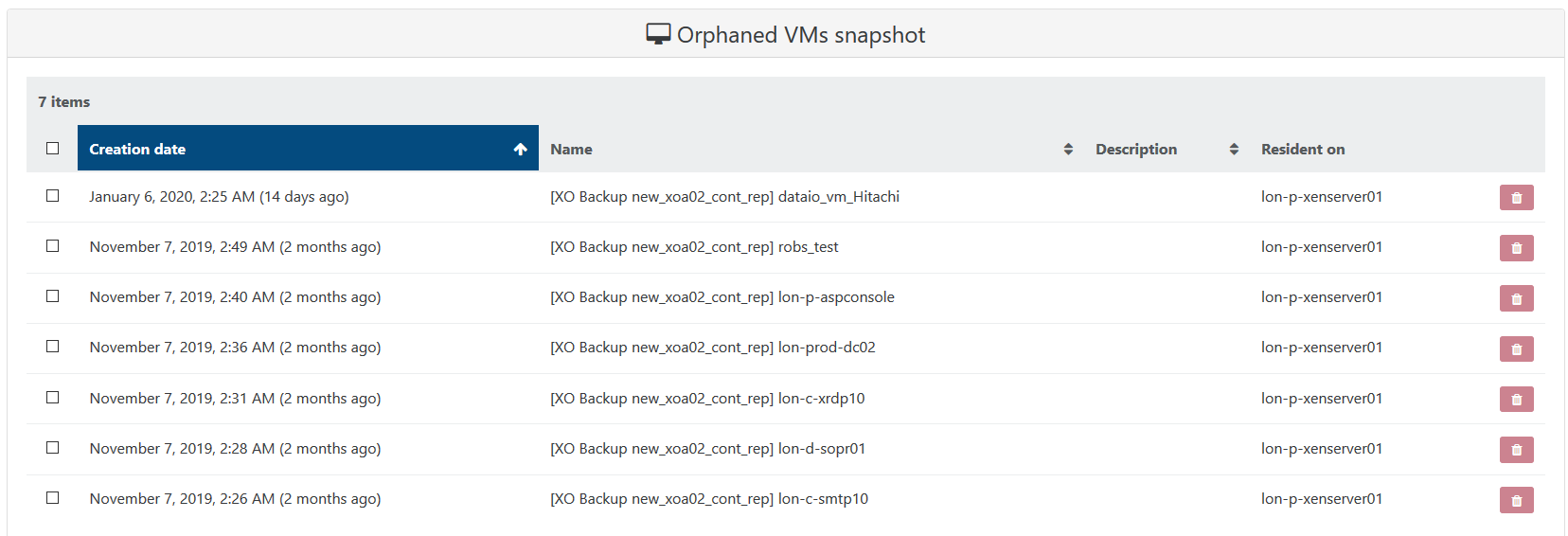

Hmm... it's asking me whether I really want to delete the orphaned VM snapshots.

I'm guessing I do?

-

There's no reason to keep orphaned VM snapshots.
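XO flags these in its UI. If you want to see roughly the same list from dom0, here is a minimal sketch. It assumes an "orphaned" VM snapshot is one whose `snapshot-of` reference points at a VM that no longer exists, and that `xe` prints such a dangling reference as `<not in database>`. Both are assumptions about how XO and the CLI behave, and `orphaned_vm_snapshots` is a hypothetical helper name, so review the output rather than feeding it straight into a destroy command.

```shell
# Hypothetical helper: print UUIDs of VM snapshots whose source VM is gone.
# Assumption: xe shows a dangling snapshot-of reference as "<not in database>".
orphaned_vm_snapshots() {
  local snap parent
  # --minimal prints a comma-separated list of snapshot UUIDs
  for snap in $(xe snapshot-list --minimal | tr ',' ' '); do
    parent=$(xe snapshot-param-get uuid="$snap" param-name=snapshot-of)
    if [ "$parent" = "<not in database>" ]; then
      echo "$snap"
    fi
  done
}
```

Usage from dom0: `orphaned_vm_snapshots`, then inspect each result with `xe snapshot-param-list uuid=<UUID>` before removing anything.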

-

Thanks Olivier!

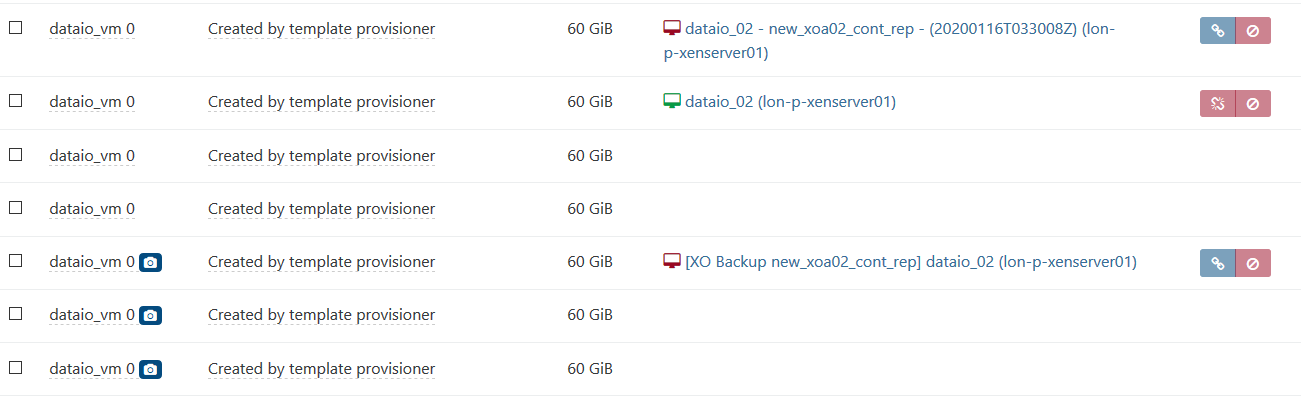

Now for my VDIs: I see I have multiple VDIs for my VMs.

For example, my "dataio" VM:

I have the main VM running, plus a continuous replication of the dataio VM.

Can I delete any of them? I seem to be racking up multiple VDIs of the same VM.

-

Continue by removing all orphaned VDIs.

-

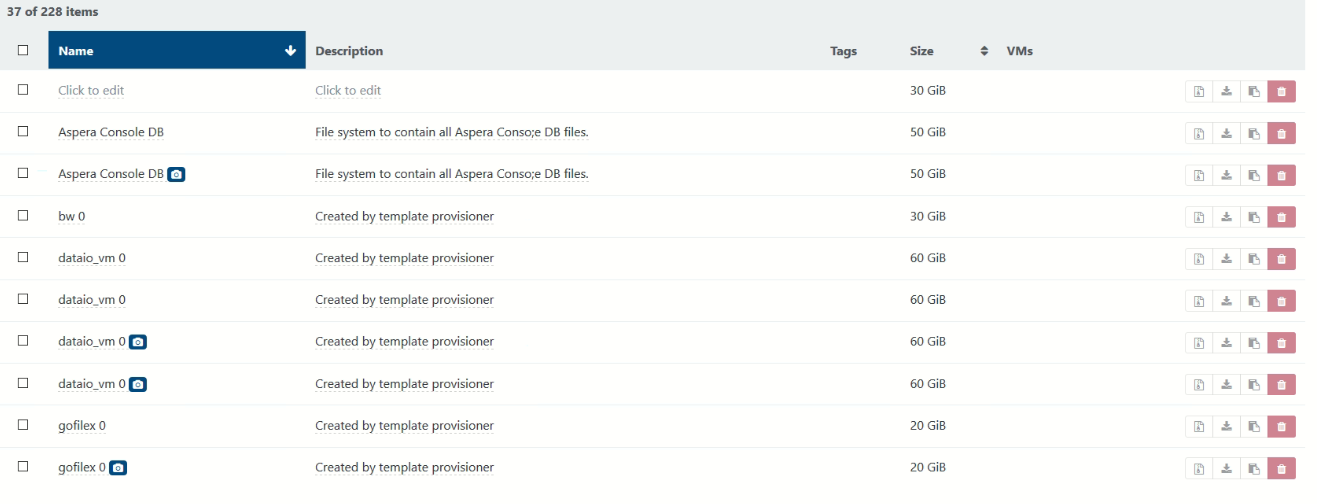

@olivierlambert But I can't see any more orphaned VDIs in XOA?

-

In the SR view, disk tab, bottom right, magnifying glass icon, select "Orphaned VDIs".

-

@olivierlambert You're amazing, Olivier! So I imagine I can delete all the orphaned VDIs?

-

Yes, as long as you haven't intentionally left a VDI disconnected from every VM, you can remove all of them.

-

I'm looking, and I see no VMs attached to the orphaned disks.

-

By definition, that's why they're called "orphaned".

The question is: did you deliberately disconnect some VDIs from some VMs yourself, or are they just here as leftovers from failed operations?

I think that in your case, you can remove them all.

-

Thanks Olivier. When I change the magnifying-glass filter to "type:!VDI-unmanaged", I see the actual live VM name and the backup VM listed under VMs, so I think it's safe to delete all the orphaned ones.

-

Yes, VDIs connected to a VM are by definition not orphaned. You don't want to remove those.

-

Just out of interest: when I change the magnifying-glass filter to "type:VDI-unmanaged", it lists "base copy" entries.

What are they?

-

They are the read-only parent of a disk. When you take a snapshot, it actually creates three disks: the read-only base copy, the new active disk, and the snapshot.

If you remove the snapshot, a coalesce will run to merge the active disk into the base copy and get back to the initial state: a single disk.
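To see that parent/child relationship yourself, a minimal sketch can walk a VDI's chain upward from dom0. It assumes a VHD-based SR (LVM, EXT or NFS) where XAPI records the parent's UUID under the `vhd-parent` key of the VDI's `sm-config`; that key is an assumption about those SR types, and `vdi_chain` is a hypothetical helper name.

```shell
# Hypothetical helper: print a VDI UUID, then each ancestor up the VHD chain.
# Assumption: the parent UUID lives in sm-config under the key vhd-parent.
vdi_chain() {
  local uuid=$1 depth=0
  # depth cap is only a safety net against a malformed (looping) chain
  while [ -n "$uuid" ] && [ "$depth" -lt 64 ]; do
    echo "$uuid"
    # vdi-param-get fails when the key is absent, i.e. at the top of the chain
    uuid=$(xe vdi-param-get uuid="$uuid" param-name=sm-config param-key=vhd-parent 2>/dev/null) || uuid=""
    depth=$((depth + 1))
  done
}
```

Running `vdi_chain <active VDI UUID>` on a disk with one snapshot should typically print the active disk followed by its read-only base copy, which `xe vdi-list` reports with `managed` set to `false`.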

-

So can I delete the "type:VDI-snapshot" ones?

-


- Please edit your post and use Markdown syntax for code/error blocks.

- Rescan your SR and try again. At worst, remove everything you can, leave it a while to coalesce, and wait before trying to remove it again.
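The rescan-and-wait advice can be scripted roughly as follows. Assumptions: on VHD-based SRs, `xe sr-scan` usually also wakes the storage garbage collector that performs the coalesce, and the storage manager logs that activity (along with the errors behind `SR_BACKEND_FAILURE_*` codes) to `/var/log/SMlog` in dom0. The function name `rescan_and_check` and the `SMLOG` override are hypothetical.

```shell
# Hypothetical helper: rescan an SR, then show recent coalesce lines from SMlog.
# Usage: rescan_and_check <SR UUID> [number of lines, default 20]
rescan_and_check() {
  local sr_uuid=$1 lines=${2:-20}
  local log=${SMLOG:-/var/log/SMlog}   # override SMLOG to read another file
  xe sr-scan uuid="$sr_uuid" || return 1
  # Case-insensitive match catches both "coalesce" and "Coalesce" entries
  grep -i 'coalesce' "$log" | tail -n "$lines"
}
```

Re-running it after a while shows whether the coalesce is progressing or failing, before you retry the `vdi.delete`.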

-

```
vdi.delete
{
  "id": "1778c579-65a5-48b3-82df-9558a4f6ff7f"
}
{
  "code": "SR_BACKEND_FAILURE_1200",
  "params": [
    "",
    "",
    ""
  ],
  "task": {
    "uuid": "3c72bd9a-36ec-01da-b1b5-0b19468f4532",
    "name_label": "Async.VDI.destroy",
    "name_description": "",
    "allowed_operations": [],
    "current_operations": {},
    "created": "20200120T19:23:41Z",
    "finished": "20200120T19:23:50Z",
    "status": "failure",
    "resident_on": "OpaqueRef:bb0f833a-b400-4862-9d3f-07f15f34e0f8",
    "progress": 1,
    "type": "<none/>",
    "result": "",
    "error_info": [
      "SR_BACKEND_FAILURE_1200",
      "",
      "",
      ""
    ],
    "other_config": {},
    "subtask_of": "OpaqueRef:NULL",
    "subtasks": [],
    "backtrace": "(((process"xapi @ lon-p-xenserver01")(filename lib/backtrace.ml)(line 210))((process"xapi @ lon-p-xenserver01")(filename ocaml/xapi/storage_access.ml)(line 31))((process"xapi @ lon-p-xenserver01")(filename ocaml/xapi/xapi_vdi.ml)(line 683))((process"xapi @ lon-p-xenserver01")(filename ocaml/xapi/message_forwarding.ml)(line 100))((process"xapi @ lon-p-xenserver01")(filename lib/xapi-stdext-pervasives/pervasiveext.ml)(line 24))((process"xapi @ lon-p-xenserver01")(filename ocaml/xapi/rbac.ml)(line 236))((process"xapi @ lon-p-xenserver01")(filename ocaml/xapi/server_helpers.ml)(line 83)))"
  },
  "message": "SR_BACKEND_FAILURE_1200(, , )",
  "name": "XapiError",
  "stack": "XapiError: SR_BACKEND_FAILURE_1200(, , )
    at Function.wrap (/xen-orchestra/packages/xen-api/src/_XapiError.js:16:11)
    at _default (/xen-orchestra/packages/xen-api/src/_getTaskResult.js:11:28)
    at Xapi._addRecordToCache (/xen-orchestra/packages/xen-api/src/index.js:812:37)
    at events.forEach.event (/xen-orchestra/packages/xen-api/src/index.js:833:13)
    at Array.forEach (<anonymous>)
    at Xapi._processEvents (/xen-orchestra/packages/xen-api/src/index.js:823:11)
    at /xen-orchestra/packages/xen-api/src/index.js:984:13
    at Generator.next (<anonymous>)
    at asyncGeneratorStep (/xen-orchestra/packages/xen-api/dist/index.js:58:103)
    at _next (/xen-orchestra/packages/xen-api/dist/index.js:60:194)
    at tryCatcher (/xen-orchestra/node_modules/bluebird/js/release/util.js:16:23)
    at Promise._settlePromiseFromHandler (/xen-orchestra/node_modules/bluebird/js/release/promise.js:547:31)
    at Promise._settlePromise (/xen-orchestra/node_modules/bluebird/js/release/promise.js:604:18)
    at Promise._settlePromise0 (/xen-orchestra/node_modules/bluebird/js/release/promise.js:649:10)
    at Promise._settlePromises (/xen-orchestra/node_modules/bluebird/js/release/promise.js:729:18)
    at _drainQueueStep (/xen-orchestra/node_modules/bluebird/js/release/async.js:93:12)
    at _drainQueue (/xen-orchestra/node_modules/bluebird/js/release/async.js:86:9)
    at Async._drainQueues (/xen-orchestra/node_modules/bluebird/js/release/async.js:102:5)
    at Immediate.Async.drainQueues (/xen-orchestra/node_modules/bluebird/js/release/async.js:15:14)
    at runCallback (timers.js:810:20)
    at tryOnImmediate (timers.js:768:5)
    at processImmediate [as _immediateCallback] (timers.js:745:5)"
}
```

-

I found this command:

`xe vm-disk-list vm=<VM name or UUID>`

I'm going to cross-reference its output with this:

`xe vdi-list sr-uuid=$sr_uuid params=uuid managed=true`

and delete any VDIs that don't match with:

`xe vdi-destroy uuid=<VDI UUID>`

Is this correct?
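The cross-referencing idea can be sketched in shell, with two deliberate changes flagged as assumptions. Instead of looping `xe vm-disk-list` over every VM, it collects the `vdi-uuid` of every VBD in the pool, which also covers VM snapshots and replica VMs (a per-VM `vm-disk-list` over regular VMs alone would wrongly flag snapshot disks as unused). It also assumes `--minimal` prints the requested field as a comma-separated list. `managed=true` keeps base copies out of the candidates. `set_difference` and `unreferenced_vdis` are hypothetical helper names; treat the output as a list to review, not a delete list.

```shell
# Print each line of file $1 that does not appear, as a whole line, in file $2.
set_difference() {
  # -F fixed strings, -x whole-line match, -v invert, -f read patterns from file.
  # grep exits 1 when it prints nothing, which is not an error here.
  grep -Fvxf "$2" "$1" || true
}

# Hypothetical helper: managed VDIs in one SR that no VBD (VM or snapshot) references.
unreferenced_vdis() {
  local sr_uuid=$1 tmp
  tmp=$(mktemp -d) || return 1
  # All managed VDIs in the SR, one UUID per line
  xe vdi-list sr-uuid="$sr_uuid" managed=true --minimal | tr ',' '\n' | sed '/^$/d' > "$tmp/all"
  # Every VDI UUID referenced by any VBD in the pool (empty CD drives add harmless noise)
  xe vbd-list params=vdi-uuid --minimal | tr ',' '\n' | sed '/^$/d' > "$tmp/in_use"
  set_difference "$tmp/all" "$tmp/in_use"
  rm -rf "$tmp"
}
```

For example, `unreferenced_vdis <SR UUID>` prints candidate UUIDs; check each one with `xe vdi-param-list uuid=<UUID>` before destroying it.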

Hmm... when I run `xe vdi-list`, I see I have a lot of base copies.

What are they all about?

-

OK Olivier,

as XOA isn't working, can I do it from the Xen host's CLI instead, like this?

`xe vdi-destroy uuid=<UUID of orphaned VDI>`
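From dom0, `xe vdi-destroy uuid=<UUID>` does remove a VDI. A slightly more cautious wrapper is sketched below as a hypothetical helper, not an official tool: it refuses to touch a VDI that still has a VBD or that is unmanaged (a base copy, which the garbage collector handles), and it only prints what it would do unless the hypothetical `CONFIRM=yes` variable is set.

```shell
# Hypothetical wrapper: destroy a VDI only if nothing references it.
# Dry run by default; set CONFIRM=yes to actually destroy.
safe_vdi_destroy() {
  local vdi=$1 vbds managed
  vbds=$(xe vbd-list vdi-uuid="$vdi" --minimal) || return 1
  if [ -n "$vbds" ]; then
    echo "refusing: $vdi still has VBD(s): $vbds" >&2
    return 1
  fi
  # Base copies are unmanaged (managed=false); leave them to the garbage collector
  managed=$(xe vdi-param-get uuid="$vdi" param-name=managed) || return 1
  if [ "$managed" != "true" ]; then
    echo "refusing: $vdi is not managed (likely a base copy)" >&2
    return 1
  fi
  if [ "${CONFIRM:-no}" = "yes" ]; then
    xe vdi-destroy uuid="$vdi"
  else
    echo "would run: xe vdi-destroy uuid=$vdi"
  fi
}
```

A dry run looks like `safe_vdi_destroy <VDI UUID>`; once the printed command looks right, `CONFIRM=yes safe_vdi_destroy <VDI UUID>` performs it.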