S3 / Wasabi Backup

-

A backup of a 130 GB Windows VM (size before zstd) to Wasabi failed during the upload:

Error: Part number must be an integer between 1 and 10000, inclusive

A quick Google turned up something about multipart chunk sizes ...

Anything I can do to help pinpoint this?
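For context on the limit named in that error, here is a rough sketch of the arithmetic. It assumes Wasabi follows the documented AWS S3 multipart limits (at most 10,000 parts, each between 5 MiB and 5 GiB), and the 5 MiB part size used below is only an illustrative guess, not the value XO actually used. The real upload size after zstd is also unknown; 130 GiB is taken as the worst case.

```python
MIB = 1024 ** 2
GIB = 1024 ** 3

MAX_PARTS = 10_000        # limit quoted in the error message
MIN_PART_SIZE = 5 * MIB   # documented AWS S3 minimum (assumed to apply to Wasabi)
MAX_PART_SIZE = 5 * GIB   # documented AWS S3 maximum (assumed to apply to Wasabi)


def parts_needed(total_bytes: int, part_size: int) -> int:
    """Number of parts required to upload total_bytes in part_size chunks."""
    return -(-total_bytes // part_size)  # integer ceiling division


def smallest_valid_part_size(total_bytes: int) -> int:
    """Smallest part size that keeps the upload within MAX_PARTS."""
    size = max(MIN_PART_SIZE, -(-total_bytes // MAX_PARTS))
    if size > MAX_PART_SIZE:
        raise ValueError("too large for a single multipart upload")
    return size


backup_bytes = 130 * GIB  # worst case: nothing saved by compression

# With a hypothetical fixed 5 MiB part size the upload needs 26624 parts,
# which is well past the 10,000-part limit.
print(parts_needed(backup_bytes, 5 * MIB))

# A part size of roughly 13.3 MiB or more would keep it within the limit.
print(smallest_valid_part_size(backup_bytes) / MIB)
```

If the uploader picks its part size before it knows the final stream size, a large export can run out of part numbers in exactly this way.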

-

Yes, @nraynaud is working on it

-

it should be fixed in this PR: https://github.com/vatesfr/xen-orchestra/pull/5242

You can test it if you build from the sources and pull the PR.

-

@nraynaud First test of it with a small VM... the only issue I had was the folder section of the remote.

I tried to leave it blank, but it did not let me. So I put a value in but did not create the folder inside the bucket; I assumed XOA would create it if it did not exist.

The backup failed because of this, with a pretty lame (non-descriptive) "transfer interrupted" message.

I created the folder and was then able to successfully back up a test VM.

I am currently trying a variety of VMs ranging from 20 GB to 1 TB.

-

Hmm, failed.

{ "data": { "mode": "delta", "reportWhen": "failure" }, "id": "1599934093646", "jobId": "5811c71e-0332-44f1-8733-92e684850d60", "jobName": "Wasabis3", "message": "backup", "scheduleId": "74b902af-e0c1-409b-ba88-d1c874c2da5d", "start": 1599934093646, "status": "interrupted", "tasks": [ { "data": { "type": "VM", "id": "1c6ff00c-862a-09a9-80bb-16c077982853" }, "id": "1599934093652", "message": "Starting backup of CDDev. (5811c71e-0332-44f1-8733-92e684850d60)", "start": 1599934093652, "status": "interrupted", "tasks": [ { "id": "1599934093655", "message": "snapshot", "start": 1599934093655, "status": "success", "end": 1599934095529, "result": "695149ec-94c3-a8b6-4670-bcb8b1865487" }, { "id": "1599934095532", "message": "add metadata to snapshot", "start": 1599934095532, "status": "success", "end": 1599934095547 }, { "id": "1599934095728", "message": "waiting for uptodate snapshot record", "start": 1599934095728, "status": "success", "end": 1599934095935 }, { "id": "1599934096010", "message": "start snapshot export", "start": 1599934096010, "status": "success", "end": 1599934096010 }, { "data": { "id": "9c6a4527-c2d6-4346-a504-4b0baaa18972", "isFull": true, "type": "remote" }, "id": "1599934096011", "message": "export", "start": 1599934096011, "status": "interrupted", "tasks": [ { "id": "1599934096104", "message": "transfer", "start": 1599934096104, "status": "interrupted" } ] } ] }, { "data": { "type": "VM", "id": "413a67e3-b505-29d1-e1b7-a848df184431" }, "id": "1599934093655:0", "message": "Starting backup of APC Powerchute. 
(5811c71e-0332-44f1-8733-92e684850d60)", "start": 1599934093655, "status": "interrupted", "tasks": [ { "id": "1599934093656", "message": "snapshot", "start": 1599934093656, "status": "success", "end": 1599934110591, "result": "ee4b2001-1324-fb02-07b4-bded6e5e19cb" }, { "id": "1599934110594", "message": "add metadata to snapshot", "start": 1599934110594, "status": "success", "end": 1599934111133 }, { "id": "1599934111997", "message": "waiting for uptodate snapshot record", "start": 1599934111997, "status": "success", "end": 1599934112783 }, { "id": "1599934118449", "message": "start snapshot export", "start": 1599934118449, "status": "success", "end": 1599934118449 }, { "data": { "id": "9c6a4527-c2d6-4346-a504-4b0baaa18972", "isFull": false, "type": "remote" }, "id": "1599934118450", "message": "export", "start": 1599934118450, "status": "interrupted", "tasks": [ { "id": "1599934120299", "message": "transfer", "start": 1599934120299, "status": "interrupted" } ] } ] }, { "data": { "type": "VM", "id": "e18cbbbb-9419-880c-8ac7-81c5449cf9bd" }, "id": "1599934093656:0", "message": "Starting backup of Brian-CDQB. 
(5811c71e-0332-44f1-8733-92e684850d60)", "start": 1599934093656, "status": "interrupted", "tasks": [ { "id": "1599934093657", "message": "snapshot", "start": 1599934093657, "status": "success", "end": 1599934095234, "result": "98cdf926-55cc-7c2f-e728-96622bee7956" }, { "id": "1599934095237", "message": "add metadata to snapshot", "start": 1599934095237, "status": "success", "end": 1599934095255 }, { "id": "1599934095431", "message": "waiting for uptodate snapshot record", "start": 1599934095431, "status": "success", "end": 1599934095635 }, { "id": "1599934095762", "message": "start snapshot export", "start": 1599934095762, "status": "success", "end": 1599934095763 }, { "data": { "id": "9c6a4527-c2d6-4346-a504-4b0baaa18972", "isFull": true, "type": "remote" }, "id": "1599934095763:0", "message": "export", "start": 1599934095763, "status": "interrupted", "tasks": [ { "id": "1599934095825", "message": "transfer", "start": 1599934095825, "status": "interrupted" } ] } ] }, { "data": { "type": "VM", "id": "0c426b2b-c24c-9532-32f0-0ce81caa6aed" }, "id": "1599934093657:0", "message": "Starting backup of testspeed2. 
(5811c71e-0332-44f1-8733-92e684850d60)", "start": 1599934093657, "status": "interrupted", "tasks": [ { "id": "1599934093658", "message": "snapshot", "start": 1599934093658, "status": "success", "end": 1599934096298, "result": "0ec43ca8-52b2-fdd3-5f1c-7182abac7cbe" }, { "id": "1599934096302", "message": "add metadata to snapshot", "start": 1599934096302, "status": "success", "end": 1599934096316 }, { "id": "1599934096528", "message": "waiting for uptodate snapshot record", "start": 1599934096528, "status": "success", "end": 1599934096737 }, { "id": "1599934096952", "message": "start snapshot export", "start": 1599934096952, "status": "success", "end": 1599934096952 }, { "data": { "id": "9c6a4527-c2d6-4346-a504-4b0baaa18972", "isFull": true, "type": "remote" }, "id": "1599934096952:1", "message": "export", "start": 1599934096952, "status": "interrupted", "tasks": [ { "id": "1599934097196", "message": "transfer", "start": 1599934097196, "status": "interrupted" } ] } ] }, { "data": { "type": "VM", "id": "dfb231b8-4c45-528a-0e1b-2e16e2624a40" }, "id": "1599934093658:0", "message": "Starting backup of AFGUpdateTest. 
(5811c71e-0332-44f1-8733-92e684850d60)", "start": 1599934093658, "status": "interrupted", "tasks": [ { "id": "1599934093658:1", "message": "snapshot", "start": 1599934093658, "status": "success", "end": 1599934099064, "result": "1468a52f-a5a2-64d3-072e-5c35e9c70ec5" }, { "id": "1599934099068", "message": "add metadata to snapshot", "start": 1599934099068, "status": "success", "end": 1599934099453 }, { "id": "1599934100070", "message": "waiting for uptodate snapshot record", "start": 1599934100070, "status": "success", "end": 1599934100528 }, { "id": "1599934101178", "message": "start snapshot export", "start": 1599934101178, "status": "success", "end": 1599934101179 }, { "data": { "id": "9c6a4527-c2d6-4346-a504-4b0baaa18972", "isFull": true, "type": "remote" }, "id": "1599934101188", "message": "export", "start": 1599934101188, "status": "interrupted", "tasks": [ { "id": "1599934101562", "message": "transfer", "start": 1599934101562, "status": "interrupted" } ] } ] }, { "data": { "type": "VM", "id": "34cd5a50-61ab-c232-1254-93b52666a52c" }, "id": "1599934093658:2", "message": "Starting backup of CentOS8-Base. 
(5811c71e-0332-44f1-8733-92e684850d60)", "start": 1599934093658, "status": "interrupted", "tasks": [ { "id": "1599934093659", "message": "snapshot", "start": 1599934093659, "status": "success", "end": 1599934101677, "result": "0a1287c8-a700-35e9-47dd-9a337d18719a" }, { "id": "1599934101681", "message": "add metadata to snapshot", "start": 1599934101681, "status": "success", "end": 1599934102030 }, { "id": "1599934102730", "message": "waiting for uptodate snapshot record", "start": 1599934102730, "status": "success", "end": 1599934103247 }, { "id": "1599934103759", "message": "start snapshot export", "start": 1599934103759, "status": "success", "end": 1599934103760 }, { "data": { "id": "9c6a4527-c2d6-4346-a504-4b0baaa18972", "isFull": true, "type": "remote" }, "id": "1599934103760:0", "message": "export", "start": 1599934103760, "status": "interrupted", "tasks": [ { "id": "1599934104329", "message": "transfer", "start": 1599934104329, "status": "interrupted" } ] } ] }, { "data": { "type": "VM", "id": "1c157c0c-e62c-d38a-e925-30b3cf914548" }, "id": "1599934093659:0", "message": "Starting backup of Oro. 
(5811c71e-0332-44f1-8733-92e684850d60)", "start": 1599934093659, "status": "interrupted", "tasks": [ { "id": "1599934093659:1", "message": "snapshot", "start": 1599934093659, "status": "success", "end": 1599934106102, "result": "5b9054b8-24db-5dd8-378a-a667089e84d4" }, { "id": "1599934106109", "message": "add metadata to snapshot", "start": 1599934106109, "status": "success", "end": 1599934106638 }, { "id": "1599934107407", "message": "waiting for uptodate snapshot record", "start": 1599934107407, "status": "success", "end": 1599934108110 }, { "id": "1599934109020", "message": "start snapshot export", "start": 1599934109020, "status": "success", "end": 1599934109021 }, { "data": { "id": "9c6a4527-c2d6-4346-a504-4b0baaa18972", "isFull": true, "type": "remote" }, "id": "1599934109022", "message": "export", "start": 1599934109022, "status": "interrupted", "tasks": [ { "id": "1599934109778", "message": "transfer", "start": 1599934109778, "status": "interrupted" } ] } ] }, { "data": { "type": "VM", "id": "3719b724-306f-d1ee-36d1-ff7ce584e145" }, "id": "1599934093659:2", "message": "Starting backup of AFGDevQB (20191210T050003Z). 
(5811c71e-0332-44f1-8733-92e684850d60)", "start": 1599934093659, "status": "interrupted", "tasks": [ { "id": "1599934093659:3", "message": "snapshot", "start": 1599934093659, "status": "success", "end": 1599934112949, "result": "848a2fef-fc0c-8d3e-242a-7640b14d20e7" }, { "id": "1599934112953", "message": "add metadata to snapshot", "start": 1599934112953, "status": "success", "end": 1599934113706 }, { "id": "1599934114436", "message": "waiting for uptodate snapshot record", "start": 1599934114436, "status": "success", "end": 1599934115269 }, { "id": "1599934116362", "message": "start snapshot export", "start": 1599934116362, "status": "success", "end": 1599934116362 }, { "data": { "id": "9c6a4527-c2d6-4346-a504-4b0baaa18972", "isFull": true, "type": "remote" }, "id": "1599934116362:1", "message": "export", "start": 1599934116362, "status": "interrupted", "tasks": [ { "id": "1599934117230", "message": "transfer", "start": 1599934117230, "status": "interrupted" } ] } ] }, { "data": { "type": "VM", "id": "d2475530-a43d-8e70-2eff-fedfa96541e1" }, "id": "1599934093659:4", "message": "Starting backup of Wickermssql. 
(5811c71e-0332-44f1-8733-92e684850d60)", "start": 1599934093659, "status": "interrupted", "tasks": [ { "id": "1599934093660", "message": "snapshot", "start": 1599934093660, "status": "success", "end": 1599934114621, "result": "e90b1a2d-8c22-2159-ec5e-2a4f39040562" }, { "id": "1599934114627", "message": "add metadata to snapshot", "start": 1599934114627, "status": "success", "end": 1599934115347 }, { "id": "1599934116269", "message": "waiting for uptodate snapshot record", "start": 1599934116269, "status": "success", "end": 1599934116954 }, { "id": "1599934118049", "message": "start snapshot export", "start": 1599934118049, "status": "success", "end": 1599934118049 }, { "data": { "id": "9c6a4527-c2d6-4346-a504-4b0baaa18972", "isFull": true, "type": "remote" }, "id": "1599934118050", "message": "export", "start": 1599934118050, "status": "interrupted", "tasks": [ { "id": "1599934118988", "message": "transfer", "start": 1599934118988, "status": "interrupted" } ] } ] }, { "data": { "type": "VM", "id": "e63d7aba-9c82-7278-c46f-a4863fd2914d" }, "id": "1599934093660:0", "message": "Starting backup of XOA-FULL. 
(5811c71e-0332-44f1-8733-92e684850d60)", "start": 1599934093660, "status": "interrupted", "tasks": [ { "id": "1599934093660:1", "message": "snapshot", "start": 1599934093660, "status": "success", "end": 1599934118205, "result": "1c542cd1-8a84-7aec-d0c3-3c7e25daf216" }, { "id": "1599934118210", "message": "add metadata to snapshot", "start": 1599934118210, "status": "success", "end": 1599934118914 }, { "id": "1599934119851", "message": "waiting for uptodate snapshot record", "start": 1599934119851, "status": "success", "end": 1599934120624 }, { "id": "1599934121964", "message": "start snapshot export", "start": 1599934121964, "status": "success", "end": 1599934121965 }, { "data": { "id": "9c6a4527-c2d6-4346-a504-4b0baaa18972", "isFull": true, "type": "remote" }, "id": "1599934121966", "message": "export", "start": 1599934121966, "status": "interrupted", "tasks": [ { "id": "1599934124045", "message": "transfer", "start": 1599934124045, "status": "interrupted" } ] } ] } ] }

-

@cdbessig Thanks.

I need to investigate the bug further.
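For anyone reading a job log like the one above, a small helper can list which nested task actually stopped. This is only a sketch: the field names (`message`, `status`, `tasks`) are taken from the pasted log, the function name is made up for illustration, and the sample is a trimmed-down version of one VM entry.

```python
import json
from typing import Iterator


def unfinished_tasks(task: dict, path: tuple = ()) -> Iterator[str]:
    """Yield 'a > b > c' paths for the deepest tasks whose status is not 'success'."""
    here = path + (task.get("message", "?"),)
    failing = [t for t in task.get("tasks", []) if t.get("status") != "success"]
    if task.get("status") != "success" and not failing:
        # Nothing deeper to blame, so this task is where the job stopped.
        yield " > ".join(here)
    for child in failing:
        yield from unfinished_tasks(child, here)


# Trimmed-down sample modelled on the log above (one VM only).
sample = json.loads("""
{ "message": "backup", "status": "interrupted", "tasks": [
  { "message": "Starting backup of CDDev.", "status": "interrupted", "tasks": [
    { "message": "snapshot", "status": "success" },
    { "message": "export", "status": "interrupted", "tasks": [
      { "message": "transfer", "status": "interrupted" } ] } ] } ] }
""")

for line in unfinished_tasks(sample):
    print(line)
```

On the full log this would show that every VM got through the snapshot steps and stopped in `export > transfer`.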

About the folder: are you using AWS S3 or something else? S3 normally doesn't need a folder to be created; you can just write straight to wherever you want, and I was wondering whether "S3-compatible" services emulate this behavior.

-

I am using Wasabi

-

I want to report I was able to make a backup to Wasabi S3 like storage via native XO S3 beta option. This is amazing for homelabbers like me who don't have access to other resilient storage. Thank you!

-

I'm also running into the same "Interrupted" message, with VMs larger than about 7 GB (this is not a hard limit, just what I've tested). I've tried with zstd compression and with none; both end up "interrupted."

-

Please upgrade to the latest version; this should be solved now.

-

Updated today. Here's the snippet from syslog.

Oct 14 14:42:00 chaz xo-server[101910]: [load-balancer]Execute plans!
Oct 14 14:43:00 chaz xo-server[101910]: [load-balancer]Execute plans!
Oct 14 14:44:00 chaz xo-server[101910]: [load-balancer]Execute plans!
Oct 14 14:44:16 chaz xo-server[101910]: 2020-10-14T21:44:16.127Z xo:main INFO + WebSocket connection (::ffff:10.0.0.15)
Oct 14 14:44:59 chaz xo-server[101910]: 2020-10-14T21:44:59.644Z xo:xapi DEBUG Snapshotting VM Calculon as [XO Backup Calculon-Onetime] Calculon
Oct 14 14:45:00 chaz xo-server[101910]: [load-balancer]Execute plans!
Oct 14 14:45:02 chaz xo-server[101910]: 2020-10-14T21:45:02.694Z xo:xapi DEBUG Deleting VM [XO Backup Calculon-Onetime] Calculon
Oct 14 14:45:02 chaz xo-server[101910]: 2020-10-14T21:45:02.916Z xo:xapi DEBUG Deleting VDI OpaqueRef:67b407aa-14a4-4452-9d7c-852b645bbd90
Oct 14 14:46:01 chaz xo-server[101910]: [load-balancer]Execute plans!
Oct 14 14:46:59 chaz xo-server[101910]: _watchEvents TimeoutError: operation timed out
Oct 14 14:46:59 chaz xo-server[101910]: at Promise.call (/opt/xen-orchestra/node_modules/promise-toolbox/timeout.js:13:16)
Oct 14 14:46:59 chaz xo-server[101910]: at Xapi._call (/opt/xen-orchestra/packages/xen-api/src/index.js:666:37)
Oct 14 14:46:59 chaz xo-server[101910]: at Xapi._watchEvents (/opt/xen-orchestra/packages/xen-api/src/index.js:1012:31)
Oct 14 14:46:59 chaz xo-server[101910]: at runMicrotasks (<anonymous>)
Oct 14 14:46:59 chaz xo-server[101910]: at processTicksAndRejections (internal/process/task_queues.js:97:5) {
Oct 14 14:46:59 chaz xo-server[101910]: call: {
Oct 14 14:46:59 chaz xo-server[101910]: method: 'event.from',
Oct 14 14:46:59 chaz xo-server[101910]: params: [ [Array], '00000000000012051192,00000000000011907676', 60.1 ]
Oct 14 14:46:59 chaz xo-server[101910]: }
Oct 14 14:46:59 chaz xo-server[101910]: }
Oct 14 14:47:00 chaz xo-server[101910]: [load-balancer]Execute plans!
Oct 14 14:48:05 chaz xo-server[101910]: [load-balancer]Execute plans!
Oct 14 14:48:06 chaz xo-server[101910]: terminate called after throwing an instance of 'std::bad_alloc'
Oct 14 14:48:06 chaz xo-server[101910]: what(): std::bad_alloc
Oct 14 14:48:06 chaz systemd[1]: xo-server.service: Main process exited, code=killed, status=6/ABRT
Oct 14 14:48:06 chaz systemd[1]: xo-server.service: Failed with result 'signal'.
Oct 14 14:48:07 chaz systemd[1]: xo-server.service: Service RestartSec=100ms expired, scheduling restart.
Oct 14 14:48:07 chaz systemd[1]: xo-server.service: Scheduled restart job, restart counter is at 1.
Oct 14 14:48:07 chaz systemd[1]: Stopped XO Server.

Transfer (Interrupted) started at 14:45:03.

{ "data": { "mode": "full", "reportWhen": "always" }, "id": "1602711899558", "jobId": "c686003e-d71a-47f1-950d-ca57afe31923", "jobName": "Calculon-Onetime", "message": "backup", "scheduleId": "9d9c2499-b476-4e82-a9a5-505032da49a7", "start": 1602711899558, "status": "interrupted", "tasks": [ { "data": { "type": "VM", "id": "8e56876a-e4cf-6583-d8de-36dba6dfad9e" }, "id": "1602711899609", "message": "Starting backup of Calculon. (c686003e-d71a-47f1-950d-ca57afe31923)", "start": 1602711899609, "status": "interrupted", "tasks": [ { "id": "1602711899614", "message": "snapshot", "start": 1602711899614, "status": "success", "end": 1602711902288, "result": "ae8968f8-01bb-2316-4d45-9a78c42c4e95" }, { "id": "1602711902294", "message": "add metadata to snapshot", "start": 1602711902294, "status": "success", "end": 1602711902308 }, { "id": "1602711903334", "message": "waiting for uptodate snapshot record", "start": 1602711903334, "status": "success", "end": 1602711903545 }, { "id": "1602711903548", "message": "start VM export", "start": 1602711903548, "status": "success", "end": 1602711903571 }, { "data": { "id": "8f721d6a-0d67-4c67-a860-e648bed3a458", "type": "remote" }, "id": "1602711903573", "message": "export", "start": 1602711903573, "status": "interrupted", "tasks": [ { "id": "1602711905594", "message": "transfer", "start": 1602711905594, "status": "interrupted" } ] } ] } ] }

-

Your `xo-server` process crashed, so it's expected that your backup is interrupted.

Because you are using it from sources, I can't be sure about the origin of the issue, but here I have the feeling you ran out of memory and the Node process crashed.
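As a rough illustration of how that reading follows from the syslog, the sketch below scans log lines for the three signals visible in the paste: a C++ `std::bad_alloc` (a failed native memory allocation), the `status=6/ABRT` exit, and the systemd restart. The pattern labels and the function name are made up for this example, and a match only supports the out-of-memory hypothesis; it does not prove it.

```python
import re

# Labels are illustrative; the patterns are strings that appear in the pasted syslog.
CRASH_PATTERNS = {
    "native allocation failure": re.compile(r"std::bad_alloc"),
    "process aborted": re.compile(r"status=6/ABRT"),
    "service restarted": re.compile(r"Scheduled restart job"),
}


def crash_signals(lines):
    """Return (label, line) pairs for every log line matching a crash pattern."""
    hits = []
    for line in lines:
        for label, pattern in CRASH_PATTERNS.items():
            if pattern.search(line):
                hits.append((label, line.strip()))
    return hits


# Lines copied from the syslog excerpt earlier in the thread.
sample = [
    "Oct 14 14:48:05 chaz xo-server[101910]: [load-balancer]Execute plans!",
    "Oct 14 14:48:06 chaz xo-server[101910]: terminate called after throwing an instance of 'std::bad_alloc'",
    "Oct 14 14:48:06 chaz systemd[1]: xo-server.service: Main process exited, code=killed, status=6/ABRT",
    "Oct 14 14:48:07 chaz systemd[1]: xo-server.service: Scheduled restart job, restart counter is at 1.",
]

for label, line in crash_signals(sample):
    print(f"{label}: {line}")
```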

-

Thanks for the hint. The upload still eventually times out now (after 12000ms of inactivity somewhere), and I still have sporadic TimeOutErrors (see above) during the transfer.

However, the `xo-server` process doesn't obviously crash anymore after I increased VM memory from 3GB to 4GB. I see that XOA defaults to 2GB -- what's recommended at this point (~30 VMs, 3 hosts)?

With either 3GB or 4GB, `top` shows the `node` process taking ~90% of memory during a transfer. I wonder if it's buffering the entire upload chunk in memory?

With that in mind, I increased to 5.5GB, since the largest upload chunk should be 5GB. And it completed the upload successfully, though still using 90% memory throughout the process. This ended up being a 6GB upload, after zstd.

-

It shouldn't buffer anything. Also, increasing VM memory won't change the Node process memory. The cache is used by the system because there's RAM available, but what matters is the Node memory. See https://xen-orchestra.com/docs/troubleshooting.html#memory
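To make the cache-versus-process distinction concrete, here is a small sketch that parses text in the Linux `/proc/meminfo` format and subtracts buffers and page cache from the "used" figure. The sample numbers are invented for illustration and are not measurements from this thread; the formula is the classic approximation and ignores finer details such as reclaimable slab memory.

```python
def parse_meminfo(text: str) -> dict:
    """Parse '/proc/meminfo'-style text into {field: value_in_kB}."""
    values = {}
    for line in text.splitlines():
        name, _, rest = line.partition(":")
        fields = rest.split()
        if fields:
            values[name.strip()] = int(fields[0])
    return values


def used_excluding_cache_kb(info: dict) -> int:
    """Memory in use by processes, not counting buffers and page cache (approximate)."""
    return info["MemTotal"] - info["MemFree"] - info["Buffers"] - info["Cached"]


# Hypothetical values for a 4 GB VM in the middle of a large transfer.
SAMPLE = """\
MemTotal:        4039000 kB
MemFree:          150000 kB
Buffers:           90000 kB
Cached:          2400000 kB
"""

info = parse_meminfo(SAMPLE)
print("looks used:   ", info["MemTotal"] - info["MemFree"], "kB")
print("actually used:", used_excluding_cache_kb(info), "kB")
```

On a real system the same functions can be fed the contents of `/proc/meminfo`; a large gap between the two figures means the kernel is caching file data rather than a process holding the memory.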

-

Again, thanks for the help with this.

Whether or not the node process is actually using that much (I've been reading that by default, it maxes around 1.4 GB, but I just increased that with

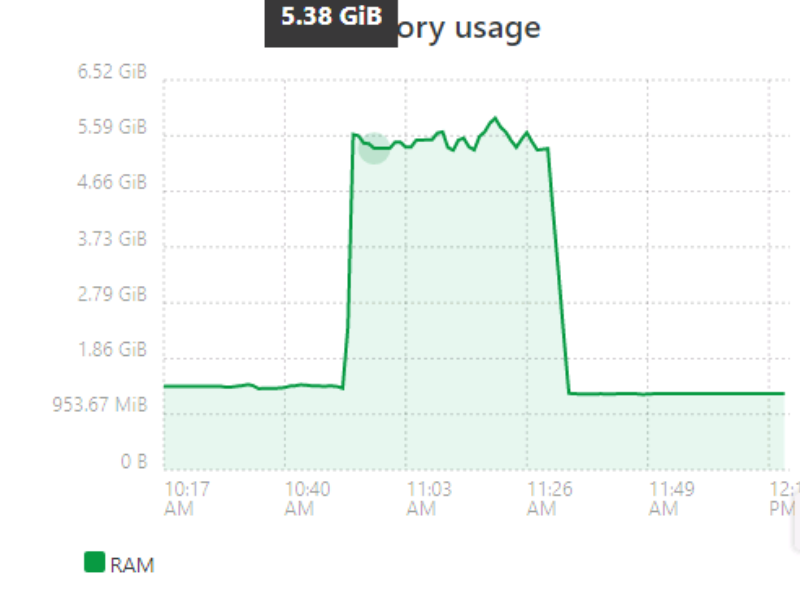

--max-old-space-size=2560), larger S3 backups/transfers are still only successful if I increase XO VM memory to > 5 GB.A recent backup run showed overall usage ballooned to 5.5+ GB during the backup, and then went back to ~1.4GB afterwards.

I don't know if this is intended behavior or if you want to finetune it later, but leaving the VM at 6 GB works for me.

-

It shouldn't be the case. Are you using XOA or XO from the sources?

-

@olivierlambert From sources. Latest pull was last Friday, so 5.68.0/5.72.0.

Memory usage is relatively stable around 1.4 GB (most of this morning, with `--max-old-space-size=2560`), balloons during an S3 backup job, and then goes back down to 1.4 GB when the transfer is complete.

edit: The above was a backup without compression.

-

OK, DL'ed/Registered XOA, bumped it to 6 GB just in case (VM only, not the node process).

Updated to XOA latest channel, 5.51.1 (5.68.0/5.72.0). (p.s. thanks for the config backup/restore function. Needed to restart the VM or xo-server, but retained my S3 remote settings.)

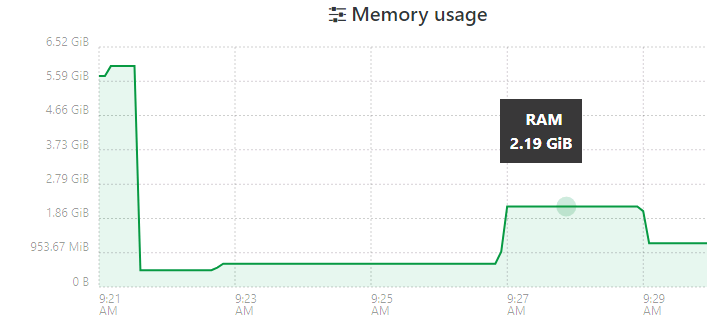

First is a pfSense VM, ~600 MB after zstd. The initial 5+GB usage is VM bootup.

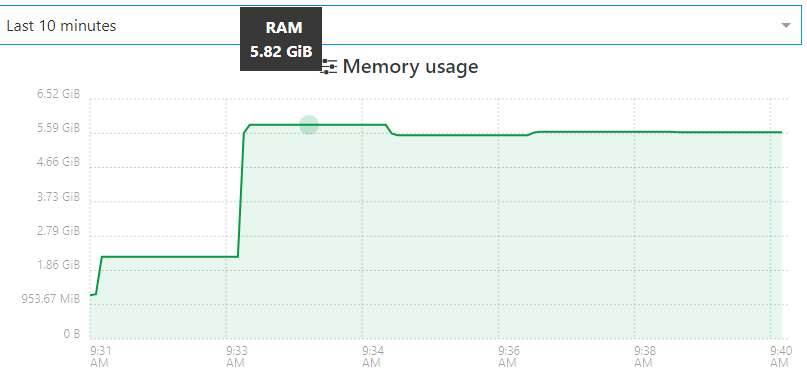

Next is the same VM that I used for the previous tests, ~7GB after zstd. The job started around 9:30, where the initial ramp-up occurred (during snapshot and transfer start). Then it jumped further to 5+ GB.

That's about as clear as I can get in the 10 minute window. It finished, dropped down to 3.5GB, and then eventually back to 1.4GB.

-

@klou Did you try it without increasing the memory in XOA?