Backup solutions for XCP-ng

-

@cg Hi, I'm working on the backups and can add some details:

- Compression is already enabled with the new VHD storage system (first on S3, now for all backup remotes). It uses brotli by default; gzip is also possible, but it's slower and less efficient. The compression level can be configured.

- Deduplication is in our backlog.

- Backups can be processed in parallel, and with the new VHD storage, VHD blocks are uploaded in parallel (8 by default, configurable).

- Delta backup merging is done in parallel (2 by default, configurable).

- We're working on using a Network Block Device (NBD) server to read the data from the host in parallel, with the goal of speeding up backups and offloading dom0.
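Here is a minimal TypeScript sketch of the upload side described in the list above (XO itself is a Node.js application). It splits a disk into 2 MB blocks and brotli-compresses each one at a chosen quality with Node's built-in `zlib`. It then uploads the blocks with a fixed number in flight, which is the role an upload-concurrency setting plays. This is not XO's code: `uploadDisk`, `mapWithConcurrency` and the `Uploader` callback are hypothetical names, and the in-memory "remote" in the demo stands in for S3 or a file share.

```typescript
import { brotliCompress, brotliDecompressSync, constants } from "node:zlib";

// 2 MB blocks (the block size mentioned later in the thread).
const BLOCK_SIZE = 2 * 1024 * 1024;

// Brotli-compress one block using zlib's async API (runs on the libuv thread
// pool). Quality ranges from BROTLI_MIN_QUALITY (0) to BROTLI_MAX_QUALITY (11).
function compressBlock(block: Buffer, quality: number): Promise<Buffer> {
  return new Promise((resolve, reject) => {
    brotliCompress(
      block,
      { params: { [constants.BROTLI_PARAM_QUALITY]: quality } },
      (err, result) => (err ? reject(err) : resolve(result)),
    );
  });
}

// Run `task` over `items` with at most `limit` tasks in flight; results keep
// input order regardless of completion order.
async function mapWithConcurrency<T, R>(
  items: readonly T[],
  limit: number,
  task: (item: T, index: number) => Promise<R>,
): Promise<R[]> {
  const results = new Array<R>(items.length);
  let next = 0; // next unclaimed index; safe to share, JS runs one callback at a time
  const worker = async (): Promise<void> => {
    while (next < items.length) {
      const i = next++;
      results[i] = await task(items[i], i);
    }
  };
  const poolSize = Math.max(1, Math.min(limit, items.length));
  await Promise.all(Array.from({ length: poolSize }, () => worker()));
  return results;
}

// Hypothetical uploader: stands in for an S3 PUT or a file write on an NFS/SMB remote.
type Uploader = (key: string, data: Buffer) => Promise<void>;

// Split a disk image into blocks, compress each, and upload them with a bounded
// number of concurrent tasks. Returns the total compressed size in bytes.
async function uploadDisk(
  disk: Buffer,
  upload: Uploader,
  concurrency: number,
  quality = constants.BROTLI_MIN_QUALITY,
): Promise<number> {
  const count = Math.ceil(disk.length / BLOCK_SIZE);
  const indices = Array.from({ length: count }, (_, i) => i);
  const sizes = await mapWithConcurrency(indices, concurrency, async (i) => {
    const block = disk.subarray(i * BLOCK_SIZE, (i + 1) * BLOCK_SIZE);
    const compressed = await compressBlock(block, quality);
    await upload(`blocks/${i}`, compressed);
    return compressed.length;
  });
  return sizes.reduce((sum, n) => sum + n, 0);
}

// Demo: a fake in-memory remote that records the peak number of concurrent uploads.
async function demo(): Promise<void> {
  const stored = new Map<string, Buffer>();
  let inFlight = 0;
  let peak = 0;
  const fakeUpload: Uploader = async (key, data) => {
    inFlight++;
    peak = Math.max(peak, inFlight);
    await new Promise<void>((resolve) => setTimeout(() => resolve(), 5));
    stored.set(key, data);
    inFlight--;
  };
  const disk = Buffer.alloc(10 * BLOCK_SIZE, "xcp-ng "); // 20 MB of compressible data
  const total = await uploadDisk(disk, fakeUpload, 4);
  const first = brotliDecompressSync(stored.get("blocks/0")!);
  console.log({ blocks: stored.size, total, peak, roundTripOk: first.equals(disk.subarray(0, BLOCK_SIZE)) });
}

demo();
```

A pool like this caps memory use and open connections at roughly `concurrency` blocks at a time. Merges could be bounded the same way by passing a smaller limit.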

-

@florent Great info. Are there any metrics on how long merge operations take? That would help avoid bottlenecks.

-

@Forza

We're currently building a lab that will make benchmarking easier. The latest version of the merge operation only renames blocks and deletes unused ones (blocks are 2 MB, not configurable for now). There's no data copy, and thus no data transfer between the remote and XO.
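Here is a rough model of the rename-based merge described above. It assumes each backup stores a disk as a directory of numbered block objects. This TypeScript sketch is illustrative only. `FakeRemote`, `DiskDir`, `mergeByRename`, the key layout and the merge direction (newer delta folded into the older backup) are all assumptions, not XO's actual implementation. The point it shows is that the merge only issues renames and deletes, so no block contents pass through XO.

```typescript
// Hypothetical in-memory stand-in for a backup remote: object key -> contents.
// Block contents are short strings here instead of real 2 MB buffers.
class FakeRemote {
  readonly objects = new Map<string, string>();
  renames = 0;
  deletes = 0;

  put(key: string, data: string): void {
    this.objects.set(key, data);
  }

  // Move an object to a new key; its contents are never read by the caller.
  rename(from: string, to: string): void {
    const data = this.objects.get(from);
    if (data === undefined) throw new Error(`no such block: ${from}`);
    this.objects.delete(from);
    this.objects.set(to, data);
    this.renames++;
  }

  delete(key: string): void {
    this.objects.delete(key);
    this.deletes++;
  }
}

// A disk in one backup: its directory name plus the block indexes it holds.
interface DiskDir {
  name: string;
  blocks: Set<number>;
}

const blockKey = (dir: string, index: number): string => `${dir}/blocks/${index}`;

// Merge a newer delta (`child`) into the older backup it depends on (`parent`).
// For every block the child holds, the parent's copy (if any) becomes unused and
// is deleted, then the child's block is renamed into its place.
function mergeByRename(remote: FakeRemote, parent: DiskDir, child: DiskDir): void {
  for (const index of child.blocks) {
    const target = blockKey(parent.name, index);
    if (parent.blocks.has(index)) remote.delete(target);
    remote.rename(blockKey(child.name, index), target);
    parent.blocks.add(index);
  }
  child.blocks.clear();
}

// Demo: a full backup with blocks 0-2 and a delta that changed block 1 and added block 3.
const demoRemote = new FakeRemote();
const full: DiskDir = { name: "full", blocks: new Set([0, 1, 2]) };
const delta: DiskDir = { name: "delta1", blocks: new Set([1, 3]) };
for (const i of full.blocks) demoRemote.put(blockKey(full.name, i), `full#${i}`);
for (const i of delta.blocks) demoRemote.put(blockKey(delta.name, i), `delta1#${i}`);
mergeByRename(demoRemote, full, delta);
console.log([...demoRemote.objects.entries()], demoRemote.renames, demoRemote.deletes);
```

In this model, a merge costs one rename per changed block plus one delete per superseded block, whatever the block size. Its speed then depends mostly on how fast the backend handles those metadata operations.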

The new merge is faster on performant backends, but we still have some fine-tuning to do to make sure we don't overload slower ones. In the meantime, we've set very conservative settings.

Backup tiering and backup immutability are also in the works. I don't have a precise ETA, but it's a few weeks to a few months at most.

These features will pave the way for backup to tape.

-

@florent IIRC, OpenZFS 2 uses zstd and/or lz4 as efficient algorithms, and they do a pretty good job. So far I only know brotli from web servers.

How do you connect the tape drive if XO is virtualized?

Putting it on bare metal would also solve that (besides the performance benefits and removing the backup size restrictions imposed by VHD limits).

-

The problem with bare metal is providing the appliance. As you can imagine, distributing hardware appliances is a very different business from distributing a virtual one (stock management, spare parts, hardware support, shipping, and so on).

-

@olivierlambert Sure, it's a different thing. That's why I recommended using your connections to HPE to offer a bundle, or at least a version that runs on a (more or less specific) model of one of their servers. Since it only makes sense once the environment reaches a certain size, it would make sense to pick a DL380/385 series/generation, which offers a good range of performance and capacity.

For example, we use a DL385 with 10x 10 TB HDDs plus a few SSDs for cache and database. IMHO it's okay to say: "We support bare metal on platform X." Many configuration options don't matter for your support, since more memory, bigger CPUs, or more storage behind the same controller don't change the required drivers or testing.

-

It's more complicated than that. We'd then need a way to install the exact environment required for decent QA. So it's more like building an installer for it (not immensely complex, but MORE work, since the installer has to be written and also maintained).

I'm pretty convinced about the performance of running it on a physical machine; it's just that everything around it is more complex to deliver.

-

@florent How can the compression level and the parallelism be set?

Thanks

-

@cg We're considering various ways, from using iSCSI to access the tape from the VM to using an agent on the tape host (but then we'd have to support patching and updating physical hardware). There's also a lot of work needed to ensure we write sequentially without concurrency, to make it work with the future dedup, and to keep a catalog of backups/tapes.
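The "write sequentially without concurrency" requirement can be pictured as a single-writer queue in front of the drive that also records a catalog entry per backup. This TypeScript sketch is hypothetical throughout: `TapeDevice`, `CatalogEntry` and `SequentialTapeWriter` are invented for illustration. The tape is only an interface; no real drive, changer or iSCSI access is modeled.

```typescript
// Hypothetical catalog entry: which tape a backup landed on, and where.
interface CatalogEntry {
  backupId: string;
  tapeId: string;
  fileNumber: number; // 0-based position of this backup's file on the tape
  bytes: number;
}

// Hypothetical tape abstraction: appends one file after the previous one.
interface TapeDevice {
  readonly id: string;
  append(data: Uint8Array): Promise<void>;
}

// Callers may call write() concurrently, but each write only starts once the
// previous one has settled, so the drive sees a single sequential stream and
// catalog entries are recorded in on-tape order.
class SequentialTapeWriter {
  readonly catalog: CatalogEntry[] = [];
  private readonly tape: TapeDevice;
  private tail: Promise<unknown> = Promise.resolve();
  private nextFile = 0;

  constructor(tape: TapeDevice) {
    this.tape = tape;
  }

  write(backupId: string, data: Uint8Array): Promise<CatalogEntry> {
    const run = async (): Promise<CatalogEntry> => {
      await this.tape.append(data);
      const entry: CatalogEntry = {
        backupId,
        tapeId: this.tape.id,
        fileNumber: this.nextFile++,
        bytes: data.length,
      };
      this.catalog.push(entry);
      return entry;
    };
    // Queue behind the previous write, whether it succeeded or failed.
    const result = this.tail.then(run, run);
    this.tail = result.catch(() => undefined);
    return result;
  }
}

// Demo with a fake drive that just logs what it "writes".
const demoTape: TapeDevice = {
  id: "TAPE-001",
  async append(data) {
    await new Promise<void>((resolve) => setTimeout(() => resolve(), 1));
    console.log(`appended ${data.length} bytes`);
  },
};
const demoWriter = new SequentialTapeWriter(demoTape);
Promise.all([
  demoWriter.write("vm-a", new Uint8Array(300)),
  demoWriter.write("vm-b", new Uint8Array(100)),
]).then(() => console.log(demoWriter.catalog));
```

Chaining every write onto a single promise is the simplest way to serialize access in a single-threaded Node process. A multi-process design would need a real lock or a dedicated writer service.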

@mjtbrady My bad, it's currently set to `zlib.constants.BROTLI_MIN_QUALITY`. Since I said it was configurable, I can add the parameter if you want to help me test it. Is that OK for you?

Upload concurrency is set by `writeBlockConcurrency`.

Merge concurrency is set by `maxMergedDeltasPerRun`.

The compression type can be set in `config.toml` with `vhdDirectoryCompression`.

-

@florent said in Backup solutions for XCP-ng:

> @cg We're considering various ways, from using iSCSI to access the tape from the VM to using an agent on the tape host (but then we'd have to support patching and updating physical hardware). There's also a lot of work needed to ensure we write sequentially without concurrency, to make it work with the future dedup, and to keep a catalog of backups/tapes.

I don't know every product, but I've never seen a tape drive/library using iSCSI.

iSCSI is usually only used by storage systems, not by devices or libraries.

Common interfaces are either SAS or, especially in larger environments, Fibre Channel. So the way to go is probably to pass through an HBA.

-

@cg said in Backup solutions for XCP-ng:

> I don't know every product, but I've never seen a tape drive/library using iSCSI.

iSCSI, at least with Linux's LIO subsystem, can pass through SCSI devices (the PSCSI backstore, not the block device one), though I have seen some warnings about that. I guess the initiator has to properly understand the target device's model-specific commands.

I actually haven't tested this myself, so I don't know how well it works.

http://www.linux-iscsi.org/wiki/LIO#Backstores

Edit: found this article about doing something like that: https://www.kraftkennedy.com/virtualizing-scsi-tape-drives-with-an-iscsi-bridge/

-

@cg We're still in the early phase, but it seems possible to connect the tape loader to a host and let the host expose it. Passthrough would mean that XO's host is directly connected to the tape, which is not always the case.

Backup to tape is quite different from backing up to disk, S3, or even Glacier. I'm confident it will be possible, and we'll communicate as soon as we have a working version.

-

@florent Happy to do what I can to test this.

In my xo-server `config.toml` I see `writeBlockConcurrency`, `maxMergedDeltasPerRun` and `vhdDirectoryCompression` in a `[backups]` section. Does this mean that these settings are system-wide?

Shouldn't these be configurable on a per-remote basis?

Also, in a previous post you indicated that `writeBlockConcurrency` defaults to 8, but in my xo-server `config.toml` it is 16.

-

@mjtbrady For now these settings are global. Yes, it's 16 by default; I was misled by my non-default test installation.

-

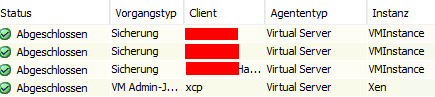

I've set up a test-environment and indeed it worked. Commvault was (is?) checking for specific version/agent string when connecting to XS/CHV/XCP-ng and in older versions refused to connect to XCP-ng.

I successfully installed the agent on a proxy-vm (it uses a proxy to ro-mount the VM-VHDs and backup the content), connected the pool, delivered the VM-inventory and also ran a successful backup:

In other words: if you're using Commvault (11.28+), you're no longer locked into Citrix.