Storage not connecting, VMs stuck in state and unable to get back.

-

Make sure you can ping the iSCSI target. I would also check dmesg, the usual stuff.

-

Here are a couple of the errors I've come across when trying to power off a VM that is paused or showing as running:

host.restart { "id": "4f969212-d381-40e5-b19a-862395faa1e3", "force": false } { "code": "VM_BAD_POWER_STATE", "params": [ "OpaqueRef:2c1a2394-0413-4fae-bedb-30c93c0e5cc9", "running", "paused" ], "task": { "uuid": "d1db6e59-b65e-8daa-5a5f-2e73c8802ab1", "name_label": "Async.host.evacuate", "name_description": "", "allowed_operations": [], "current_operations": {}, "created": "20221010T22:56:05Z", "finished": "20221010T22:56:05Z", "status": "failure", "resident_on": "OpaqueRef:53a20064-bf98-467f-af13-0491a672b3a0", "progress": 1, "type": "<none/>", "result": "", "error_info": [ "VM_BAD_POWER_STATE", "OpaqueRef:2c1a2394-0413-4fae-bedb-30c93c0e5cc9", "running", "paused" ], "other_config": {}, "subtask_of": "OpaqueRef:NULL", "subtasks": [], "backtrace": "(((process xapi)(filename ocaml/xapi/xapi_host.ml)(line 560))((process xapi)(filename hashtbl.ml)(line 266))((process xapi)(filename hashtbl.ml)(line 272))((process xapi)(filename hashtbl.ml)(line 277))((process xapi)(filename ocaml/xapi/xapi_host.ml)(line 556))((process xapi)(filename lib/xapi-stdext-pervasives/pervasiveext.ml)(line 24))((process xapi)(filename ocaml/xapi/rbac.ml)(line 231))((process xapi)(filename ocaml/xapi/server_helpers.ml)(line 103)))" }, "message": "VM_BAD_POWER_STATE(OpaqueRef:2c1a2394-0413-4fae-bedb-30c93c0e5cc9, running, paused)", "name": "XapiError", "stack": "XapiError: VM_BAD_POWER_STATE(OpaqueRef:2c1a2394-0413-4fae-bedb-30c93c0e5cc9, running, paused) at Function.wrap (/opt/xo/xo-builds/xen-orchestra-202209081641/packages/xen-api/src/_XapiError.js:16:12) at _default (/opt/xo/xo-builds/xen-orchestra-202209081641/packages/xen-api/src/_getTaskResult.js:11:29) at Xapi._addRecordToCache (/opt/xo/xo-builds/xen-orchestra-202209081641/packages/xen-api/src/index.js:954:24) at forEach (/opt/xo/xo-builds/xen-orchestra-202209081641/packages/xen-api/src/index.js:988:14) at Array.forEach (<anonymous>) at Xapi._processEvents 
(/opt/xo/xo-builds/xen-orchestra-202209081641/packages/xen-api/src/index.js:978:12) at Xapi._watchEvents (/opt/xo/xo-builds/xen-orchestra-202209081641/packages/xen-api/src/index.js:1144:14)" }

vm.start { "id": "db3f5735-7f21-63c2-2c6b-5c60db8cd9df", "bypassMacAddressesCheck": false, "force": false } { "code": "INTERNAL_ERROR", "params": [ "Object with type VM and id db3f5735-7f21-63c2-2c6b-5c60db8cd9df/config does not exist in xenopsd" ], "task": { "uuid": "53e2f76a-4200-8ed1-64f3-282af7b44351", "name_label": "Async.VM.unpause", "name_description": "", "allowed_operations": [], "current_operations": {}, "created": "20221010T22:02:49Z", "finished": "20221010T22:02:50Z", "status": "failure", "resident_on": "OpaqueRef:53a20064-bf98-467f-af13-0491a672b3a0", "progress": 1, "type": "<none/>", "result": "", "error_info": [ "INTERNAL_ERROR", "Object with type VM and id db3f5735-7f21-63c2-2c6b-5c60db8cd9df/config does not exist in xenopsd" ], "other_config": {}, "subtask_of": "OpaqueRef:NULL", "subtasks": [], "backtrace": "(((process xapi)(filename ocaml/xapi-client/client.ml)(line 7))((process xapi)(filename ocaml/xapi-client/client.ml)(line 19))((process xapi)(filename ocaml/xapi-client/client.ml)(line 6065))((process xapi)(filename lib/xapi-stdext-pervasives/pervasiveext.ml)(line 24))((process xapi)(filename lib/xapi-stdext-pervasives/pervasiveext.ml)(line 35))((process xapi)(filename ocaml/xapi/message_forwarding.ml)(line 131))((process xapi)(filename lib/xapi-stdext-pervasives/pervasiveext.ml)(line 24))((process xapi)(filename ocaml/xapi/rbac.ml)(line 231))((process xapi)(filename ocaml/xapi/server_helpers.ml)(line 103)))" }, "message": "INTERNAL_ERROR(Object with type VM and id db3f5735-7f21-63c2-2c6b-5c60db8cd9df/config does not exist in xenopsd)", "name": "XapiError", "stack": "XapiError: INTERNAL_ERROR(Object with type VM and id db3f5735-7f21-63c2-2c6b-5c60db8cd9df/config does not exist in xenopsd) at Function.wrap 
(/opt/xo/xo-builds/xen-orchestra-202209081641/packages/xen-api/src/_XapiError.js:16:12) at _default (/opt/xo/xo-builds/xen-orchestra-202209081641/packages/xen-api/src/_getTaskResult.js:11:29) at Xapi._addRecordToCache (/opt/xo/xo-builds/xen-orchestra-202209081641/packages/xen-api/src/index.js:954:24) at forEach (/opt/xo/xo-builds/xen-orchestra-202209081641/packages/xen-api/src/index.js:988:14) at Array.forEach (<anonymous>) at Xapi._processEvents (/opt/xo/xo-builds/xen-orchestra-202209081641/packages/xen-api/src/index.js:978:12) at Xapi._watchEvents (/opt/xo/xo-builds/xen-orchestra-202209081641/packages/xen-api/src/index.js:1144:14)" }

@olivierlambert here is the error that I managed to get from connecting one of the storage repos:

pbd.connect { "id": "948638ac-2edd-5493-4354-84adb0345891" } { "code": "CANNOT_CONTACT_HOST", "params": [ "OpaqueRef:5c091131-ad50-4f5d-b1f2-2d6e4f1a776a" ], "task": { "uuid": "f2100dad-3c79-3d03-89d8-647c6e0e549f", "name_label": "Async.PBD.plug", "name_description": "", "allowed_operations": [], "current_operations": {}, "created": "20221010T22:42:46Z", "finished": "20221010T22:43:24Z", "status": "failure", "resident_on": "OpaqueRef:53a20064-bf98-467f-af13-0491a672b3a0", "progress": 1, "type": "<none/>", "result": "", "error_info": [ "CANNOT_CONTACT_HOST", "OpaqueRef:5c091131-ad50-4f5d-b1f2-2d6e4f1a776a" ], "other_config": {}, "subtask_of": "OpaqueRef:NULL", "subtasks": [], "backtrace": "(((process xapi)(filename ocaml/xapi/message_forwarding.ml)(line 138))((process xapi)(filename lib/xapi-stdext-pervasives/pervasiveext.ml)(line 24))((process xapi)(filename ocaml/xapi/rbac.ml)(line 231))((process xapi)(filename ocaml/xapi/server_helpers.ml)(line 103)))" }, "message": "CANNOT_CONTACT_HOST(OpaqueRef:5c091131-ad50-4f5d-b1f2-2d6e4f1a776a)", "name": "XapiError", "stack": "XapiError: CANNOT_CONTACT_HOST(OpaqueRef:5c091131-ad50-4f5d-b1f2-2d6e4f1a776a) at Function.wrap (/opt/xo/xo-builds/xen-orchestra-202209081641/packages/xen-api/src/_XapiError.js:16:12) at _default (/opt/xo/xo-builds/xen-orchestra-202209081641/packages/xen-api/src/_getTaskResult.js:11:29) at Xapi._addRecordToCache (/opt/xo/xo-builds/xen-orchestra-202209081641/packages/xen-api/src/index.js:954:24) at forEach (/opt/xo/xo-builds/xen-orchestra-202209081641/packages/xen-api/src/index.js:988:14) at Array.forEach (<anonymous>) at Xapi._processEvents (/opt/xo/xo-builds/xen-orchestra-202209081641/packages/xen-api/src/index.js:978:12) at Xapi._watchEvents (/opt/xo/xo-builds/xen-orchestra-202209081641/packages/xen-api/src/index.js:1144:14)" }

-

Do you have a host halted/not available in your pool?

-

@olivierlambert there looks to be a disconnect between what XOA is showing and the real world. Currently I have VMs showing as running on hosts 2 and 3, and the hosts show as online, but they are physically powered down.

-

You need to have all your hosts in your pool powered up. Otherwise, this won't work.
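
To see which pool members xapi itself considers alive, something like the sketch below can be run on the master. It assumes the `host-metrics-live` host parameter is a good enough liveness signal; the function name `dead_hosts` is made up for this example, and xapi's view can lag behind reality for a while after a host loses power.

```shell
# Print the uuid of every pool member whose host-metrics-live flag is not "true".
# Assumption: run on the pool master, where xe can reach xapi.
dead_hosts() {
    for h in $(xe host-list params=uuid --minimal | tr ',' ' '); do
        live="$(xe host-param-get uuid="$h" param-name=host-metrics-live)"
        [ "$live" = "true" ] || echo "$h"
    done
}
```

An empty result only means xapi believes every host is up; it is still worth checking the machines physically.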

-

@olivierlambert I've just restarted all hosts to bring them back up one by one. I still had this issue with all the hosts powered up and showing as available. I'll let you know where I'm at once I've got them booted back up.

-

Wait for a bit until all your hosts are physically online.

Then, try again to plug the PBD. Also, on each host, double check you can ping each member of the pool and the iSCSI target.
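
A rough way to run that check from each host is sketched below. The `check_reachable` helper and the `PROBE` variable are names invented for this example, the default probe assumes the Linux `ping` flags (`-c` count, `-W` timeout in seconds), and the addresses in the usage line are placeholders for your own pool members and iSCSI target.

```shell
# Probe command; defaults to two ICMP echo requests with a 2-second timeout.
PROBE="${PROBE:-ping -c 2 -W 2}"

# Print "ok <addr>" or "FAIL <addr>" for each address given.
# Returns non-zero if at least one address did not answer.
check_reachable() {
    rc=0
    for addr in "$@"; do
        if $PROBE "$addr" >/dev/null 2>&1; then
            echo "ok $addr"
        else
            echo "FAIL $addr"
            rc=1
        fi
    done
    return "$rc"
}
```

Usage would look like `check_reachable 192.0.2.11 192.0.2.12 192.0.2.50`. A successful ping only proves IP reachability, so a PBD plug can still fail if the iSCSI service itself is not answering.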

-

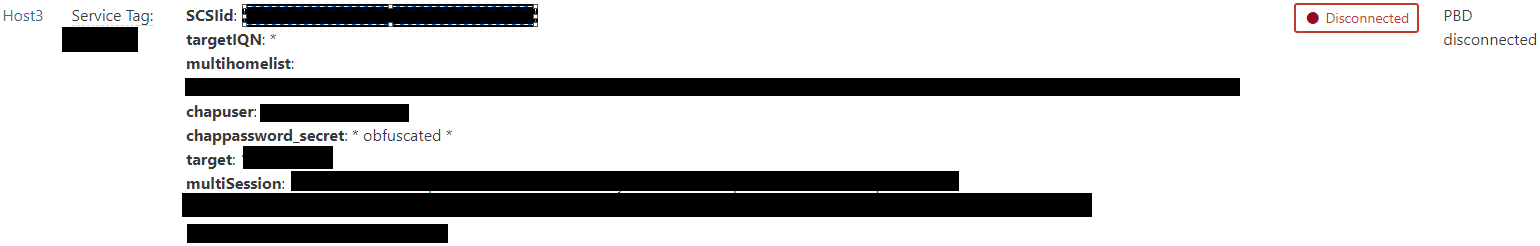

@olivierlambert I now have all 3 hosts back up:

- Host 1: started the VM it already had running on it

- Host 2: shows enabled but no storage, shared or local

- Host 3: shows enabled but no storage, shared or local

In the VM list I still get 6 VMs on host 2 in Running / Paused state, and host 3 shows 3 VMs running.

Is there a way to clear out the VM states?

-

I have found a workaround for this. Another problem I found is that hosts 2 and 3 have the wrong credentials set up, so I can't access them locally; a rebuild of both will be starting shortly.

Thanks @olivierlambert. I looked into the networking a bit more: I was able to get successful pings between the servers, but on these 2 servers the network had an IP address yet was showing as disconnected. So I started to look at forcefully removing the unresponsive hosts and found the following article.

-

1. I ran this from the master (still working) to find the list of hosts.

xe host-list

2. Get a list of all the VMs that are resident on the unresponsive host(s) to get their UUIDs.

xe vm-list resident-on=aaaaaaaaa-bbbb-cccc-ddddddddddd

3. Force a power-state reset for each of the VMs on the host(s).

xe vm-reset-powerstate uuid=eeeeeee-dddd-cccc-bbbb-aaaaaaaaaaaa --force

4. Force the removal of the host(s).

xe host-forget uuid=aaaaaaaaa-bbbb-cccc-ddddddddddd
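
For anyone with more than a handful of VMs, the same commands can be wrapped in a small script. This is only a sketch: the helper names (`run`, `resident_vms`, `forget_dead_host`) and the `DRY_RUN` switch are made up for this example, and the `is-control-domain=false` filter is my own addition so the dead host's dom0 is skipped. It prints the destructive commands by default instead of running them.

```shell
# Sketch only: run on the pool master. DRY_RUN=1 (the default) prints the
# destructive xe commands instead of executing them.
DRY_RUN="${DRY_RUN:-1}"

# Execute a command, or just print it when DRY_RUN=1.
run() {
    if [ "$DRY_RUN" = "1" ]; then
        echo "$*"
    else
        "$@"
    fi
}

# Print the UUIDs of the VMs xapi believes are resident on host $1, one per line.
resident_vms() {
    xe vm-list resident-on="$1" is-control-domain=false params=uuid --minimal | tr ',' '\n'
}

# Reset the power state of every VM resident on host $1, then forget the host.
forget_dead_host() {
    host_uuid="$1"
    for vm_uuid in $(resident_vms "$host_uuid"); do
        run xe vm-reset-powerstate uuid="$vm_uuid" --force
    done
    run xe host-forget uuid="$host_uuid"
}
```

Call it as `forget_dead_host <host-uuid>`, review the printed commands, then repeat with `DRY_RUN=0`. Only do this for a host that is really powered off or dead, because resetting the power state of a VM that is still running elsewhere risks starting it twice on the same disks.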

This has now allowed me to spin up the VMs on the working host while I get the others rebuilt.

Thank you @olivierlambert and @Gheppy for the advice and pointers towards a solution for this.

-

Indeed, if your other hosts are somehow dead/unresponsive from XAPI's perspective, ejecting them from the pool is an option to get your master booting those VMs.