Deleting snapshots and base copies

-

Base copies should not be removed, they are part of your VM disk, in facts the root of them, removing them will kill your VM. Each time you make a snapshot your VM disk is split in two, the original disk before the snapshot > base copie and the new current disk of the VM ,who is a differential between the base copie and the current VM state.

That why the base copies are essential because they have part of your VM that are not in the current disk. -

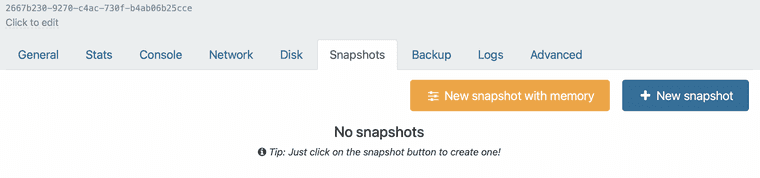

XOA not showing the snapshots. May be it is due to failed backup but not sure. What can be possible ways to delete the snapshots that are not showing in XOA but showing in local lvm disk.

-

@sumansaha Look in Dashboard > Health if something should not be there you will see it.

-

@Darkbeldin Got some some orphand disk in there. I've removed those. But stilll base files are there and not cleared up.

-

@sumansaha Do you have a backup job on this VM?

-

It's not instantly removed, you need the coalesce to do it

-

@olivierlambert Can we expect over the time it will clear up automatically .

-

@Darkbeldin currently no back up job in there.

-

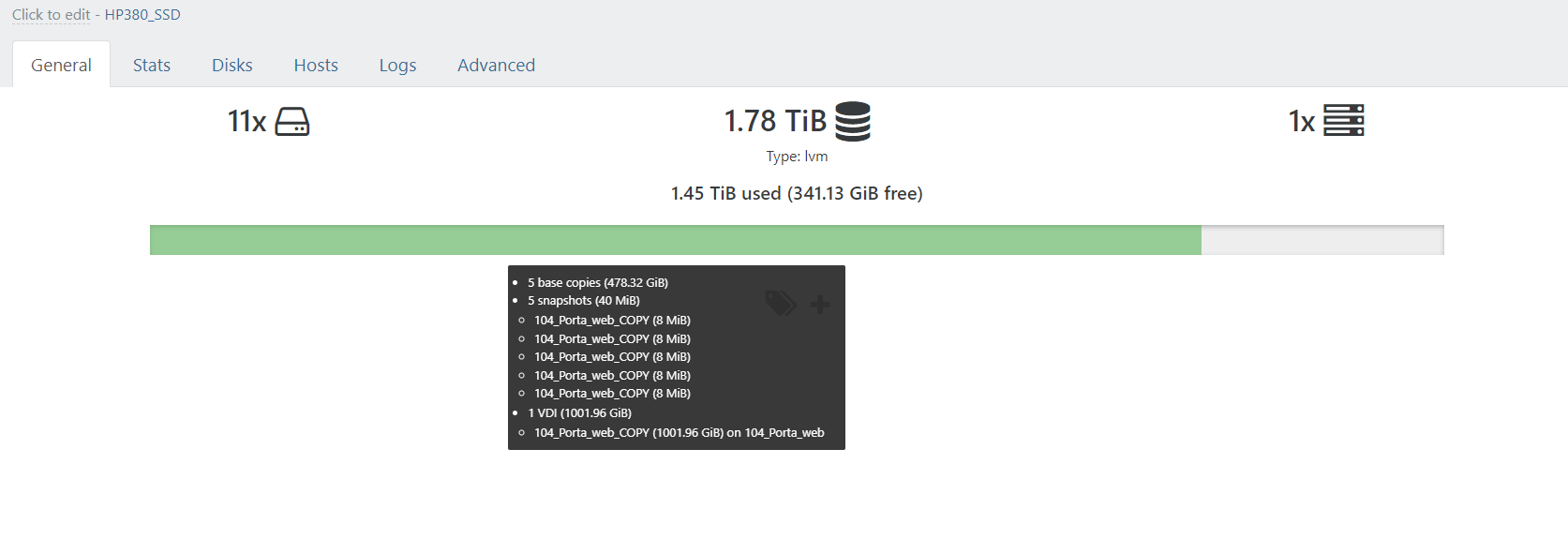

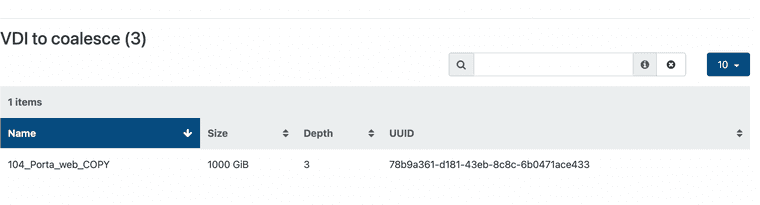

@sumansaha check the "Advanced" view of your SR, you'll see if there's VDI to coalesce and how much/which depth.

Then, you should take a look at the SMlog to see if it's moving forward or not.

-

-

Dec 21 00:03:34 xcp-ng-slqtflub SM: [27693] ['/usr/bin/vhd-util', 'scan', '-f', '-m', '/var/run/sr-mount/c2de6039-fa02-837a-8fcb-87e47392c7fe/.vhd']

Dec 21 00:03:34 xcp-ng-slqtflub SM: [27693] pread SUCCESS

Dec 21 00:03:34 xcp-ng-slqtflub SM: [27693] ['ls', '/var/run/sr-mount/c2de6039-fa02-837a-8fcb-87e47392c7fe', '-1', '--color=never']

Dec 21 00:03:34 xcp-ng-slqtflub SM: [27693] pread SUCCESS

Dec 21 00:03:34 xcp-ng-slqtflub SM: [27693] lock: opening lock file /var/lock/sm/c2de6039-fa02-837a-8fcb-87e47392c7fe/running

Dec 21 00:03:34 xcp-ng-slqtflub SM: [27693] lock: tried lock /var/lock/sm/c2de6039-fa02-837a-8fcb-87e47392c7fe/running, acquired: True (exists: True)

Dec 21 00:03:34 xcp-ng-slqtflub SM: [27693] lock: released /var/lock/sm/c2de6039-fa02-837a-8fcb-87e47392c7fe/running

Dec 21 00:03:34 xcp-ng-slqtflub SM: [27693] Kicking GC

Dec 21 00:03:34 xcp-ng-slqtflub SMGC: [27693] === SR c2de6039-fa02-837a-8fcb-87e47392c7fe: gc ===

Dec 21 00:03:34 xcp-ng-slqtflub SMGC: [27712] Will finish as PID [27713]

Dec 21 00:03:34 xcp-ng-slqtflub SMGC: [27693] New PID [27712]

Dec 21 00:03:34 xcp-ng-slqtflub SM: [27713] lock: opening lock file /var/lock/sm/c2de6039-fa02-837a-8fcb-87e47392c7fe/running

Dec 21 00:03:34 xcp-ng-slqtflub SM: [27713] lock: opening lock file /var/lock/sm/c2de6039-fa02-837a-8fcb-87e47392c7fe/gc_active

Dec 21 00:03:34 xcp-ng-slqtflub SM: [27693] lock: released /var/lock/sm/c2de6039-fa02-837a-8fcb-87e47392c7fe/sr

Dec 21 00:03:34 xcp-ng-slqtflub SM: [27713] lock: opening lock file /var/lock/sm/c2de6039-fa02-837a-8fcb-87e47392c7fe/sr

Dec 21 00:03:34 xcp-ng-slqtflub SMGC: [27713] Found 0 cache files

Dec 21 00:03:34 xcp-ng-slqtflub SM: [27713] lock: tried lock /var/lock/sm/c2de6039-fa02-837a-8fcb-87e47392c7fe/gc_active, acquired: True (exists: True)

Dec 21 00:03:34 xcp-ng-slqtflub SM: [27713] lock: tried lock /var/lock/sm/c2de6039-fa02-837a-8fcb-87e47392c7fe/sr, acquired: True (exists: True)

Dec 21 00:03:34 xcp-ng-slqtflub SM: [27713] ['/usr/bin/vhd-util', 'scan', '-f', '-m', '/var/run/sr-mount/c2de6039-fa02-837a-8fcb-87e47392c7fe/.vhd']

Dec 21 00:03:34 xcp-ng-slqtflub SM: [27713] pread SUCCESS

Dec 21 00:03:34 xcp-ng-slqtflub SMGC: [27713] SR c2de ('nasnew') (0 VDIs in 0 VHD trees): no changes

Dec 21 00:03:34 xcp-ng-slqtflub SM: [27713] lock: released /var/lock/sm/c2de6039-fa02-837a-8fcb-87e47392c7fe/sr

Dec 21 00:03:34 xcp-ng-slqtflub SMGC: [27713] No work, exiting

Dec 21 00:03:34 xcp-ng-slqtflub SMGC: [27713] GC process exiting, no work left

Dec 21 00:03:34 xcp-ng-slqtflub SM: [27713] lock: released /var/lock/sm/c2de6039-fa02-837a-8fcb-87e47392c7fe/gc_active

Dec 21 00:03:34 xcp-ng-slqtflub SMGC: [27713] In cleanup

Dec 21 00:03:34 xcp-ng-slqtflub SMGC: [27713] SR c2de ('nasnew') (0 VDIs in 0 VHD trees): no changes

Dec 21 00:03:34 xcp-ng-slqtflub SM: [27737] lock: opening lock file /var/lock/sm/c2de6039-fa02-837a-8fcb-87e47392c7fe/sr

Dec 21 00:03:34 xcp-ng-slqtflub SM: [27737] sr_update {'sr_uuid': 'c2de6039-fa02-837a-8fcb-87e47392c7fe', 'subtask_of': 'DummyRef:|073fd04b-d097-4bd7-8dba-e76a353279bd|SR.stat', 'args': [], 'host_ref': 'OpaqueRef:e5dea4f6-197b-47dd-a725-6c57ddb6d70b', 'session_ref': 'OpaqueRef:b545cd23-51ca-4e66-8d0f-c77d43364dd0', 'device_config': {'server': '192.168.196.121', 'SRmaster': 'true', 'serverpath': '/mnt/nas1/portawebback2', 'options': 'hard'}, 'command': 'sr_update', 'sr_ref': 'OpaqueRef:a9e94842-c873-408c-b128-78e189539b6a'}Not sure whether coalesce is going fine through .

-

@sumansaha /usr/bin/vhd-util coalesce --debug -n /dev/VG_XenStorage-da208944-11c3-c286-b097-2dbf5eb37103/VHD-8297ea93-a76a-446a-a37c-471efe1b2847

This process is running behind.

-

Actually no luck in removing the base files after removing the orphand disks.

-

So you have a coalesce process. It could take some time, leave it as is and NEVER remove a base copy manually.

-

Note that the coalesce process can take up to 24 hours. If there are issues, this article might be helpful: https://support.citrix.com/article/CTX201296/understanding-garbage-collection-and-coalesce-process-troubleshooting

-

@olivierlambert That works. XCP-NG Rocks.

-

@sumansaha In summary , I've deleted Orphan VDIs from Dashboard->health . But in deletion, I've maintained the sequence of their age.It has taken 6 hours, though that depends on the VM size.

-

K kamil-v4 referenced this topic on