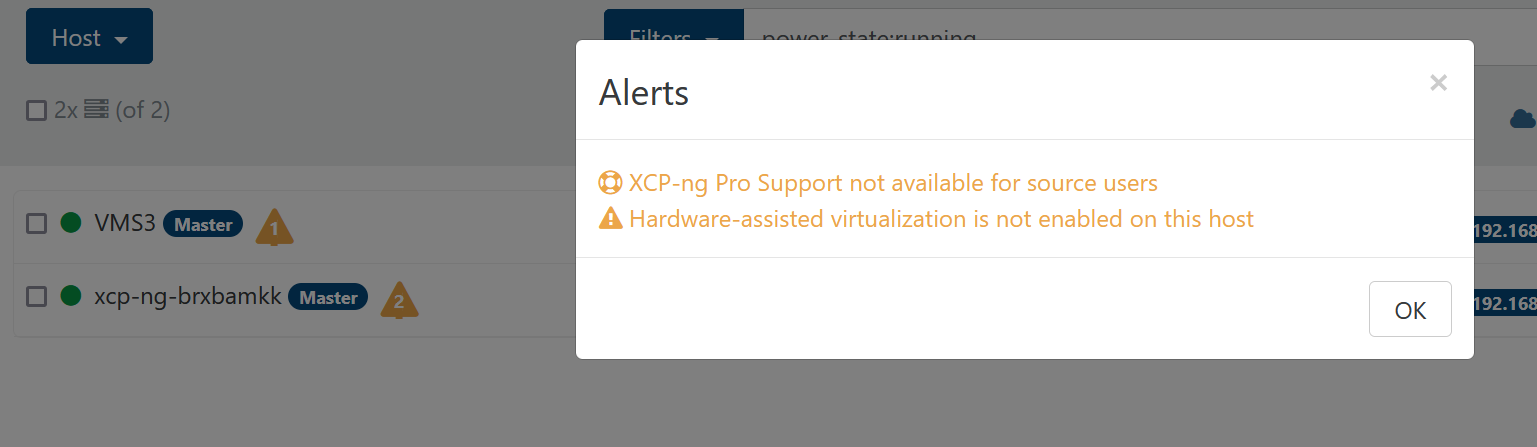

"Hardware-assisted virtualization is not enabled on this host" even though platform:exp-nested-hvm=true is set

-

@stormi If the host is on version 8.3 with Xen 4.17, nested virtualization does not work on any guest. If version 8.3 with Xen 4.17 is on a guest, but the host is running version 8.2.1 or an out-of-date 8.3 (Xen 4.13), it doesn't matter and nested guest virtualization works.

-

@abudef In the second case, do you mean there's:

An 8.2.1 host running a nested 8.3 (Xen 4.17) VM, which itself runs a nested XCP-ng? (So nested inside nested.)

-

@stormi That's not quite what I meant. The point is that if the host is 8.3/4.17, then virtualization is not working on its guest and therefore no other nested guest can be started on this guest. And actually, yes, if 8.3/4.17 appears somewhere in the cascade of nested hosts, then nested virtualization will no longer work on its guest.

-

@abudef Can you try this on a halted VM:

- Disable nested from the VM/Advanced tab
- xe vm-param-set platform:nested_virt=true uuid=<VM UUID>
- Start the VM
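The CLI part of the steps above can be sketched as a small shell function to run on the host. This is only an illustration: the function name `set_nested_virt` and the `XE` override variable are made up for this sketch, the UUID is a placeholder, and setting the key does not by itself guarantee that nested virtualization will work.

```shell
#!/usr/bin/env bash
# Sketch only. XE and set_nested_virt are hypothetical names for this example;
# XE can be overridden (e.g. XE=echo) to preview the commands without running them.
XE="${XE:-xe}"

set_nested_virt() {
  local vm_uuid="$1"
  # Set the platform key suggested in the post above (VM must be halted).
  "$XE" vm-param-set platform:nested_virt=true uuid="$vm_uuid" || return 1
  # Read the platform map back to confirm the key was stored.
  "$XE" vm-param-get uuid="$vm_uuid" param-name=platform || return 1
  # Start the VM again.
  "$XE" vm-start uuid="$vm_uuid"
}

# Usage on the host, after disabling nested in the VM/Advanced tab:
#   set_nested_virt <VM UUID>
```

Running it with `XE=echo` first prints the three commands instead of executing them, which is a cheap way to check the UUID before touching the VM.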

-

It seems we also need to modify some other bits, stand by.

-

Disable nested from the VM/Advanced tab

xe vm-param-set platform:nested_virt=true uuid=<VM UUID>

Start the VM

...unfortunately it didn't work, I assume because...

It seems we also need to modify some other bits

-

@olivierlambert On XCP 8.3/Xen 4.17 the whole vmx option is missing from any VM's CPU.... Waiting for the other bits...
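For anyone who wants to check the same thing on their own setup, a minimal sketch for a Linux guest is below. It assumes the guest exposes CPU flags in `/proc/cpuinfo`; the function name `has_hvm_flag` is made up for this example, and it looks for `vmx` (Intel) or `svm` (AMD).

```shell
#!/usr/bin/env bash
# Sketch only: has_hvm_flag is a hypothetical helper name.
# Returns 0 if the given cpuinfo file lists vmx (Intel) or svm (AMD)
# on a "flags" line, non-zero otherwise.
has_hvm_flag() {
  local cpuinfo="${1:-/proc/cpuinfo}"
  grep -E '^flags' "$cpuinfo" | grep -Eqw 'vmx|svm'
}

# Usage inside the nested guest:
#   if has_hvm_flag; then echo "HVM flag exposed"; else echo "HVM flag missing"; fi
```

If the flag is missing inside the guest, a hypervisor installed in that guest has no hardware virtualization to use, which matches the error in the thread title.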

-

Yes, that's exactly the missing piece, but it has to be computed from the hex string on the pool platform CPU.

-

As expected, the same problem is with XenServer 8/4.17

-

@olivierlambert @stormi Do you have any idea when this problem might be resolved? The question is how to handle the test lab and whether to wait, because a secondary problem is that the nested VMs cannot be migrated elsewhere from the affected virtualized XCP-ng hosts.

vm.migrate { "vm": "654cc5c6-7e50-fc28-ecc4-fe46929905b2", "mapVifsNetworks": { "2db4235a-345f-f286-4172-77dab4e198fe ": "8e969c1a-cafa-7ac0-504d-cf5cd19ef1e4 " }, "migrationNetwork": "8e969c1a-cafa-7ac0-504d-cf5cd19ef1e4 ", "sr": "a25ba333-a1a5-f22f-c337-0ec662e835ed", "targetHost": "ca60fce7-924a-45f9-a1c6-ee860952e6aa" } { "code": "NO_HOSTS_AVAILABLE", "params": [], "task": { "uuid": "57fe6efb-569e-3fa3-a345-d43377260884 ", "name_label": "Async.VM.migrate_send", "name_description": "", "allowed_operations": [], "current_operations": {}, "created": "20240510T10:45:50Z", "finished": "20240510T10:45:51Z", "status": "failure", "resident_on": "OpaqueRef:99de1bb8-8e7f-e79a-d7f9-5d84c2c09a73", "progress": 1, "type": "<none/>", "result": "", "error_info": [ "NO_HOSTS_AVAILABLE" ], "other_config": {}, "subtask_of": "OpaqueRef:NULL", "subtasks": [], "backtrace": "(((process xapi)(filename ocaml/xapi/xapi_vm_placement.ml)(line 106))((process xapi)(filename ocaml/xapi/message_forwarding.ml)(line 1453))((process xapi)(filename ocaml/libs/xapi-stdext/lib/xapi-stdext-pervasives/pervasiveext.ml)(line 24))((process xapi)(filename ocaml/libs/xapi-stdext/lib/xapi-stdext-pervasives/pervasiveext.ml)(line 39))((process xapi)(filename ocaml/xapi/helpers.ml)(line 1506))((process xapi)(filename ocaml/xapi/message_forwarding.ml)(line 1445))((process xapi)(filename ocaml/xapi/message_forwarding.ml)(line 2537))((process xapi)(filename ocaml/libs/xapi-stdext/lib/xapi-stdext-pervasives/pervasiveext.ml)(line 24))((process xapi)(filename ocaml/libs/xapi-stdext/lib/xapi-stdext-pervasives/pervasiveext.ml)(line 39))((process xapi)(filename ocaml/xapi/message_forwarding.ml)(line 2559))((process xapi)(filename ocaml/xapi/rbac.ml)(line 189))((process xapi)(filename ocaml/xapi/rbac.ml)(line 198))((process xapi)(filename ocaml/xapi/server_helpers.ml)(line 75)))" }, "message": "NO_HOSTS_AVAILABLE()", "name": "XapiError", "stack": "XapiError: NO_HOSTS_AVAILABLE() at Function.wrap 
(file:///opt/xo/xo-builds/xen-orchestra-202405091612/packages/xen-api/_XapiError.mjs:16:12) at default (file:///opt/xo/xo-builds/xen-orchestra-202405091612/packages/xen-api/_getTaskResult.mjs:11:29) at Xapi._addRecordToCache (file:///opt/xo/xo-builds/xen-orchestra-202405091612/packages/xen-api/index.mjs:1029:24) at file:///opt/xo/xo-builds/xen-orchestra-202405091612/packages/xen-api/index.mjs:1063:14 at Array.forEach (<anonymous>) at Xapi._processEvents (file:///opt/xo/xo-builds/xen-orchestra-202405091612/packages/xen-api/index.mjs:1053:12) at Xapi._watchEvents (file:///opt/xo/xo-builds/xen-orchestra-202405091612/packages/xen-api/index.mjs:1226:14)" }

(targetHost above is a common native XCP-ng host)

-

@abudef @olivierlambert @stormi OK, so we can't hot migrate a VM from 8.3 back to 8.2, I get it. Cold migration also fails, and I almost understand why: some features might be missing on the older host. But then why does warm migration work?

Can normal cold migration be forced to work? Maybe with a checkbox or warning that some features (like TPM) might not be available?

-

@Andrew No, migration is always forward compatible, not backward. You can use warm migration in XO instead (or backup delta/restore).

-

@abudef No. We are discussing internally to see what would be the best approach.

-

Hello @olivierlambert, curiosity prevents me from not asking if you have reached any conclusion or solution yet. Thank you in advance for revealing the hot news.

-

Hi,

It's not at the top of the priority list right now, as the 8.3 release is getting closer and, in particular, there's XO 5.95 this Friday.

However, next week we are at the Xen Summit, so that will be the occasion to discuss it both within Vates (@andSmv @marcungeschikts ...) and directly with Xen upstream.

-

Hi, did you come to any new findings and conclusions at the Xen Summit?

-

We re-asked upstream about this; no feedback yet.

-

Hi, any new information on this issue?

-

No news at the moment. As soon as there's something to test, I'll keep you posted.

-


Hi XCP team :),

I believe I might also be affected by this issue.

I was previously using a Dell 2RU server with 4 CPUs (I'm at work at the moment; I'll update this post with the exact Dell and CPU models later, as the server is powered off at home). The "old" server was running XCP-ng 8.2.1, and I was running TrueNAS as a VM with an LSI HBA and an NVIDIA GPU passed through. TrueNAS was working like a charm: I was able to use the Kubernetes apps and create nested VMs (although I didn't use them, I was able to, in a pinch).

I recently moved to a Dell R740 with 2 x Intel 6136 CPUs running XCP-ng 8.3. These CPUs appear to support all the required VT-d (with EPT) extensions, IOMMU and SR-IOV.

I've been through the BIOS about a billion times trying to troubleshoot this issue, and have changed the workload profiles and ensured everything relating to virtualisation and performance optimisation is enabled. However, my TrueNAS VM now displays the error "Virtualization is not enabled on this system", and I'm unable to use the Kubernetes apps or create VMs from within TrueNAS. (I have checked and confirmed that the "nested virtualization" option is definitely enabled for that VM.)

As a test, I installed a new TrueNAS VM, with nested virtualization enabled.

After creating the test VM, I can actually click on the Virtualization tab within TrueNAS, and it doesn't display the same error as before (on the old TrueNAS VM), but it says "your CPU does not support KVM extensions", which I believe to be erroneous. I can see within this thread that you suspect Xen 4.17 is the issue.

Can you provide any steps to 'rollback' to a version that might re-enable the nested virtualization feature to work correctly (or point me in the right direction on how to achieve this?)

Otherwise, is there a supported method to 'downgrade' the XCP-ng 8.3 host to 8.2.1? Please let me know if you require any additional information or if I can be of help testing anything.

(thanks again for all your hard work and amazing free and opensource products! can't wait for the v6 UI :D)