File restore error on LVMs

-

@florent Still not working.

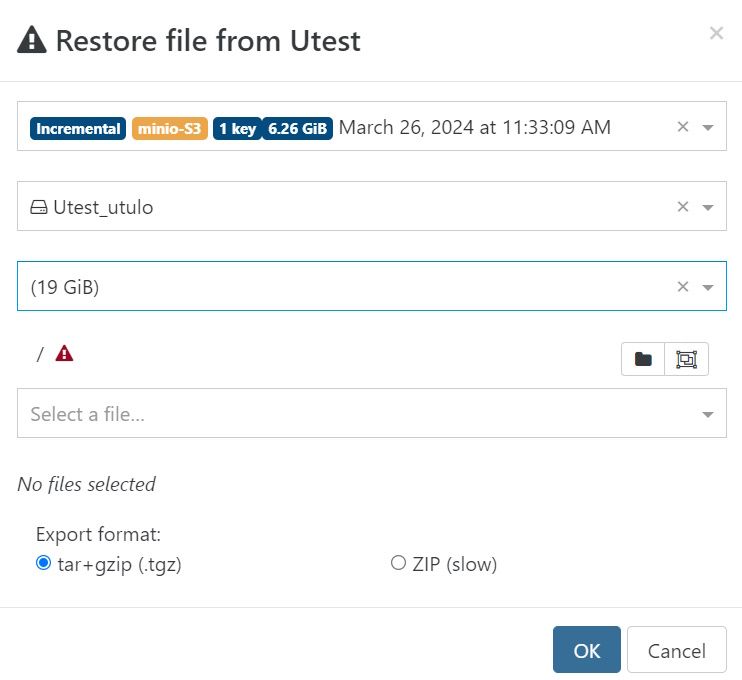

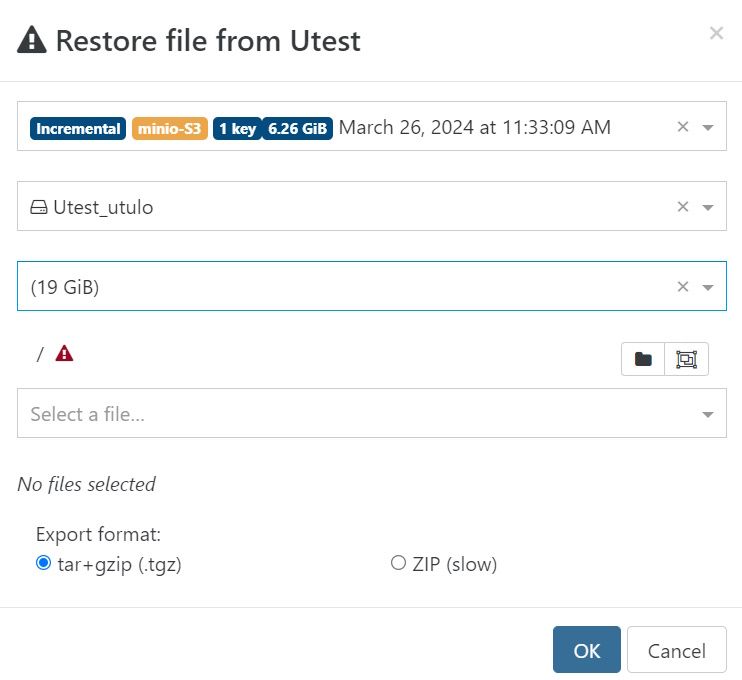

Here is the partition list for a standard Ubuntu 20.04.3 install.

Partition 3 is the main OS root as a single LVM (19G).

Trying to restore a file from it makes XO confused.

    xvda                        202:0    0   20G  0 disk
    ├─xvda1                     202:1    0    1M  0 part
    ├─xvda2                     202:2    0    1G  0 part /boot
    └─xvda3                     202:3    0   19G  0 part
      └─ubuntu--vg-ubuntu--lv   253:0    0   19G  0 lvm  /
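In the listing above, xvda3 carries no filesystem of its own: it is an LVM physical volume, and the root filesystem lives on the LV stacked on top of it, so the VG has to be activated before / can be mounted. A minimal Python sketch that finds such partitions, assuming the JSON layout printed by util-linux `lsblk --json -o NAME,TYPE` (`blockdevices` and `children` keys). The function `partitions_holding_lvs` and the `SAMPLE` data are hypothetical; the sample was rebuilt by hand from the listing above.

```python
import json

# Hand-written reconstruction of the listing above, in the shape assumed for
# `lsblk --json -o NAME,TYPE` output (only the fields used here are included).
SAMPLE = """
{"blockdevices": [
  {"name": "xvda", "type": "disk", "children": [
    {"name": "xvda1", "type": "part"},
    {"name": "xvda2", "type": "part"},
    {"name": "xvda3", "type": "part", "children": [
      {"name": "ubuntu--vg-ubuntu--lv", "type": "lvm"}
    ]}
  ]}
]}
"""


def partitions_holding_lvs(report: str) -> dict[str, list[str]]:
    """Map each partition name to the LVM volumes stacked directly on it."""
    result: dict[str, list[str]] = {}

    def walk(devices: list[dict]) -> None:
        for dev in devices:
            children = dev.get("children", [])
            lvs = [c["name"] for c in children if c.get("type") == "lvm"]
            # A partition with LVM children is a PV, not a mountable filesystem.
            if dev.get("type") == "part" and lvs:
                result[dev["name"]] = lvs
            walk(children)  # recurse into nested devices

    walk(json.loads(report)["blockdevices"])
    return result


if __name__ == "__main__":
    print(partitions_holding_lvs(SAMPLE))
    # prints {'xvda3': ['ubuntu--vg-ubuntu--lv']}
```

On a real VM the same function could be fed the live `lsblk --json -o NAME,TYPE` output instead of `SAMPLE`.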

-

My test LVM VM works.

@michmoor0725 or @Andrew, do you have an open support tunnel I can use to test on your VM?


It sees the partition but fails to m


-

@florent Yes, I have a support tunnel IP.

id: redacted but kept by our team

-

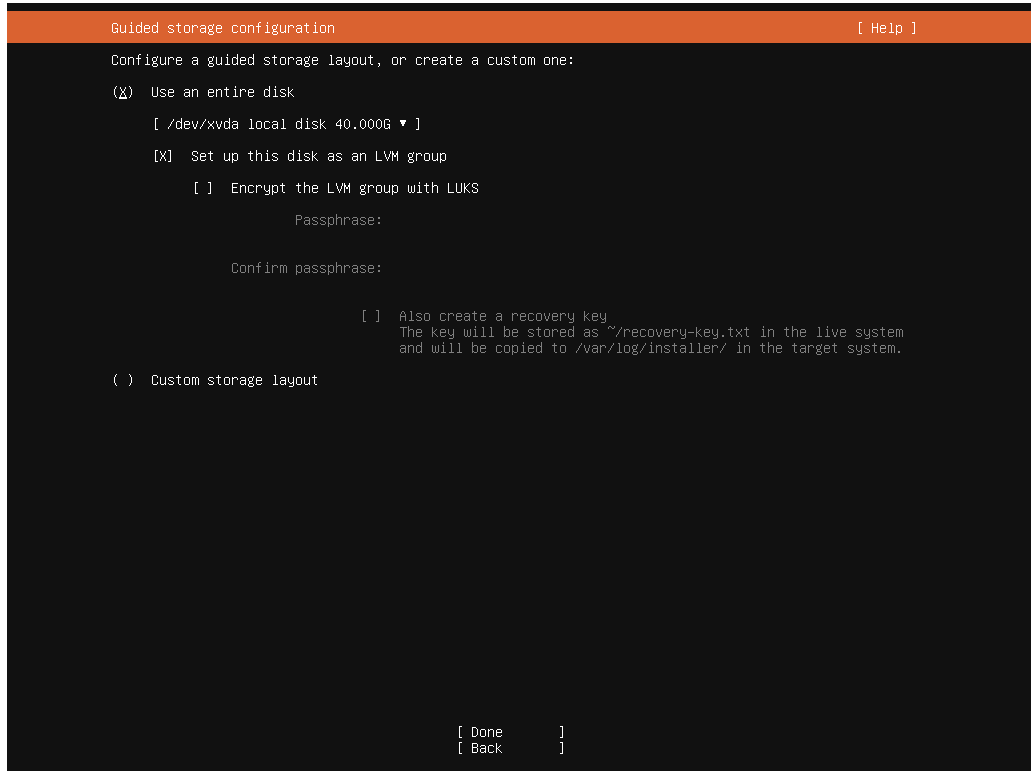

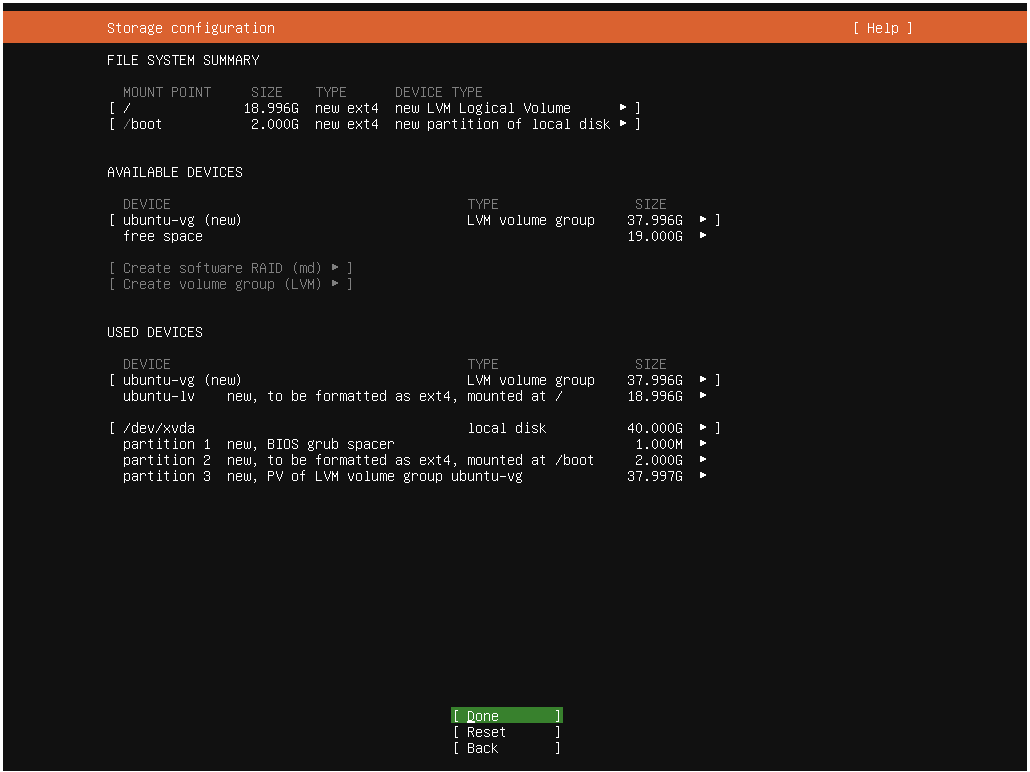

I ran into this issue (XCP-ng 8.3.0 beta 2, XO 4cf03, ronivay Docker image) and thought it had to do with my VM disk storage location (local vs NFS vs iSCSI). After failing to restore at the file level from delta backups in all three setups, I decided on a whim to untick the "set up this disk as an LVM group" option, which is enabled by default on Ubuntu 24.04 LTS, when installing a test VM.

The screenshots above show what the storage setup looks like with the default LVM group.

-

I'm a new (about a week old) user of XCP-ng and Xen Orchestra and have observed the same problem described in this thread.

For now I'm testing on an older (20.04.6 LTS) Linux installation that I moved off ESXi purely for testing, but the problem seems easy to reproduce on newer versions as well (I will test that within a few days).

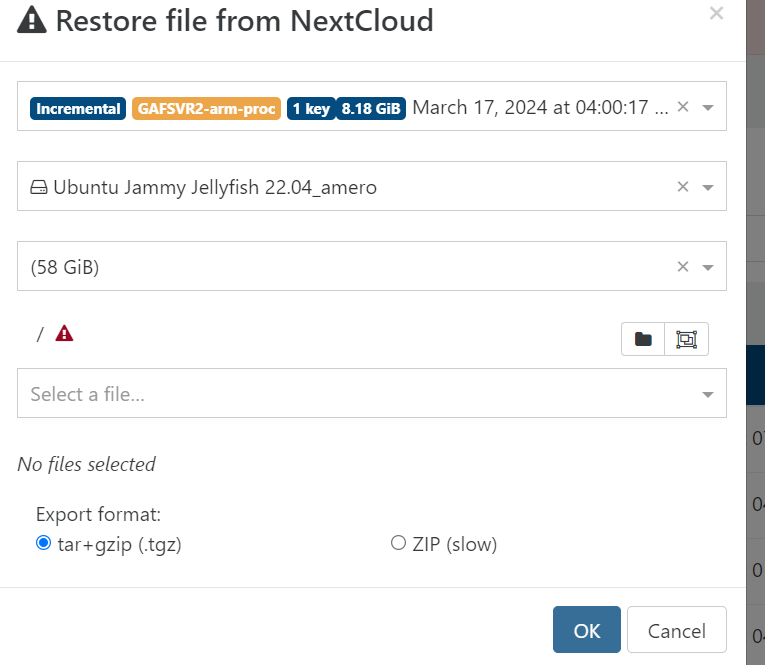

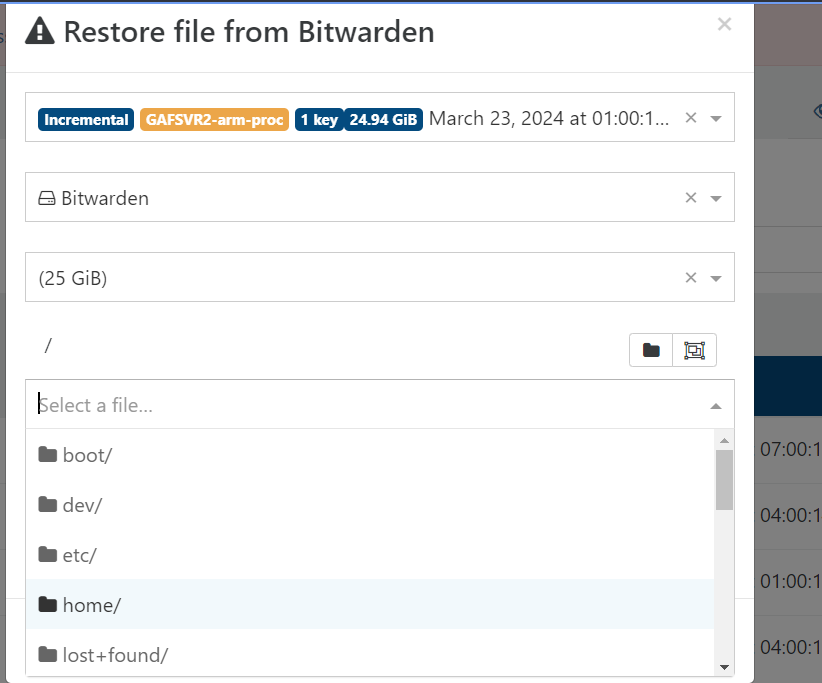

From the backup I created, I can list the partitions, but I can only select files from the boot partition (1.42 GB); the other two show the red exclamation mark.

The behaviour is the same with both XO from source and a freshly activated full trial of XOA (activated to test file-level restore).

Has anyone solved this yet?

-

@peo I am seeing the same issue on an Ubuntu 22.04 LTS VM set up with LVM.

-

@flakpyro What's the state of the LVM VG, its members and the LV? Has the VG been activated along with its LV?

Are the VG and LV on the live system the file is being restored to the same as those in the backup?

Are any (virtual) member disks missing from the VM, preventing the restore?

Are the relevant LVM packages installed, namely the following:

- lvm2

- lvm2-dbusd

- lvm2-lockd
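The activation and package questions above can be checked from inside the VM. A minimal Python sketch, assuming the JSON layout of `lvs --reportformat json -o vg_name,lv_name,lv_attr` (where the 5th character of `lv_attr` is the LV state, `a` meaning active) and the text printed by `dpkg-query -W -f='${Package} ${Status}\n'` on a Debian/Ubuntu system. The helper names and sample strings are hypothetical, and nothing here reflects what XO itself runs.

```python
import json

# Package names as listed in the post above (Debian/Ubuntu naming assumed).
REQUIRED = ("lvm2", "lvm2-dbusd", "lvm2-lockd")

# Hypothetical sample outputs, for illustration only.
SAMPLE_LVS = ('{"report": [{"lv": [{"vg_name": "ubuntu-vg", '
              '"lv_name": "ubuntu-lv", "lv_attr": "-wi-ao----"}]}]}')
SAMPLE_DPKG = "lvm2 install ok installed\n"


def inactive_lvs(lvs_json: str) -> list[str]:
    """Return "vg/lv" for every LV whose lv_attr state flag is not 'a'."""
    inactive = []
    for report in json.loads(lvs_json)["report"]:
        for lv in report.get("lv", []):
            attr = lv["lv_attr"]
            # Index 4 (5th character) of lv_attr holds the activation state.
            if len(attr) < 5 or attr[4] != "a":
                inactive.append(f"{lv['vg_name']}/{lv['lv_name']}")
    return inactive


def missing_packages(dpkg_output: str, required=REQUIRED) -> list[str]:
    """Return required packages not reported as "install ok installed"."""
    installed = set()
    for line in dpkg_output.splitlines():
        parts = line.split(None, 1)  # "<package> <status words>"
        if len(parts) == 2 and parts[1].strip() == "install ok installed":
            installed.add(parts[0])
    return [pkg for pkg in required if pkg not in installed]


if __name__ == "__main__":
    print(inactive_lvs(SAMPLE_LVS))       # prints []
    print(missing_packages(SAMPLE_DPKG))  # prints ['lvm2-dbusd', 'lvm2-lockd']
```

Packages that dpkg has never heard of do not appear in its output at all, which is why the sketch treats "not listed" the same as "not installed".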

-

@john-c

Here is the output of vgdisplay and lvdisplay from the VM being backed up.

    --- Volume group ---
    VG Name               ubuntu-vg
    System ID
    Format                lvm2
    Metadata Areas        1
    Metadata Sequence No  6
    VG Access             read/write
    VG Status             resizable
    MAX LV                0
    Cur LV                1
    Open LV               1
    Max PV                0
    Cur PV                1
    Act PV                1
    VG Size               <58.00 GiB
    PE Size               4.00 MiB
    Total PE              14847
    Alloc PE / Size       14847 / <58.00 GiB
    Free PE / Size        0 / 0
    VG UUID               iUJyAl-7Ltq-IvF5-xla3-RA1E-bYP5-QJABwy

    --- Logical volume ---
    LV Path                /dev/ubuntu-vg/ubuntu-lv
    LV Name                ubuntu-lv
    VG Name                ubuntu-vg
    LV UUID                bwEE3c-fkgc-nATq-czqZ-TkdK-xFA1-ULmRGz
    LV Write Access        read/write
    LV Creation host, time ubuntu-server, 2022-05-30 11:47:36 -0600
    LV Status              available
    # open                 1
    LV Size                <58.00 GiB
    Current LE             14847
    Segments               1
    Allocation             inherit
    Read ahead sectors     auto
    - currently set to     256
    Block device           253:0

It's basically a bog-standard Ubuntu install. The file system on the LV is XFS.

The active system and the backup should have exactly the same layout, and there are no missing virtual disks.

-


Does the UUID from the backup match the one from the live machine?

Does the VG name match between the backup and the live machine?
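Both questions can be answered by capturing `lvs --reportformat json -o vg_name,vg_uuid,lv_name,lv_uuid` on the live VM and on a fully restored copy, then diffing the two reports. A minimal Python sketch, assuming that JSON layout; `lv_identities`, `compare` and any restored-copy data are hypothetical. The `LIVE` sample reuses the VG and LV UUIDs from the vgdisplay/lvdisplay output posted above; no restored-copy report was posted in this thread.

```python
import json

# Live-side sample built from the vgdisplay/lvdisplay values posted above.
LIVE = json.dumps({"report": [{"lv": [{
    "vg_name": "ubuntu-vg", "vg_uuid": "iUJyAl-7Ltq-IvF5-xla3-RA1E-bYP5-QJABwy",
    "lv_name": "ubuntu-lv", "lv_uuid": "bwEE3c-fkgc-nATq-czqZ-TkdK-xFA1-ULmRGz",
}]}]})


def lv_identities(lvs_json: str) -> dict[str, tuple[str, str]]:
    """Map "vg/lv" to (vg_uuid, lv_uuid) from an lvs JSON report."""
    ids = {}
    for report in json.loads(lvs_json)["report"]:
        for lv in report.get("lv", []):
            key = f"{lv['vg_name']}/{lv['lv_name']}"
            ids[key] = (lv["vg_uuid"], lv["lv_uuid"])
    return ids


def compare(live_json: str, restored_json: str) -> list[str]:
    """List human-readable differences between two lvs reports."""
    live, restored = lv_identities(live_json), lv_identities(restored_json)
    problems = []
    for name in sorted(live.keys() | restored.keys()):
        if name not in restored:
            problems.append(f"{name}: missing from restored copy")
        elif name not in live:
            problems.append(f"{name}: only in restored copy")
        elif live[name] != restored[name]:
            problems.append(f"{name}: UUID mismatch {live[name]} != {restored[name]}")
    return problems


if __name__ == "__main__":
    print(compare(LIVE, LIVE))  # prints []
```

Keying on `vg/lv` names means a renamed VG or LV shows up as "missing" plus "only in restored copy" rather than as a UUID mismatch, so the same diff covers the VG-name question.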

-

@john-c Yep, nothing has been renamed with regard to the LV or VG names inside the VM. I have not made any recent changes to this VM or to the backup job itself.

I assume the UUID matches, since the VM is displayed by its name in the "VMs to backup" section of the backup job rather than just as a UUID.

-


I'm referring to the UUIDs of the VG, PV and LV on the disks used by the VM's OS, both on the live system and in the backup. If these change, it can cause problems when bringing the LVM VG back into operation.

As a result, mounting the LVs from that VG on the VM's Linux-based operating system will fail, so the root directory (/) would not be reachable, which is what several people are trying to restore into.

-

@john-c Do you know what would cause them to change? I am testing a restore from last night's backup, so I assume the UUIDs have not changed. However, I could do a full restore from last night's backup and compare to be sure.

-


Do the restore, then compare them to see whether they have changed.

If you (or XOA, while performing a requested operation) have done any of the following, the LVM UUIDs would change:

- Create a new LVM

- Edit Metadata Directly

- Delete and recreate

- Change Filesystem UUID

- Change the member disk's location address (the virtual bus the virtual disk is connected to)

Also, if actions in XOA or XCP-ng change the member disk's address as seen inside the VM, this would affect the metadata.

@florent Your employer has built the new Debian 12-based XOA since 2021; are the lvm2 packages and any relevant Node.js npm packages included in the appliance?
