XCP-ng 8.3 betas and RCs feedback 🚀

-

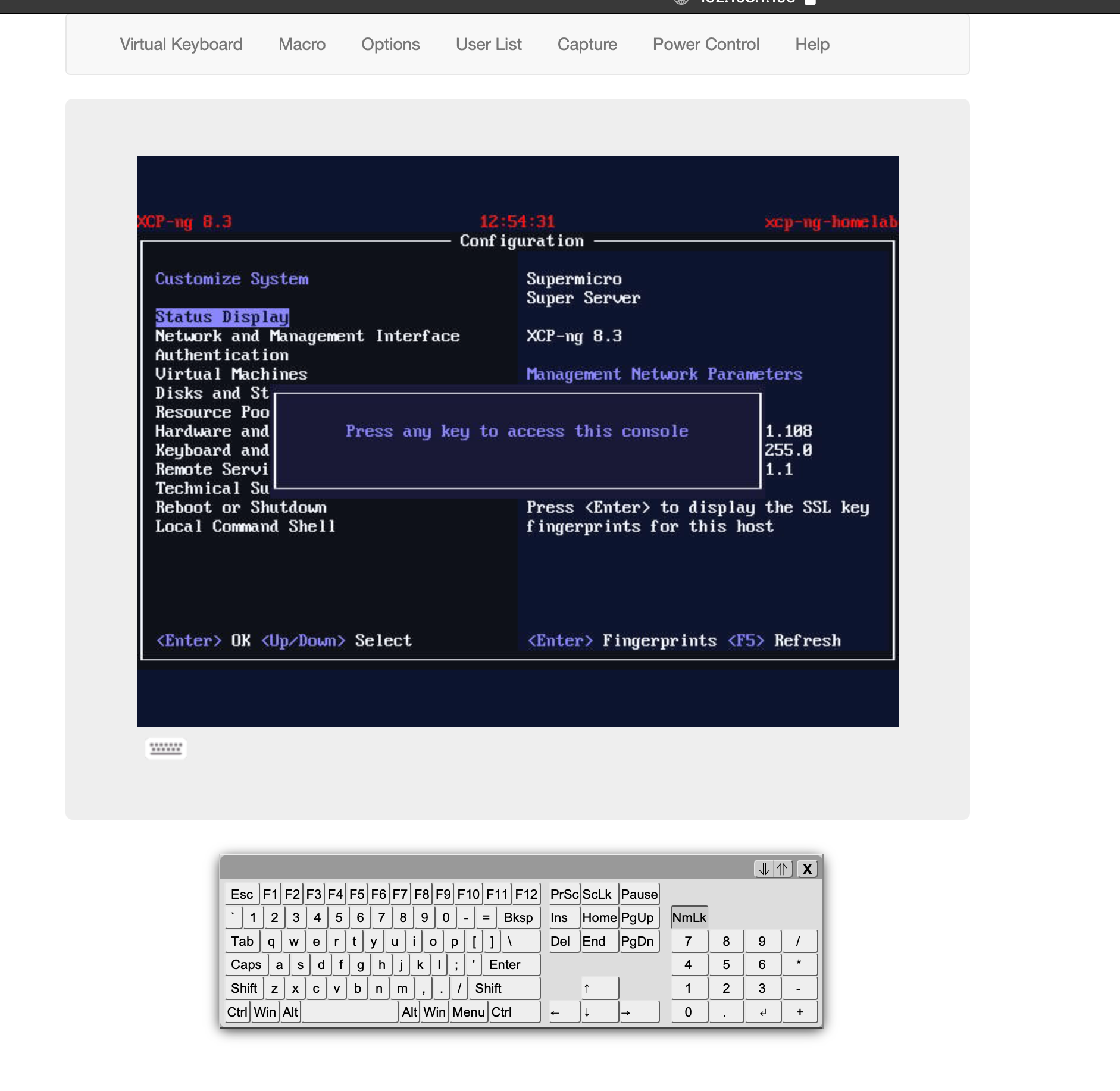

Weird incident here: my server froze for no reason that I can identify yet. I don't even know how long it has been frozen, since the VM I use every day is still working. I cannot ping the XCP-ng server; it is still registered in Xen Orchestra, but XO cannot connect to it. I did not install the 2 latest batches of updates on my XCP-ng 8.3 server. The weird thing is that most of my Linux VMs are still running and reachable!! The Windows VMs, on the other hand, are not reachable. Any recommendations for investigation before I reboot the host server? It is running on a Supermicro board; I can see the frozen main screen via iKVM, and it does not respond to keyboard input, not even from the virtual KVM keyboard.

-

I suppose you don't have any physical access to check on a screen?

-

@olivierlambert There is no physical screen attached to it. I am currently investigating the SSD that XCP-ng was booting from. I am guessing it just died on me. Will update if I can read any data from it.

- Are there any specific logs I should look at, if any are available?

- If I restart from scratch with a new disk, will I be able to recover my VMs, which are actually stored on other disks?

-

- kern.log, dmesg, the usual suspects.

- If you have a metadata backup, yes. Otherwise, you'll need to re-introduce the SR, then recreate your VMs and attach the existing disks to them (and without the metadata info, if your disks are all the same size, it could take some time to find out which is which).
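For the log question, here is a minimal sketch of how one might scan `kern.log` for disk or filesystem trouble once a shell on the host is reachable. The `scan_logs` helper and the pattern list are assumptions for illustration, not an official XCP-ng tool; adjust the patterns to your hardware.

```shell
# Sketch: scan host logs for signs of disk or filesystem trouble.
# PATTERN is an assumed list of common kernel messages; extend as needed.
PATTERN='I/O error|EXT4-fs error|blk_update_request|Medium Error|blocked for more than|Call Trace'

scan_logs() {
    # Usage: scan_logs FILE...
    # Prints matching lines prefixed with file name and line number.
    # Unreadable or missing files are skipped silently.
    for f in "$@"; do
        [ -r "$f" ] || continue
        grep -HnE "$PATTERN" "$f"
    done
    return 0
}

# On the host (dom0):
#   scan_logs /var/log/kern.log /var/log/kern.log.1
#   dmesg | grep -E "$PATTERN"
```

If the boot disk itself is failing, the logs on it may be incomplete, so an empty result does not rule out a disk problem.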

-

@olivierlambert I could access the SSD; the filesystem was corrupted. I don't think I have a backup at hand in case I am not able to boot at all. What files could I transfer to get all my VMs back to normal after a clean install on a new disk?

-

@olivierlambert System restarted. I'm not sure how to properly back up the XCP-ng server side, though. I ran a backup of XO metadata and pool parameters.

-

XCP-ng pool metadata backup is exactly what you needed in that case
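For anyone without Xen Orchestra at hand, a rough sketch of a manual pool database dump from dom0 follows. `xe pool-dump-database` is the standard xe command; the `BACKUP_DIR` location and the helper names are hypothetical, and the target should ideally not live on the boot disk.

```shell
# Sketch: dump the pool database to a dated file (run in dom0 on the pool master).
# BACKUP_DIR is a hypothetical location; prefer storage that is not the boot disk.
BACKUP_DIR="${BACKUP_DIR:-/root/pool-backups}"

pool_backup_path() {
    # Print a dated file name such as $BACKUP_DIR/pool-db-YYYY-MM-DD.dump
    printf '%s/pool-db-%s.dump\n' "$BACKUP_DIR" "$(date +%F)"
}

backup_pool_db() {
    target="$(pool_backup_path)"
    mkdir -p "$BACKUP_DIR" || return 1
    xe pool-dump-database file-name="$target" && echo "saved $target"
}

# After a reinstall, the dump can be checked before applying it:
#   xe pool-restore-database file-name=<path to dump> dry-run=true
```

Copy the resulting file off the host afterwards; a dump that sits only on the failing SSD does not help.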

-

@stormi

FWIW...one of the boxes where I installed the latest updates (from a few days ago) did not autostart any of the VMs. I had to manually start each of them.

-

Latest updates over ISO-installed 8.3 RC2 worked fine for me. I did experience one host in my three-host pool to which no VMs could be migrated. After looking at the networking from bash in DOM0, it showed that both 10G ports for the storage and migration networks were DOWN. These ports are on a genuine IBM-branded Intel X540-T2 card I bought used on eBay so it might have gone bad. Since the card has worked well for some time, I figured it couldn't hurt to re-seat it in the PCIe slot. Sure enough, that fixed it. Moral of the story: check the mundane stuff first; it's not always the fault of new updates.

-

haha nice catch, PCI reseat is like black magic sometimes

-

@archw and the other hosts did?

-

@stormi

Yes. I've not had a chance to reboot the host since then to see if something else is going on. Will do so tonight.

-

Hi,

I'm currently testing out RC2 with Ceph-backed RBD devices, which work perfectly for us on 8.2.1. After installation I tried to add an existing shared storage, without success. Then I tried to create a new one and ran into the following problem. As you can see, I can create a volume group manually without a problem.

xe sr-create fails:

    xe sr-create name-label="RC2StorageTest" shared=true type=lvm device-config:device=/dev/rbd0
    Error code: SR_BACKEND_FAILURE_77
    Error parameters: , Logical Volume group creation failed,

vgcreate from commandline works:

    vgcreate RC2StorageTest /dev/rbd0
      Physical volume "/dev/rbd0" successfully created.
      Volume group "RC2StorageTest" successfully created

If I repeat the xe sr-create after manually creating a VG the VG will be removed by xe sr-create, but is still failing with the same error.

Any idea where to look to solve this issue?
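One place that usually has more detail than the xe error is the storage manager log, `/var/log/SMlog`, in dom0. A small sketch for pulling the lines leading up to the failure; the `sr_failure_context` helper and the match patterns are assumptions, not part of XCP-ng:

```shell
# Sketch: show SMlog lines leading up to a failed SR operation.
# The patterns are assumptions about what the log contains; adjust after a first look.
SMLOG="${SMLOG:-/var/log/SMlog}"

sr_failure_context() {
    # Usage: sr_failure_context [LINES_BEFORE]
    # Prints each matching line with line numbers and the preceding context.
    grep -n -B "${1:-30}" -E 'vgcreate|pvcreate|FAILED|Raising exception|Traceback' "$SMLOG"
}

# Right after re-running the failing xe sr-create:
#   sr_failure_context 40 | tail -n 80
```

The exact command the SR driver ran, and its stderr, is often what differs from the manual vgcreate.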

-

@stormi

Just rebooted the problematic host. All the VMs autostarted just fine. Odd!

-

@stormi I see plug-late-sr is available now. Just finished testing it. Looks like it works just fine. Thanks.

-

Somewhere between 8.3b2 and 8.3rc1, Windows Server 2016 broke: it immediately hits a "kmode exception not handled" bluescreen on boot.

I had to downgrade back to 8.3b2 for an existing VM. I can reproduce the failure on a clean install of 2016 Server Essentials, using the Windows Server 2016 template. It installs OK until it reboots, and then I see the bluescreen immediately on each boot.

https://go.microsoft.com/fwlink/p/?LinkID=2195170&clcid=0x409&culture=en-us&country=US

It still fails even with latest patches, which I believe is 8.3rc2.

Any suggestions?

-

@mbven Strange, we have a few Windows Server 2016 servers remaining that seem to be working since the last batch of updates. I even tried to reboot one just to be sure. They are running "Microsoft Windows Server 2016 Datacenter". What CPU are your servers running? Any errors in xl dmesg when the crash occurs?

-

@mbven I too have Windows Server 2016 (with 2024-09 updates) running on current 8.3 RC2...

-

@flakpyro Thanks for the feedback. Just tried the eval iso of Windows Server 2016 Datacenter and it spun up ok with latest xcp-ng patches.

So it looks like it's a problem specifically with the Windows Server 2016 Essentials edition and 8.3rc1 and later.

Unfortunately that's the edition that I'm licensed for and use. It's failed on both an Intel 12700k and a Xeon E3-1275v3 with the same blue screen message.

-

After doing a yum update on one of my 8.3 RC1 servers and rebooting, there were no ethernet cards detected.

This happened from time to time in older versions of XCP-ng, but rebooting a few times and doing an emergency network reset fixed it before. This time it didn't.

Finally I reinstalled RC2 from ISO.

I have two HPE (ProLiant ML350 Gen10), and the master is the one showing this problem, the other one doesn't show this problem.

Doing a yum update on the secondary server, now promoted to master, worked like a charm.

Any ideas?