Rolling Pool Update - host took too long to restart

-

We are introducing an XO task to monitor the RPU process. That will make it easier to track the whole process.

-

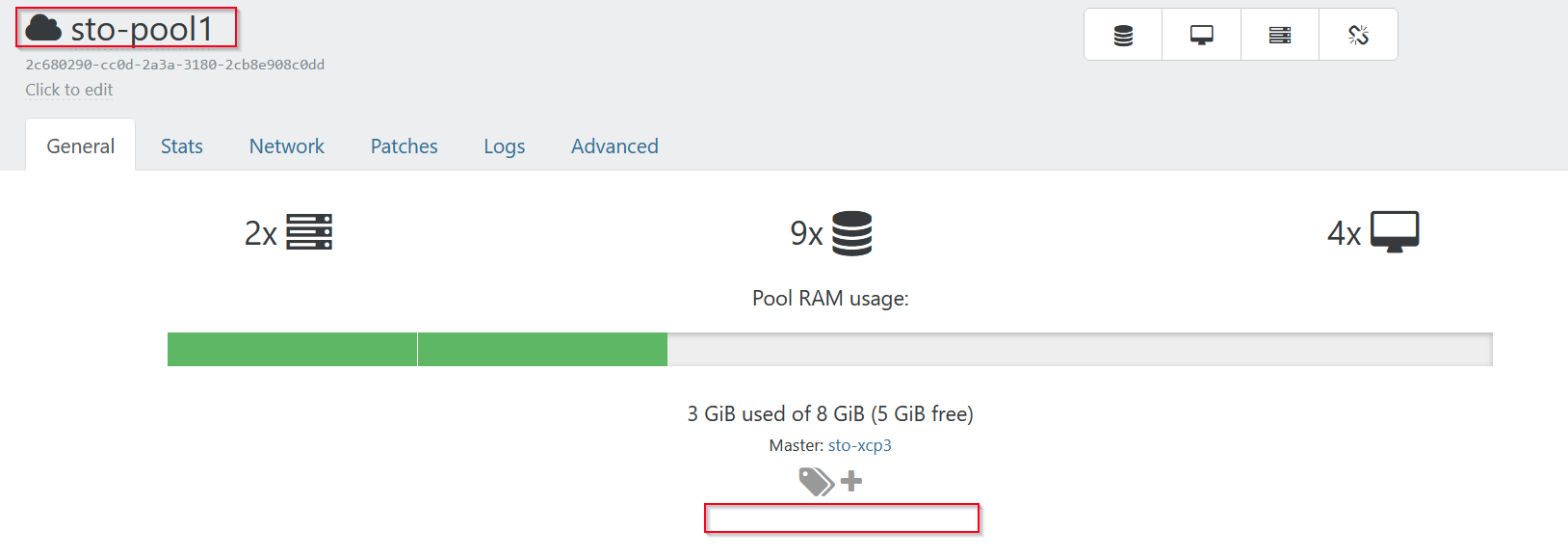

Next pool: almost empty, enough memory for rolling, reboot takes 5 min.

2nd host not updated.

-

@olivierlambert said in Rolling Pool Update - host took too long to restart:

We are introducing an XO task to monitor the RPU process. That will make it easier to track the whole process.

This should also be displayed in the pool overview to make sure other admins don't start other tasks by mistake.

In a perfect world there would be an indicative icon by the pool name and also a warning in the general pool overview with some kind of notification, like "Pool upgrade in progress - Wait for it to complete before starting new tasks" or similar, in a very red/orange/other visible color that you can't miss.

-

Good idea for XO 6 likely (because they are not XAPI but XO tasks and I don't think we planned to use the task to have an impact on XAPI objects). But that's a nice idea

Adding @pdonias in the loop

-

@olivierlambert I finally had a chance to apply patches to the two ProLiant servers with the 20 minute boot time and everything worked as expected.

-

Yay!! Thanks for the feedback @dsiminiuk !

-

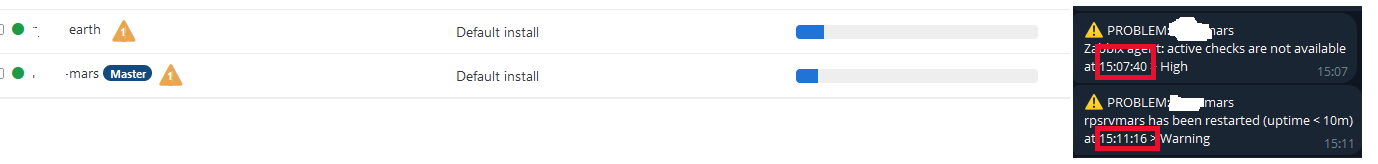

This still seems to be an issue for me.

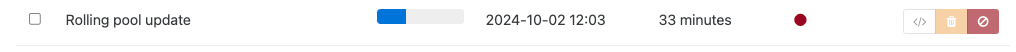

Running XO - CE 9321b

The last few updates on 2 different clusters have resulted in failed rolling updates, the most recent one today.

The odd part is that the server is back up in about 8 min and I can attach to it via XO. The system never reconnects to complete the upgrade process, resulting in having to manually apply patches, move VMs and restore HA.

Our `xo-server/config.toml` file has been updated so `restartHostTimeout = '60 minutes'` to try to overcome this, but to no avail.
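
To see how long each step actually ran, the `start` and `end` timestamps (milliseconds since the epoch) in the task log below can be subtracted. This is a minimal Python sketch: `SUBTASKS` is a trimmed excerpt holding only the names and timestamps copied from the log, and `duration_minutes` is a hypothetical helper, not part of XO.

```python
# Trimmed excerpt of the task log: names and timestamps (ms since epoch)
# are copied from the log; all other fields are omitted.
SUBTASKS = [
    {"name": "Evacuate", "start": 1727895835285, "end": 1727896526200},
    {"name": "Installing patches", "start": 1727896526200, "end": 1727896634080},
    {"name": "Restart", "start": 1727896634110, "end": 1727896634248},
    {"name": "Waiting for host to be up", "start": 1727896634248, "end": 1727897834282},
]


def duration_minutes(task: dict) -> float:
    """Return the task duration in minutes from its start/end timestamps."""
    return (task["end"] - task["start"]) / 60_000


# Print one line per sub-task with its duration.
for task in SUBTASKS:
    print(f"{task['name']:<28} {duration_minutes(task):6.2f} min")
```

The "Waiting for host to be up" step lasted 1,200,034 ms, i.e. about 20 minutes rather than the configured 60. That may mean the `restartHostTimeout` value was not picked up by the running xo-server, but this is only an inference from the timestamps and is not confirmed anywhere in this thread.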

{ "id": "0m1s8k827", "properties": { "poolId": "62d8471c-e515-0d7a-d77f-5ac38a945507", "poolName": "TESTING-POOL-01", "progress": 33, "name": "Rolling pool update", "userId": "61ea8f96-4e67-468f-a0cf-9d9711482a42" }, "start": 1727895825871, "status": "failure", "updatedAt": 1727897834288, "tasks": [ { "id": "go7y3ilbo9u", "properties": { "name": "Listing missing patches", "total": 3, "progress": 100 }, "start": 1727895825873, "status": "success", "tasks": [ { "id": "wesndzbzb0r", "properties": { "name": "Listing missing patches for host dd36b6f8-ffff-4310-aa29-66f312c83930", "hostId": "dd36b6f8-ffff-4310-aa29-66f312c83930", "hostName": "TESTING-03" }, "start": 1727895825873, "status": "success", "end": 1727895825874 }, { "id": "cib36mtqu1k", "properties": { "name": "Listing missing patches for host 6fb0707f-2655-4ef2-a0a2-13abe70e3077", "hostId": "6fb0707f-2655-4ef2-a0a2-13abe70e3077", "hostName": "TESTING-02" }, "start": 1727895825873, "status": "success", "end": 1727895825874 }, { "id": "9zk1dabnwuj", "properties": { "name": "Listing missing patches for host 1f4b8cd7-e9da-414e-8558-8059a3165b98", "hostId": "1f4b8cd7-e9da-414e-8558-8059a3165b98", "hostName": "TESTING-01" }, "start": 1727895825874, "status": "success", "end": 1727895825874 } ], "end": 1727895825874 }, { "id": "ayiur0vq5bn", "properties": { "name": "Updating and rebooting" }, "start": 1727895825874, "status": "failure", "tasks": [ { "id": "7l55urnrsdj", "properties": { "name": "Restarting hosts", "progress": 22 }, "start": 1727895835047, "status": "failure", "tasks": [ { "id": "y7ba4451qg", "properties": { "name": "Restarting host 1f4b8cd7-e9da-414e-8558-8059a3165b98", "hostId": "1f4b8cd7-e9da-414e-8558-8059a3165b98", "hostName": "TESTING-01" }, "start": 1727895835047, "status": "failure", "tasks": [ { "id": "qtt61zspfwl", "properties": { "name": "Evacuate", "hostId": "1f4b8cd7-e9da-414e-8558-8059a3165b98", "hostName": "TESTING-01" }, "start": 1727895835285, "status": "success", "end": 1727896526200 
}, { "id": "3i41dez1f3q", "properties": { "name": "Installing patches", "hostId": "1f4b8cd7-e9da-414e-8558-8059a3165b98", "hostName": "TESTING-01" }, "start": 1727896526200, "status": "success", "end": 1727896634080 }, { "id": "jmhv7qbyyid", "properties": { "name": "Restart", "hostId": "1f4b8cd7-e9da-414e-8558-8059a3165b98", "hostName": "TESTING-01" }, "start": 1727896634110, "status": "success", "end": 1727896634248 }, { "id": "ky40100any9", "properties": { "name": "Waiting for host to be up", "hostId": "1f4b8cd7-e9da-414e-8558-8059a3165b98", "hostName": "TESTING-01" }, "start": 1727896634248, "status": "failure", "end": 1727897834282, "result": { "message": "Host 1f4b8cd7-e9da-414e-8558-8059a3165b98 took too long to restart", "name": "Error", "stack": "Error: Host 1f4b8cd7-e9da-414e-8558-8059a3165b98 took too long to restart\n at file:///opt/xen-orchestra/packages/xo-server/src/xapi/mixins/pool.mjs:152:17\n at /opt/xen-orchestra/@vates/task/index.js:54:40\n at AsyncLocalStorage.run (node:async_hooks:346:14)\n at Task.runInside (/opt/xen-orchestra/@vates/task/index.js:169:41)\n at Task.run (/opt/xen-orchestra/@vates/task/index.js:153:31)\n at Function.run (/opt/xen-orchestra/@vates/task/index.js:54:27)\n at file:///opt/xen-orchestra/packages/xo-server/src/xapi/mixins/pool.mjs:142:24\n at Task.runInside (/opt/xen-orchestra/@vates/task/index.js:169:22)\n at Task.run (/opt/xen-orchestra/@vates/task/index.js:153:20)\n at file:///opt/xen-orchestra/packages/xo-server/src/xapi/mixins/pool.mjs:112:11\n at Task.runInside (/opt/xen-orchestra/@vates/task/index.js:169:22)\n at Task.run (/opt/xen-orchestra/@vates/task/index.js:153:20)\n at Xapi.rollingPoolReboot (file:///opt/xen-orchestra/packages/xo-server/src/xapi/mixins/pool.mjs:102:5)\n at file:///opt/xen-orchestra/packages/xo-server/src/xapi/mixins/patching.mjs:524:7\n at Task.runInside (/opt/xen-orchestra/@vates/task/index.js:169:22)\n at Task.run (/opt/xen-orchestra/@vates/task/index.js:153:20)\n at Task.runInside 
(/opt/xen-orchestra/@vates/task/index.js:169:22)\n at Task.run (/opt/xen-orchestra/@vates/task/index.js:153:20)\n at XenServers.rollingPoolUpdate (file:///opt/xen-orchestra/packages/xo-server/src/xo-mixins/xen-servers.mjs:703:5)\n at Xo.rollingUpdate (file:///opt/xen-orchestra/packages/xo-server/src/api/pool.mjs:243:3)\n at Task.runInside (/opt/xen-orchestra/@vates/task/index.js:169:22)\n at Task.run (/opt/xen-orchestra/@vates/task/index.js:153:20)" } } ], "end": 1727897834282, "result": { "message": "Host 1f4b8cd7-e9da-414e-8558-8059a3165b98 took too long to restart", "name": "Error", "stack": "Error: Host 1f4b8cd7-e9da-414e-8558-8059a3165b98 took too long to restart\n at file:///opt/xen-orchestra/packages/xo-server/src/xapi/mixins/pool.mjs:152:17\n at /opt/xen-orchestra/@vates/task/index.js:54:40\n at AsyncLocalStorage.run (node:async_hooks:346:14)\n at Task.runInside (/opt/xen-orchestra/@vates/task/index.js:169:41)\n at Task.run (/opt/xen-orchestra/@vates/task/index.js:153:31)\n at Function.run (/opt/xen-orchestra/@vates/task/index.js:54:27)\n at file:///opt/xen-orchestra/packages/xo-server/src/xapi/mixins/pool.mjs:142:24\n at Task.runInside (/opt/xen-orchestra/@vates/task/index.js:169:22)\n at Task.run (/opt/xen-orchestra/@vates/task/index.js:153:20)\n at file:///opt/xen-orchestra/packages/xo-server/src/xapi/mixins/pool.mjs:112:11\n at Task.runInside (/opt/xen-orchestra/@vates/task/index.js:169:22)\n at Task.run (/opt/xen-orchestra/@vates/task/index.js:153:20)\n at Xapi.rollingPoolReboot (file:///opt/xen-orchestra/packages/xo-server/src/xapi/mixins/pool.mjs:102:5)\n at file:///opt/xen-orchestra/packages/xo-server/src/xapi/mixins/patching.mjs:524:7\n at Task.runInside (/opt/xen-orchestra/@vates/task/index.js:169:22)\n at Task.run (/opt/xen-orchestra/@vates/task/index.js:153:20)\n at Task.runInside (/opt/xen-orchestra/@vates/task/index.js:169:22)\n at Task.run (/opt/xen-orchestra/@vates/task/index.js:153:20)\n at XenServers.rollingPoolUpdate 
(file:///opt/xen-orchestra/packages/xo-server/src/xo-mixins/xen-servers.mjs:703:5)\n at Xo.rollingUpdate (file:///opt/xen-orchestra/packages/xo-server/src/api/pool.mjs:243:3)\n at Task.runInside (/opt/xen-orchestra/@vates/task/index.js:169:22)\n at Task.run (/opt/xen-orchestra/@vates/task/index.js:153:20)" } } ], "end": 1727897834282, "result": { "message": "Host 1f4b8cd7-e9da-414e-8558-8059a3165b98 took too long to restart", "name": "Error", "stack": "Error: Host 1f4b8cd7-e9da-414e-8558-8059a3165b98 took too long to restart\n at file:///opt/xen-orchestra/packages/xo-server/src/xapi/mixins/pool.mjs:152:17\n at /opt/xen-orchestra/@vates/task/index.js:54:40\n at AsyncLocalStorage.run (node:async_hooks:346:14)\n at Task.runInside (/opt/xen-orchestra/@vates/task/index.js:169:41)\n at Task.run (/opt/xen-orchestra/@vates/task/index.js:153:31)\n at Function.run (/opt/xen-orchestra/@vates/task/index.js:54:27)\n at file:///opt/xen-orchestra/packages/xo-server/src/xapi/mixins/pool.mjs:142:24\n at Task.runInside (/opt/xen-orchestra/@vates/task/index.js:169:22)\n at Task.run (/opt/xen-orchestra/@vates/task/index.js:153:20)\n at file:///opt/xen-orchestra/packages/xo-server/src/xapi/mixins/pool.mjs:112:11\n at Task.runInside (/opt/xen-orchestra/@vates/task/index.js:169:22)\n at Task.run (/opt/xen-orchestra/@vates/task/index.js:153:20)\n at Xapi.rollingPoolReboot (file:///opt/xen-orchestra/packages/xo-server/src/xapi/mixins/pool.mjs:102:5)\n at file:///opt/xen-orchestra/packages/xo-server/src/xapi/mixins/patching.mjs:524:7\n at Task.runInside (/opt/xen-orchestra/@vates/task/index.js:169:22)\n at Task.run (/opt/xen-orchestra/@vates/task/index.js:153:20)\n at Task.runInside (/opt/xen-orchestra/@vates/task/index.js:169:22)\n at Task.run (/opt/xen-orchestra/@vates/task/index.js:153:20)\n at XenServers.rollingPoolUpdate (file:///opt/xen-orchestra/packages/xo-server/src/xo-mixins/xen-servers.mjs:703:5)\n at Xo.rollingUpdate 
(file:///opt/xen-orchestra/packages/xo-server/src/api/pool.mjs:243:3)\n at Task.runInside (/opt/xen-orchestra/@vates/task/index.js:169:22)\n at Task.run (/opt/xen-orchestra/@vates/task/index.js:153:20)" } } ], "end": 1727897834288, "result": { "message": "Host 1f4b8cd7-e9da-414e-8558-8059a3165b98 took too long to restart", "name": "Error", "stack": "Error: Host 1f4b8cd7-e9da-414e-8558-8059a3165b98 took too long to restart\n at file:///opt/xen-orchestra/packages/xo-server/src/xapi/mixins/pool.mjs:152:17\n at /opt/xen-orchestra/@vates/task/index.js:54:40\n at AsyncLocalStorage.run (node:async_hooks:346:14)\n at Task.runInside (/opt/xen-orchestra/@vates/task/index.js:169:41)\n at Task.run (/opt/xen-orchestra/@vates/task/index.js:153:31)\n at Function.run (/opt/xen-orchestra/@vates/task/index.js:54:27)\n at file:///opt/xen-orchestra/packages/xo-server/src/xapi/mixins/pool.mjs:142:24\n at Task.runInside (/opt/xen-orchestra/@vates/task/index.js:169:22)\n at Task.run (/opt/xen-orchestra/@vates/task/index.js:153:20)\n at file:///opt/xen-orchestra/packages/xo-server/src/xapi/mixins/pool.mjs:112:11\n at Task.runInside (/opt/xen-orchestra/@vates/task/index.js:169:22)\n at Task.run (/opt/xen-orchestra/@vates/task/index.js:153:20)\n at Xapi.rollingPoolReboot (file:///opt/xen-orchestra/packages/xo-server/src/xapi/mixins/pool.mjs:102:5)\n at file:///opt/xen-orchestra/packages/xo-server/src/xapi/mixins/patching.mjs:524:7\n at Task.runInside (/opt/xen-orchestra/@vates/task/index.js:169:22)\n at Task.run (/opt/xen-orchestra/@vates/task/index.js:153:20)\n at Task.runInside (/opt/xen-orchestra/@vates/task/index.js:169:22)\n at Task.run (/opt/xen-orchestra/@vates/task/index.js:153:20)\n at XenServers.rollingPoolUpdate (file:///opt/xen-orchestra/packages/xo-server/src/xo-mixins/xen-servers.mjs:703:5)\n at Xo.rollingUpdate (file:///opt/xen-orchestra/packages/xo-server/src/api/pool.mjs:243:3)\n at Task.runInside (/opt/xen-orchestra/@vates/task/index.js:169:22)\n at Task.run 
(/opt/xen-orchestra/@vates/task/index.js:153:20)" } } ], "end": 1727897834288, "result": { "message": "Host 1f4b8cd7-e9da-414e-8558-8059a3165b98 took too long to restart", "name": "Error", "stack": "Error: Host 1f4b8cd7-e9da-414e-8558-8059a3165b98 took too long to restart\n at file:///opt/xen-orchestra/packages/xo-server/src/xapi/mixins/pool.mjs:152:17\n at /opt/xen-orchestra/@vates/task/index.js:54:40\n at AsyncLocalStorage.run (node:async_hooks:346:14)\n at Task.runInside (/opt/xen-orchestra/@vates/task/index.js:169:41)\n at Task.run (/opt/xen-orchestra/@vates/task/index.js:153:31)\n at Function.run (/opt/xen-orchestra/@vates/task/index.js:54:27)\n at file:///opt/xen-orchestra/packages/xo-server/src/xapi/mixins/pool.mjs:142:24\n at Task.runInside (/opt/xen-orchestra/@vates/task/index.js:169:22)\n at Task.run (/opt/xen-orchestra/@vates/task/index.js:153:20)\n at file:///opt/xen-orchestra/packages/xo-server/src/xapi/mixins/pool.mjs:112:11\n at Task.runInside (/opt/xen-orchestra/@vates/task/index.js:169:22)\n at Task.run (/opt/xen-orchestra/@vates/task/index.js:153:20)\n at Xapi.rollingPoolReboot (file:///opt/xen-orchestra/packages/xo-server/src/xapi/mixins/pool.mjs:102:5)\n at file:///opt/xen-orchestra/packages/xo-server/src/xapi/mixins/patching.mjs:524:7\n at Task.runInside (/opt/xen-orchestra/@vates/task/index.js:169:22)\n at Task.run (/opt/xen-orchestra/@vates/task/index.js:153:20)\n at Task.runInside (/opt/xen-orchestra/@vates/task/index.js:169:22)\n at Task.run (/opt/xen-orchestra/@vates/task/index.js:153:20)\n at XenServers.rollingPoolUpdate (file:///opt/xen-orchestra/packages/xo-server/src/xo-mixins/xen-servers.mjs:703:5)\n at Xo.rollingUpdate (file:///opt/xen-orchestra/packages/xo-server/src/api/pool.mjs:243:3)\n at Task.runInside (/opt/xen-orchestra/@vates/task/index.js:169:22)\n at Task.run (/opt/xen-orchestra/@vates/task/index.js:153:20)" } }

-

So you are saying XO doesn't detect when the host is back online?

-

@olivierlambert It has not for me on 2 different clusters. And I made sure I was on the latest XO release before attempting.

This also occurred about a month ago, but I was not on the current release. So this time I updated to the current XO first, then proceeded to do the rolling pool update.

One of the clusters is local to the XO VM (the VM runs on the cluster).

The other is done over a VPN connection. Both failed with timeouts while the machines were up. I also verified this by being connected to the iLO on both systems. These are DL360 Gen10 systems with 2.5 - 5GB internet connections and at least 128GB of RAM, so no slow machines. All disks are also SSDs.

Not sure if any of that really helps, other than to point out that the systems are not slow, they were observed coming back online via iLO, and by going into Settings > Servers I could even reconnect the master node.

The pattern seems to be that it always migrates the VMs off the master node and reboots it, but never seems to reconnect afterwards. The only way to recover is to manually update each node, move the VMs, then go and reactivate HA.

This also started about 2 months ago, and it had been working wonderfully in the past. Maybe something changed?

-

It's hard to tell. Are you using XOA or XO from the sources?

-

@tuxpowered just to make sure, you're using the "Rolling pool update" button, right?

And then the master is patched, VMs migrated off and then no bueno, correct?

Did you happen to have any shared storage in this pool, or is all storage local storage?

-

@tuxpowered I'm wondering if this is a network connectivity issue.

When the rolling pool update stops, what does your route table look like on the master (can it reach the other node)?

Is your VPN layer 3 (routed), layer 2 (non-routed), IPSEC tunnel?
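
One quick way to rule out basic reachability from the XO machine is a plain TCP connection test against the master's management address. A minimal Python sketch, assuming XAPI is reached on the default HTTPS port 443; `can_reach` is a hypothetical helper and not something XO provides.

```python
import socket


def can_reach(host: str, port: int = 443, timeout_s: float = 3.0) -> bool:
    """Return True if a TCP connection to host:port succeeds within timeout_s."""
    try:
        # create_connection resolves the host and opens the TCP connection;
        # the context manager closes the socket again right away.
        with socket.create_connection((host, port), timeout=timeout_s):
            return True
    except OSError:
        # Covers refused connections, unreachable routes and timeouts.
        return False
```

Calling `can_reach("<master address>")` from the XO VM while the rolling pool update is stuck would show whether the port answers at all. It says nothing about whether the XAPI session itself is healthy.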

-

@olivierlambert said in Rolling Pool Update - host took too long to restart:

XO - CE, i.e. XO from source.

-

@nikade Both clusters have shared storage. Kind of a pre-req to have a cluster

Yes, both systems were online and working well. One is TrueNAS SCALE and the other is a QNAP. Both are massively overkill systems.

-

@dsiminiuk The local cluster has no VPN or anything like that. In fact it's all on the same network, so it doesn't even hit the firewall or internet.

The remote location yes that is over IPSec, but that tunnel never goes down.

When the first node reboots (the master), I can see that the system is back up in 5-8 min. If I go into XO > Settings > Servers and click the Enable/Disable status button to reconnect, it pops right up. Again, it does not resume migrating the other nodes.

If I leave it and wait for it to connect on its own, sometimes it does and sometimes it doesn't. (The same generally holds true when I reboot just a normal node not in HA.)

And the XOA - CE VM has 4 cores and 16GB of RAM. In the last year I have never used more than 6GB of RAM, CPU usage is less than 15%, and network peak is 18MiB (taken from the Stats tab, so those are clearly averaged out over the last year).

I haven't looked at the route table while the issue is happening, as there does not appear to be any network issue. Other nodes are all reachable and manageable as well. It's just the cluster's rolling update that seems to not reconnect. But on the next round of updates I will surely take a look.

-

We'll try to reproduce it internally. The code should try to reconnect every xx seconds, so it's weird that it doesn't work.

edit: adding @pdonias in the convo, he might ask some XO logs to see more in depth.
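
The behaviour described here (retry at a fixed interval until the host answers or a timeout expires) can be sketched as a simple polling loop. This is an illustrative Python sketch only, not XO's actual implementation: `wait_until_up`, `is_up`, and all parameter names are hypothetical, and the real retry interval is not stated in this thread.

```python
import time
from typing import Callable


def wait_until_up(
    is_up: Callable[[], bool],
    timeout_s: float,
    interval_s: float,
    clock: Callable[[], float] = time.monotonic,
    sleep: Callable[[float], None] = time.sleep,
) -> bool:
    """Poll is_up() every interval_s seconds until it returns True.

    Returns True as soon as the host answers, False once timeout_s has elapsed.
    """
    deadline = clock() + timeout_s
    while True:
        if is_up():
            return True
        if clock() >= deadline:
            # Corresponds to the "took too long to restart" outcome.
            return False
        sleep(interval_s)
```

The `clock` and `sleep` parameters are injectable so the loop can be exercised without real waiting. Note that a loop like this only succeeds if the check itself notices the host coming back, which is the part in question in this thread.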

-

@tuxpowered said in Rolling Pool Update - host took too long to restart:

@nikade Both clusters have shared storage. Kind of a pre-req to have a cluster

Yes, both systems were online and working well. One is TrueNAS SCALE and the other is a QNAP. Both are massively overkill systems.

Yeah, I was hoping that you were using shared storage, but I've actually seen people using clusters/pools without shared storage, so I felt I had to ask.

-

@tuxpowered said in Rolling Pool Update - host took too long to restart:

When the first node reboots (the master), I can see that the system is back up in 5-8 min. If I go into XO > Settings > Servers and click the Enable/Disable status button to reconnect, it pops right up. Again, it does not resume migrating the other nodes.

That is what I am seeing also, logged here https://xcp-ng.org/forum/topic/9683/rolling-pool-update-incomplete