Lots of performance alerts after upgrading XO to commit aa490

-

Haha no problem

Enjoy your week-end!

-

@olivierlambert

Crap, I need an extension of the trial period to set up the email and alerts in XOA.

-

- I haven't tested XOA yet. I need a new trial period.

- I have not changed anything except installing the host updates that showed up in XO and running the ronivay script.

- Testing the guide to use Git bisect

More errors

It seems I built this XO on another host, with a different IP from the one I use now.

2024-09-21T17:05:32.024Z xo:xo-mixins:xen-servers WARN failed to connect to XenServer {

host: '192.168.11.29',

It should have been .22.

Here is the full story:

    root@XO:~# cd /opt/xo/xo-src/xen-orchestra
    root@XO:/opt/xo/xo-src/xen-orchestra# git checkout master
    git pull --ff-only
    Already on 'master'
    Your branch is up to date with 'origin/master'.
    remote: Enumerating objects: 60, done.
    remote: Counting objects: 100% (60/60), done.
    remote: Compressing objects: 100% (9/9), done.
    remote: Total 60 (delta 47), reused 60 (delta 47), pack-reused 0 (from 0)
    Unpacking objects: 100% (60/60), 8.30 KiB | 58.00 KiB/s, done.
    From https://github.com/vatesfr/xen-orchestra
     + eba6c5d2a...ce0408a80 web-core/vts-link -> origin/web-core/vts-link (forced update)
     + 1a8ea782a...b8e88f3ab xo-collection-jest-to-node-core-test -> origin/xo-collection-jest-to-node-core-test (forced update)
     + e39f4e781...301ad2372 xo6/counter-component-v2 -> origin/xo6/counter-component-v2 (forced update)
    Already up to date.
    root@XO:/opt/xo/xo-src/xen-orchestra# git bisect start
    status: waiting for both good and bad commits
    root@XO:/opt/xo/xo-src/xen-orchestra# git bisect bad
    status: waiting for good commit(s), bad commit known
    root@XO:/opt/xo/xo-src/xen-orchestra# git bisect good f9220cd272674ef2f0403c9b784c955783fae334
    Bisecting: 105 revisions left to test after this (roughly 7 steps)
    [9d196c4211a35d4da9fbfe4145fb700c8087fd3e] feat(xo-server/rest-api/dashboard): add alarms info (#7914)
    root@XO:/opt/xo/xo-src/xen-orchestra# yarn; yarn build
    yarn install v1.22.22
    [1/5] Validating package.json...
    [2/5] Resolving packages...
    [3/5] Fetching packages...
    [4/5] Linking dependencies...
    warning "@commitlint/cli > @commitlint/load > cosmiconfig-typescript-loader@5.0.0" has unmet peer dependency "@types/node@*".
    warning "@commitlint/cli > @commitlint/load > cosmiconfig-typescript-loader@5.0.0" has unmet peer dependency "typescript@>=4".
    warning "@typescript-eslint/eslint-plugin > ts-api-utils@1.3.0" has unmet peer dependency "typescript@>=4.2.0".
    warning " > @xen-orchestra/web-core@0.0.5" has unmet peer dependency "pinia@^2.1.7".
    warning " > @xen-orchestra/web-core@0.0.5" has incorrect peer dependency "vue@^3.4.13".
    warning " > @xen-orchestra/web-core@0.0.5" has unmet peer dependency "vue-i18n@^9.9.0".
    warning " > @xen-orchestra/web-core@0.0.5" has unmet peer dependency "vue-router@^4.4.0".
    warning "workspace-aggregator-d6337d82-43ae-4757-801a-58e0e4bdc6ef > @vates/event-listeners-manager > tap > @tapjs/test > @isaacs/ts-node-temp-fork-for-pr-2009@10.9.7" has unmet peer dependency "@types/node@*".
    warning "workspace-aggregator-d6337d82-43ae-4757-801a-58e0e4bdc6ef > @vates/event-listeners-manager > tap > @tapjs/asserts > tcompare > react-element-to-jsx-string@15.0.0" has unmet peer dependency "react@^0.14.8 || ^15.0.1 || ^16.0.0 || ^17.0.1 || ^18.0.0".
    warning "workspace-aggregator-d6337d82-43ae-4757-801a-58e0e4bdc6ef > @vates/event-listeners-manager > tap > @tapjs/asserts > tcompare > react-element-to-jsx-string@15.0.0" has unmet peer dependency "react-dom@^0.14.8 || ^15.0.1 || ^16.0.0 || ^17.0.1 || ^18.0.0".
    warning Workspaces can only be enabled in private projects.
    [5/5] Building fresh packages...
    $ husky install
    husky - Git hooks installed
    Done in 121.66s.
    yarn run v1.22.22
    $ TURBO_TELEMETRY_DISABLED=1 turbo run build --filter xo-server --filter xo-server-'*' --filter xo-web
    • Packages in scope: xo-server, xo-server-audit, xo-server-auth-github, xo-server-auth-google, xo-server-auth-ldap, xo-server-auth-oidc, xo-server-auth-saml, xo-server-backup-reports, xo-server-load-balancer, xo-server-netbox, xo-server-perf-alert, xo-server-sdn-controller, xo-server-test, xo-server-test-plugin, xo-server-transport-email, xo-server-transport-icinga2, xo-server-transport-nagios, xo-server-transport-slack, xo-server-transport-xmpp, xo-server-usage-report, xo-server-web-hooks, xo-web
    • Running build in 22 packages
    • Remote caching disabled
    Tasks: 25 successful, 25 total
    Cached: 0 cached, 25 total
    Time: 2m41.126s
    Done in 161.48s.
    root@XO:/opt/xo/xo-src/xen-orchestra# ./packages/xo-server/dist/cli.mjs
    2024-09-21T17:05:28.333Z xo:main INFO Configuration loaded.
    2024-09-21T17:05:28.369Z xo:main WARN Web server could not listen on http://localhost:80 {
      error: Error: listen EADDRINUSE: address already in use :::80
          at Server.setupListenHandle [as _listen2] (node:net:1817:16)
          at listenInCluster (node:net:1865:12)
          at Server.listen (node:net:1953:7)
          at Server.listen (/opt/xo/xo-src/xen-orchestra/node_modules/http-server-plus/index.js:171:12)
          at makeWebServerListen (file:///opt/xo/xo-src/xen-orchestra/packages/xo-server/src/index.mjs:508:37)
          at Array.<anonymous> (file:///opt/xo/xo-src/xen-orchestra/packages/xo-server/src/index.mjs:532:5)
          at Function.from (<anonymous>)
          at asyncMap (/opt/xo/xo-src/xen-orchestra/@xen-orchestra/async-map/index.js:23:28)
          at createWebServer (file:///opt/xo/xo-src/xen-orchestra/packages/xo-server/src/index.mjs:531:9)
          at main (file:///opt/xo/xo-src/xen-orchestra/packages/xo-server/src/index.mjs:810:27) {
        code: 'EADDRINUSE', errno: -98, syscall: 'listen', address: '::', port: 80, niceAddress: 'http://localhost:80'
      }
    }
    2024-09-21T17:05:28.372Z xo:main WARN Address already in use.
    2024-09-21T17:05:28.868Z xo:mixins:hooks WARN start failure {
      error: Error: spawn xenstore-read ENOENT
          at Process.ChildProcess._handle.onexit (node:internal/child_process:284:19)
          at onErrorNT (node:internal/child_process:477:16)
          at processTicksAndRejections (node:internal/process/task_queues:82:21) {
        errno: -2, code: 'ENOENT', syscall: 'spawn xenstore-read', path: 'xenstore-read', spawnargs: [ 'vm' ], cmd: 'xenstore-read vm'
      }
    }
    2024-09-21T17:05:30.142Z xo:main INFO Setting up /robots.txt → /opt/xo/xo-src/xen-orchestra/packages/xo-server/robots.txt
    2024-09-21T17:05:30.236Z xo:main INFO Setting up / → /opt/xo/xo-web/dist/
    2024-09-21T17:05:30.237Z xo:main INFO Setting up /v6 → /opt/xo/xo-src/xen-orchestra/@xen-orchestra/web/dist
    2024-09-21T17:05:30.241Z xo:plugin INFO register audit
    2024-09-21T17:05:30.242Z xo:plugin INFO register auth-github
    2024-09-21T17:05:30.243Z xo:plugin INFO register auth-google
    2024-09-21T17:05:30.244Z xo:plugin INFO register auth-ldap
    2024-09-21T17:05:30.245Z xo:plugin INFO register auth-oidc
    2024-09-21T17:05:30.247Z xo:plugin INFO register auth-saml
    2024-09-21T17:05:30.248Z xo:plugin INFO register backup-reports
    2024-09-21T17:05:30.249Z xo:plugin INFO register load-balancer
    2024-09-21T17:05:30.250Z xo:plugin INFO register netbox
    2024-09-21T17:05:30.251Z xo:plugin INFO register perf-alert
    2024-09-21T17:05:30.252Z xo:plugin INFO register sdn-controller
    2024-09-21T17:05:30.253Z xo:plugin INFO register test
    2024-09-21T17:05:30.255Z xo:plugin INFO register test-plugin
    2024-09-21T17:05:30.255Z xo:plugin INFO register transport-email
    2024-09-21T17:05:30.256Z xo:plugin INFO register transport-icinga2
    2024-09-21T17:05:30.257Z xo:plugin INFO register transport-nagios
    2024-09-21T17:05:30.258Z xo:plugin INFO register transport-slack
    2024-09-21T17:05:30.259Z xo:plugin INFO register transport-xmpp
    2024-09-21T17:05:30.260Z xo:plugin INFO register usage-report
    2024-09-21T17:05:30.261Z xo:plugin INFO register web-hooks
    2024-09-21T17:05:30.263Z xo:plugin INFO failed register test
    2024-09-21T17:05:30.263Z xo:plugin INFO Cannot find module '/opt/xo/xo-src/xen-orchestra/packages/xo-server-test/dist'. Please verify that the package.json has a valid "main" entry {
      error: Error: Cannot find module '/opt/xo/xo-src/xen-orchestra/packages/xo-server-test/dist'. Please verify that the package.json has a valid "main" entry
          at tryPackage (node:internal/modules/cjs/loader:443:19)
          at Function.Module._findPath (node:internal/modules/cjs/loader:711:18)
          at Function.Module._resolveFilename (node:internal/modules/cjs/loader:1126:27)
          at requireResolve (node:internal/modules/helpers:188:19)
          at Xo.call (file:///opt/xo/xo-src/xen-orchestra/packages/xo-server/src/index.mjs:354:32)
          at Xo.call (file:///opt/xo/xo-src/xen-orchestra/packages/xo-server/src/index.mjs:406:25)
          at from (file:///opt/xo/xo-src/xen-orchestra/packages/xo-server/src/index.mjs:442:95)
          at Function.from (<anonymous>)
          at registerPlugins (file:///opt/xo/xo-src/xen-orchestra/packages/xo-server/src/index.mjs:442:27)
          at main (file:///opt/xo/xo-src/xen-orchestra/packages/xo-server/src/index.mjs:921:5) {
        code: 'MODULE_NOT_FOUND', path: '/opt/xo/xo-src/xen-orchestra/packages/xo-server-test/package.json', requestPath: '/opt/xo/xo-src/xen-orchestra/packages/xo-server-test'
      }
    }
    strict mode: required property "discoveryURL" is not defined at "#/anyOf/0" (strictRequired)
    strict mode: missing type "object" for keyword "required" at "#/anyOf/1/properties/advanced" (strictTypes)
    strict mode: required property "authorizationURL" is not defined at "#/anyOf/1/properties/advanced" (strictRequired)
    strict mode: required property "issuer" is not defined at "#/anyOf/1/properties/advanced" (strictRequired)
    strict mode: required property "userInfoURL" is not defined at "#/anyOf/1/properties/advanced" (strictRequired)
    strict mode: required property "tokenURL" is not defined at "#/anyOf/1/properties/advanced" (strictRequired)
    2024-09-21T17:05:31.776Z xo:plugin INFO successfully register auth-github
    2024-09-21T17:05:31.777Z xo:plugin INFO successfully register auth-google
    2024-09-21T17:05:31.777Z xo:plugin INFO successfully register auth-ldap
    2024-09-21T17:05:31.777Z xo:plugin INFO successfully register auth-oidc
    2024-09-21T17:05:31.777Z xo:plugin INFO successfully register auth-saml
    2024-09-21T17:05:31.777Z xo:plugin INFO successfully register netbox
    2024-09-21T17:05:31.777Z xo:plugin INFO successfully register test-plugin
    2024-09-21T17:05:31.777Z xo:plugin INFO successfully register transport-icinga2
    2024-09-21T17:05:31.777Z xo:plugin INFO successfully register transport-nagios
    2024-09-21T17:05:31.777Z xo:plugin INFO successfully register transport-slack
    2024-09-21T17:05:31.777Z xo:plugin INFO successfully register transport-xmpp
    2024-09-21T17:05:31.777Z xo:plugin INFO successfully register web-hooks
    2024-09-21T17:05:31.777Z xo:plugin INFO successfully register usage-report
    2024-09-21T17:05:31.777Z xo:plugin INFO successfully register backup-reports
    2024-09-21T17:05:31.778Z xo:plugin INFO successfully register transport-email
    2024-09-21T17:05:31.778Z xo:plugin INFO successfully register load-balancer
    2024-09-21T17:05:31.787Z xo:plugin INFO successfully register audit
    2024-09-21T17:05:31.787Z xo:plugin INFO successfully register perf-alert
    2024-09-21T17:05:31.799Z xo:plugin INFO successfully register sdn-controller
    2024-09-21T17:05:32.024Z xo:xo-mixins:xen-servers WARN failed to connect to XenServer {
      host: '192.168.11.29',
      error: Error: connect EHOSTUNREACH 192.168.11.29:443
          at TCPConnectWrap.afterConnect [as oncomplete] (node:net:1555:16)
          at TCPConnectWrap.callbackTrampoline (node:internal/async_hooks:128:17) {
        errno: -113, code: 'EHOSTUNREACH', syscall: 'connect', address: '192.168.11.29', port: 443,
        call: { method: 'session.login_with_password', params: '* obfuscated *' }
      }
    }

And there it stops.
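For readers wondering why git reports 105 revisions left but only about 7 steps: bisecting halves the candidate range after every tested build. Below is a minimal sketch of that idea, not git's actual algorithm (real history is a graph, and git's estimate is computed differently). The commit names `c0`..`c105`, the culprit `c73`, and the names `first_bad` and `is_bad` are all hypothetical. In the real workflow each `is_bad` call is a manual `yarn; yarn build` followed by a test of the running server.

```python
import math
from typing import Callable, Sequence, Tuple


def first_bad(commits: Sequence[str], is_bad: Callable[[str], bool]) -> Tuple[str, int]:
    """Binary-search an oldest-to-newest commit list for the first bad commit.

    Assumes the last commit is bad and that every commit after the first
    bad one is bad too. Returns (first bad commit, number of builds tested).
    """
    lo, hi = 0, len(commits) - 1
    tests = 0
    while lo < hi:
        mid = (lo + hi) // 2
        tests += 1
        if is_bad(commits[mid]):
            hi = mid          # culprit is at mid or earlier
        else:
            lo = mid + 1      # culprit is after mid
    return commits[lo], tests


# Hypothetical history: 106 commits, regression introduced in "c73".
commits = [f"c{i}" for i in range(106)]
culprit, tests = first_bad(commits, lambda c: int(c[1:]) >= 73)
print(culprit, tests, "upper bound:", math.ceil(math.log2(len(commits))))
```

The upper bound printed at the end is the base-2 logarithm of the list length, rounded up, which is why doubling the number of candidate commits only adds one more build to test.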

This XO server was built with the ronivay script.

I will install a new XO on this host.

-

I have built a new XO with the ronivay-script and got almost the same result

I got a warning: Address already in use. So I stopped the xo-server service.
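The "Address already in use" warning (`EADDRINUSE` on port 80 in the log) means another process, here the already running xo-server service, was listening on that port. A small sketch of checking this before starting `cli.mjs` by hand is shown below. `port_in_use` is a hypothetical helper, and probing `127.0.0.1` is an assumption (the log shows the server binding to `::`).

```python
import socket


def port_in_use(port: int, host: str = "127.0.0.1") -> bool:
    """Return True if a TCP connection to host:port succeeds,
    i.e. some process is already listening there.
    Hypothetical helper; the host default is an assumption."""
    with socket.socket(socket.AF_INET, socket.SOCK_STREAM) as probe:
        probe.settimeout(0.5)
        # connect_ex returns 0 on success and an errno value otherwise.
        return probe.connect_ex((host, port)) == 0


if __name__ == "__main__":
    # Port 80 is the port from the log above.
    print("port 80 busy:", port_in_use(80))
```

If this reports the port as busy, stopping the service first (as done with `systemctl stop xo-server` in the next run) frees it for the manual start.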

It didn't help. Here is the output:

    root@XO-test:~# /bin/systemctl stop xo-server
    root@XO-test:~# cd /opt/xo/xo-src/xen-orchestra
    root@XO-test:/opt/xo/xo-src/xen-orchestra# git checkout master
    Previous HEAD position was 9d196c421 feat(xo-server/rest-api/dashboard): add alarms info (#7914)
    Switched to branch 'master'
    Your branch is up to date with 'origin/master'.
    root@XO-test:/opt/xo/xo-src/xen-orchestra# git pull --ff-only
    Already up to date.
    root@XO-test:/opt/xo/xo-src/xen-orchestra# git bisect start
    Already on 'master'
    Your branch is up to date with 'origin/master'.
    status: waiting for both good and bad commits
    root@XO-test:/opt/xo/xo-src/xen-orchestra# git bisect bad
    status: waiting for good commit(s), bad commit known
    root@XO-test:/opt/xo/xo-src/xen-orchestra# git bisect good f9220cd272674ef2f0403c9b784c955783fae334
    Bisecting: 105 revisions left to test after this (roughly 7 steps)
    [9d196c4211a35d4da9fbfe4145fb700c8087fd3e] feat(xo-server/rest-api/dashboard): add alarms info (#7914)
    root@XO-test:/opt/xo/xo-src/xen-orchestra# yarn; yarn build
    yarn install v1.22.22
    [1/5] Validating package.json...
    [2/5] Resolving packages...
    success Already up-to-date.
    $ husky install
    husky - Git hooks installed
    Done in 2.09s.
    yarn run v1.22.22
    $ TURBO_TELEMETRY_DISABLED=1 turbo run build --filter xo-server --filter xo-server-'*' --filter xo-web
    • Packages in scope: xo-server, xo-server-audit, xo-server-auth-github, xo-server-auth-google, xo-server-auth-ldap, xo-server-auth-oidc, xo-server-auth-saml, xo-server-backup-reports, xo-server-load-balancer, xo-server-netbox, xo-server-perf-alert, xo-server-sdn-controller, xo-server-test, xo-server-test-plugin, xo-server-transport-email, xo-server-transport-icinga2, xo-server-transport-nagios, xo-server-transport-slack, xo-server-transport-xmpp, xo-server-usage-report, xo-server-web-hooks, xo-web
    • Running build in 22 packages
    • Remote caching disabled
    Tasks: 25 successful, 25 total
    Cached: 25 cached, 25 total
    Time: 2.395s >>> FULL TURBO
    Done in 2.87s.
    root@XO-test:/opt/xo/xo-src/xen-orchestra# ./packages/xo-server/dist/cli.mjs
    2024-09-21T19:26:06.785Z xo:main INFO Configuration loaded.
    2024-09-21T19:26:06.792Z xo:main INFO Web server listening on http://[::]:80
    2024-09-21T19:26:07.308Z xo:mixins:hooks WARN start failure {
      error: Error: spawn xenstore-read ENOENT
          at Process.ChildProcess._handle.onexit (node:internal/child_process:286:19)
          at onErrorNT (node:internal/child_process:484:16)
          at processTicksAndRejections (node:internal/process/task_queues:82:21) {
        errno: -2, code: 'ENOENT', syscall: 'spawn xenstore-read', path: 'xenstore-read', spawnargs: [ 'vm' ], cmd: 'xenstore-read vm'
      }
    }
    2024-09-21T19:26:07.707Z xo:main INFO Setting up /robots.txt → /opt/xo/xo-src/xen-orchestra/packages/xo-server/robots.txt
    2024-09-21T19:26:07.800Z xo:main INFO Setting up / → /opt/xo/xo-web/dist/
    2024-09-21T19:26:07.800Z xo:main INFO Setting up /v6 → /opt/xo/xo-src/xen-orchestra/@xen-orchestra/web/dist
    2024-09-21T19:26:07.862Z xo:plugin INFO register audit
    2024-09-21T19:26:07.863Z xo:plugin INFO register auth-github
    2024-09-21T19:26:07.864Z xo:plugin INFO register auth-google
    2024-09-21T19:26:07.864Z xo:plugin INFO register auth-ldap
    2024-09-21T19:26:07.865Z xo:plugin INFO register auth-oidc
    2024-09-21T19:26:07.865Z xo:plugin INFO register auth-saml
    2024-09-21T19:26:07.865Z xo:plugin INFO register backup-reports
    2024-09-21T19:26:07.866Z xo:plugin INFO register load-balancer
    2024-09-21T19:26:07.866Z xo:plugin INFO register netbox
    2024-09-21T19:26:07.866Z xo:plugin INFO register perf-alert
    2024-09-21T19:26:07.867Z xo:plugin INFO register sdn-controller
    2024-09-21T19:26:07.867Z xo:plugin INFO register test
    2024-09-21T19:26:07.868Z xo:plugin INFO register test-plugin
    2024-09-21T19:26:07.868Z xo:plugin INFO register transport-email
    2024-09-21T19:26:07.868Z xo:plugin INFO register transport-icinga2
    2024-09-21T19:26:07.869Z xo:plugin INFO register transport-nagios
    2024-09-21T19:26:07.869Z xo:plugin INFO register transport-slack
    2024-09-21T19:26:07.870Z xo:plugin INFO register transport-xmpp
    2024-09-21T19:26:07.870Z xo:plugin INFO register usage-report
    2024-09-21T19:26:07.870Z xo:plugin INFO register web-hooks
    2024-09-21T19:26:07.871Z xo:plugin INFO failed register test
    2024-09-21T19:26:07.871Z xo:plugin INFO Cannot find module '/opt/xo/xo-src/xen-orchestra/packages/xo-server-test/dist'. Please verify that the package.json has a valid "main" entry {
      error: Error: Cannot find module '/opt/xo/xo-src/xen-orchestra/packages/xo-server-test/dist'. Please verify that the package.json has a valid "main" entry
          at tryPackage (node:internal/modules/cjs/loader:487:19)
          at Function.Module._findPath (node:internal/modules/cjs/loader:771:18)
          at Function.Module._resolveFilename (node:internal/modules/cjs/loader:1211:27)
          at requireResolve (node:internal/modules/helpers:190:19)
          at Xo.call (file:///opt/xo/xo-src/xen-orchestra/packages/xo-server/src/index.mjs:354:32)
          at Xo.call (file:///opt/xo/xo-src/xen-orchestra/packages/xo-server/src/index.mjs:406:25)
          at from (file:///opt/xo/xo-src/xen-orchestra/packages/xo-server/src/index.mjs:442:95)
          at Function.from (<anonymous>)
          at registerPlugins (file:///opt/xo/xo-src/xen-orchestra/packages/xo-server/src/index.mjs:442:27)
          at main (file:///opt/xo/xo-src/xen-orchestra/packages/xo-server/src/index.mjs:921:5) {
        code: 'MODULE_NOT_FOUND', path: '/opt/xo/xo-src/xen-orchestra/packages/xo-server-test/package.json', requestPath: '/opt/xo/xo-src/xen-orchestra/packages/xo-server-test'
      }
    }
    strict mode: required property "discoveryURL" is not defined at "#/anyOf/0" (strictRequired)
    strict mode: missing type "object" for keyword "required" at "#/anyOf/1/properties/advanced" (strictTypes)
    strict mode: required property "authorizationURL" is not defined at "#/anyOf/1/properties/advanced" (strictRequired)
    strict mode: required property "issuer" is not defined at "#/anyOf/1/properties/advanced" (strictRequired)
    strict mode: required property "userInfoURL" is not defined at "#/anyOf/1/properties/advanced" (strictRequired)
    strict mode: required property "tokenURL" is not defined at "#/anyOf/1/properties/advanced" (strictRequired)
    2024-09-21T19:26:10.172Z xo:plugin INFO successfully register auth-github
    2024-09-21T19:26:10.172Z xo:plugin INFO successfully register auth-google
    2024-09-21T19:26:10.172Z xo:plugin INFO successfully register auth-ldap
    2024-09-21T19:26:10.172Z xo:plugin INFO successfully register auth-oidc
    2024-09-21T19:26:10.172Z xo:plugin INFO successfully register auth-saml
    2024-09-21T19:26:10.173Z xo:plugin INFO successfully register netbox
    2024-09-21T19:26:10.173Z xo:plugin INFO successfully register test-plugin
    2024-09-21T19:26:10.173Z xo:plugin INFO successfully register transport-icinga2
    2024-09-21T19:26:10.173Z xo:plugin INFO successfully register transport-nagios
    2024-09-21T19:26:10.173Z xo:plugin INFO successfully register transport-slack
    2024-09-21T19:26:10.173Z xo:plugin INFO successfully register transport-xmpp
    2024-09-21T19:26:10.173Z xo:plugin INFO successfully register web-hooks
    2024-09-21T19:26:10.173Z xo:plugin INFO successfully register usage-report
    2024-09-21T19:26:10.173Z xo:plugin INFO successfully register load-balancer
    2024-09-21T19:26:10.173Z xo:plugin INFO successfully register backup-reports
    2024-09-21T19:26:10.173Z xo:plugin INFO successfully register transport-email
    2024-09-21T19:26:10.180Z xo:plugin INFO successfully register audit
    2024-09-21T19:26:10.180Z xo:plugin INFO successfully register perf-alert
    2024-09-21T19:26:10.224Z xo:plugin INFO successfully register sdn-controller

Well, that didn't go as planned.

@olivierlambert said in Lots of performance alerts after upgrading XO to commit aa490:

Hi,

- What's the result with XOA latest?

- Have you changed Node version between the updates?

- It would be truly helpful if you can manage to find the exact culprit (commit).

@julien-f wrote a guide to use Git bisect: https://xcp-ng.org/forum/post/58981

Since you know a good commit (here

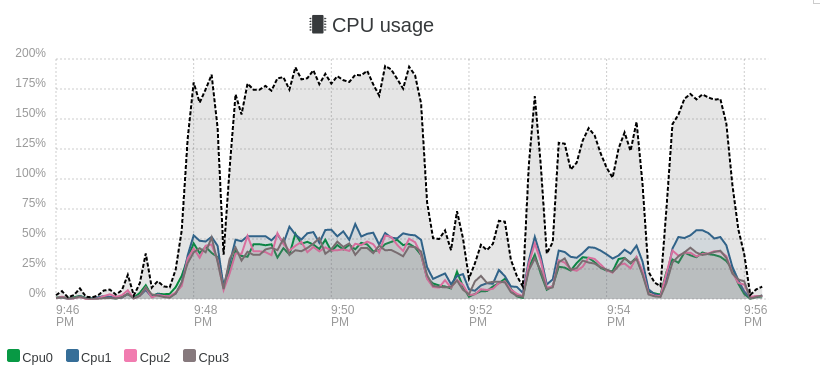

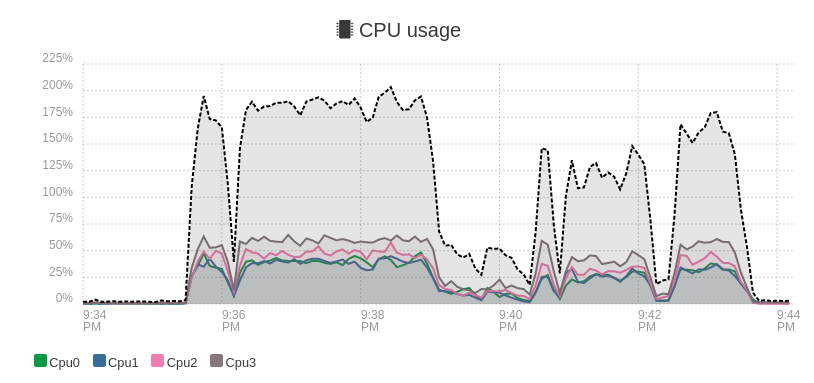

f9220) you should be able to quickly find the problematic code change- the f9220 build used node v18.20.4

The latest build 21bd7 use v20.17.0

Could it be that the newer node is faster than the old one?

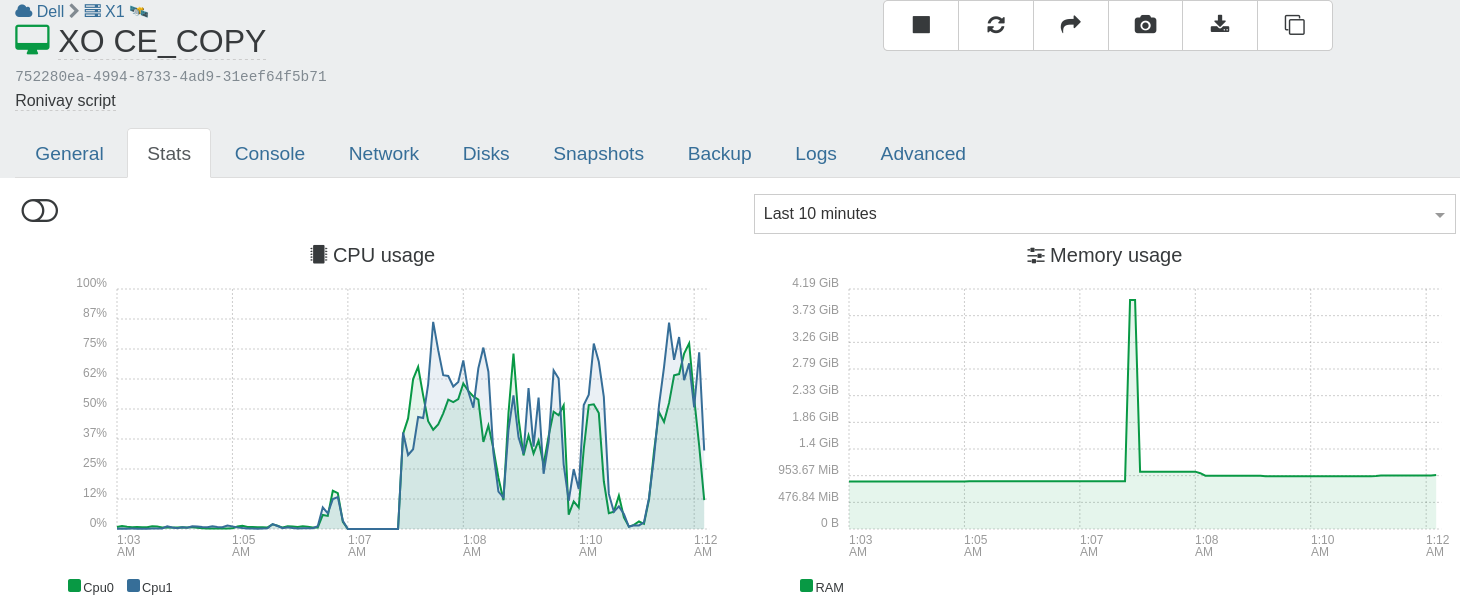

f9220:

21bd7:

On 21bd7, if I increase the frequency threshold to 65%, the backup runs without an alert.

Yes, 21bd7 is a little bit faster, but not so much that you should have to increase the threshold from 40% to 65%.

That's an increase of 62.5%.

Ran out of tests for today, but it was a nice way to spend a Saturday evening ;o)
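The 62.5% figure above is the relative change between the two threshold settings, not the 25-point difference between them. A short sketch of the arithmetic follows; the function name `relative_increase` is just illustrative.

```python
def relative_increase(old: float, new: float) -> float:
    """Return the change from old to new as a percentage of old."""
    return (new - old) / old * 100


# Threshold values taken from the post: 40% on f9220, 65% on 21bd7.
print(relative_increase(40, 65))  # 62.5
```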


-

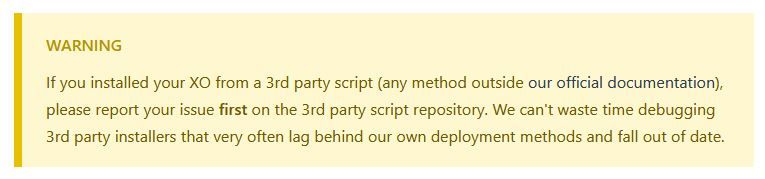

Sigh... So that's the issue when one uses 3rd party installers/repos. It's a lot harder to find the root cause, that's why we have put this big yellow warning in our doc:

https://xen-orchestra.com/docs/community.html

This consistently makes everyone lose precious time just understanding the problem in the first place. Nobody (except the 3rd party script provider) could guess that this script changed the Node version between "releases". You wouldn't have this result by just building from our doc.

So yes, that's very likely the reason here (Node 18 vs Node 20). Node 20 introduced different things that could trigger this behavior (adding @julien-f in the loop)

You could have saved an entire evening by using our official doc to install XO instead of a 3rd party script by the way. I don't blame you, I blame the fact that those 3rd party providers should be clearly contacted first before using community's time (and/or XO dev time, and/or mine). And it's not obvious enough since I'm repeating this around 5 times a month.

-

@olivierlambert said in Lots of performance alerts after upgrading XO to commit aa490:

You could have saved an entire evening by using our official doc to install XO instead of a 3rd party script by the way. I don't blame you, I blame the fact that those 3rd party providers should be clearly contacted first before using community's time (and/or XO dev time, and/or mine). And it's not obvious enough since I'm repeating this around 5 times a month.

I'm retired, so for me it's Saturday all week.

Sorry I have wasted your time.

I knew very well about the 3rd party debacle, but didn't think about it now.

Now I'm setting up a new VM, building it properly from the docs.

-

Don't worry, it's not your fault, it's just the umpteenth time it has happened.

Still, it's interesting to have your feedback about the perf change with Node 20 (we read something about it recently).

-

@olivierlambert

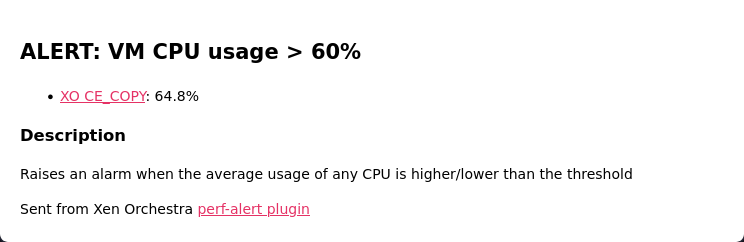

One strange note when I get the alarms by e-mail during a full backup of my running XO:

Alert: vm cpu usage > 65%, XO 67,8%

End of alert: vm cpu usage > 65% SyncMate 0,3%

The end of alert names the wrong VM; it should be XO.

In Dashboard/Health it's OK, it says XO in both alert and end of alert ??

Not a big deal for me, but I thought I might as well report it.

-

Might be a bug, worth pinging @MathieuRA

-


@ph7 I'm seeing the same thing as you: a mismatch between the server named in the alert and the server named in the end of alert. Just like you, it is actually the XO server that should be alerting. The second server (and it's always the same second server) is NOT having any issues with CPU or memory usage but is being dragged into the alerts for some strange reason.

I'm currently on commit 2e8d3, running XO from sources. Yes, I know I'm 5 commits behind right now, and I will update as soon as I finish this message. However, this issue has been going on for me for some time now, and when I saw others with the same issue, I figured I'd add to the chain.

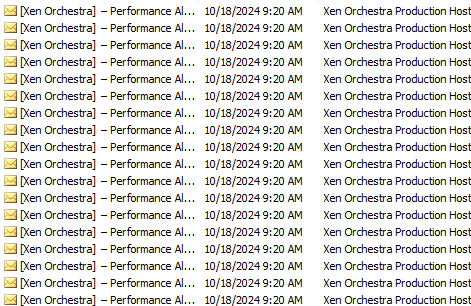

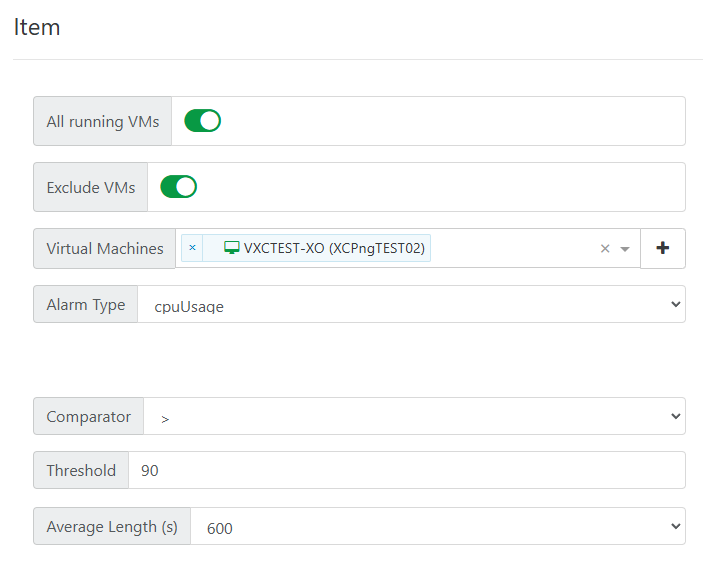

One other thing that happed around the same time this issue started... it seems the Average Length value for alerts are being ignored, or are at least being handled differently than they had previously. For example, I have my CPU alert set to trip if it exceeds 90% for over 600 seconds. Before the issue started, if I had a long running backup, my CPU would go over 90% and could sometimes stay there for an hour or more. During that period, I would get a single alert after the CPU was over 90% for that period of time and then Xen was "smart" enough that it would keep an eye on the average, so a brief couple second dip below 90% would NOT send out an "end of alert" and then a second "alert" message when the CPU went over 90% once again. This is not happening anymore... if the CPU spikes over my 90% threshold, I get an almost immediate alert message. The instant the CPU goes below the 90% threshold, I get an immediate end of alert message. If threshold goes back over 90%, even a few seconds later, I get yet another alert message.

This has had the effect where instead of getting a single message that spans the duration of the time the threshold is exceeded, where brief dips below were ignored if they were only a few seconds long, I am now getting an alert/end of alert/alert sequence for every seconds-long dip in CPU usage. Last night, for example, I received over 360 alert e-mails because of this, with many happening within seconds:

So... just confirming what @ph7 has been seeing: the alert is sent for one server and the end of alert names a second one. In addition, XO's ability to average usage over the selected period, so that messages aren't sent for every seconds-long dip below the threshold, is no longer working.

Thanks!
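To make the expected "Average Length" behaviour concrete, here is a minimal sketch of an alarm that compares the mean of recent samples to the threshold, so a short dip does not end the alert. This is not the perf-alert plugin's code. The class `AveragedAlarm`, its `feed` method, and the use of a sample count rather than a 600-second window are all assumptions made for illustration.

```python
from collections import deque
from typing import Optional


class AveragedAlarm:
    """Hypothetical alarm that triggers on the mean of the last `window`
    samples instead of on each individual sample."""

    def __init__(self, threshold: float, window: int) -> None:
        self.threshold = threshold
        self.samples = deque(maxlen=window)  # oldest sample drops out automatically
        self.active = False

    def feed(self, value: float) -> Optional[str]:
        """Add one sample; return "alert", "end of alert", or None if nothing changed."""
        self.samples.append(value)
        if len(self.samples) < self.samples.maxlen:
            return None  # not enough history yet to compute the average
        mean = sum(self.samples) / len(self.samples)
        if mean > self.threshold and not self.active:
            self.active = True
            return "alert"
        if mean <= self.threshold and self.active:
            self.active = False
            return "end of alert"
        return None


# Hypothetical CPU samples: sustained load with one brief dip to 85%.
alarm = AveragedAlarm(threshold=90, window=5)
events = [alarm.feed(v) for v in [95, 95, 95, 95, 95, 85, 95, 95]]
print(events)
```

With these hypothetical samples the dip to 85% keeps the window mean at 93%, so only one alert is produced. Comparing each sample directly to the threshold would instead yield the alert/end of alert/alert sequence described above.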

-

Also ping @Bastien-Nollet & @MathieuRA since it seems also visible here

-

Hi. I am finally able to reproduce the end of alert issue.

However, I was only able to reproduce it when I used the "ALL *" options, not when manually selecting the objects to monitor. Can you confirm that you are using All running VMs/Hosts/SRs?

-

@MathieuRA Yes, I can confirm I am using the All Running VMs and All Running Hosts (I am not using All Running SRs, but I never get alerts for those because I have a LOT of free disk space).

I did place an exclusion for one of my VMs (the one that was generating dozens and dozens of alerts) to cut down on some of that chatter, but even with one machine excluded, when I do get a report from one of the other VMs it still has the same issue: the proper VM will generate the alert, but an improper VM will be reported in the end of alert message.

So... as far as I can tell, we still have the issue with the improper machine identification and the Average Length field is ignored so a machine that pops over the threshold, then briefly under the threshold for a few seconds, then back over the threshold again will generate three messages (alert, end of alert, alert) in several seconds instead of looking at the average to make sure the dip isn't just a brief one.

Hopefully that makes sense.

Thanks again!

-

@JamfoFL As the bug appears to be non-trivial, I’ll create the issue on our end, and then we’ll see with the team to schedule this task. We’ll keep you updated here.

-

@MathieuRA

Yes, All running hosts and All running VMs.


-

@MathieuRA Thanks so much! I appreciate all the effort!

-

@ph7 said in Lots of performance alerts after upgrading XO to commit aa490:

@olivierlambert

One strange note when I get the alarms by e-mail during a full backup of my running XO:

Alert: vm cpu usage > 65%, XO 67,8%

End of alert: vm cpu usage > 65% SyncMate 0,3%

The end of alert names the wrong VM; it should be XO.

In Dashboard/Health it's OK, it says XO in both alert and end of alert ??

Not a big deal for me, but I thought I might as well report it.

I added a new VM and put it in a backup job (which had a concurrency of 2) and got these new alerts:

ALERT: VM CPU usage > 80% XO5: 80.4%

End of alert: vm cpu usage > 80% HomeAssistant: 6,7%

It seems like the reporting has changed and is reporting the latest VM.

The host alert is reporting in the same way:

ALERT: host memory usage > 90% X2 🚀: 92.7% used

END OF ALERT: host memory usage > 90% X1: 11.3%

I have changed all jobs to concurrency 1 and I have not got any SR alert.
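For comparison, the pairing one would expect is that the end of alert names the same object that raised the alert. The sketch below tracks alarm state per object name to show that expected behaviour. It says nothing about how XO implements this or where the bug is. The function `alarm_transitions`, the message format, and the follow-up reading of 12% for XO5 are hypothetical.

```python
from typing import Dict, List


def alarm_transitions(active: Dict[str, bool], usage: Dict[str, float],
                      threshold: float) -> List[str]:
    """Hypothetical helper: compare each object's usage to the threshold and
    emit messages that name the object whose own state changed."""
    messages = []
    for name, value in usage.items():
        was_active = active.get(name, False)
        is_active = value > threshold
        if is_active and not was_active:
            messages.append(f"ALERT: vm cpu usage > {threshold:g}% {name}: {value:g}%")
        elif was_active and not is_active:
            messages.append(f"END OF ALERT: vm cpu usage > {threshold:g}% {name}: {value:g}%")
        active[name] = is_active  # state is stored per object, never shared
    return messages


state: Dict[str, bool] = {}
# First poll uses the values from the post; the second poll's XO5 value is made up.
first = alarm_transitions(state, {"XO5": 80.4, "HomeAssistant": 6.7}, 80)
second = alarm_transitions(state, {"XO5": 12.0, "HomeAssistant": 6.7}, 80)
print(first + second)
```

Because the state is keyed by object name, HomeAssistant never appears in either message: it was never above the threshold, so it has no alert to end.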

-

-

@Bastien-Nollet

Hi

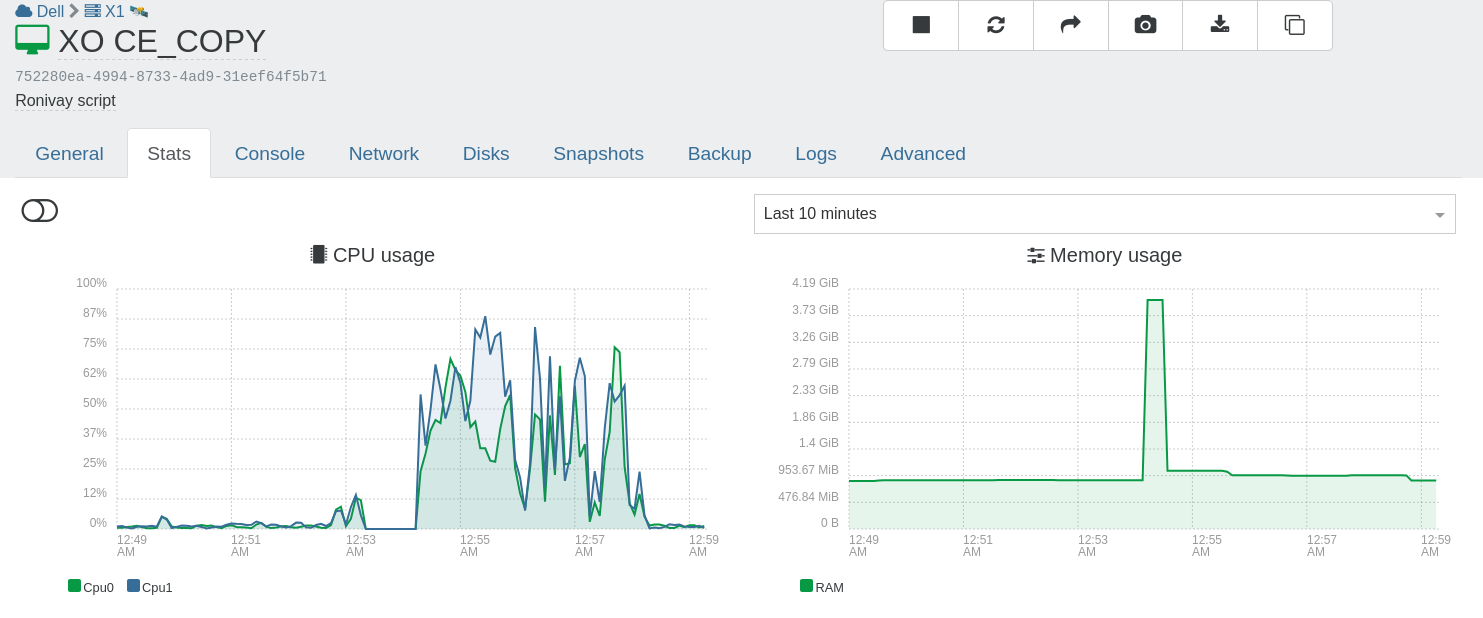

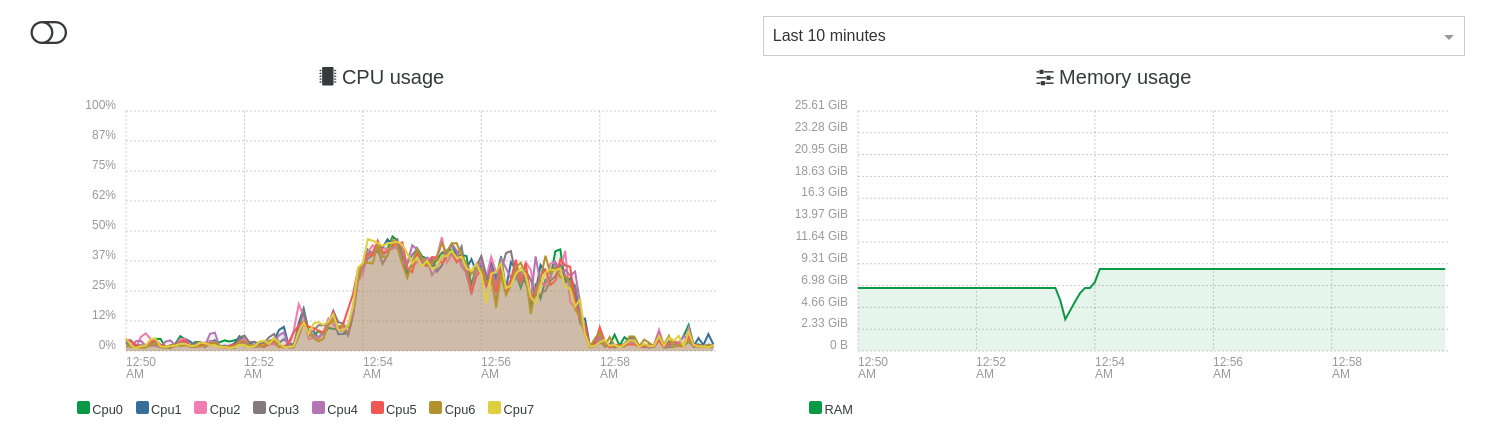

I updated my test server and a copy of a Ronivay XO.

1st run

Host:

I only got 1+1 alert on e-mail, that seems OK.

2nd run

This time NO alert.

Maybe you should check some more.

I will test again tomorrow. Good night...

-

@ph7

And it did report the correct VM at the end of alert