XO Community edition backups dont work as of build 6b263

-

@wf said in XO Community edition backups dont work as of build 6b263:

How do I roll back to a previous commit?

git checkout <target commit>

Then rebuild with

yarn; yarn build

-

Hi,

Having the same issue. It took me a while to figure out that it was a bug, as the last backup got interrupted during a power failure and I thought the issue was to do with that! I reverted to a snapshot from before the XOA update and it seems to be working now.

-


@archw said in XO Community edition backups dont work as of build 6b263:

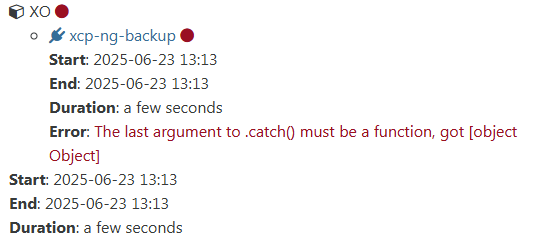

The last argument to .catch() must be a function, got [object Object]

Thanks for the reports.

We are testing the fix ( https://github.com/vatesfr/xen-orchestra/pull/8739 ). This may be bigger than a simple typo: this code was last changed 2 years ago.

We reworked the timeout/retry mechanism of the unlink method, as it doesn't perform as well as expected on some object storage backends, hoping it would give us new clues on how to improve the situation.

And your reports (24h after the merge) pointed us to a possible solution: we were deleting up to 10^depth files in parallel instead of 2^depth.

-
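To give a feel for why the 10^depth versus 2^depth difference matters, here is a minimal arithmetic sketch. It assumes that "depth" means the directory recursion depth and that the base is the per-level fan-out; `maxParallelDeletes` is a hypothetical name for illustration, not a function from the Xen Orchestra code base.

```javascript
// Hypothetical illustration only: upper bound on deletions in flight
// when each directory level multiplies the parallelism by `fanOut`.
function maxParallelDeletes(fanOut, depth) {
  return fanOut ** depth
}

for (let depth = 1; depth <= 4; depth++) {
  const before = maxParallelDeletes(10, depth) // behaviour described as the bug
  const after = maxParallelDeletes(2, depth) // intended limit
  console.log(`depth ${depth}: up to ${before} parallel deletes instead of ${after}`)
}
```

Even at a modest depth the gap is large, which would be consistent with timeouts on remotes that handle many simultaneous unlink calls poorly.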

@florent commit 035f5 still doesn't seem to work. The metadata backup used to take a few seconds, but now it sits at "starting" for 2 minutes without doing anything.

-

@uwood a metadata backup shouldn't trigger any timeout

I am retesting it.

-

@florent metadata backups have the same problems as normal backups. With commit 85868 the problem still exists.

-


With commit 040ae, the metadata backup now fails instantly.

-

@uwood the fix for the "catch" error is still in review and will probably be merged in a few hours.

The second fix, for the stuck stream (so pool metadata, full backup and disaster recovery), is merged. This one was indeed introduced in Friday's PR.
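For readers wondering what kind of mistake produces the "catch" error quoted earlier in the thread, here is a minimal sketch. It assumes a filtered-catch helper that takes optional predicates followed by a handler; `catchIf` is a hypothetical stand-in written for this example and is not the actual Xen Orchestra or library code.

```javascript
// Hypothetical helper, NOT the real implementation: a filtered catch
// that accepts optional predicates, with the handler as last argument.
function catchIf(promise, ...args) {
  const handler = args.pop()
  if (typeof handler !== 'function') {
    throw new TypeError(`The last argument to .catch() must be a function, got ${handler}`)
  }
  const predicates = args
  return promise.catch(error => {
    // No predicate means "catch everything"; otherwise one must match.
    if (predicates.length === 0 || predicates.some(matches => matches(error))) {
      return handler(error)
    }
    throw error
  })
}

// Passing an options object where the handler belongs triggers the check.
try {
  catchIf(Promise.resolve(), { code: 'ENOENT' })
} catch (error) {
  console.log(error.message)
}
```

The point of the sketch is only that an object ending up in the handler position is rejected up front, whatever the real call site looks like.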

-

The second fix is merged.

Can you retest master, latest commit?

-

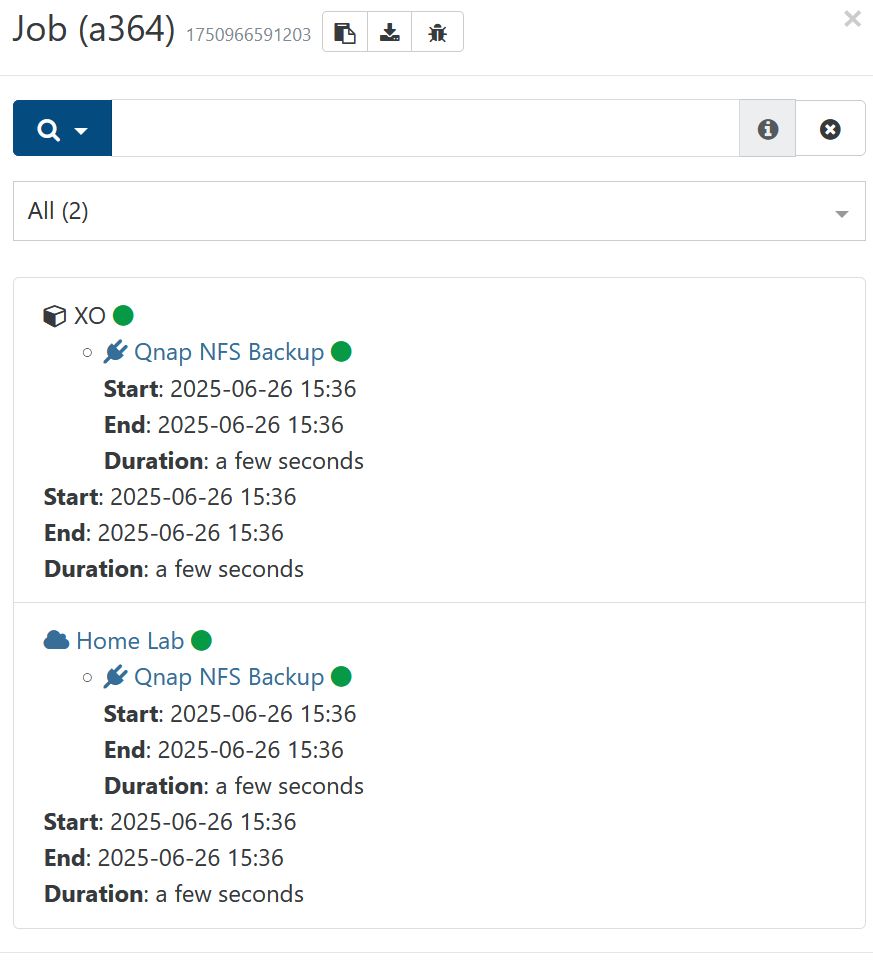

@florent Just updated, and the pool metadata and XO config backups seem to be working. I'm running the main backup right now and it seems to be running fine.

-

@florent commit 1a7b5 seems to work now. The metadata backup ran successfully, and the other backup ran OK too.

-

I've updated to the latest master as well (1a7b5) and my metadata/config backups completed successfully, as did my smaller test backup. However, only 16 of 19 VMs in my main backup job completed. I am re-running the main backup now and will report back if the error recurs.

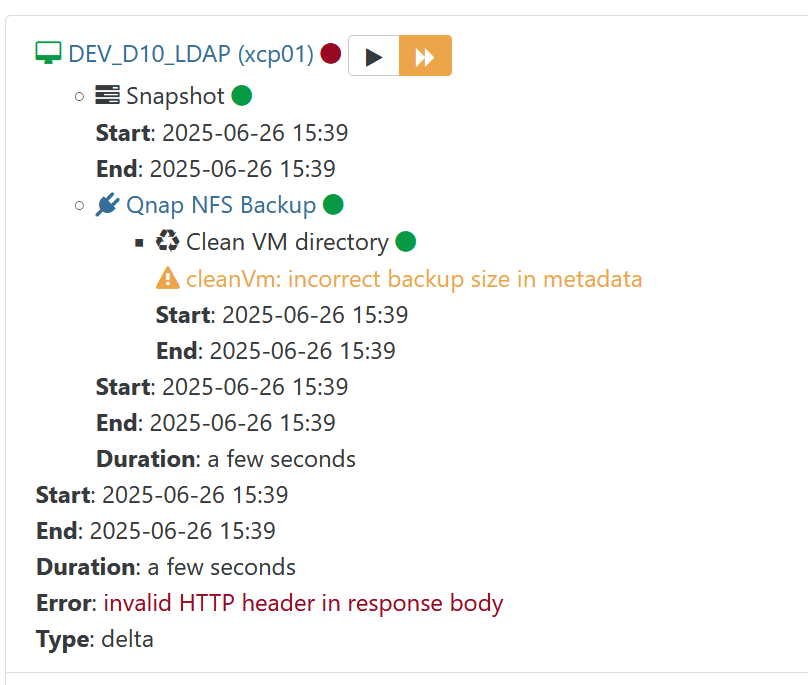

Update: The job is still running, but here is the error from the three VMs that failed previously: "Error: invalid HTTP header in response body"

-

@katapaltes I did have that HTTP error before, but everything is running successfully now. I'm getting "cleanVm: incorrect backup size in metadata" on all the VMs, but it was happening before, so I'm not sure whether it's related to my power failure.

-

Yes, you will always see those "cleanVm: incorrect backup size in metadata" warnings, and Vates has said that it's OK to ignore them. You won't see them if you "Store backup as multiple data blocks instead of a whole VHD file," but your storage will need to support the required 500-1000 files per backed up GB (and of course you'll need to evaluate whether you wish to use multiple data blocks instead of VHD files in the first place).

I re-ran my backups and the three VMs that failed backup previously have now succeeded.
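As a rough way to judge whether a remote can cope with the multiple-data-blocks mode, the 500-1000 files per GB figure above can be turned into a quick estimate. This is only a sketch: `estimateBlockFiles` is a hypothetical helper, and the 200 GB figure is an arbitrary example, not a number from this thread.

```javascript
// Rough estimate only, using the 500-1000 files per backed-up GB range
// quoted in the post above. Not an official sizing formula.
function estimateBlockFiles(backupSizeGB) {
  return { min: backupSizeGB * 500, max: backupSizeGB * 1000 }
}

const { min, max } = estimateBlockFiles(200) // example size, chosen arbitrarily
console.log(`200 GB backed up: roughly ${min} to ${max} files`)
```

Comparing that range with the file-count or inode limits of the backup storage is one way to decide before switching modes.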

-

Posted in the other thread as well, but with Xen Orchestra commit 1a7b5 backups are working again. I tested config and VM backups. They are being backed up to an NFS share.

-

@katapaltes said in XO Community edition backups dont work as of build 6b263:

Error: invalid HTTP header in response body

"Error: invalid HTTP header in response body" should come with a trace in the logs (journalctl, under the same account that runs xo).

Could you extract it?

-

Hello @florent , the results below between 8:04:10 and 8:04:36 seem to align with one of the three failed VM backups. NB: The error did not return when I did another Delta backup (everything backed up successfully). I am now doing a Force Full Backup on all VMs just to be safe. As of now I am still running 1a7b5 and have not yet updated to 7994f.

Jun 23 08:01:14 sxoap31 xo-server[19663]: }
Jun 23 08:04:10 sxoap31 xo-server[19663]: 2025-06-23T13:04:10.703Z xo:backups:MixinBackupWriter WARN cleanVm: incorrect backup size in metadata {
Jun 23 08:04:10 sxoap31 xo-server[19663]: path: '/xo-vm-backups/bca06bdf-3e1f-40a4-570e-7b584b356e22/20250623T130122Z.json',
Jun 23 08:04:10 sxoap31 xo-server[19663]: actual: 12283019264,
Jun 23 08:04:10 sxoap31 xo-server[19663]: expected: 12288363520
Jun 23 08:04:10 sxoap31 xo-server[19663]: }
Jun 23 08:04:31 sxoap31 xo-server[19663]: 2025-06-23T13:04:31.411Z @xen-orchestra/xapi/disks/Xapi WARN openNbdCBT XapiError: SR_BACKEND_FAILURE_460(, Failed to calculate changed blocks for given VDIs. [opterr=Source and target V>
Jun 23 08:04:31 sxoap31 xo-server[19663]: at XapiError.wrap (file:///opt/xo/xo-builds/xen-orchestra-202506230729/packages/xen-api/_XapiError.mjs:16:12)
Jun 23 08:04:31 sxoap31 xo-server[19663]: at default (file:///opt/xo/xo-builds/xen-orchestra-202506230729/packages/xen-api/_getTaskResult.mjs:13:29)
Jun 23 08:04:31 sxoap31 xo-server[19663]: at Xapi._addRecordToCache (file:///opt/xo/xo-builds/xen-orchestra-202506230729/packages/xen-api/index.mjs:1073:24)
Jun 23 08:04:31 sxoap31 xo-server[19663]: at file:///opt/xo/xo-builds/xen-orchestra-202506230729/packages/xen-api/index.mjs:1107:14
Jun 23 08:04:31 sxoap31 xo-server[19663]: at Array.forEach (<anonymous>)
Jun 23 08:04:31 sxoap31 xo-server[19663]: at Xapi._processEvents (file:///opt/xo/xo-builds/xen-orchestra-202506230729/packages/xen-api/index.mjs:1097:12)
Jun 23 08:04:31 sxoap31 xo-server[19663]: at Xapi._watchEvents (file:///opt/xo/xo-builds/xen-orchestra-202506230729/packages/xen-api/index.mjs:1270:14)
Jun 23 08:04:31 sxoap31 xo-server[19663]: at process.processTicksAndRejections (node:internal/process/task_queues:95:5) {
Jun 23 08:04:31 sxoap31 xo-server[19663]: code: 'SR_BACKEND_FAILURE_460',
Jun 23 08:04:31 sxoap31 xo-server[19663]: params: [
Jun 23 08:04:31 sxoap31 xo-server[19663]: '',
Jun 23 08:04:31 sxoap31 xo-server[19663]: 'Failed to calculate changed blocks for given VDIs. [opterr=Source and target VDI are unrelated]',
Jun 23 08:04:31 sxoap31 xo-server[19663]: ''
Jun 23 08:04:31 sxoap31 xo-server[19663]: ],
Jun 23 08:04:31 sxoap31 xo-server[19663]: call: undefined,
Jun 23 08:04:31 sxoap31 xo-server[19663]: url: undefined,
Jun 23 08:04:31 sxoap31 xo-server[19663]: task: task {
Jun 23 08:04:31 sxoap31 xo-server[19663]: uuid: 'aeac5fce-c3c8-d3d5-eff0-f1aa3b7dc4dc',
Jun 23 08:04:31 sxoap31 xo-server[19663]: name_label: 'Async.VDI.list_changed_blocks',
Jun 23 08:04:31 sxoap31 xo-server[19663]: name_description: '',
Jun 23 08:04:31 sxoap31 xo-server[19663]: allowed_operations: [],
Jun 23 08:04:31 sxoap31 xo-server[19663]: current_operations: {},
Jun 23 08:04:31 sxoap31 xo-server[19663]: created: '20250623T13:04:30Z',
Jun 23 08:04:31 sxoap31 xo-server[19663]: finished: '20250623T13:04:30Z',
Jun 23 08:04:31 sxoap31 xo-server[19663]: status: 'failure',
Jun 23 08:04:31 sxoap31 xo-server[19663]: resident_on: 'OpaqueRef:e9735ec6-f9a5-da8a-0891-262ae26db402',
Jun 23 08:04:31 sxoap31 xo-server[19663]: progress: 1,
Jun 23 08:04:31 sxoap31 xo-server[19663]: type: '<none/>',
Jun 23 08:04:31 sxoap31 xo-server[19663]: result: '',
Jun 23 08:04:31 sxoap31 xo-server[19663]: error_info: [
Jun 23 08:04:31 sxoap31 xo-server[19663]: 'SR_BACKEND_FAILURE_460',
Jun 23 08:04:31 sxoap31 xo-server[19663]: '',
Jun 23 08:04:31 sxoap31 xo-server[19663]: 'Failed to calculate changed blocks for given VDIs. [opterr=Source and target VDI are unrelated]',
Jun 23 08:04:31 sxoap31 xo-server[19663]: ''
Jun 23 08:04:31 sxoap31 xo-server[19663]: ],
Jun 23 08:04:31 sxoap31 xo-server[19663]: other_config: {},
Jun 23 08:04:31 sxoap31 xo-server[19663]: subtask_of: 'OpaqueRef:NULL',
Jun 23 08:04:31 sxoap31 xo-server[19663]: subtasks: [],
Jun 23 08:04:31 sxoap31 xo-server[19663]: backtrace: '(((process xapi)(filename lib/backtrace.ml)(line 210))((process xapi)(filename ocaml/xapi/storage_utils.ml)(line 141))((process xapi)(filename ocaml/xapi/message_forwardi>
Jun 23 08:04:31 sxoap31 xo-server[19663]: }
Jun 23 08:04:31 sxoap31 xo-server[19663]: }
Jun 23 08:04:36 sxoap31 xo-server[19663]: 2025-06-23T13:04:36.914Z xo:xapi:vdi WARN invalid HTTP header in response body {
Jun 23 08:04:36 sxoap31 xo-server[19663]: body: 'HTTP/1.1 500 Internal Error\r\n' +
Jun 23 08:04:36 sxoap31 xo-server[19663]: 'content-length: 318\r\n' +
Jun 23 08:04:36 sxoap31 xo-server[19663]: 'content-type: text/html\r\n' +
Jun 23 08:04:36 sxoap31 xo-server[19663]: 'connection: close\r\n' +
Jun 23 08:04:36 sxoap31 xo-server[19663]: 'cache-control: no-cache, no-store\r\n' +
Jun 23 08:04:36 sxoap31 xo-server[19663]: '\r\n' +
Jun 23 08:04:36 sxoap31 xo-server[19663]: '<html><body><h1>HTTP 500 internal server error</h1>An unexpected error occurred; please wait a while and try again. If the problem persists, please contact your support representati>
Jun 23 08:04:36 sxoap31 xo-server[19663]: }
Jun 23 08:05:14 sxoap31 systemd-timesyncd[412]: Timed out waiting for reply from 23.155.40.38:123 (0.debian.pool.ntp.org).

-

All is well for me ...thanks for the hard work!

-

Seems like backups are broken again for me. I tried the latest build.

Xen Orchestra, commit d8370

I'm seeing two kinds of errors:

Error 1: The job starts and does nothing. I do see it creating a snapshot, as I deleted the existing snapshot as part of testing.

Error 2: The job runs and fails with an HTTP error.

Testing so far:

XO config backup still works fine.