SR Garbage Collection running permanently

-

The GC processes one chain after another. We told the XenServer team back in 2016 that it could probably merge multiple chains at once, but they told us it was too risky, so we did not focus on that. Patience is key here. Clearly, we'll do better in the future.

-

@olivierlambert Got it. Will see what happens in a few days.

-

It will accelerate. The first merges are the slowest ones, but then it gets faster and faster.

-

2 days and a few backup cycles later, the number of snapshots isn't decreasing.

-

@Tristis-Oris Check SMlog for further exceptions.

-

@Tristis-Oris Note that if the SR storage device is around 90% or more full, a coalesce may not work. You then have to either delete or move enough data so that there is adequate free space.
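To put a number on "around 90% full", one option is to compare the SR's `physical-utilisation` with its `physical-size`, both of which `xe sr-param-get` reports in bytes. The sketch below is only an illustration: the helper names and the `FULL_THRESHOLD` constant are assumptions derived from the rule of thumb above, not values read from the storage manager.

```python
import subprocess

# Rule-of-thumb threshold from the post above; an assumption, not an SM constant.
FULL_THRESHOLD = 0.90


def sr_fill_ratio(physical_utilisation: int, physical_size: int) -> float:
    """Return the used fraction of an SR (0.0 to 1.0)."""
    if physical_size <= 0:
        raise ValueError("physical_size must be positive")
    return physical_utilisation / physical_size


def coalesce_at_risk(physical_utilisation: int, physical_size: int) -> bool:
    """True when the SR is at or above the rule-of-thumb threshold."""
    return sr_fill_ratio(physical_utilisation, physical_size) >= FULL_THRESHOLD


def read_sr_param(sr_uuid: str, name: str) -> int:
    """Read one numeric SR parameter through the xe CLI (run on a pool host)."""
    out = subprocess.check_output(
        ["xe", "sr-param-get", f"uuid={sr_uuid}", f"param-name={name}"],
        text=True,
    )
    return int(out.strip())
```

On a host, `coalesce_at_risk(read_sr_param(uuid, "physical-utilisation"), read_sr_param(uuid, "physical-size"))` would give a quick yes/no answer for one SR.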

How full is the SR? That said, a coalesce process can take up to 24 hours to complete. I wonder whether it shows up, and with what progress, when you run "xe task-list".

-

@Danp No, can't find any exceptions.

This is the typical log for now; it repeats many times:

Jan 20 00:02:00 host SM: [2736362] Kicking GC
Jan 20 00:02:00 host SM: [2736362] Kicking SMGC@93d53646-e895-52cf-7c8e-df1d5e84f5e4...
Jan 20 00:02:00 host SM: [2736362] utilisation 40394752 <> 34451456
*
Jan 20 00:02:00 host SM: [2736362] VDIs changed on disk: ['34fa9f2d-95fa-468e-986c-ade22b92b1f3', '56b94e20-01ae-4da1-99f8-03aa901da64f', 'a75c6e7b-7f8d-4a4b-99$
Jan 20 00:02:00 host SM: [2736362] Updating VDI with location=34fa9f2d-95fa-468e-986c-ade22b92b1f3 uuid=34fa9f2d-95fa-468e-986c-ade22b92b1f3
*
Jan 20 00:02:00 host SM: [2736362] lock: released /var/lock/sm/93d53646-e895-52cf-7c8e-df1d5e84f5e4/sr
Jan 20 00:02:00 host SMGC: [2736466] === SR 93d53646-e895-52cf-7c8e-df1d5e84f5e4: gc ===
Jan 20 00:02:00 host SM: [2736466] lock: opening lock file /var/lock/sm/93d53646-e895-52cf-7c8e-df1d5e84f5e4/running
*
Jan 20 00:02:00 host SMGC: [2736466] Found 0 cache files
Jan 20 00:02:00 host SM: [2736466] lock: tried lock /var/lock/sm/93d53646-e895-52cf-7c8e-df1d5e84f5e4/sr, acquired: True (exists: True)
Jan 20 00:02:00 host SM: [2736466] ['/usr/bin/vhd-util', 'scan', '-f', '-m', '/var/run/sr-mount/93d53646-e895-52cf-7c8e-df1d5e84f5e4/*.vhd']
Jan 20 00:02:00 host SM: [2736614] lock: opening lock file /var/lock/sm/93d53646-e895-52cf-7c8e-df1d5e84f5e4/sr
Jan 20 00:02:00 host SM: [2736614] sr_update {'host_ref': 'OpaqueRef:3570b538-189d-6a16-fe61-f6d73cc545dc', 'command': 'sr_update', 'args': [], 'device_config':$
Jan 20 00:02:00 host SM: [2736614] lock: closed /var/lock/sm/93d53646-e895-52cf-7c8e-df1d5e84f5e4/sr
Jan 20 00:02:01 host SM: [2736466] pread SUCCESS
*
Jan 20 00:02:01 host SMGC: [2736466] SR 93d5 ('VM Sol flash') (73 VDIs in 39 VHD trees):
Jan 20 00:02:01 host SMGC: [2736466] *70119dcb(50.000G/45.497G?)
Jan 20 00:02:01 host SMGC: [2736466] e6a3e53a(50.000G/107.500K?)
*
Jan 20 00:02:01 host SM: [2736466] lock: released /var/lock/sm/93d53646-e895-52cf-7c8e-df1d5e84f5e4/sr
Jan 20 00:02:01 host SMGC: [2736466] Got sm-config for *70119dcb(50.000G/45.497G?): {'vhd-blocks': 'eJzFlrFuwjAQhk/Kg5R36Ngq5EGQyJSsHTtUPj8WA4M3GDrwBvEEDB2yEaQQ$
*
Jan 20 00:02:01 host SMGC: [2736466] No work, exiting
Jan 20 00:02:01 host SMGC: [2736466] GC process exiting, no work left
Jan 20 00:02:01 host SM: [2736466] lock: released /var/lock/sm/93d53646-e895-52cf-7c8e-df1d5e84f5e4/gc_active
Jan 20 00:02:01 host SMGC: [2736466] In cleanup
Jan 20 00:02:01 host SMGC: [2736466] SR 93d5 ('VM Sol flash') (73 VDIs in 39 VHD trees): no changes
Jan 20 00:02:01 host SM: [2736466] lock: closed /var/lock/sm/93d53646-e895-52cf-7c8e-df1d5e84f5e4/running

@tjkreidl There is enough free space. I have never seen a GC job run for long, neither on other pools nor after the fix. There is no coalesce in the queue.

xe task-list shows nothing.

Maybe I need to clean up the bad VDIs manually the first time?
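When the same GC cycle repeats many times in SMlog, it can help to reduce each run to the few facts that matter: how many VDIs and VHD trees the GC saw, and whether it decided there was nothing to do. The following is a minimal sketch that assumes the SMGC summary lines look like the ones quoted in this thread; the function name and the regular expression are illustrative, not part of the storage manager.

```python
import re

# Matches SMGC summary lines such as:
#   SMGC: [2736466] SR 93d5 ('VM Sol flash') (73 VDIs in 39 VHD trees): no changes
SUMMARY_RE = re.compile(
    r"SMGC: \[(?P<pid>\d+)\] SR (?P<sr>\w+) \('(?P<name>[^']*)'\) "
    r"\((?P<vdis>\d+) VDIs in (?P<trees>\d+) VHD trees\)"
)


def summarize_gc_run(lines):
    """Collect VDI/tree counts and whether the GC reported no work."""
    summary = {"vdis": None, "trees": None, "no_work": False}
    for line in lines:
        match = SUMMARY_RE.search(line)
        if match:
            summary["vdis"] = int(match.group("vdis"))
            summary["trees"] = int(match.group("trees"))
        if "No work, exiting" in line:
            summary["no_work"] = True
    return summary


sample = [
    "Jan 20 00:02:01 host SMGC: [2736466] SR 93d5 ('VM Sol flash') (73 VDIs in 39 VHD trees):",
    "Jan 20 00:02:01 host SMGC: [2736466] No work, exiting",
]
print(summarize_gc_run(sample))
```

For the log in this post the result is 73 VDIs in 39 trees with `no_work` set, meaning the GC itself reported that it had nothing to coalesce on that pass.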

-

@Tristis-Oris It wouldn't hurt to do a manual cleanup. Not sure if a reboot might help, but strange that no task is showing as active. Do you have other SRs on which you can try a scan/coalesce?

Are there any VMs in a weird power state?

-

@tjkreidl nothing unusual.

I found the same issue on another 8.3 pool, on another SR, but I have never seen any related GC tasks there. No exceptions in the log.

But there are no problems with a third 8.3 pool.

An SR scan doesn't trigger the GC. Can I run it manually?

-

@Tristis-Oris By manually, do you mean from the CLI vs. from the GUI? If so, then:

xe sr-scan uuid=<sr_uuid>
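If the scan has to be repeated on several SRs, the CLI call can be wrapped in a few lines of Python. This is a sketch only: it assumes the `uuid=` parameter name that the xe CLI reference gives for `sr-scan`, and the function names are made up for illustration.

```python
import subprocess


def sr_scan_command(sr_uuid: str) -> list:
    """Build the argv for rescanning one SR."""
    return ["xe", "sr-scan", f"uuid={sr_uuid}"]


def task_list_command() -> list:
    """Build the argv for listing current tasks."""
    return ["xe", "task-list"]


def scan_and_list_tasks(sr_uuid: str) -> str:
    """Run the scan, then return whatever task-list prints (may be empty)."""
    subprocess.run(sr_scan_command(sr_uuid), check=True)
    result = subprocess.run(
        task_list_command(), check=True, capture_output=True, text=True
    )
    return result.stdout
```

`scan_and_list_tasks` only works on a pool host where `xe` is available; an empty string back means no task was listed at that moment.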

Check your logs (probably /var/log/SMlog) and run "xe task-list" to see what, if anything, is active.

-

@tjkreidl But is that the same scan as (screenshot not preserved)?

-

I guess I'm a little confused.

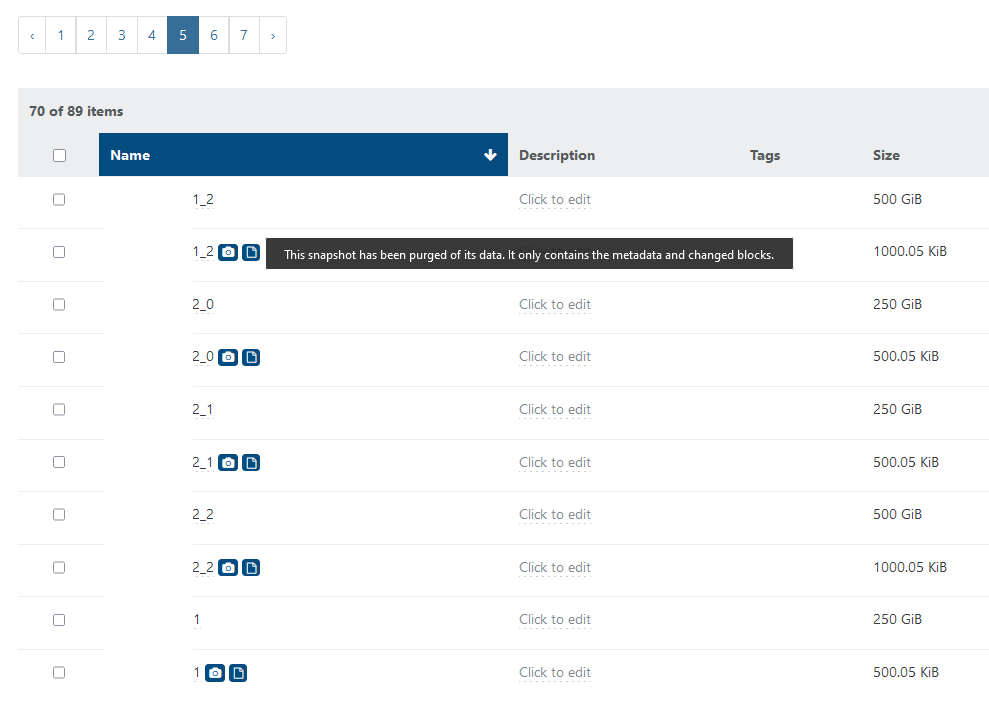

Probably, after the fix, all the bad snapshots were removed, and now they exist only for halted (archive) VMs. These were backed up only once, with a job like this:

So without backup tasks, the GC for these VDI chains does not run. Is it safe to remove them manually, or is it better to run the backup task again (which takes very long and is not otherwise required)?

-

@Tristis-Oris First verify that you have good backups before considering deleting snapshots. You could also just export the snapshots associated with the VMs.

As to the GUI vs. CLI, it should do the same thing, but if it runs, it should show up in the task list.

-

Hello, I've migrated 200 VMs from an XCP-ng 8.2 cluster to 8.3 on new iSCSI SRs, and I'm seeing quite a few VDIs to coalesce in my various SRs. As a result, I constantly have garbage collection tasks running in the background; they take several hours and restart each time because there are always VDIs to coalesce. This also has a negative impact on my Vinchin backup jobs, which take a very long time (over 15 minutes to take a snapshot, for example).

I've also set the SR autoscan to false to prevent this problem, but nothing helps.

I don't have this issue at all with my other 8.2 clusters. Is this normal?

-

Pinging @Team-Storage

-

@Razor_648 Do you have any exceptions in your /var/log/SMlog files?
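SMlog is verbose, so a small filter can make exceptions easier to spot. The sketch below simply looks for the two marker strings that appear in the SMlog excerpts posted in this thread ("EXCEPTION" and "Raising exception"); the marker list and function names are assumptions for illustration, and other failure messages may use different wording.

```python
EXCEPTION_MARKERS = ("EXCEPTION", "Raising exception")


def find_exceptions(lines):
    """Yield (line_number, text) for SMlog lines that mention an exception."""
    for number, line in enumerate(lines, start=1):
        if any(marker in line for marker in EXCEPTION_MARKERS):
            yield number, line.rstrip("\n")


def scan_smlog(path="/var/log/SMlog"):
    """Return all matching lines from one SMlog file."""
    with open(path, errors="replace") as handle:
        return list(find_exceptions(handle))


sample = [
    "Jul 9 09:45:04 na-mut-xen-03 SM: [3407716] pread SUCCESS",
    "Jul 9 09:45:04 na-mut-xen-03 SM: [3407716] Raising exception [460, Failed to calculate changed blocks for given VDIs. [opterr=Source and target VDI are unrelated]]",
]
for number, text in find_exceptions(sample):
    print(number, text)
```

Rotated logs would need the same treatment file by file; this sketch only reads the path it is given.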

-

@ronan-a For example, for ID 3407716 in the SMlog on the Dom0 master:

Jul 9 09:44:45 na-mut-xen-03 SM: [3407716] Setting LVM_DEVICE to /dev/disk/by-scsid/3624a93704071cc78f82b4df4000113ee Jul 9 09:44:45 na-mut-xen-03 SM: [3407716] Setting LVM_DEVICE to /dev/disk/by-scsid/3624a93704071cc78f82b4df4000113ee Jul 9 09:44:45 na-mut-xen-03 SM: [3407716] lock: opening lock file /var/lock/sm/5301ae76-31fd-9ff0-7d4c-65c8b1ed8f89/sr Jul 9 09:44:45 na-mut-xen-03 SM: [3407716] LVMCache created for VG_XenStorage-5301ae76-31fd-9ff0-7d4c-65c8b1ed8f89 Jul 9 09:44:45 na-mut-xen-03 fairlock[3949]: /run/fairlock/devicemapper sent '3407716 - 1893214.155789358' Jul 9 09:44:45 na-mut-xen-03 SM: [3407716] ['/sbin/vgs', '--readonly', 'VG_XenStorage-5301ae76-31fd-9ff0-7d4c-65c8b1ed8f89'] Jul 9 09:44:45 na-mut-xen-03 SM: [3407716] pread SUCCESS Jul 9 09:44:45 na-mut-xen-03 SM: [3407716] lock: acquired /var/lock/sm/5301ae76-31fd-9ff0-7d4c-65c8b1ed8f89/sr Jul 9 09:44:45 na-mut-xen-03 SM: [3407716] LVMCache: will initialize now Jul 9 09:44:45 na-mut-xen-03 SM: [3407716] LVMCache: refreshing Jul 9 09:44:46 na-mut-xen-03 fairlock[3949]: /run/fairlock/devicemapper sent '3407716 - 1893215.105961535' Jul 9 09:44:46 na-mut-xen-03 SM: [3407716] ['/sbin/lvs', '--noheadings', '--units', 'b', '-o', '+lv_tags', '/dev/VG_XenStorage-5301ae76-31fd-9ff0-7d4c-65c8b1ed8f89'] Jul 9 09:44:46 na-mut-xen-03 SM: [3407716] pread SUCCESS Jul 9 09:44:46 na-mut-xen-03 SM: [3407716] lock: released /var/lock/sm/5301ae76-31fd-9ff0-7d4c-65c8b1ed8f89/sr Jul 9 09:44:46 na-mut-xen-03 SM: [3407716] Entering _checkMetadataVolume Jul 9 09:44:46 na-mut-xen-03 SM: [3407716] vdi_list_changed_blocks {'host_ref': 'OpaqueRef:8bb2bb09-1d56-cb47-6014-91c3eca78529', 'command': 'vdi_list_changed_blocks', 'args': ['OpaqueRef:3a4d6847-eb39-5a96-f2c2-ffcad3da4477'], 'device_config': {'SRmaster': 'true', 'port': '3260', 'SCSIid': '3624a93704071cc78f82b4df4000113ee', 'targetIQN': 'iqn.2010-06.com.purestorage:flasharray.2498b71d53b104d9', 'target': '10.20.0.21', 'multihomelist': 
'10.20.0.21:3260,10.20.0.22:3260,10.20.1.21:3260,10.20.1.22:3260'}, 'session_ref': '******', 'sr_ref': 'OpaqueRef:78f4538b-4c0b-531c-c523-fd92f8738fc5', 'sr_uuid': '5301ae76-31fd-9ff0-7d4c-65c8b1ed8f89', 'vdi_ref': 'OpaqueRef:05e1857b-f39d-47da-9c06-d0d35ba97f00', 'vdi_location': '9fae176c-2c5f-4fd1-91fd-9bdb32533795', 'vdi_uuid': '9fae176c-2c5f-4fd1-91fd-9bdb32533795', 'subtask_of': 'DummyRef:|dea7f1a8-9116-8be0-6ba8-c3ecac77b442|VDI.list_changed_blocks', 'vdi_on_boot': 'persist', 'vdi_allow_caching': 'false'} Jul 9 09:44:47 na-mut-xen-03 fairlock[3949]: /run/fairlock/devicemapper sent '3407716 - 1893215.990307086' Jul 9 09:44:47 na-mut-xen-03 fairlock[3949]: /run/fairlock/devicemapper sent '3407716 - 1893216.783648117' Jul 9 09:44:48 na-mut-xen-03 SM: [3407716] lock: opening lock file /var/lock/sm/9fae176c-2c5f-4fd1-91fd-9bdb32533795/cbtlog Jul 9 09:44:48 na-mut-xen-03 SM: [3407716] lock: acquired /var/lock/sm/9fae176c-2c5f-4fd1-91fd-9bdb32533795/cbtlog Jul 9 09:44:48 na-mut-xen-03 SM: [3407716] LVMCache: refreshing Jul 9 09:44:48 na-mut-xen-03 fairlock[3949]: /run/fairlock/devicemapper sent '3407716 - 1893217.178993739' Jul 9 09:44:48 na-mut-xen-03 SM: [3407716] ['/sbin/lvs', '--noheadings', '--units', 'b', '-o', '+lv_tags', '/dev/VG_XenStorage-5301ae76-31fd-9ff0-7d4c-65c8b1ed8f89'] Jul 9 09:44:48 na-mut-xen-03 SM: [3407716] pread SUCCESS Jul 9 09:44:49 na-mut-xen-03 fairlock[3949]: /run/fairlock/devicemapper sent '3407716 - 1893218.029420281' Jul 9 09:44:49 na-mut-xen-03 SM: [3407716] ['/sbin/lvchange', '-ay', '/dev/VG_XenStorage-5301ae76-31fd-9ff0-7d4c-65c8b1ed8f89/9fae176c-2c5f-4fd1-91fd-9bdb32533795.cbtlog'] Jul 9 09:44:49 na-mut-xen-03 SM: [3407716] pread SUCCESS Jul 9 09:44:49 na-mut-xen-03 SM: [3407716] ['/usr/sbin/cbt-util', 'get', '-n', '/dev/VG_XenStorage-5301ae76-31fd-9ff0-7d4c-65c8b1ed8f89/9fae176c-2c5f-4fd1-91fd-9bdb32533795.cbtlog', '-c'] Jul 9 09:44:49 na-mut-xen-03 SM: [3407716] pread SUCCESS Jul 9 09:44:49 na-mut-xen-03 SM: [3407716] fuser 
/dev/VG_XenStorage-5301ae76-31fd-9ff0-7d4c-65c8b1ed8f89/9fae176c-2c5f-4fd1-91fd-9bdb32533795.cbtlog => 1 / '' / '' Jul 9 09:44:50 na-mut-xen-03 fairlock[3949]: /run/fairlock/devicemapper sent '3407716 - 1893219.268237038' Jul 9 09:44:50 na-mut-xen-03 SM: [3407716] ['/sbin/lvchange', '-an', '/dev/VG_XenStorage-5301ae76-31fd-9ff0-7d4c-65c8b1ed8f89/9fae176c-2c5f-4fd1-91fd-9bdb32533795.cbtlog'] Jul 9 09:44:50 na-mut-xen-03 SM: [3407716] pread SUCCESS Jul 9 09:44:51 na-mut-xen-03 fairlock[3949]: /run/fairlock/devicemapper sent '3407716 - 1893220.721225461' Jul 9 09:44:51 na-mut-xen-03 SM: [3407716] ['/sbin/dmsetup', 'status', 'VG_XenStorage--5301ae76--31fd--9ff0--7d4c--65c8b1ed8f89-9fae176c--2c5f--4fd1--91fd--9bdb32533795.cbtlog'] Jul 9 09:44:51 na-mut-xen-03 SM: [3407716] pread SUCCESS Jul 9 09:44:51 na-mut-xen-03 SM: [3407716] lock: released /var/lock/sm/9fae176c-2c5f-4fd1-91fd-9bdb32533795/cbtlog Jul 9 09:44:51 na-mut-xen-03 fairlock[3949]: /run/fairlock/devicemapper sent '3407716 - 1893220.765534349' Jul 9 09:44:52 na-mut-xen-03 SM: [3407716] lock: opening lock file /var/lock/sm/54bc2594-5228-411a-a4b2-cc1a7502d9a4/cbtlog Jul 9 09:44:52 na-mut-xen-03 SM: [3407716] lock: acquired /var/lock/sm/54bc2594-5228-411a-a4b2-cc1a7502d9a4/cbtlog Jul 9 09:44:52 na-mut-xen-03 SM: [3407716] LVMCache: refreshing Jul 9 09:44:53 na-mut-xen-03 fairlock[3949]: /run/fairlock/devicemapper sent '3407716 - 1893222.038114535' Jul 9 09:44:53 na-mut-xen-03 SM: [3407716] ['/sbin/lvs', '--noheadings', '--units', 'b', '-o', '+lv_tags', '/dev/VG_XenStorage-5301ae76-31fd-9ff0-7d4c-65c8b1ed8f89'] Jul 9 09:44:53 na-mut-xen-03 SM: [3407716] pread SUCCESS Jul 9 09:44:54 na-mut-xen-03 fairlock[3949]: /run/fairlock/devicemapper sent '3407716 - 1893222.921315355' Jul 9 09:44:54 na-mut-xen-03 SM: [3407716] ['/sbin/lvchange', '-ay', '/dev/VG_XenStorage-5301ae76-31fd-9ff0-7d4c-65c8b1ed8f89/54bc2594-5228-411a-a4b2-cc1a7502d9a4.cbtlog'] Jul 9 09:44:54 na-mut-xen-03 SM: [3407716] pread SUCCESS Jul 9 09:44:54 
na-mut-xen-03 SM: [3407716] ['/usr/sbin/cbt-util', 'get', '-n', '/dev/VG_XenStorage-5301ae76-31fd-9ff0-7d4c-65c8b1ed8f89/54bc2594-5228-411a-a4b2-cc1a7502d9a4.cbtlog', '-s'] Jul 9 09:44:54 na-mut-xen-03 SM: [3407716] pread SUCCESS Jul 9 09:44:54 na-mut-xen-03 SM: [3407716] fuser /dev/VG_XenStorage-5301ae76-31fd-9ff0-7d4c-65c8b1ed8f89/54bc2594-5228-411a-a4b2-cc1a7502d9a4.cbtlog => 1 / '' / '' Jul 9 09:44:55 na-mut-xen-03 fairlock[3949]: /run/fairlock/devicemapper sent '3407716 - 1893224.138058885' Jul 9 09:44:55 na-mut-xen-03 SM: [3407716] ['/sbin/lvchange', '-an', '/dev/VG_XenStorage-5301ae76-31fd-9ff0-7d4c-65c8b1ed8f89/54bc2594-5228-411a-a4b2-cc1a7502d9a4.cbtlog'] Jul 9 09:44:55 na-mut-xen-03 SM: [3407716] pread SUCCESS Jul 9 09:44:56 na-mut-xen-03 fairlock[3949]: /run/fairlock/devicemapper sent '3407716 - 1893225.053222085' Jul 9 09:44:56 na-mut-xen-03 SM: [3407716] ['/sbin/dmsetup', 'status', 'VG_XenStorage--5301ae76--31fd--9ff0--7d4c--65c8b1ed8f89-54bc2594--5228--411a--a4b2--cc1a7502d9a4.cbtlog'] Jul 9 09:44:56 na-mut-xen-03 SM: [3407716] pread SUCCESS Jul 9 09:44:56 na-mut-xen-03 SM: [3407716] lock: released /var/lock/sm/54bc2594-5228-411a-a4b2-cc1a7502d9a4/cbtlog Jul 9 09:44:56 na-mut-xen-03 SM: [3407716] DEBUG: Processing VDI 54bc2594-5228-411a-a4b2-cc1a7502d9a4 of size 107374182400 Jul 9 09:44:56 na-mut-xen-03 SM: [3407716] lock: acquired /var/lock/sm/54bc2594-5228-411a-a4b2-cc1a7502d9a4/cbtlog Jul 9 09:44:56 na-mut-xen-03 SM: [3407716] LVMCache: refreshing Jul 9 09:44:56 na-mut-xen-03 fairlock[3949]: /run/fairlock/devicemapper sent '3407716 - 1893225.076140954' Jul 9 09:44:56 na-mut-xen-03 SM: [3407716] ['/sbin/lvs', '--noheadings', '--units', 'b', '-o', '+lv_tags', '/dev/VG_XenStorage-5301ae76-31fd-9ff0-7d4c-65c8b1ed8f89'] Jul 9 09:44:56 na-mut-xen-03 SM: [3407716] pread SUCCESS Jul 9 09:44:57 na-mut-xen-03 fairlock[3949]: /run/fairlock/devicemapper sent '3407716 - 1893225.934427314' Jul 9 09:44:57 na-mut-xen-03 SM: [3407716] ['/sbin/lvchange', '-ay', 
'/dev/VG_XenStorage-5301ae76-31fd-9ff0-7d4c-65c8b1ed8f89/54bc2594-5228-411a-a4b2-cc1a7502d9a4.cbtlog'] Jul 9 09:44:57 na-mut-xen-03 SM: [3407716] pread SUCCESS Jul 9 09:44:57 na-mut-xen-03 SM: [3407716] ['/usr/sbin/cbt-util', 'get', '-n', '/dev/VG_XenStorage-5301ae76-31fd-9ff0-7d4c-65c8b1ed8f89/54bc2594-5228-411a-a4b2-cc1a7502d9a4.cbtlog', '-b'] Jul 9 09:44:57 na-mut-xen-03 SM: [3407716] pread SUCCESS Jul 9 09:44:57 na-mut-xen-03 SM: [3407716] fuser /dev/VG_XenStorage-5301ae76-31fd-9ff0-7d4c-65c8b1ed8f89/54bc2594-5228-411a-a4b2-cc1a7502d9a4.cbtlog => 1 / '' / '' Jul 9 09:44:59 na-mut-xen-03 fairlock[3949]: /run/fairlock/devicemapper sent '3407716 - 1893227.790063374' Jul 9 09:44:59 na-mut-xen-03 SM: [3407716] ['/sbin/lvchange', '-an', '/dev/VG_XenStorage-5301ae76-31fd-9ff0-7d4c-65c8b1ed8f89/54bc2594-5228-411a-a4b2-cc1a7502d9a4.cbtlog'] Jul 9 09:44:59 na-mut-xen-03 SM: [3407716] pread SUCCESS Jul 9 09:45:00 na-mut-xen-03 fairlock[3949]: /run/fairlock/devicemapper sent '3407716 - 1893228.82935698' Jul 9 09:45:00 na-mut-xen-03 SM: [3407716] ['/sbin/dmsetup', 'status', 'VG_XenStorage--5301ae76--31fd--9ff0--7d4c--65c8b1ed8f89-54bc2594--5228--411a--a4b2--cc1a7502d9a4.cbtlog'] Jul 9 09:45:00 na-mut-xen-03 SM: [3407716] pread SUCCESS Jul 9 09:45:00 na-mut-xen-03 SM: [3407716] lock: released /var/lock/sm/54bc2594-5228-411a-a4b2-cc1a7502d9a4/cbtlog Jul 9 09:45:00 na-mut-xen-03 SM: [3407716] Size of bitmap: 1638400 Jul 9 09:45:00 na-mut-xen-03 SM: [3407716] lock: acquired /var/lock/sm/54bc2594-5228-411a-a4b2-cc1a7502d9a4/cbtlog Jul 9 09:45:00 na-mut-xen-03 SM: [3407716] LVMCache: refreshing Jul 9 09:45:00 na-mut-xen-03 fairlock[3949]: /run/fairlock/devicemapper sent '3407716 - 1893229.259729804' Jul 9 09:45:00 na-mut-xen-03 SM: [3407716] ['/sbin/lvs', '--noheadings', '--units', 'b', '-o', '+lv_tags', '/dev/VG_XenStorage-5301ae76-31fd-9ff0-7d4c-65c8b1ed8f89'] Jul 9 09:45:00 na-mut-xen-03 SM: [3407716] pread SUCCESS Jul 9 09:45:01 na-mut-xen-03 fairlock[3949]: 
/run/fairlock/devicemapper sent '3407716 - 1893230.644242194' Jul 9 09:45:01 na-mut-xen-03 SM: [3407716] ['/sbin/lvchange', '-ay', '/dev/VG_XenStorage-5301ae76-31fd-9ff0-7d4c-65c8b1ed8f89/54bc2594-5228-411a-a4b2-cc1a7502d9a4.cbtlog'] Jul 9 09:45:02 na-mut-xen-03 SM: [3407716] pread SUCCESS Jul 9 09:45:02 na-mut-xen-03 SM: [3407716] ['/usr/sbin/cbt-util', 'get', '-n', '/dev/VG_XenStorage-5301ae76-31fd-9ff0-7d4c-65c8b1ed8f89/54bc2594-5228-411a-a4b2-cc1a7502d9a4.cbtlog', '-c'] Jul 9 09:45:02 na-mut-xen-03 SM: [3407716] pread SUCCESS Jul 9 09:45:02 na-mut-xen-03 SM: [3407716] fuser /dev/VG_XenStorage-5301ae76-31fd-9ff0-7d4c-65c8b1ed8f89/54bc2594-5228-411a-a4b2-cc1a7502d9a4.cbtlog => 1 / '' / '' Jul 9 09:45:02 na-mut-xen-03 fairlock[3949]: /run/fairlock/devicemapper sent '3407716 - 1893231.488289557' Jul 9 09:45:02 na-mut-xen-03 SM: [3407716] ['/sbin/lvchange', '-an', '/dev/VG_XenStorage-5301ae76-31fd-9ff0-7d4c-65c8b1ed8f89/54bc2594-5228-411a-a4b2-cc1a7502d9a4.cbtlog'] Jul 9 09:45:03 na-mut-xen-03 SM: [3407716] pread SUCCESS Jul 9 09:45:04 na-mut-xen-03 fairlock[3949]: /run/fairlock/devicemapper sent '3407716 - 1893233.069490829' Jul 9 09:45:04 na-mut-xen-03 SM: [3407716] ['/sbin/dmsetup', 'status', 'VG_XenStorage--5301ae76--31fd--9ff0--7d4c--65c8b1ed8f89-54bc2594--5228--411a--a4b2--cc1a7502d9a4.cbtlog'] Jul 9 09:45:04 na-mut-xen-03 SM: [3407716] pread SUCCESS Jul 9 09:45:04 na-mut-xen-03 SM: [3407716] lock: released /var/lock/sm/54bc2594-5228-411a-a4b2-cc1a7502d9a4/cbtlog Jul 9 09:45:04 na-mut-xen-03 fairlock[3949]: /run/fairlock/devicemapper sent '3407716 - 1893233.110222368' Jul 9 09:45:04 na-mut-xen-03 SM: [3407716] Raising exception [460, Failed to calculate changed blocks for given VDIs. [opterr=Source and target VDI are unrelated]] Jul 9 09:45:04 na-mut-xen-03 SM: [3407716] ***** generic exception: vdi_list_changed_blocks: EXCEPTION <class 'xs_errors.SROSError'>, Failed to calculate changed blocks for given VDIs. 
[opterr=Source and target VDI are unrelated] Jul 9 09:45:04 na-mut-xen-03 SM: [3407716] File "/opt/xensource/sm/SRCommand.py", line 113, in run Jul 9 09:45:04 na-mut-xen-03 SM: [3407716] return self._run_locked(sr) Jul 9 09:45:04 na-mut-xen-03 SM: [3407716] File "/opt/xensource/sm/SRCommand.py", line 163, in _run_locked Jul 9 09:45:04 na-mut-xen-03 SM: [3407716] rv = self._run(sr, target) Jul 9 09:45:04 na-mut-xen-03 SM: [3407716] File "/opt/xensource/sm/SRCommand.py", line 333, in _run Jul 9 09:45:04 na-mut-xen-03 SM: [3407716] return target.list_changed_blocks() Jul 9 09:45:04 na-mut-xen-03 SM: [3407716] File "/opt/xensource/sm/VDI.py", line 761, in list_changed_blocks Jul 9 09:45:04 na-mut-xen-03 SM: [3407716] "Source and target VDI are unrelated") Jul 9 09:45:04 na-mut-xen-03 SM: [3407716] Jul 9 09:45:04 na-mut-xen-03 SM: [3407716] ***** LVHD over iSCSI: EXCEPTION <class 'xs_errors.SROSError'>, Failed to calculate changed blocks for given VDIs. [opterr=Source and target VDI are unrelated] Jul 9 09:45:04 na-mut-xen-03 SM: [3407716] File "/opt/xensource/sm/SRCommand.py", line 392, in run Jul 9 09:45:04 na-mut-xen-03 SM: [3407716] ret = cmd.run(sr) Jul 9 09:45:04 na-mut-xen-03 SM: [3407716] File "/opt/xensource/sm/SRCommand.py", line 113, in run Jul 9 09:45:04 na-mut-xen-03 SM: [3407716] return self._run_locked(sr) Jul 9 09:45:04 na-mut-xen-03 SM: [3407716] File "/opt/xensource/sm/SRCommand.py", line 163, in _run_locked Jul 9 09:45:04 na-mut-xen-03 SM: [3407716] rv = self._run(sr, target) Jul 9 09:45:04 na-mut-xen-03 SM: [3407716] File "/opt/xensource/sm/SRCommand.py", line 333, in _run Jul 9 09:45:04 na-mut-xen-03 SM: [3407716] return target.list_changed_blocks() Jul 9 09:45:04 na-mut-xen-03 SM: [3407716] File "/opt/xensource/sm/VDI.py", line 761, in list_changed_blocks Jul 9 09:45:04 na-mut-xen-03 SM: [3407716] "Source and target VDI are unrelated") Jul 9 09:45:04 na-mut-xen-03 SM: [3407716] Jul 9 09:45:04 na-mut-xen-03 SM: [3407716] lock: closed 
/var/lock/sm/9fae176c-2c5f-4fd1-91fd-9bdb32533795/cbtlog Jul 9 09:45:04 na-mut-xen-03 SM: [3407716] lock: closed /var/lock/sm/54bc2594-5228-411a-a4b2-cc1a7502d9a4/cbtlog Jul 9 09:45:04 na-mut-xen-03 SM: [3407716] lock: closed /var/lock/sm/5301ae76-31fd-9ff0-7d4c-65c8b1ed8f89/sr

-

@ronan-a Hello, do you have any news, please?

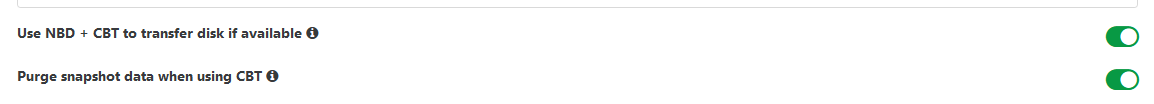

I'd like to understand the difference between the 8.2 and 8.3 versions: why is there garbage collection in 8.3 and not in 8.2? On what criteria is the GC triggered? We've forced autoscan=false and leaf-coalesce=false on the iSCSI SRs in our 8.3 cluster, and the SRs are becoming full. I'd like to understand why that happens too, considering my backup jobs have CBT enabled.

-

@Razor_648 Hi,

The log you showed only means that it couldn't compare two VDIs using their CBT.

It sometimes happens that a CBT chain becomes disconnected.

Disabling leaf-coalesce means the coalesce won't run on leaves, so a VHD chain will always be 2 levels deep.
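To picture what "2 levels deep" means, a VHD chain can be modelled as a child-to-parent map and the depth counted from each leaf up to its root. This is a toy model with hypothetical VDI names, not the data structure the GC uses: it shows a base copy with one active leaf on top, the leftover shape that leaf-coalesce would normally merge.

```python
def chain_depths(parent_of):
    """Map each leaf VDI to the number of VHDs in its chain (leaf included)."""
    parents = {p for p in parent_of.values() if p is not None}
    depths = {}
    for vdi in parent_of:
        if vdi in parents:
            continue  # has children, so it is not a leaf
        depth, node = 0, vdi
        while node is not None:
            depth += 1
            node = parent_of.get(node)
        depths[vdi] = depth
    return depths


# Hypothetical chain left behind after a snapshot was removed.
chain = {"base": None, "active": "base"}
print(chain_depths(chain))
```

A disk with no parent at all would report a depth of 1, which is the shape a completed leaf-coalesce is expected to leave.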

You migrated 200 VMs; every disk of those VMs had a snapshot taken, which then needs to be coalesced. That can take a while.

Your backup then also takes a snapshot on each run, and that needs to be coalesced as well.

There is a GC in both XCP-ng 8.2 and 8.3.

The GC runs independently of auto-scan. If you really want to disable it, you can do so temporarily using /opt/xensource/sm/cleanup.py -x -u <SR UUID>; it will stop the GC until you press Enter. I guess you could run it in a tmux session to keep the GC stopped until the next reboot. But it would be better to find the problem or, if there really is no problem, to let the GC work until it's finished.

Needing 15 minutes to take a snapshot is a bit weird, though; it would point to a problem.

Do you have any errors other than the CBT one in your SMlog?