the writer IncrementalRemoteWriter has failed the step writer.beforeBackup() with error Lock file is already being held. It won't be used anymore in this job execution.

-

Thanks. As soon the commit generating the error is identified, this will be very helpful for @florent

-

@olivierlambert I can then restore the config and retest once it's identified/fixed.... But just now I need the backups working without issues

-

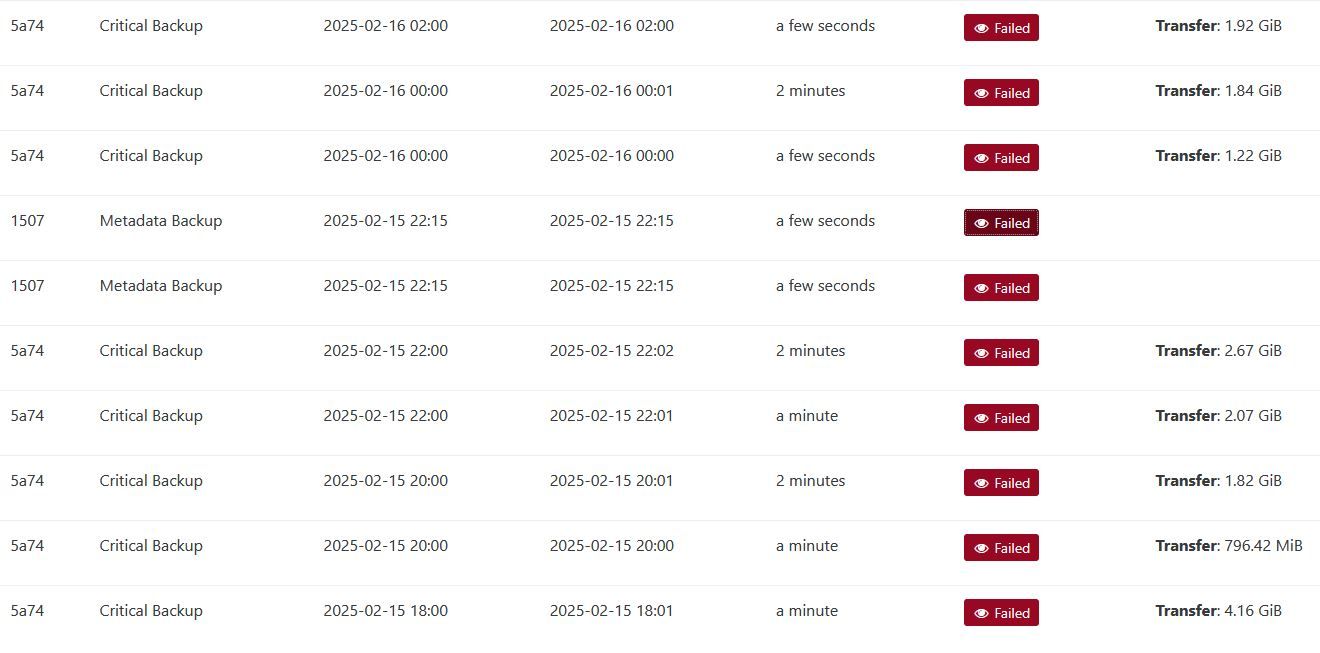

So since I went back to an older version, I have not had a single backup fail. I have 3 main backups that run. 2 of them daily (my pool metadata/XO config and a delta of 18vms) and then 1 every 2 hours (also a delta but only of 3 vms). ALL of them would fail in one way or another, even the metadata. Something I just noticed now is it looks like each backup is starting twice or at least there are failed logs indicating so. If they are actually running twice that would explain the errors. This would also explain why clicking the retry backup option would always succeed.

Error from the metadata backup:

EEXIST: file already exists, open '/run/xo-server/mounts/c9199dfc-af05-4707-badb-8741e61daafb/xo-config-backups/f19069dd-f98b-4b41-9ca8-0e711fc75968/20250216T041500Z/data.json' -

@SudoOracle are you able to find the offending commit?

-

@olivierlambert Still working on it. It's between a4986 and 494ea.

-

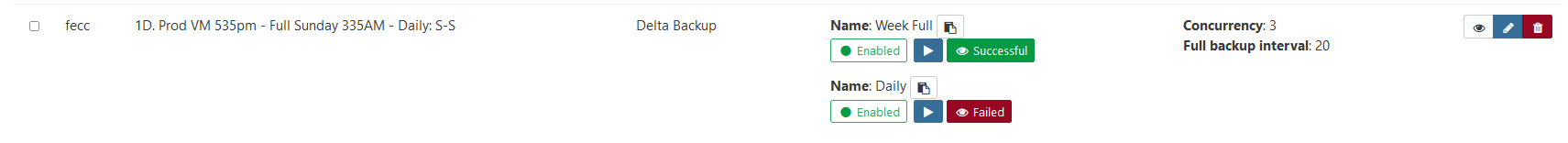

@olivierlambert Just to confirm that the error have stopped since I changed the backup plans.

The change being to have only one schedule per job and not using Replicate jobs.

-

Ok so I'm not sure what happened but it's working fine now, I made it all the way back to commit 494ea and my backups continue to work. I have done nothing except move between commits. Maybe something happened when updating before? I am going to move to the latest commit and see if it continues to work.

-

@SudoOracle could you post the full json log of the backup ?

(you can get it by clicking on the download button on top of a failed execution job)if possible, one per type of failed backup job

-

@florent So it does seem to be working now. I'm on the latest version and backups are fine. Attached are the 2 jsons of the failed jobs. It's technically only the 1 backup, but there were 2 failed jobs per backup job.

2025-02-16T22_00_00.004Z - backup NG.txt

2025-02-16T22_00_00.007Z - backup NG.txt -

I just had a backup fail with a similar error.

Details:

XO Community

Xen Orchestra, commit 749f0

Master, commit 749f0

Merge backups synchronously was off on the backup job, going to enable it.

{ "data": { "type": "VM", "id": "4f715b32-ddfb-5818-c7bd-aaaa2a77ce70", "name_label": "PROD_SophosXG" }, "id": "1740263738986", "message": "backup VM", "start": 1740263738986, "status": "failure", "warnings": [ { "message": "the writer IncrementalRemoteWriter has failed the step writer.beforeBackup() with error Lock file is already being held. It won't be used anymore in this job execution." } ], "end": 1740263738993, "result": { "code": "ELOCKED", "file": "/run/xo-server/mounts/f992fff1-e245-48f7-8eb3-25987ecbfbd4/xo-vm-backups/4f715b32-ddfb-5818-c7bd-aaaa2a77ce70", "message": "Lock file is already being held", "name": "Error", "stack": "Error: Lock file is already being held\n at /opt/xo/xo-builds/xen-orchestra-202502212211/node_modules/proper-lockfile/lib/lockfile.js:68:47\n at callback (/opt/xo/xo-builds/xen-orchestra-202502212211/node_modules/graceful-fs/polyfills.js:306:20)\n at FSReqCallback.oncomplete (node:fs:199:5)\n at FSReqCallback.callbackTrampoline (node:internal/async_hooks:130:17)\nFrom:\n at NfsHandler.addSyncStackTrace (/opt/xo/xo-builds/xen-orchestra-202502212211/@xen-orchestra/fs/dist/local.js:21:26)\n at NfsHandler._lock (/opt/xo/xo-builds/xen-orchestra-202502212211/@xen-orchestra/fs/dist/local.js:135:48)\n at NfsHandler.lock (/opt/xo/xo-builds/xen-orchestra-202502212211/@xen-orchestra/fs/dist/abstract.js:234:27)\n at IncrementalRemoteWriter.beforeBackup (file:///opt/xo/xo-builds/xen-orchestra-202502212211/@xen-orchestra/backups/_runners/_writers/_MixinRemoteWriter.mjs:54:34)\n at async IncrementalRemoteWriter.beforeBackup (file:///opt/xo/xo-builds/xen-orchestra-202502212211/@xen-orchestra/backups/_runners/_writers/IncrementalRemoteWriter.mjs:68:5)\n at async file:///opt/xo/xo-builds/xen-orchestra-202502212211/@xen-orchestra/backups/_runners/_vmRunners/_AbstractXapi.mjs:343:7\n at async callWriter (file:///opt/xo/xo-builds/xen-orchestra-202502212211/@xen-orchestra/backups/_runners/_vmRunners/_Abstract.mjs:33:9)\n at async IncrementalXapiVmBackupRunner.run (file:///opt/xo/xo-builds/xen-orchestra-202502212211/@xen-orchestra/backups/_runners/_vmRunners/_AbstractXapi.mjs:342:5)\n at async file:///opt/xo/xo-builds/xen-orchestra-202502212211/@xen-orchestra/backups/_runners/VmsXapi.mjs:166:38" } },Email report of failure

VM Backup report Global status : failure 🚨 • Job ID: bfadfecc-b651-4fd6-b104-2f015100db29 • Run ID: 1740263706121 • Mode: delta • Start time: Saturday, February 22nd 2025, 5:35:06 pm • End time: Saturday, February 22nd 2025, 5:37:59 pm • Duration: 3 minutes • Successes: 0 / 8 1 Failure PROD_SophosXG Production Sophos Firewall Application • UUID: 4f715b32-ddfb-5818-c7bd-aaaa2a77ce70 • Start time: Saturday, February 22nd 2025, 5:35:38 pm • End time: Saturday, February 22nd 2025, 5:35:38 pm • Duration: a few seconds • ⚠️ the writer IncrementalRemoteWriter has failed the step writer.beforeBackup() with error Lock file is already being held. It won't be used anymore in this job execution. • Error: Lock file is already being heldManually run completed

PROD_SophosXG (xcp02) Clean VM directory cleanVm: incorrect backup size in metadata Start: 2025-02-22 18:37 End: 2025-02-22 18:37 Snapshot Start: 2025-02-22 18:37 End: 2025-02-22 18:37 Qnap NFS Backup transfer Start: 2025-02-22 18:37 End: 2025-02-22 18:38 Duration: a minute Size: 12.85 GiB Speed: 185.52 MiB/s Start: 2025-02-22 18:37 End: 2025-02-22 18:44 Duration: 7 minutes Start: 2025-02-22 18:37 End: 2025-02-22 18:44 Duration: 7 minutes Type: delta