VM qcow2 --> vhd Drive Migration Failures

-

Hello again.

I have batched transferred and exported a series of *.qcow2 VHD's from Proxmox to remote NFS storage:

qemu-img convert -O qcow2 /dev/zvol/zpool1/name-of-vm-plus-id /mnt/pve/nfs-share/dump/name-of-vm-plus-id.qcow2I then converted those exported images to *.vhd using the

qemu-imgcommand:qemu-img convert -O vpc /mnt/pve/nfs-share/dump/name-of-vm-plus-id.qcow2 /mnt/pve/nfs-share/dump/'uuid-target-vdi-xcp-ng'.vhdFinally, I attempted to "IMPORT" the

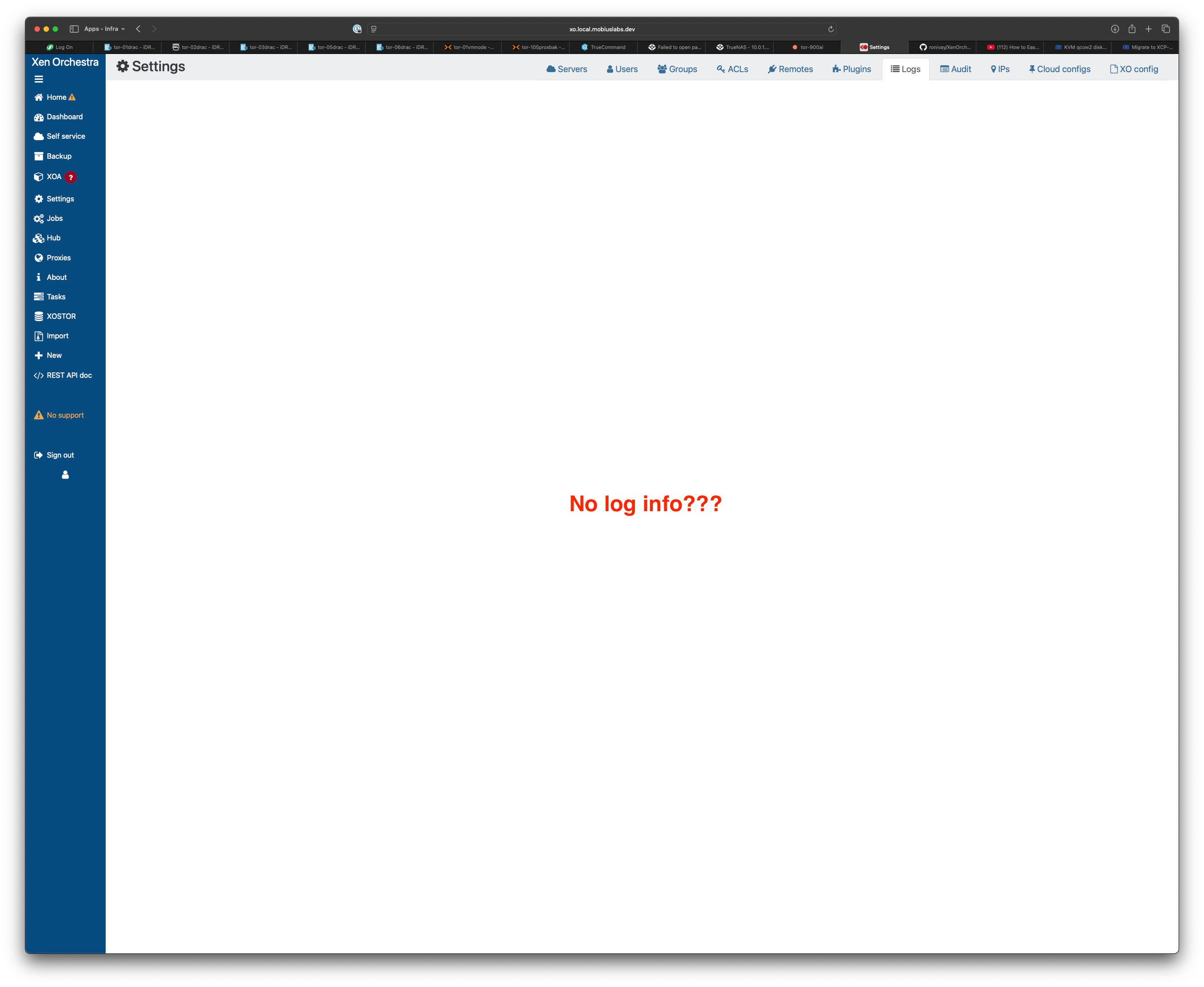

'uuid-target-vdi-xcp-ng'.vhdimages into XCP-ng and they fail, every single time. When I attempt to comb the log for errors from within XO there is nothing (see screenshot). Which is very, very weird.
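With many disks, the two conversion commands above can be looped over the dump directory. A minimal sketch, assuming bash, `qemu-img` and `uuidgen` on the machine doing the conversion, and that each output should be named with a fresh UUID (the `uuidgen` naming is the approach suggested later in this thread). `convert_dump_dir` and the `DRY_RUN` switch are hypothetical names for illustration:

```bash
#!/usr/bin/env bash
# Sketch: batch-convert every *.qcow2 in a directory to VHD (qemu-img's "vpc" format),
# naming each output <new-uuid>.vhd. convert_dump_dir and DRY_RUN are hypothetical names.
set -euo pipefail

run() {
  # With DRY_RUN=1, print the command instead of executing it.
  if [ "${DRY_RUN:-0}" = "1" ]; then
    echo "$*"
  else
    "$@"
  fi
}

convert_dump_dir() {
  local dir="$1" src uuid
  for src in "$dir"/*.qcow2; do
    [ -e "$src" ] || continue                          # no matches: glob stays literal, skip it
    uuid="$(uuidgen | tr '[:upper:]' '[:lower:]')"     # lowercase UUID for the file name
    run qemu-img convert -O vpc "$src" "$dir/$uuid.vhd"
    echo "# $(basename "$src") -> $uuid.vhd"           # record which disk got which UUID
  done
}

if [ "$#" -eq 1 ]; then
  convert_dump_dir "$1"
fi
```

Running it once as `DRY_RUN=1 ./convert.sh /mnt/pve/nfs-share/dump` (the script name is arbitrary) prints the commands and the disk-to-UUID mapping without converting anything.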

I was originally referencing this post when searching for a way to convert Proxmox disks for XCP-ng. Before finding the forum post, I did peruse and review the official docs here.

Hopefully there's a simple way for me to view the logs and understand why XCP-ng fails to import the converted `*.vhd` files.
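If XO's log view stays empty, the host itself keeps the XAPI and storage-manager logs (`/var/log/xensource.log` and `/var/log/SMlog` on a standard XCP-ng install). A rough sketch for pulling the latest error-looking lines out of them, assuming shell access to the host; `recent_errors` is a hypothetical helper and the keyword pattern is a guess, not an official filter:

```bash
#!/usr/bin/env bash
# Sketch: print recent error-looking lines from XCP-ng host logs.
# recent_errors is a hypothetical helper; the grep pattern is a rough guess.
set -euo pipefail

recent_errors() {
  local n="${1:-20}"                         # how many matching lines to keep per log
  if [ "$#" -gt 0 ]; then shift; fi
  if [ "$#" -eq 0 ]; then
    # Default logs: storage manager (SMlog) and XAPI (xensource.log).
    set -- /var/log/SMlog /var/log/xensource.log
  fi
  local f
  for f in "$@"; do
    [ -r "$f" ] || continue                  # skip logs that are missing or unreadable
    echo "== $f"
    grep -iE 'error|exception|fail' "$f" | tail -n "$n" || true
  done
}

recent_errors "$@"
```

`./recent_errors.sh 50` would show the last 50 matching lines from each default log; any arguments after the count replace the default log list.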

Thanks in advance for your help!

-

@cichy The docs specifically mention that you need to repair the VHD files following the rsync operation.

-

@Danp right, this:

- Use rsync to copy the converted files (VHD) to your XCP-ng host.

- After the rsync operation, the VHD are not valid for the XAPI, so repair them
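The two documented steps above (copy, then repair) could look like this when run from the staging machine. A minimal sketch, assuming root SSH access to the XCP-ng host and that the destination is the SR directory `/run/sr-mount/<SR-UUID>` (SR UUID, not VM UUID). The `vhd-util repair -n` / `vhd-util check -n` invocations reflect my reading of the XCP-ng docs, and the final `xe sr-scan` is an added step so XAPI notices the new file; verify both against the docs. `copy_and_repair` and `DRY_RUN` are hypothetical:

```bash
#!/usr/bin/env bash
# Sketch: copy a converted VHD into an SR directory on the XCP-ng host,
# repair/check it with vhd-util, then rescan the SR so XAPI registers the VDI.
# copy_and_repair and DRY_RUN are hypothetical names; host and SR UUID are placeholders.
set -euo pipefail

run() {
  # With DRY_RUN=1, print the command instead of executing it.
  if [ "${DRY_RUN:-0}" = "1" ]; then
    echo "$*"
  else
    "$@"
  fi
}

copy_and_repair() {
  local vhd="$1" host="$2" sr_uuid="$3"
  local dest="/run/sr-mount/$sr_uuid"      # SR UUID, not VM UUID
  local name
  name="$(basename "$vhd")"
  run rsync -av "$vhd" "root@$host:$dest/"
  run ssh "root@$host" vhd-util repair -n "$dest/$name"
  run ssh "root@$host" vhd-util check -n "$dest/$name"
  run ssh "root@$host" xe sr-scan "uuid=$sr_uuid"
}

if [ "$#" -eq 3 ]; then
  copy_and_repair "$@"
fi
```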

But `rsync` to where? @AtaxyaNetwork mentions `/run/sr-mount/uuid-of-vm`, but I don't see a folder for the UUID of the VM I created there. Is that because it resides on an NFS mount? Or does the `*.vhd` just need to exist somewhere on the XCP-ng host? Confused.

I want the `*.vhd` files to be on the NFS mount/share. Though I could `rsync` to a local folder and then migrate once they're added?

-

Also, where does XCP-ng mount NFS volumes? Not in `/mnt` or `/media`, and I cannot seem to find anything in `/dev` either. If I am going to `rsync` the image to the host for repair, I need to be able to access the files on the NFS mount.

-

It's in `/run/sr-mount/<SR-UUID>` (not VM UUID!!)

-

@olivierlambert literally in the midst of replying! Yes, got it.

After reading over the reference post, I realized `sr-mount` (i.e. Storage Repository) was where all my `*.vhd` files are. Duh. So now only one question remains for me:

Do I copy the `qcow2` to my "buffer" machine (as noted in the docs), perform the conversion to `vhd` there, and then `rsync` the resulting output to the NFS share directory identified under `sr-mount`, or to the XCP-ng host directly? There shouldn't be any difference here, as they are the same NFS mount.

Sorry, one additional follow-up: do I name the `vhd` image exactly the same as the existing 'dummy' `vhd` that was created with the VDI? Following this, do I then disconnect the drive from the VDI and re-import? Perhaps my confusion stems from the fact that I have already created a VDI for the drive I am migrating, whereas the docs say not to do this until after the image has been converted.
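To find which directory under `sr-mount` belongs to a given SR, and which VDI UUIDs already live there, `xe` on the host can do the lookup. A sketch, assuming the SR's name label is unique; `sr_mount_dir` and `list_vdis` are hypothetical helpers. On file-based SRs such as local EXT or NFS, each VDI is normally stored as `<VDI-UUID>.vhd` in that directory, so the file name is what ties a `.vhd` to a VDI:

```bash
#!/usr/bin/env bash
# Sketch: resolve an SR's mount directory on an XCP-ng host and list its VDIs.
# sr_mount_dir and list_vdis are hypothetical helper names.
set -euo pipefail

sr_mount_dir() {
  local uuid
  # --minimal prints only the matching UUIDs, comma-separated.
  uuid="$(xe sr-list name-label="$1" params=uuid --minimal)"
  if [ -z "$uuid" ] || [[ "$uuid" == *,* ]]; then
    echo "expected exactly one SR named '$1', got '$uuid'" >&2
    return 1
  fi
  echo "/run/sr-mount/$uuid"                  # directory is keyed by SR UUID
}

list_vdis() {
  # Prints a uuid / name-label record for each VDI on the given SR.
  xe vdi-list sr-uuid="$1" params=uuid,name-label
}

if [ "$#" -eq 1 ]; then
  dir="$(sr_mount_dir "$1")"
  ls -l "$dir"                                # file-based SRs: <VDI-UUID>.vhd files
  list_vdis "$(basename "$dir")"              # the directory name is the SR UUID
fi
```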

-

Okay. I have cracked this nut!

Answered all my questions by muddling my way through things.

Will document my steps after I finish the next batch of VMs/VDIs. I will say, though, that after figuring this out and looking for ways to automate and streamline the process, this is hella labour-intensive! Honestly, for many of these VMs it is less work to re-create them from scratch. I wish there were a way as easy as with VMware.

In short, I re-read the docs over and over. Then I followed the advice to use a "staging/buffer" environment to carry out all of the `rsync` and `qemu-img convert` tasks. The tricky part (for me) was locating the UUID info etc. for the disk images. I copied the converted images via `rsync` to the local datastore, booted my first VM, and everything checked out. However, I am not able to migrate the VDI off the local `sr-mount` repo and onto my NAS NFS volume. It fails every time.

-

@cichy The documentation for the KVM/Proxmox part is really weird, with unnecessary steps.

Normally you just have to do:

- ``qemu-img convert -O vpc proxmox-disk.qcow2 `uuidgen`.vhd``

- `scp thegenerateduuid.vhd ip.xcp-ng.server:/run/sr-mount/uuid-of-your-SR`

- Then, with XO, create a VM and attach the disk.
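The steps above as one script, capturing the generated UUID in a variable so that `scp` copies the same file `qemu-img` just wrote (the `thegenerateduuid.vhd` placeholder). A minimal sketch: the host and SR UUID are placeholders, root SSH access is assumed, `import_disk` and `DRY_RUN` are hypothetical, and the trailing `xe sr-scan` is an added assumption so the new VDI shows up before attaching it in XO. Per the rest of this thread, a `vhd-util` repair may still be needed before the VDI registers:

```bash
#!/usr/bin/env bash
# Sketch: convert one Proxmox qcow2 to <new-uuid>.vhd, copy it into an SR, rescan the SR.
# import_disk and DRY_RUN are hypothetical; host and SR values are placeholders.
set -euo pipefail

run() {
  # With DRY_RUN=1, print the command instead of executing it.
  if [ "${DRY_RUN:-0}" = "1" ]; then echo "$*"; else "$@"; fi
}

import_disk() {
  local qcow2="$1" host="$2" sr_uuid="$3"
  local vdi_uuid
  # Generate the UUID once and reuse it; lowercased since some uuidgen builds print uppercase.
  vdi_uuid="$(uuidgen | tr '[:upper:]' '[:lower:]')"
  run qemu-img convert -O vpc "$qcow2" "$vdi_uuid.vhd"
  run scp "$vdi_uuid.vhd" "root@$host:/run/sr-mount/$sr_uuid/"
  run ssh "root@$host" xe sr-scan "uuid=$sr_uuid"    # assumption: rescan so XAPI sees the VDI
  echo "VDI UUID: $vdi_uuid"
}

if [ "$#" -eq 3 ]; then
  import_disk "$@"
fi
```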

I really need to find some time to finish reworking this part of the docs. I'm sorry I didn't do it earlier.

-

@AtaxyaNetwork I appreciate the sentiment. I think this one is all on me, as @Danp pointed out.

My VDIs would not register without the repair step. I'm unsure why, because the error logs within XO were completely blank. Your post, in conjunction with the docs, was extremely helpful, though!