Orphan VDIs in XO show health problem

-

Version:

XO:

From:

Xen Orchestra, commit 0a28a

To:

Xen Orchestra, commit b89c2

XCP-ng:

From:

8.3 loaded from ISO: xcp-ng-8.3.0-rc1.iso

To:

8.3 loaded from ISO: xcp-ng-8.3.0-20250606.iso

I haven't updated it since, and won't until there is a major version release.

Issue:

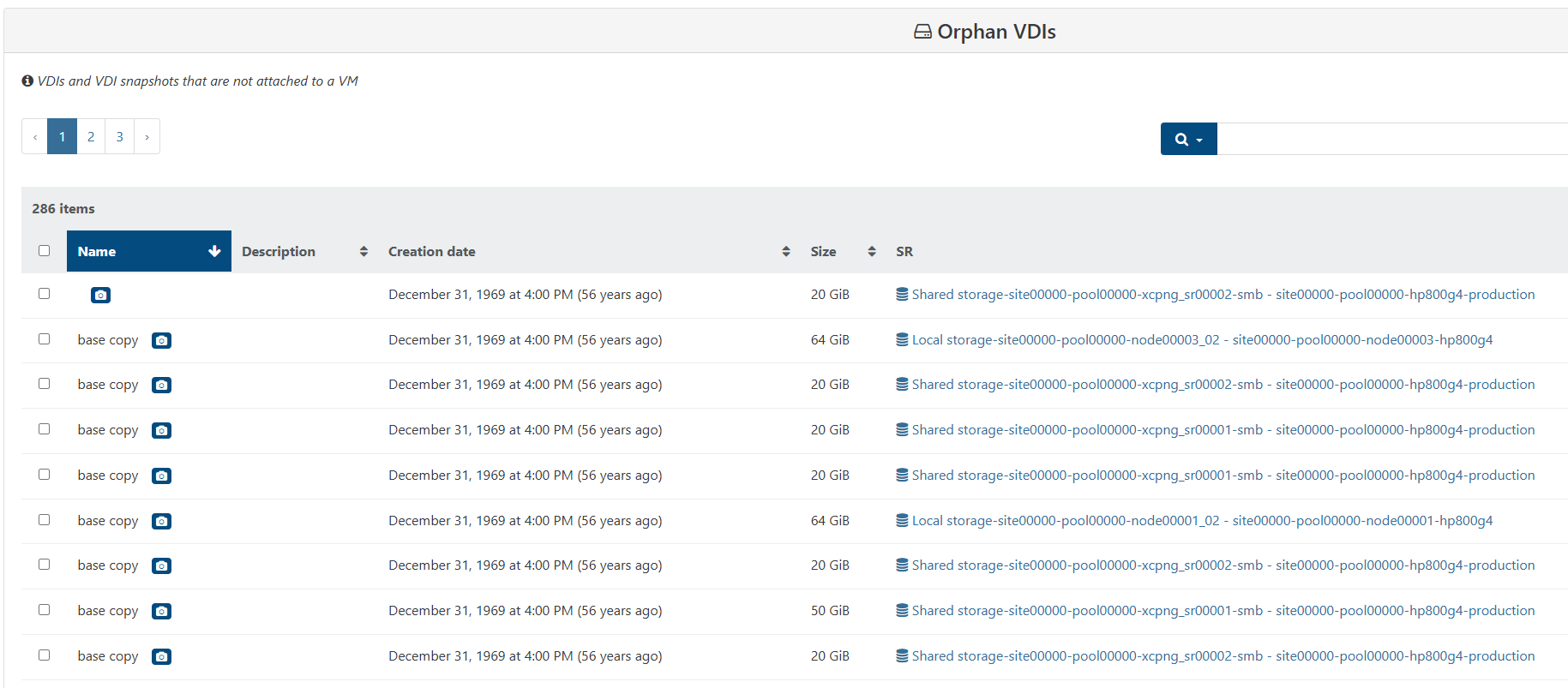

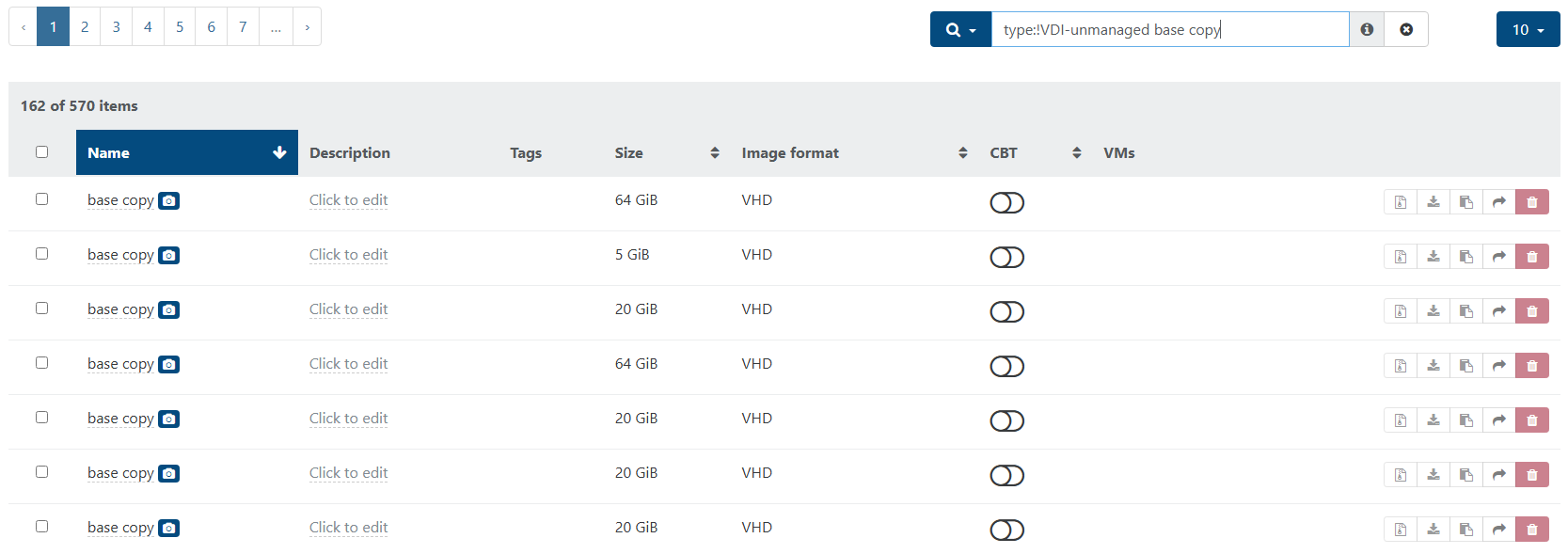

In XO, the Orphan VDIs health check flags my "base copy" snapshots, as if it can no longer find the VDIs for some reason. Why?

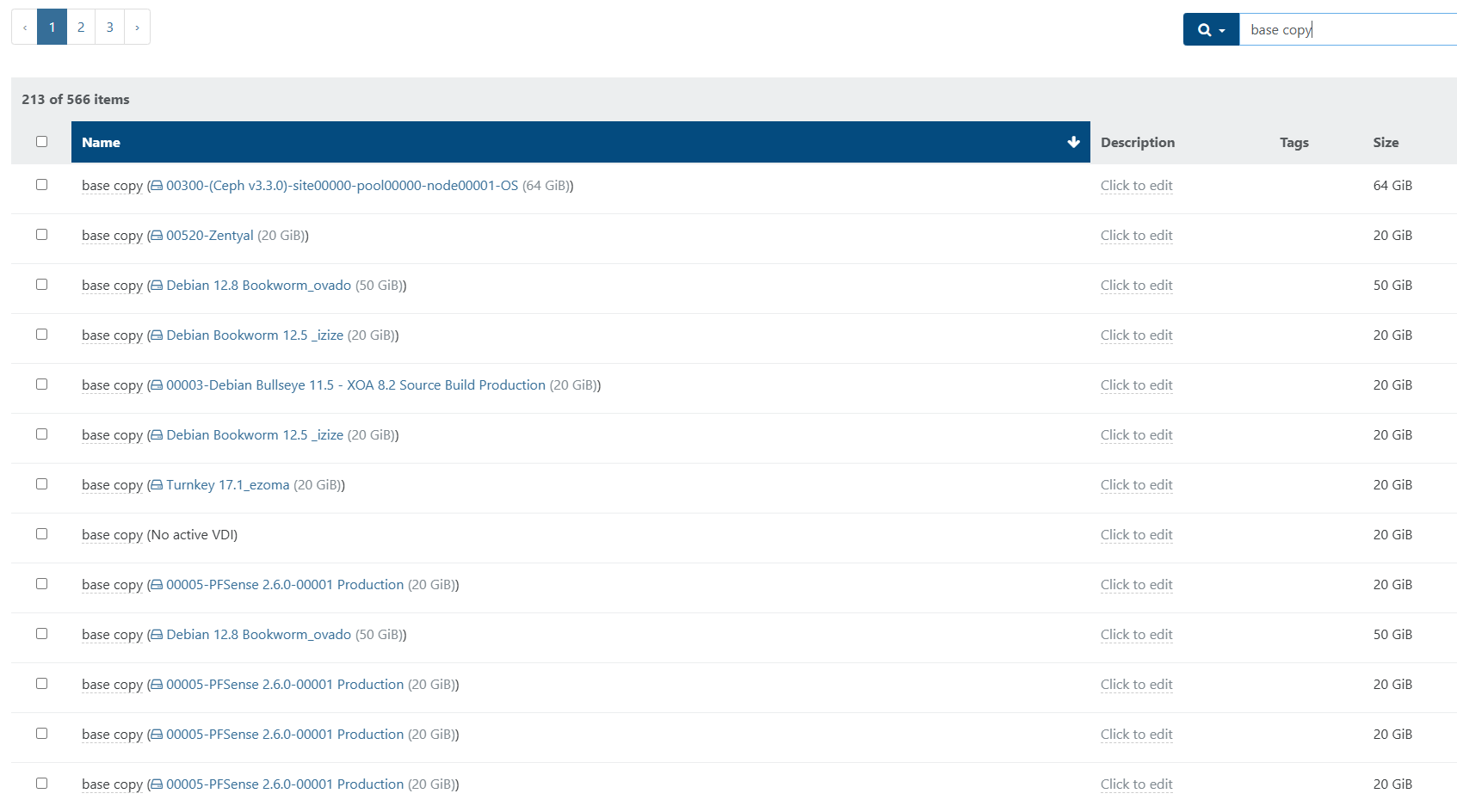

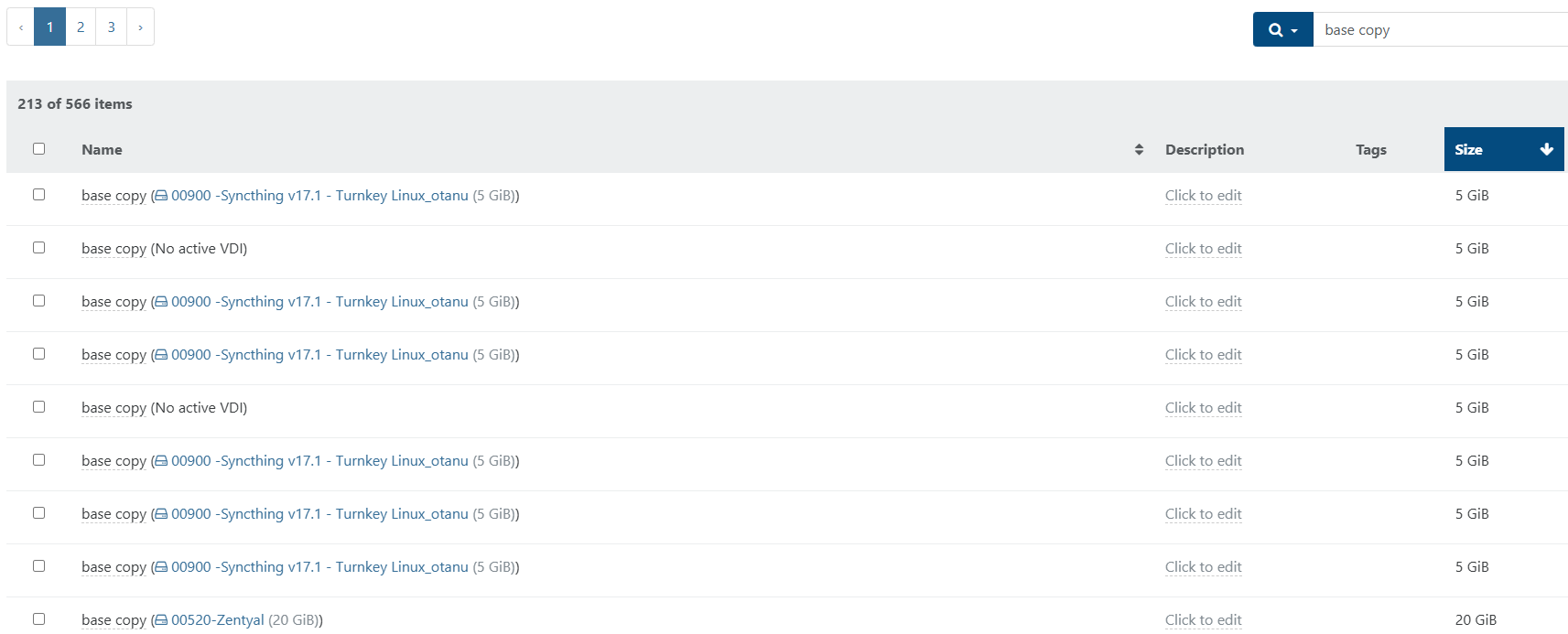

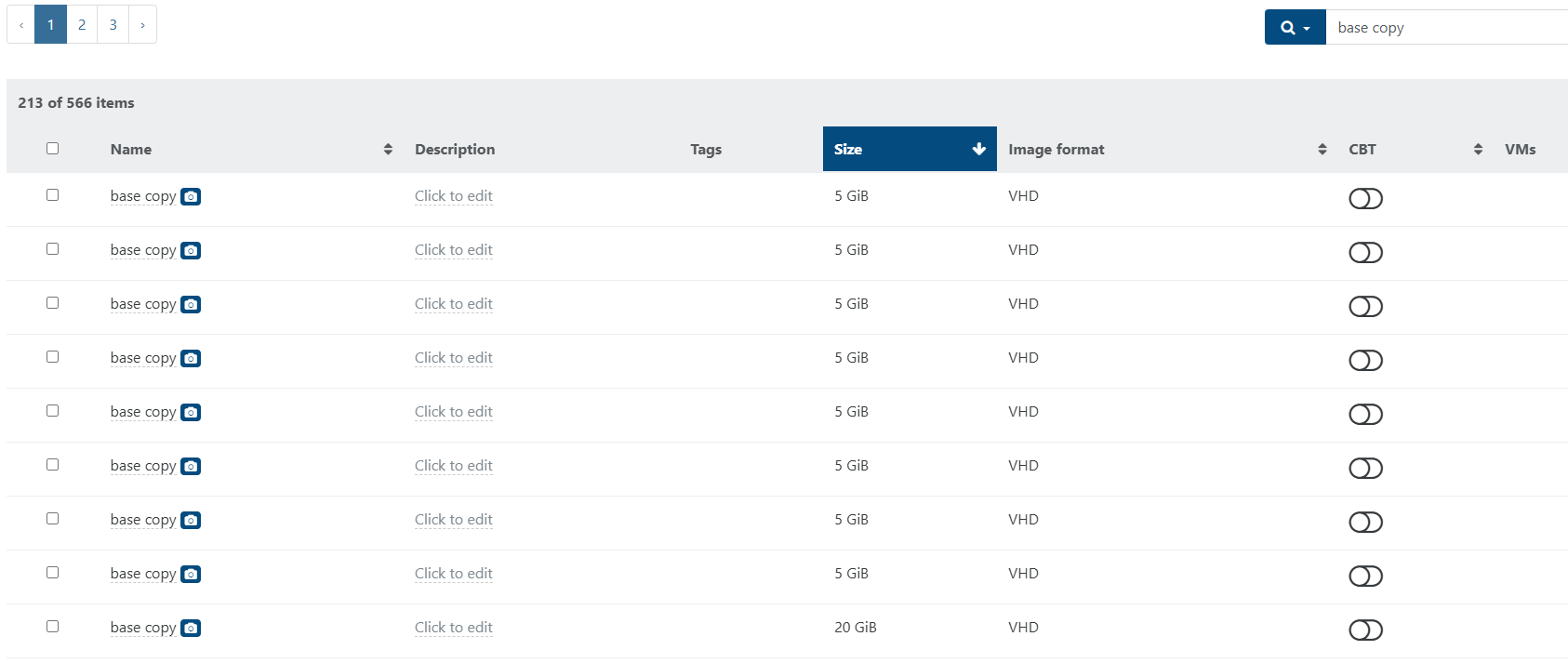

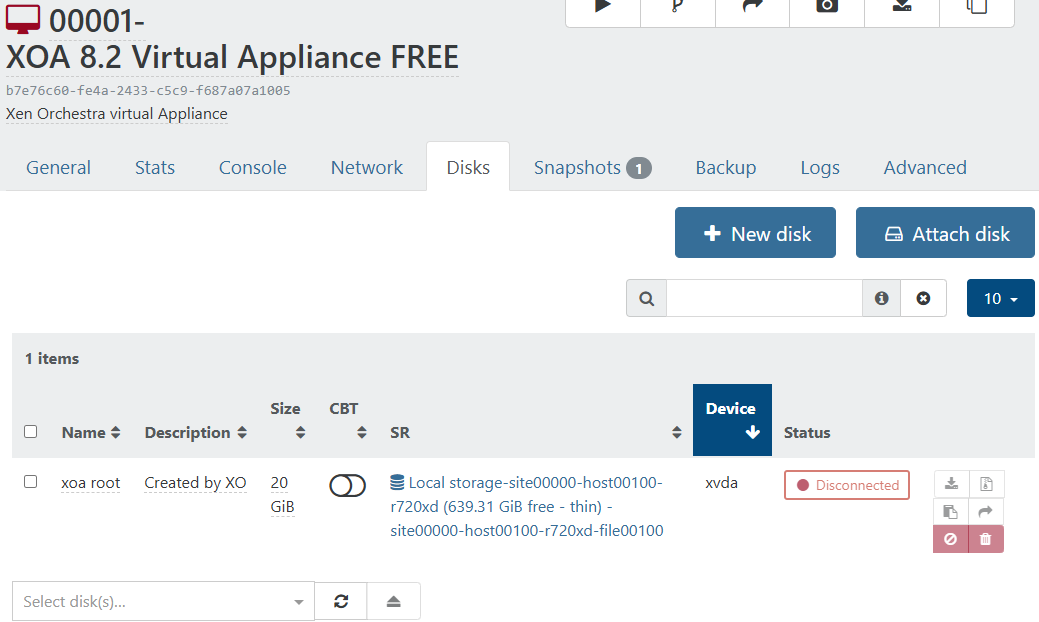

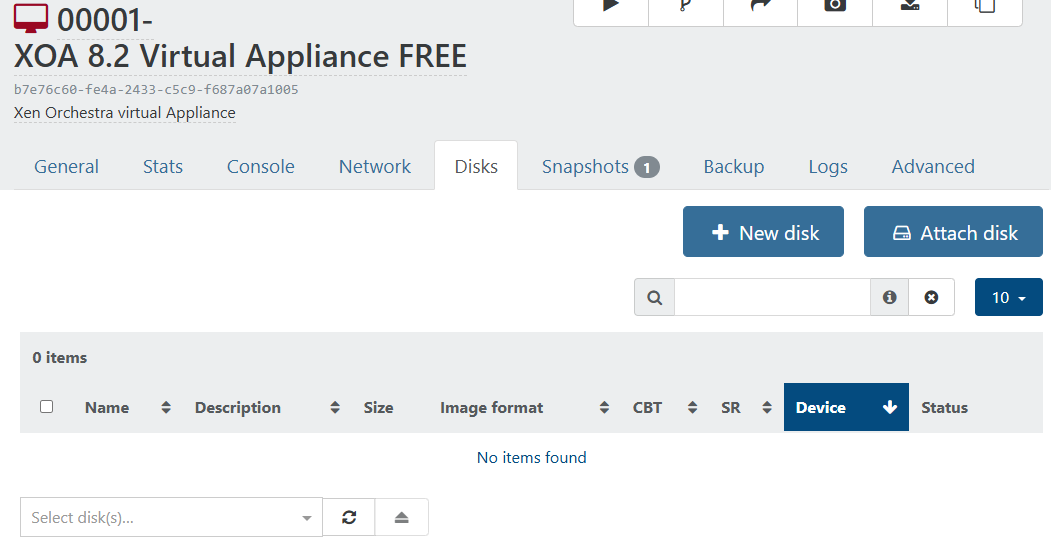

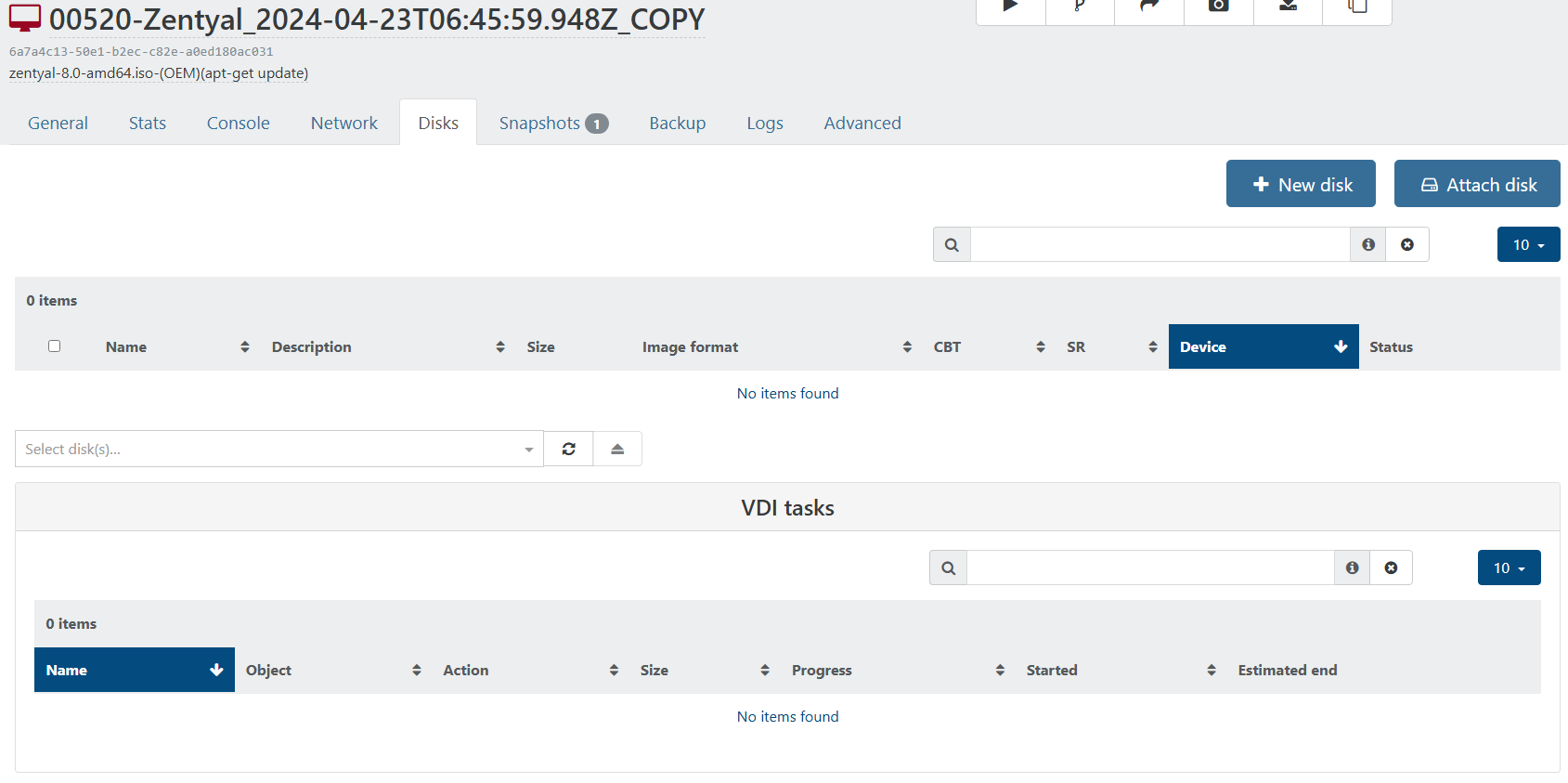

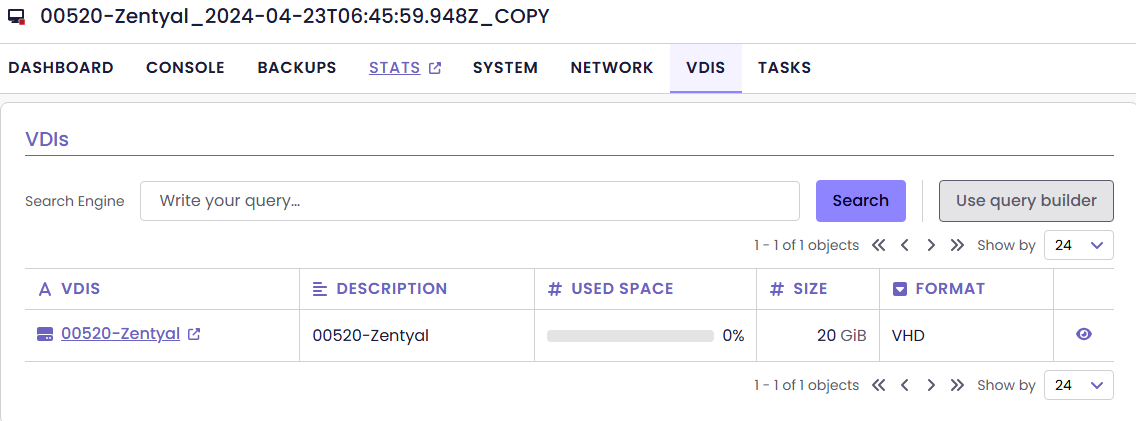

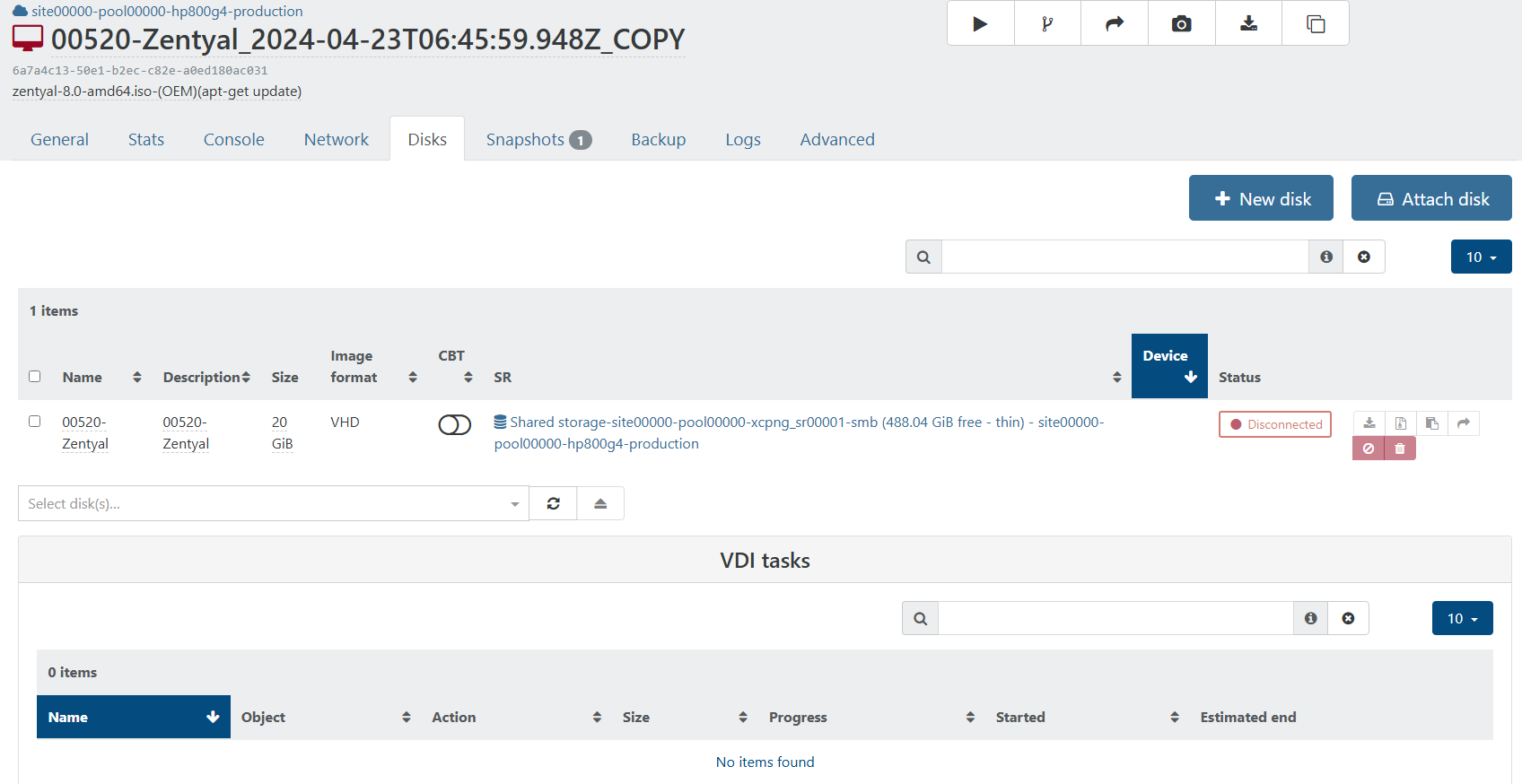

Note: I have a clone of XO from before the update, so both XO commit 0a28a and commit b89c2 are running. That's why I was able to take the screenshots below, so not everything is lost...

From: (before update)

To: (after update)

Is it OK to delete them? It seems like I can't delete them anyway, and I'm afraid of breaking all my VMs.

-

Adding @team-storage to the loop.

-

@wilsonqanda, can you share /var/log/SMlog? Did you install the qcow2 release and use any qcow2 VDIs?
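If the full log is too large to post, a minimal sketch like the following pulls out only the lines that mention one VDI UUID. The function name `smlog_for_vdi` and the `SMLOG` override are hypothetical helpers for illustration; `/var/log/SMlog` is the path requested above, and the `grep` flags are standard.

```shell
#!/bin/sh
# Hypothetical helper: print the SMlog lines that mention a given VDI UUID.
# SMLOG defaults to /var/log/SMlog (the path requested above); override it
# to point at a copy of the log instead of the live file.
smlog_for_vdi() {
  vdi_uuid="$1"
  log_file="${SMLOG:-/var/log/SMlog}"
  if [ -z "$vdi_uuid" ]; then
    echo "usage: smlog_for_vdi <vdi-uuid>" >&2
    return 2
  fi
  # -F: match the UUID as a fixed string; -n: prefix each match with its line number
  grep -nF -- "$vdi_uuid" "$log_file"
}

# Example (run in dom0):
# smlog_for_vdi <vdi-uuid> > smlog-excerpt.txt
```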

-

Really appreciate your help!

This is the SMlog from one of the machines. I cannot rule out qcow2, but I definitely upgraded this host from the early 8.3a and 8.3b releases. If I did use qcow2, I probably installed it and forgot about it... but the problem is definitely affecting every VM on the XO (commit b89c2).

I have also noticed that all the VMs on the XO (commit b89c2), which run Debian 12, have missing disks.

FROM:

Xen Orchestra, commit 0a28a (pre-update: disks are still visible as long as I use the old GUI)

TO:

Xen Orchestra, commit b89c2 (post-update: disks missing)

Surprisingly, the VM still boots and runs even though XO shows no disks, which is extremely confusing...

Reverting snapshots on it fails on both XO commits with the following error, which might be a related issue too; I only just noticed it:

vm.revert { "snapshot": "d0734350-87a4-97f8-97dc-9d9ac5c24b5d" } { "code": "INVALID_VALUE", "params": [ "snapshot_metadata:HVM__boot_policy", "null" ], "task": { "uuid": "058f49ac-82cc-a49b-eaaf-d20e4a319432", "name_label": "Async.VM.revert", "name_description": "", "allowed_operations": [], "current_operations": {}, "created": "20260106T02:19:26Z", "finished": "20260106T02:19:26Z", "status": "failure", "resident_on": "OpaqueRef:ea86afa1-6562-0eac-79fc-75be4ce1cb2a", "progress": 1, "type": "<none/>", "result": "", "error_info": [ "INVALID_VALUE", "snapshot_metadata:HVM__boot_policy", "null" ], "other_config": {}, "subtask_of": "OpaqueRef:NULL", "subtasks": [], "backtrace": "(((process xapi)(filename ocaml/xapi/xapi_vm_snapshot.ml)(line 432))((process xapi)(filename ocaml/xapi/xapi_vm_snapshot.ml)(line 428))((process xapi)(filename ocaml/xapi/xapi_vm_snapshot.ml)(line 471))((process xapi)(filename ocaml/xapi/message_forwarding.ml)(line 2334))((process xapi)(filename ocaml/libs/xapi-stdext/lib/xapi-stdext-pervasives/pervasiveext.ml)(line 24))((process xapi)(filename ocaml/libs/xapi-stdext/lib/xapi-stdext-pervasives/pervasiveext.ml)(line 39))((process xapi)(filename ocaml/libs/xapi-stdext/lib/xapi-stdext-pervasives/pervasiveext.ml)(line 24))((process xapi)(filename ocaml/libs/xapi-stdext/lib/xapi-stdext-pervasives/pervasiveext.ml)(line 39))((process xapi)(filename ocaml/xapi/message_forwarding.ml)(line 2292))((process xapi)(filename ocaml/xapi/rbac.ml)(line 188))((process xapi)(filename ocaml/xapi/rbac.ml)(line 197))((process xapi)(filename ocaml/xapi/server_helpers.ml)(line 77)))" }, "message": "INVALID_VALUE(snapshot_metadata:HVM__boot_policy, null)", "name": "XapiError", "stack": "XapiError: INVALID_VALUE(snapshot_metadata:HVM__boot_policy, null) at Function.wrap (file:///opt/xo/xo-builds/xen-orchestra-202512260040/packages/xen-api/_XapiError.mjs:16:12) at default (file:///opt/xo/xo-builds/xen-orchestra-202512260040/packages/xen-api/_getTaskResult.mjs:13:29) at Xapi._addRecordToCache (file:///opt/xo/xo-builds/xen-orchestra-202512260040/packages/xen-api/index.mjs:1078:24) at file:///opt/xo/xo-builds/xen-orchestra-202512260040/packages/xen-api/index.mjs:1112:14 at Array.forEach (<anonymous>) at Xapi._processEvents (file:///opt/xo/xo-builds/xen-orchestra-202512260040/packages/xen-api/index.mjs:1102:12) at Xapi._watchEvents (file:///opt/xo/xo-builds/xen-orchestra-202512260040/packages/xen-api/index.mjs:1275:14)" }

-

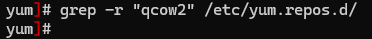

@anthoineb Is there a command I can run on the XCP-ng host to see whether "qcow2" was ever installed? Most likely I either never installed it or installed it and forgot. I did read about it previously, but I don't remember whether I acted on it.

-

@wilsonqanda qcow2 packages are in a separate repository, so you would have had to set up that repo first. Running

grep -r "qcow2" /etc/yum.repos.d/

should tell you whether it was set up on your host.

-

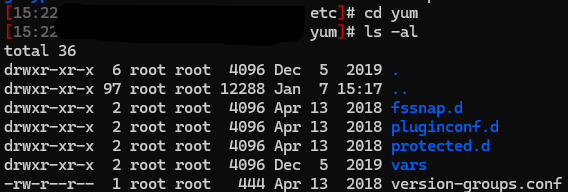

@anthoineb As far as I can tell, it was never installed. I ran the command and nothing came back:

I don't even have a /etc/yum.repos.d directory, but I do have a yum directory.
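The check suggested above can be wrapped so that it distinguishes "no repo file mentions qcow2" from "there is no /etc/yum.repos.d directory at all", which is the case reported here. A minimal sketch, meant to run in dom0 on the XCP-ng host; `has_qcow2_repo` and `qcow2_packages` are hypothetical names, and the `rpm -qa` query is an extra check not taken from the thread.

```shell
#!/bin/sh
# Sketch: check whether a qcow2 repository was ever configured on this host.
# Exit status: 0 = a repo file mentions qcow2, 1 = none does, 2 = no repo directory.
has_qcow2_repo() {
  repo_dir="${1:-/etc/yum.repos.d}"
  if [ ! -d "$repo_dir" ]; then
    echo "no repo directory at $repo_dir" >&2
    return 2
  fi
  # -r: recurse, -i: ignore case, -l: print only the names of matching files
  grep -ril "qcow2" "$repo_dir"
}

# Extra check (not from the thread): list installed RPMs whose names mention qcow2.
qcow2_packages() {
  rpm -qa | grep -i "qcow2"
}
```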

-

I think I am going to try wiping everything and doing a clean install to see what happens. It will take a bit of time, but I will report back...

-

@wilsonqanda perhaps it is the same problem as here?

https://xcp-ng.org/forum/topic/11715/vdi-not-showing-in-xo-5-from-source./9

invisible VDIs on some SRs

They are seen as snapshots and not presented in the XO5 web UI, but you can see them in XO6 or through the API.

-
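To check from dom0 whether a VM's disks fall into this case, here is a minimal sketch that lists each disk VDI attached to a VM together with its `is-a-snapshot` flag. That XO5 hides the disks because of this flag is an assumption based on the description above, not something confirmed in this thread. `check_vm_disks` and the `XE` override (used only to substitute a stub when testing) are hypothetical.

```shell
#!/bin/sh
# Sketch: for one VM, print each attached disk VDI and its is-a-snapshot flag.
# A disk VDI reported as "true" would match the symptom described above (assumption).
# XE can be overridden (for example with a stub) for testing; it defaults to xe.
check_vm_disks() {
  vm_uuid="$1"
  xe_cmd="${XE:-xe}"
  # type=Disk skips CD drives; --minimal prints the field as a comma-separated list
  vdis=$("$xe_cmd" vbd-list vm-uuid="$vm_uuid" type=Disk params=vdi-uuid --minimal)
  # Turn the comma-separated UUIDs into words and check each VDI in turn
  for vdi in $(printf '%s\n' "$vdis" | tr ',' ' '); do
    flag=$("$xe_cmd" vdi-param-get uuid="$vdi" param-name=is-a-snapshot)
    echo "$vdi is-a-snapshot=$flag"
  done
}

# Example (run in dom0):
# check_vm_disks <vm-uuid>
```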

@Pilow OK, I can confirm this is very likely the same issue: the disks disappear, but the VMs still boot up and run fine lol

No disks on XO v5

Disks show up on v6

Fixed it by REVERTING the SNAPSHOT....

It seems others were able to fix it by migrating the VM out and back in, which seems ridiculously time-consuming lol. I might try that for the VMs that have no snapshot, since they're a lost cause anyway...

-

So far, a few workarounds have worked for me:

- Revert the SNAPSHOT and it magically finds the disk...

- Create a new SNAPSHOT and it magically finds the disk... (especially if, like me, you don't have a snapshot on some VMs)

- Sometimes the SNAPSHOT works but the disk is still missing, so boot the VM up and the disk shows up

- Some claim migrating the VM works... (migration fails for me because it can't find the disk lol...)

There should be better solutions, since sometimes I want to keep old snapshots. I'm still testing other methods... my old XO still finds everything, even though it's loaded with the same CONFIG FILES and the same XCP-ng machine metadata.
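The snapshot and revert workarounds above are done through XO; the same XAPI operations can also be triggered from dom0 with the `xe` CLI. A minimal sketch, assuming you already have the VM's UUID. `snapshot_vm`, `revert_to_snapshot`, the default label and the `XE` override are hypothetical, and whether running these from the CLI clears the missing-disk display in XO the same way has not been tested in this thread.

```shell
#!/bin/sh
# Sketch: drive the snapshot/revert workarounds from dom0 with the xe CLI.
# XE can be overridden (for example with a stub) for testing; it defaults to xe.

# Take a new snapshot of a VM; xe prints the new snapshot's UUID.
snapshot_vm() {
  vm_uuid="$1"
  label="${2:-disk-visibility-check}"   # hypothetical default label
  "${XE:-xe}" vm-snapshot uuid="$vm_uuid" new-name-label="$label"
}

# Revert a VM to a snapshot. WARNING: this discards every change made
# to the VM since the snapshot was taken.
revert_to_snapshot() {
  "${XE:-xe}" snapshot-revert snapshot-uuid="$1"
}

# Usage (run in dom0):
# snap=$(snapshot_vm <vm-uuid>)
# revert_to_snapshot "$snap"
```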

-

It will be a nightmare if I have to do this for all 286 VMs...

Slowly verifying whether the count is going down... luckily most of them are related, so it's not too bad.

-

@wilsonqanda taking a snapshot and deleting it does not resolve the issue for me.

I'll try taking a snapshot and reverting to it, and will tell you whether that works for me.

-

@Pilow Does the disk show up after you take the snapshot? If the snapshot succeeds, there is a good chance the VM can boot. So try booting it up (and check for the disk), then shut it down and take the snapshot at that point if the disk shows up.

It also helps to boot it from the old v5 commit and check from that side too, if you kept a copy from before the update.

Yep, I can confirm that the snapshot doesn't always work. But using the older v5 commit that worked before the update does get the disk showing up on the newer v5 commit if I go through the whole snapshot, revert, etc. process; one of those cases usually works.
