Delta Backup Changes in 5.66 ?

-

@danp We were not able to reproduce the issue on our side. What's the configuration of your backup job?

-

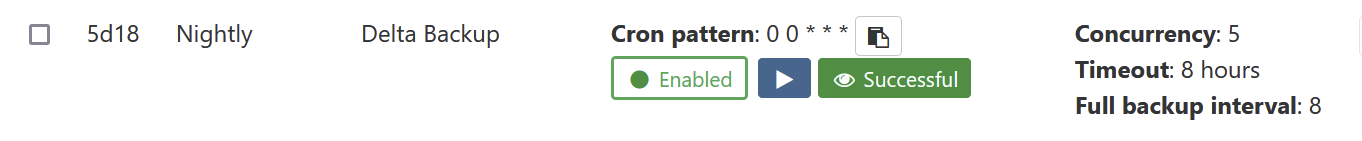

@julien-f It's the same delta backup job I've been using forever. It uses smart mode to back up all running VMs, runs daily at midnight, and has backup retention set to 12.

-

I'm unsure whether this is related, but I've found some errors in the logs that I don't believe occurred before the most recent updates:

    Dec 28 00:19:10 xo-server[257773]: 2021-12-28T06:19:10.418Z xo:main WARN WebSocket send: {
    Dec 28 00:19:10 xo-server[257773]: error: Error: Cannot call write after a stream was destroyed
    Dec 28 00:19:10 xo-server[257773]: at new NodeError (node:internal/errors:371:5)
    Dec 28 00:19:10 xo-server[257773]: at errorBuffer (node:internal/streams/writable:517:14)
    Dec 28 00:19:10 xo-server[257773]: at afterWrite (node:internal/streams/writable:501:5)
    Dec 28 00:19:10 xo-server[257773]: at onwrite (node:internal/streams/writable:477:7)
    Dec 28 00:19:10 xo-server[257773]: at WriteWrap.onWriteComplete [as oncomplete] (node:internal/stream_base_commons:91:12)
    Dec 28 00:19:10 xo-server[257773]: at WriteWrap.callbackTrampoline (node:internal/async_hooks:130:17) {
    Dec 28 00:19:10 xo-server[257773]: code: 'ERR_STREAM_DESTROYED'
    Dec 28 00:19:10 xo-server[257773]: }
    Dec 28 00:19:10 xo-server[257773]: }

Also this:

    Dec 28 00:00:13 xo-server[360334]: 2021-12-28T06:00:13.194Z xo:xapi:vm WARN HANDLE_INVALID(VDI, OpaqueRef:83be31a3-81d7-4be3-9714-0ad1fa034d20) {
    Dec 28 00:00:13 xo-server[360334]: error: XapiError: HANDLE_INVALID(VDI, OpaqueRef:83be31a3-81d7-4be3-9714-0ad1fa034d20)
    Dec 28 00:00:13 xo-server[360334]: at Function.wrap (/opt/xen-orchestra/packages/xen-api/dist/_XapiError.js:26:12)
    Dec 28 00:00:13 xo-server[360334]: at /opt/xen-orchestra/packages/xen-api/dist/transports/json-rpc.js:58:30
    Dec 28 00:00:13 xo-server[360334]: at runMicrotasks (<anonymous>)
    Dec 28 00:00:13 xo-server[360334]: at processTicksAndRejections (node:internal/process/task_queues:96:5) {
    Dec 28 00:00:13 xo-server[360334]: code: 'HANDLE_INVALID',
    Dec 28 00:00:13 xo-server[360334]: params: [ 'VDI', 'OpaqueRef:83be31a3-81d7-4be3-9714-0ad1fa034d20' ],
    Dec 28 00:00:13 xo-server[360334]: call: { method: 'VDI.get_record', params: [Array] },
    Dec 28 00:00:13 xo-server[360334]: url: undefined,
    Dec 28 00:00:13 xo-server[360334]: task: undefined
    Dec 28 00:00:13 xo-server[360334]: }
    Dec 28 00:00:13 xo-server[360334]: }
    Dec 28 00:00:13 xo-server[360334]: 2021-12-28T06:00:13.393Z xo:xapi:vm WARN HANDLE_INVALID(VDI, OpaqueRef:83be31a3-81d7-4be3-9714-0ad1fa034d20) {
    Dec 28 00:00:13 xo-server[360334]: error: XapiError: HANDLE_INVALID(VDI, OpaqueRef:83be31a3-81d7-4be3-9714-0ad1fa034d20)
    Dec 28 00:00:13 xo-server[360334]: at Function.wrap (/opt/xen-orchestra/packages/xen-api/dist/_XapiError.js:26:12)
    Dec 28 00:00:13 xo-server[360334]: at /opt/xen-orchestra/packages/xen-api/dist/transports/json-rpc.js:58:30
    Dec 28 00:00:13 xo-server[360334]: at runMicrotasks (<anonymous>)
    Dec 28 00:00:13 xo-server[360334]: at processTicksAndRejections (node:internal/process/task_queues:96:5) {
    Dec 28 00:00:13 xo-server[360334]: code: 'HANDLE_INVALID',
    Dec 28 00:00:13 xo-server[360334]: params: [ 'VDI', 'OpaqueRef:83be31a3-81d7-4be3-9714-0ad1fa034d20' ],
    Dec 28 00:00:13 xo-server[360334]: call: { method: 'VDI.get_record', params: [Array] },
    Dec 28 00:00:13 xo-server[360334]: url: undefined,
    Dec 28 00:00:13 xo-server[360334]: task: undefined
    Dec 28 00:00:13 xo-server[360334]: }
    Dec 28 00:00:13 xo-server[360334]: }

-

@danp I don't think these are new or related to this issue.

-

@danp Which type of remote are you using for this job? SMB? NFS?

-

@julien-f NFS

-

@julien-f said in Delta Backup Changes in 5.66 ?:

We discussed this issue in a support ticket but for everyone else: it was a bug and a fix was shipped in XO 5.66.1 yesterday

We're on 5.66.1, and incremental backups still produce full backups. This is now eating up quite a lot of storage space.

-

@s-pam we haven't been able to reproduce it on our side so far. Also, most of the XO team (myself included) is on holiday until next week, so I'm not sure we'll be able to fix it before then.

If you are using an official XO appliance, you can roll back to the `stable` channel; if you are using XO from the sources, you can go back to a previous commit.

-

Yeah, I have a support ticket open for another issue, and this popped up in the middle of it. In that case, I'll wait until next week before downgrading so you have a chance to look at it through the support tunnel. In the meantime, I've changed the backup schedule from hourly to daily to save some space on the remote.

-

Not wanting to be a "me too", but I'm seeing exactly the same thing.

Some VMs (unfortunately, in my case, large ones) are doing daily fulls rather than deltas.

The backup is taking 14 hours instead of 2 or 3.

Similar setup to @S-Pam, with NFS as the remote target.

-

We are making progress in the investigation. @florent will keep you posted!

-

@pnunn @S-Pam If you use the sources, can you try this branch: `feat-add-debug-backup`? It's master with additional debug output and a potential fix ("potential" because I can't reproduce the issue reliably in our lab).

Then you can enable more debug output by adding this to your config file (it should be in `~/.config/xo-server/config.toml`):

    [logs]
    # Display all logs matching this filter, regardless of their level
    filter = 'xo:backups'

    # Display all logs with level >=, regardless of their namespace
    level = 'debug'

-

@florent unfortunately not. We use XOA.

-

@s-pam it's a great choice!

If you can launch a backup, I can connect through a support tunnel and deploy the fix. Then you can launch the backup, and we can celebrate the success of this fix.

-

Do you mean a second XOA? I already have a support ticket and tunnel open on it. It was originally opened for failed incremental backups, but now it also covers this issue with full backups being made when they shouldn't be.

-

@s-pam this issue caused quite a few tickets. Which one is yours? I can connect right away.

-

It's ticket #774300.

-

@s-pam I answered on the ticket and deployed the fix.

-

We seem to be experiencing the same issues. We are on commit d3071. It also seems that XOA mislabels the backups.

Even though we forced a full backup through the schedule option, it is labeled as a delta in the restore menu.

The XOA backup log in the interface also shows full instead of delta, and the amount of data transferred is clearly that of a full backup.

-

I'm running XO as well, not from sources, but today everything worked as it should??

Let's see what happens tomorrow.