XOCE limit ?

-

I can't exceed the limit of 1Gb on XOCE, as far as I can tell.

The HDD on the NAS is mounted at the XenServer Linux level, and copying to it from the XenServer terminal goes up to 10Gb.

-

@olivierlambert

I tried that (http) and I can't exceed the limit.

-

Again, there's no artificial limit

It's the max speed the source host can export OR the destination host can import (or maybe something in the middle; remember it's flowing through XO). Double check that your XO VM has enough memory and CPUs.

-

@olivierlambert

ok, thank you

-

@Gheppy Did you check to make sure all ports are configured correctly (speed, full-duplex, etc.)? Any clues from running ifconfig or netstat? Also check the TCP parameters on the hosts, as they can influence traffic quite a bit, and if you use NFS, the NFS mount parameters such as rsize and wsize. 10 GbE interfaces often require tuning; you can google for a number of articles on improving 10 GbE network traffic under Linux.
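As a rough illustration of the port check suggested above, here is a minimal Python sketch that reads the negotiated speed, duplex mode and MTU of an interface from Linux sysfs. The interface name `eth0` and the helper name `link_settings` are only placeholders for illustration, and this assumes a Linux host that exposes `/sys/class/net`.

```python
from pathlib import Path


def link_settings(iface, sysfs_root="/sys/class/net"):
    """Return speed (Mb/s), duplex and MTU for one interface as strings.

    A value is None when the file is missing or unreadable, which can
    happen for example when the link is down.
    """
    base = Path(sysfs_root) / iface
    settings = {}
    for name in ("speed", "duplex", "mtu"):
        try:
            settings[name] = (base / name).read_text().strip()
        except OSError:
            settings[name] = None
    return settings


if __name__ == "__main__":
    # "eth0" is a hypothetical interface name; replace it with your 10 GbE port.
    print(link_settings("eth0"))
```

On a correctly negotiated 10 GbE port one would expect a speed of 10000 and full duplex; anything lower points at cabling, switch or driver settings rather than at XO.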

-

I can see the same performance limits.

My example: Full-Backup 100 GB VM to SMB share. Everything is connected with 10 GbE.

XOCE / XOA (tested both):

- zstd compression: 14 min

- no compression: 14 min

3rd-party-backup-software on same host, same vm, etc.

- with compression and 1 thread: 17 min

- with compression and 8 threads: 4:40 min

Although my host is relatively fast, I am rarely getting more than 120 MB/s with XOA/XOCE per thread. The 3rd-party software seems to have the same limitation but offers the possibility to work multi-threaded even if only one VM is backed up.

--> In real life, this is hopefully mostly not important, as there can be concurrent backups.
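For reference, the timings above can be converted into average throughput. This is only a back-of-the-envelope sketch: it assumes 1 GB = 1000 MB and that the full 100 GB is actually transferred in each run, which may not hold when compression is enabled.

```python
def throughput_mb_s(size_gb, minutes, seconds=0):
    """Average throughput in MB/s, assuming 1 GB = 1000 MB."""
    elapsed = minutes * 60 + seconds
    return size_gb * 1000 / elapsed


# Timings quoted in the post, all for the same 100 GB VM.
runs = {
    "XOCE/XOA (zstd or no compression)": (14, 0),
    "3rd-party, 1 thread": (17, 0),
    "3rd-party, 8 threads": (4, 40),
}

for label, (minutes, seconds) in runs.items():
    print(f"{label}: {throughput_mb_s(100, minutes, seconds):.0f} MB/s")
```

This gives roughly 119 MB/s for the XO runs, 98 MB/s for the single-threaded third-party run and 357 MB/s with 8 threads, which is consistent with the "about 120 MB/s per thread" observation.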

-

See my answer on your thread.

-

@tjkreidl

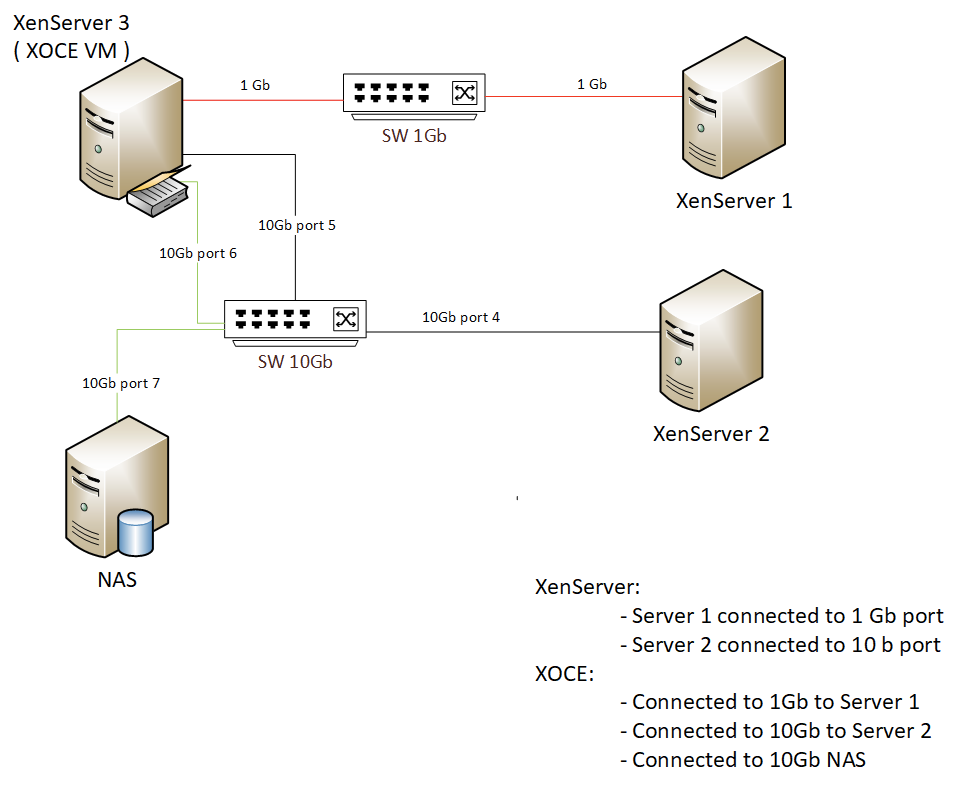

On XenServer 3, which hosts XOCE, I have a block-level connection over iSCSI, so XOCE sees this iSCSI target as a local disk.

If I copy at the Linux level (XenServer terminal) from the local disk (local RAID) to iSCSI, I get more than 450Mb, but if I move a VM disk through XOCE from the local disk (local RAID) to iSCSI, I can't get more than 33Mb.

I don't think it's the connection configuration, because only the "copy" through XOCE is speed limited. As I said, at the Linux level I get values up to 450Mb and a constant 350Mb.

-

As I explained multiple times, VM storage migration or export is totally unrelated to line speed. Also, moving a disk doesn't involve XO; XO is just sending the order.

-

@Gheppy For a direct storage to VM connection, yes, it's faster because you bypass a lot of the Xen overhead, but VM reads and writes vs. backups are different beasts, as @olivierlambert said. I used to get around 300 Mb/sec for a direct VM iSCSI connection on XenServer, but no more than 200 or even a bit less via the standard SR mechanism.

-

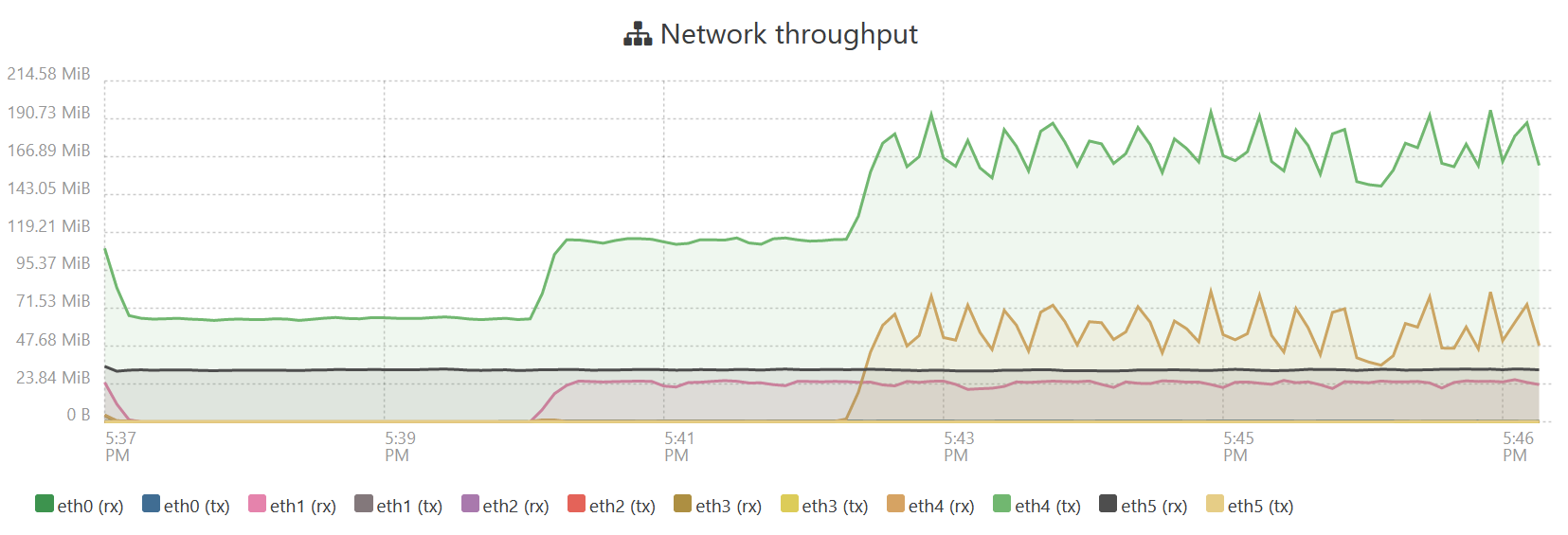

As info.

After several tests I have the following conclusions:

- with an SSL connection between the server and XOCE, the transfer does not exceed a maximum of 33Mb/s

- without an SSL connection (http://) between the server and XOCE, the maximum speed reached in testing is 290Mb/s

The limit of maximum 1000mbs seems to come from the encrypted connection; it probably cannot encrypt fast enough to fill a 10Gbs link.
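To put the two measurements in perspective, here is a small sketch that computes the plain-vs-SSL ratio and how much of a 10 Gb/s link each figure would use. It assumes the 33 and 290 figures are megabytes per second, which the post does not state explicitly; if they are megabits, the link shares are eight times smaller.

```python
LINE_RATE_GBIT = 10  # 10 GbE link, as described in the thread


def share_of_line_rate(mbytes_per_s, line_rate_gbit=LINE_RATE_GBIT):
    """Fraction of the link used, ASSUMING the figure is in megabytes/s."""
    return mbytes_per_s * 8 / (line_rate_gbit * 1000)


ssl_speed = 33     # with SSL (https), from the test above
plain_speed = 290  # without SSL (http://), from the test above

print(f"plain vs SSL: {plain_speed / ssl_speed:.1f}x faster")
print(f"SSL uses {share_of_line_rate(ssl_speed):.1%} of the link")
print(f"plain uses {share_of_line_rate(plain_speed):.1%} of the link")
```

Under that assumption the plain transfer is about 8.8 times faster, yet even it uses less than a quarter of the link, so the network itself is unlikely to be the limiting factor in either case.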

-

Are you sure you only compare SSL vs no SSL or also no SSL vs NBD?

-

@Gheppy If you go over a VLAN and/or a private, non-routed network, why even introduce the overhead of SSL unless you are super paranoid about security?

-

@olivierlambert

The only configuration change I made for the final test was to put http:// in front of the IP used to connect to the XCP-ng servers, and the transfer is the one shown above.

-

Okay, so you should try enabling NBD and benchmark the difference (secure and insecure). It would be interesting to get a comparison on your side.

See https://xen-orchestra.com/blog/xen-orchestra-5-76/#-faster-backups-preview for more details

-

Also, what is your CPU brand/model? Also, how many vCPU do you have in your XO VM?

-

@olivierlambert

I'll read to see how it's done and I'll start testing with NBD.

This server has the following configuration; it is only used for backup:

LENOVO System x3650 M5

- 64Gb Ram

- 24x CPU, Xeon CPU E5-2620 v3 @ 2.40GHz

- 2 x 10Gb LAN, 4 x 1Gb LAN

XOCE

- 16x CPU,

- 12Gb RAM

- 3 x LAN: 1 x 1Gb, 2 x 10Gb

-

Okay, so a rather old CPU that is relatively inefficient (compared to modern EPYCs), which explains the huge gap between SSL and plain.

This is something we can investigate on our side, but if NBD provides a good boost even in SSL, I'm very interested.

-

@Gheppy Run "top" as well as "iostat" during your backup to see if any saturation is taking place -- CPU or memory on dom0, queue and I/O throughput on the storage. I agree with @olivierlambert that a 2.4 GHz CPU is marginal in this day and age.
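If you prefer a number you can log over the duration of a backup, the following is a minimal sketch of the same idea as watching `top`: it samples the aggregate `cpu` line of `/proc/stat` twice and reports the busy fraction in between. It is Linux only, the function names are made up for illustration, and it is not a replacement for `top` or `iostat`.

```python
import time


def parse_cpu_line(line):
    """Return (idle_ticks, total_ticks) from the aggregate 'cpu' line."""
    fields = line.split()
    if not fields or fields[0] != "cpu":
        raise ValueError("expected the aggregate 'cpu' line of /proc/stat")
    # Columns: user nice system idle iowait irq softirq steal.
    # Guest time is already included in user/nice, so it is not added again.
    ticks = [int(value) for value in fields[1:9]]
    idle = ticks[3] + (ticks[4] if len(ticks) > 4 else 0)  # idle + iowait
    return idle, sum(ticks)


def busy_fraction(before, after):
    """CPU busy fraction (0.0 to 1.0) between two 'cpu' line samples."""
    idle0, total0 = parse_cpu_line(before)
    idle1, total1 = parse_cpu_line(after)
    elapsed = total1 - total0
    if elapsed <= 0:
        return 0.0
    return 1.0 - (idle1 - idle0) / elapsed


def sample_busy_fraction(interval=1.0, path="/proc/stat"):
    """Read /proc/stat twice, `interval` seconds apart, and compare."""
    with open(path) as f:
        before = f.readline()
    time.sleep(interval)
    with open(path) as f:
        after = f.readline()
    return busy_fraction(before, after)
```

Calling `sample_busy_fraction(5)` inside the XO VM or on dom0 while the backup runs gives the average over all cores. Since the bottleneck discussed here appears to be per thread, a single saturated core can hide behind a low overall average, so the per-core `cpu0`, `cpu1`, ... lines are worth checking as well.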

-

Yes, but I'd like to be more precise: it's not an "excuse" or asking you to purchase better hardware. Just a fact: there's a bottleneck in SSL decode when doing disk export/import in XO. The gap is wider on less efficient CPUs, but also (a bit less) visible on modern ones.

I'd like to see if we can work around this by using NBD in SSL, since in the future nothing will be left in plain; everything will be full SSL.