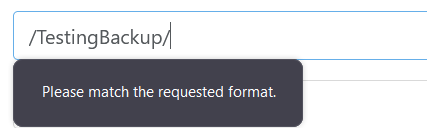

S3 Backup "Please Match The Requested Format"

-

@planedrop TBH I didn't monitor for the full 24hrs - but the transfer speeds were just too slow. The same happens with CR between hosts - way too slow to be usable.

Backup performance on xcp/xo is a major problem - currently battling with this at another site where we just installed a truenas server with dual 25G direct connections between the xcp host and the truenas server (with NBD), and we see < 100MiB/s transfers (nfs share on truenas scale latest) - this time the xcp host is a dual EPYC 7543 with all nvme and tons of ram.

This thread did help a bit - top on the host was showing stunnel using a lot of cpu - switching the connection from xo to the server to be http removed stunnel from the equation but it's still too slow - and I did read somewhere that in the next xcp/xen version http is going away and everything will be https only.

I'm currently researching backups on proxmox to see if they have the same sort of performance issues. I really like xcp and xo but when we end up with backups taking so long that they overlap with the next backup schedule that is not usable.

-

@vincentp I do agree backups being as slow as they are, especially for S3, is a huge issue.

I don't personally find backups over SMB (10GbE in this case) too slow to be usable by any means, many TB of VMs can be backed up in a single night without issues, but remote stuff is very slow.

I'm curious to hear about your CR experience though, as that is something I'm planning to deploy here soon. Were the hosts on the same subnet with fast connections, or was it a remote host with something like IPSec handling the connectivity?

-

@planedrop I haven't used smb for backups but will test that - currently testing with nfs which I imagine would be faster than smb though.

I have 3 sites, each with a separate XO instance managing them

LA DC

- 2 xcp hosts (dual E5-2620v4)

- no shared storage

- 10G direct connection between hosts

I tried CR between the hosts, and it works well once you get past the full backup - but those take 24hrs (800GB vms).

S3 Backup - same experience - backups seem to work well once you get past the full backup - but that sometimes takes longer than 24hrs and runs into the next backup schedule. XO has access to the 10G interface and I confirmed it's using that.

Sydney DC

- single xcp host (dual EPYC)

- truenas scale (installed yesterday) - dual 25G direct connections to the xcp

Testing full backups to nfs on truenas over the 25G network (NBD enabled) with the shared option to use multiple files enabled. XO is also connecting via http to avoid stunnel, which was using a huge amount of cpu when I first tried it. XO has access to a 25G interface, and I confirmed via netdata that the correct interface is being used for the backups.

Small vm test.

Duration: 9 minutes

Size: 41.48 GiB

Speed: 81.03 MiB/s

More testing required but it's frustrating that we cannot get better speeds than this - could have saved some money and just used 1G cards in the machines!

HomeLab

- single xcp host

- truenas server to be commissioned this week

just doing disaster recovery backups to 2nd local storage on the machine for now (and just as slow) - will use the truenas once I get it in the rack this week.

The bottleneck with the backups is definitely not the network, even on the homelab machine that backs up to local storage I'm only seeing around 60MiB/s
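To put the reported speeds against the link capacity, here is a rough conversion sketch. The speed and link figures are the ones quoted in this post; the helper name `link_utilisation` is made up for illustration, and protocol overhead is ignored, so treat the result as an estimate only.

```python
MIB = 1024 ** 2  # bytes per MiB


def link_utilisation(speed_mib_s: float, link_gbit_s: float) -> float:
    """Fraction of a link's nominal capacity used by a transfer.

    speed_mib_s: observed throughput in MiB/s (as reported by XO)
    link_gbit_s: nominal link speed in Gbit/s (decimal, 1e9 bits per Gbit)
    Protocol overhead is ignored, so this is only a rough estimate.
    """
    if link_gbit_s <= 0:
        raise ValueError("link speed must be positive")
    bits_per_second = speed_mib_s * MIB * 8
    return bits_per_second / (link_gbit_s * 1e9)


if __name__ == "__main__":
    # Figures taken from the Sydney small-VM test above.
    for label, speed, link in [
        ("NFS over the 25G link", 81.03, 25),
        ("same transfer on a 1G card", 81.03, 1),
    ]:
        print(f"{label}: {link_utilisation(speed, link):.1%} of {link}G")
```

With these numbers the transfer uses under 3% of a 25G link and would still fit on a 1G card, which is consistent with the network not being the bottleneck.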

-

@vincentp If you back up multiple VMs in parallel, does the total speed stay at 80MB/s or does it scale with the number of VMs?

NBD also uses encryption by default. You can use it unencrypted by removing the NBD purpose on the network and adding insecure_nbd https://docs.citrix.com/en-us/citrix-hypervisor/developer/changed-block-tracking-guide/enabling-nbd.html#enabling-an-insecure-nbd-connection-for-a-network-notls-mode
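For reference, a minimal sketch of the two steps described above, written as a Python helper that only builds and prints the `xe` invocations instead of running them. The command syntax follows my reading of the linked guide and should be checked against it; the helper name `insecure_nbd_commands` and the `<network-uuid>` placeholder are illustrative, not part of XO or XCP-ng.

```python
def insecure_nbd_commands(network_uuid: str) -> list[list[str]]:
    """Build the xe invocations that swap the 'nbd' purpose for 'insecure_nbd'.

    Assumption: syntax as described in the linked NBD guide. Nothing is
    executed here; the commands are returned as argv lists for review.
    """
    return [
        # Step 1: remove the (TLS) NBD purpose from the network.
        ["xe", "network-param-remove", "param-name=purpose",
         "param-key=nbd", f"uuid={network_uuid}"],
        # Step 2: add the insecure (NoTLS) NBD purpose to the same network.
        ["xe", "network-param-add", "param-name=purpose",
         "param-key=insecure_nbd", f"uuid={network_uuid}"],
    ]


if __name__ == "__main__":
    # Replace the placeholder with the UUID of the backup network.
    for argv in insecure_nbd_commands("<network-uuid>"):
        print(" ".join(argv))
```

Printing rather than executing keeps the change reviewable before it is applied on the host.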

-

80MiB/s between LA and Sydney is already pretty impressive, knowing the latency between those. When you write a lot of small blocks, each block has to wait for a round trip before being ACKed. This takes a lot of time.

The higher the latency, the longer the backup, unless we choose bigger blocks, which isn't trivial.

When using NBD, it should be a lot better however since we can have more blocks worked in parallel. I achieved a huge bump with more blocks at the same time.
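As a rough illustration of the latency argument, the upper bound on throughput when every block waits for an acknowledgement is approximately blocks in flight × block size ÷ round-trip time. The sketch below uses that simplified model; the block size, RTT values and parallelism are hypothetical numbers chosen for the example, not measurements from XO.

```python
def max_throughput_mib_s(block_size_kib: float, rtt_ms: float,
                         in_flight: int = 1) -> float:
    """Upper bound on throughput (MiB/s) when each block must be
    acknowledged before its slot is reused.

    Simplified model: in_flight * block_size / RTT. It ignores bandwidth
    limits, disk speed and CPU cost, so real results will be lower.
    """
    if rtt_ms <= 0 or in_flight < 1:
        raise ValueError("rtt_ms must be > 0 and in_flight >= 1")
    bytes_per_second = in_flight * block_size_kib * 1024 / (rtt_ms / 1000)
    return bytes_per_second / 1024 ** 2


if __name__ == "__main__":
    # Hypothetical values: 64 KiB blocks, a long-distance RTT of 150 ms
    # and a local RTT of 0.2 ms, with 1 or 16 blocks in flight.
    for rtt_ms in (150, 0.2):
        for in_flight in (1, 16):
            bound = max_throughput_mib_s(64, rtt_ms, in_flight)
            print(f"RTT {rtt_ms} ms, {in_flight} in flight: {bound:.2f} MiB/s")
```

In this model the bound scales linearly with the number of blocks in flight, which is why working on more blocks in parallel helps most when latency is high.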

Also, you can try XO from the sources on a physical machine to check the difference (vs XO in a VM).

There are many, many ways to get faster. What's important is to measure the boost from each modification, because this might help to identify bottlenecks.

-

@olivierlambert said in S3 Backup "Please Match The Requested Format":

80MiB/s between LA and Sydney is already pretty impressive

No, that's local to Sydney - no backups occurring between sites.

-

Do you have NBD enabled on the network used by XO to backup?

-

@olivierlambert said in S3 Backup "Please Match The Requested Format":

Do you have NBD enabled on the network used by XO to backup?

yes.

I will try the insecure nbd - ok without encryption since it's a direct connection between the machines

-

@florent said in S3 Backup "Please Match The Requested Format":

@vincentp If you back up multiple VMs in parallel, does the total speed stay at 80MB/s or does it scale with the number of VMs?

Yes it does.

NBD also uses encryption by default. You can use it unencrypted by removing the NBD purpose on the network and adding insecure_nbd https://docs.citrix.com/en-us/citrix-hypervisor/developer/changed-block-tracking-guide/enabling-nbd.html#enabling-an-insecure-nbd-connection-for-a-network-notls-mode

I ran 2 full backups together - netdata and truenas both showed 1.45Gb/s so that's definitely an improvement - although it's using a lot of cpu

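| PID | USER | PR | NI | VIRT | RES | SHR | S | %CPU | %MEM | TIME+ | COMMAND |
|---|---|---|---|---|---|---|---|---|---|---|---|
| 23882 | root | 20 | 0 | 1022156 | 101484 | 20560 | S | 105.0 | 1.3 | 18:08.94 | xapi |
| 25349 | root | 20 | 0 | 31884 | 3872 | 2660 | R | 66.0 | 0.0 | 0:25.54 | tapdisk |
| 10163 | root | 20 | 0 | 32696 | 4896 | 2900 | R | 28.7 | 0.1 | 2:25.91 | tapdisk |

VM1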

Duration: 10 minutes

Size: 41.51 GiB

Speed: 67.6 MiB/s

VM2

Duration: 10 minutes

Size: 23.25 GiB

Speed: 39.13 MiB/s

Some other backups are running at the moment, when they are done I will measure VM1 by itself to compare to the earlier one I posted.
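A quick way to compare the per-VM speeds with the interface reading is to add them up and convert units, as in the sketch below. It assumes the 1.45Gb/s figure means decimal gigabits per second; the function names are illustrative.

```python
MIB = 1024 ** 2  # bytes per MiB


def gbit_to_mib_s(gbit_s: float) -> float:
    """Convert Gbit/s (decimal, assumed) to MiB/s."""
    return gbit_s * 1e9 / 8 / MIB


def aggregate_mib_s(per_vm_speeds: dict[str, float]) -> float:
    """Sum the per-VM speeds reported by XO for jobs that ran in parallel."""
    return sum(per_vm_speeds.values())


if __name__ == "__main__":
    # Per-VM speeds from the two parallel full backups above.
    per_vm = {"VM1": 67.6, "VM2": 39.13}
    total = aggregate_mib_s(per_vm)
    nic = gbit_to_mib_s(1.45)  # interface reading from netdata/truenas
    print(f"sum of the two VM backups: {total:.2f} MiB/s")
    print(f"interface reading:         {nic:.2f} MiB/s")
    print(f"not accounted for:         {nic - total:.2f} MiB/s")
```

The two jobs together are faster than either single-VM run, and the remainder of the interface reading may simply be the other backups that were running at the same time.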

-

@florent said in S3 Backup "Please Match The Requested Format":

NBD also uses encryption by default. You can use it unencrypted by removing the NBD purpose on the network and adding insecure_nbd https://docs.citrix.com/en-us/citrix-hypervisor/developer/changed-block-tracking-guide/enabling-nbd.html#enabling-an-insecure-nbd-connection-for-a-network-notls-mode

Duration: 9 minutes

Size: 41.52 GiB

Speed: 75.26 MiB/s

So it didn't really make any meaningful difference (was 80MiB/s previously).

-

@vincentp

If you are on master, can you apply the setting from here: https://xcp-ng.org/forum/topic/7209/slow-backups-updated-xo-source-issue/8 ?

-

@florent I already had that setting applied, at least I think so

Is this the correct config file? /opt/xo/xo-server/config.toml

-

Assuming I have the setting in the correct place, I'm not seeing any significant difference whether the setting is there or not.

without setting

Duration: 10 minutes

Size: 41.71 GiB

Speed: 72.5 MiB/s

with setting

Duration: 10 minutes

Size: 41.71 GiB

Speed: 72.87 MiB/s

CPU usage appears to be about the same.