I want to compare insecure_nbd, but it doesn't seem to work (secure NBD does)

-

@sluflyer06 Can anyone tell me what I need in my config to enable insecure NBD on the XO side? I have already set the purpose on the host.

-

Hi,

- NBD being a little slower than non-NBD is already weird.

- I'll leave this thread to @florent when he's around.


-

@olivierlambert Here you can see a side-by-side comparison of the speeds. Both boxes are Dell R730XDs with frequency-focused CPUs. The NAS runs TrueNAS on dual 8c/16t Broadwells in the upper 3 GHz range with 64 GB RAM, on a 10 Gb network through a Brocade switch, and is mounted over NFS with async. The Xen box has 128 GB RAM and dual E5-2697As (64 threads); its boot drive is a Seagate Nytro SAS SSD, and the VMs are on a U.2 Samsung 1725b 3.2 TB NVMe drive, if any of that matters at all.

-

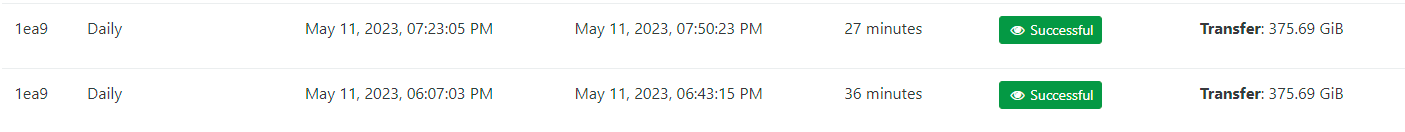

I created a fresh Debian 11.7 VM, installed XO from sources following the official docs, and made no other changes. I then re-ran NBD (secure) vs. a standard backup to NFS, just to get another data point, and I still see a significant performance advantage without NBD. I don't think that is supposed to be the case?

-

Are you using a block-based backup repository (not single VHD files but 2 MiB blocks)? Maybe the bottleneck is on your NFS side: in our various tests, the usual bottleneck is the XCP-ng export speed, not the backup repository.
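For a sense of scale behind the small-files question, here is a minimal Python sketch that counts how many 2 MiB blocks a disk spans. It assumes every block of the disk becomes its own file on the remote; the real layout depends on XO's implementation (for example, only allocated blocks may be written), and `block_count` is a hypothetical helper.

```python
BLOCK_SIZE = 2 * 1024 * 1024  # 2 MiB, the block size mentioned above


def block_count(disk_bytes: int, block_size: int = BLOCK_SIZE) -> int:
    """Number of fixed-size blocks needed to cover a disk (ceiling division)."""
    if disk_bytes < 0:
        raise ValueError("disk size must be non-negative")
    return -(-disk_bytes // block_size)


# Hypothetical example: a fully allocated 100 GiB disk.
print(block_count(100 * 1024**3))  # 51200 block files under this assumption
```

Tens of thousands of small files per disk is a metadata-heavy pattern, which is why it is worth checking whether a spinning-disk pool copes with it as well as it copes with a few large VHD files.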

-

@olivierlambert I am using block-based. I can make a test repo on a flash array and see whether the spinners are choking on all the small files, if that's what you're getting at. The NAS is a dedicated box but very modest by enterprise standards: a striped ZFS pool of 2 sets of 14 TB WD Reds (8 drives). It typically does quite well, but every use case is different.

Although I guess if the bottleneck were the storage, the NBD backup would, at worst, be as fast as the non-NBD one?
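The reasoning here is that a streamed backup runs at roughly the speed of its slowest stage. A minimal Python model of that argument, assuming the stages (export, network, remote write) fully overlap; `pipeline_throughput` is a hypothetical helper and the rates are illustrative placeholders, not measurements from this thread:

```python
def pipeline_throughput(stage_rates_mb_s: dict[str, float]) -> tuple[str, float]:
    """Return the bottleneck stage and the end-to-end rate of a streaming pipeline.

    Assumes every stage runs concurrently, so the slowest one caps the whole stream.
    """
    bottleneck = min(stage_rates_mb_s, key=stage_rates_mb_s.get)
    return bottleneck, stage_rates_mb_s[bottleneck]


# Illustrative numbers only: when the remote write is the slowest stage, changing
# the export transport (NBD vs. HTTP) cannot push the result below that stage.
nbd = {"export": 400.0, "network": 1100.0, "remote_write": 250.0}
http = {"export": 300.0, "network": 1100.0, "remote_write": 250.0}
print(pipeline_throughput(nbd))   # ('remote_write', 250.0)
print(pipeline_throughput(http))  # ('remote_write', 250.0)
```

Under this simple model, NBD coming out measurably slower implies the two runs do not share the same bottleneck, for example the export side, or a remote that behaves differently under NBD's write pattern.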

-

I would love to have a way to know where the backpressure is happening in the stream.

How many VMs are you backing up, and how many disks? Also, maybe the small blocks are putting too much pressure on your disks, and therefore creating backpressure on the export speed.

@florent is there a way to raise the number of parallel downloads?
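One generic way to see where backpressure builds up is to put a bounded buffer between the reader and the writer and time how long each side waits on it. A Python sketch with simulated per-chunk costs; `measure_backpressure` and the sleep-based costs are hypothetical stand-ins, not XO instrumentation:

```python
import queue
import threading
import time


def measure_backpressure(
    n_chunks: int, produce_cost: float, consume_cost: float, buffer_size: int = 4
) -> tuple[float, float]:
    """Simulate a read -> buffer -> write stream.

    Returns (seconds the producer spent blocked on a full buffer,
             seconds the consumer spent waiting on an empty buffer).
    """
    buf: queue.Queue = queue.Queue(maxsize=buffer_size)
    stats = {"producer_blocked": 0.0, "consumer_starved": 0.0}

    def producer() -> None:
        for i in range(n_chunks):
            time.sleep(produce_cost)  # simulated cost of exporting one chunk
            t0 = time.perf_counter()
            buf.put(i)  # blocks while the buffer is full: backpressure from the writer
            stats["producer_blocked"] += time.perf_counter() - t0
        buf.put(None)  # end-of-stream sentinel

    def consumer() -> None:
        while True:
            t0 = time.perf_counter()
            item = buf.get()  # blocks while the buffer is empty: the reader is too slow
            stats["consumer_starved"] += time.perf_counter() - t0
            if item is None:
                break
            time.sleep(consume_cost)  # simulated cost of writing one chunk to the remote

    threads = [threading.Thread(target=producer), threading.Thread(target=consumer)]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return stats["producer_blocked"], stats["consumer_starved"]


if __name__ == "__main__":
    blocked, starved = measure_backpressure(40, produce_cost=0.0, consume_cost=0.005)
    print(f"producer blocked {blocked:.3f}s, consumer starved {starved:.3f}s")
```

If the reading side accumulates most of the blocked time, the writer (remote) is the slow side; if the writing side is mostly starved, the export is.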

-

@olivierlambert I'm willing to dig in and provide any data. Right now it's 10 VMs, with 1 disk each. I'm currently running a backup without NBD using my flash array as the NFS destination; it's a handful of fast Samsung 12 Gb/s SAS SSDs (3.2 TB each) in a stripe. When that finishes, I'll turn NBD back on and re-run, which I believe should at least take storage out of the equation.

-

Total backup performance was still slower with NBD when backing up to a flash pool, so my disks do not appear to be the choke point.

-

How many vCPUs and how much memory do you have in your XO VM?

-

@olivierlambert 6 vCPUs and 6 GB RAM.

-

In XO, are you using `http` in the server URL (Settings/Servers) when connected, or the default (nothing, meaning HTTPS)?

-

@olivierlambert HTTP. I changed that a long time ago for performance.

-

Okay, so compare secure NBD vs. HTTPS performance, then.

I'm pretty sure NBD wins. Alternatively, you might try raising the number of parallel NBD chunks downloaded at the same time (@florent knows the setting, I suppose?).
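To illustrate what raising the number of parallel chunk downloads means, here is a minimal asyncio sketch of a read-ahead window: up to `read_ahead` chunk fetches are in flight while chunks are still yielded in disk order. `fetch_chunk` is a hypothetical coroutine standing in for an NBD read, and this is not XO's actual implementation.

```python
import asyncio
from typing import AsyncIterator, Awaitable, Callable


async def read_with_readahead(
    fetch_chunk: Callable[[int], Awaitable[bytes]],
    n_chunks: int,
    read_ahead: int = 10,
) -> AsyncIterator[bytes]:
    """Yield chunks 0..n_chunks-1 in order, keeping up to `read_ahead` fetches in flight."""
    if read_ahead < 1:
        raise ValueError("read_ahead must be >= 1")
    pending = {}  # chunk index -> running fetch
    next_to_start = 0
    for index in range(n_chunks):
        # Top up the window: start fetches for chunks index .. index + read_ahead - 1.
        while next_to_start < min(n_chunks, index + read_ahead):
            pending[next_to_start] = asyncio.ensure_future(fetch_chunk(next_to_start))
            next_to_start += 1
        # Wait only for the chunk the writer needs next; later ones keep downloading.
        yield await pending.pop(index)


async def _demo() -> None:
    async def fake_fetch(i: int) -> bytes:
        await asyncio.sleep(0.01)  # simulated network round trip
        return i.to_bytes(4, "big")

    chunks = [c async for c in read_with_readahead(fake_fetch, 50, read_ahead=20)]
    print(len(chunks))


if __name__ == "__main__":
    asyncio.run(_demo())
```

With each simulated fetch taking about 10 ms, a window of 20 lets the 50 chunks complete in roughly three round trips instead of 50 sequential ones, which is presumably the kind of gain a larger read-ahead aims for when per-request latency dominates.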

-

@olivierlambert Okay, yeah, I can compare against HTTPS. I'm totally game to try whatever setting he has to increase the parallel work.

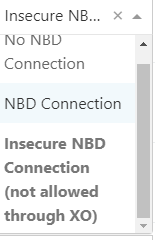

Insecure NBD would be a good comparison to HTTP, but I don't know how to turn it on. I know which purpose to set on the host, but there must be a config line to make it work in XO.

-

It's only relevant to compare secure vs secure or insecure vs insecure. @florent will guide you when he's around
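A small Python sketch of that rule: only compute a speed ratio between two runs whose transports have the same encryption. The `Run` record and `speedup` function are hypothetical bookkeeping for test results, not an XO API.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Run:
    label: str        # e.g. "NBD" or "HTTPS"
    encrypted: bool   # True for secure NBD / HTTPS, False for insecure NBD / HTTP
    size_mib: float   # amount of data transferred
    seconds: float    # wall-clock duration of the backup

    @property
    def mib_per_s(self) -> float:
        return self.size_mib / self.seconds


def speedup(candidate: Run, baseline: Run) -> float:
    """How many times faster `candidate` is than `baseline`, like-for-like only."""
    if candidate.encrypted != baseline.encrypted:
        raise ValueError("compare secure vs. secure or insecure vs. insecure only")
    return candidate.mib_per_s / baseline.mib_per_s
```

Refusing mixed comparisons keeps TLS overhead from being mistaken for a difference between NBD and the HTTP export.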

-

@olivierlambert agree

-

Hi,

In theory, insecure NBD should work once you add the `insecure_nbd` flag to the network's purpose. Do you have any error messages in the XO logs?

You can add this in the `xapiOptions` part:

```toml
preferNbd = true
nbdOptions.readAhead = 20 # default 10
```

Also, does your remote store disks as multiple blocks?

regards

-

@florent Yes to multiple blocks. I did see this on the Host > Networks page.

I do (and did) have `preferNbd` set.

-

@sluflyer06 We reworked NBD so that it can be used everywhere, paving the way for some nice features such as retrying on network errors.

However, it may have an impact on block-based backups. I will make a branch today that should alleviate this.