Rolling Pool Update - host took too long to restart

-

@dsiminiuk I think it's about 15 minutes... I don't see it being adjustable in XO.

I thought my HP G8 servers were slow to boot at 10 minutes...

What would you recommend as a timeout? 30 minutes?

-

20 minutes to reboot? Wow

Do you know what is taking so much time? We can change the default obviously.

-

@olivierlambert said in Rolling Pool Update - host took too long to restart:

20 minutes to reboot? Wow

Do you know what is taking so much time? We can change the default obviously.

There is likely a faulty disk involved here that simply isn't known about yet.

I would look further at the host as a whole before making changes that would impact everyone else.

-

That is a really long reboot time. I'd investigate why it's taking so long; any even remotely modern server should take ~5 minutes, even with a pretty long POST. Even my super old Supermicro storage server from around 2015 boots in less than 5 minutes.

-

@DustinB Not a faulty disk. It appears to be memory testing at boot time, and then the same thing again at other points after init.

The cluster is a pair of HPE ProLiant DL580 Gen9 servers, each with 2TB of RAM.

Yes, I could turn off memory checking during startup, but I'd rather not.

Danny

-

Ping @pdonias: what value do we have right now? How about raising it even further?

-

@olivierlambert By default, it's 20 minutes. And it's already configurable through xo-server's config by adding:

    [xapiOptions]
    restartHostTimeout = '40 minutes'

-

Got that issue too. Sometimes a server restart takes longer than usual, so the rolling update is canceled by the timeout.

Why does it take so long? I dunno. Maybe some startup checks. I can't restart production just to see what's different. Is the timeout really required?

-

Our Dell R630s with 512 GB of RAM also take a while to reboot, so yeah, being able to adjust the value is great.
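Here is a rough Python sketch for picking a value. It assumes a simple heuristic: take the slowest observed reboot, multiply by a safety margin, and round up to 5 minutes. The margin and the function names are illustrative and are not anything XO provides. The durations are the reboot times mentioned in this thread, and the output uses the same `[xapiOptions]` / `restartHostTimeout` format posted earlier.

```python
import math


def recommend_timeout_minutes(observed_minutes, margin=1.5, step=5):
    """Suggest a restart timeout (minutes) that covers the slowest observed reboot.

    Heuristic (an assumption, not an XO rule): slowest reboot * margin,
    rounded up to the next multiple of `step` minutes.
    """
    if not observed_minutes:
        raise ValueError("need at least one observed reboot duration")
    worst = max(observed_minutes)
    return math.ceil(worst * margin / step) * step


def xo_server_snippet(timeout_minutes):
    """Render the [xapiOptions] override in the format used for xo-server's config."""
    return f"[xapiOptions]\nrestartHostTimeout = '{timeout_minutes} minutes'\n"


# Reboot times mentioned in this thread, in minutes (illustrative input only).
observed = [10, 20, 10, 5]
print(xo_server_snippet(recommend_timeout_minutes(observed)))
```

With those inputs the sketch suggests 30 minutes. A host that regularly spends around 20 minutes in memory tests would otherwise be cut very close by the 20-minute default.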

-

@Tristis-Oris said in Rolling Pool Update - host took too long to restart:

Got that issue too. Sometimes a server restart takes longer than usual, so the rolling update is canceled by the timeout.

Why does it take so long? I dunno. Maybe some startup checks. I can't restart production just to see what's different. Is the timeout really required?

If they have ECC memory, the server will check it, collect diagnostics, and so on; this is pretty common on enterprise servers.

-

Thanks @pdonias, I forgot about this.

I didn't check in the doc, have we documented that too?

-

@olivierlambert It doesn't look like we did. It's documented in the config file but we can add it to the RPU doc too if necessary.

-

Let's do that then; it will save a potential thread or two in here.

-

@olivierlambert I've made the needed adjustment in the build script to override the default. Now I wait for another set of patches to test it.
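For anyone scripting the same override, here is a minimal sketch of a build step that sets `restartHostTimeout` under `[xapiOptions]` in xo-server's TOML config. The helper is hypothetical and does a naive line-based edit that ignores dotted keys and inline tables. The config file location isn't given in this thread, so the function works on the file's text.

```python
import re


def set_restart_host_timeout(config_text, value="40 minutes"):
    """Return config_text with restartHostTimeout set under [xapiOptions].

    Hypothetical build-script helper: line-based, so it does not handle
    inline tables or dotted keys like xapiOptions.restartHostTimeout.
    """
    new_line = f"restartHostTimeout = '{value}'"
    out = []
    in_section = False
    found_section = False
    replaced = False
    for line in config_text.splitlines():
        stripped = line.strip()
        if stripped.startswith("["):
            # Leaving [xapiOptions] without having seen the key: add it here.
            if in_section and not replaced:
                out.append(new_line)
                replaced = True
            in_section = stripped == "[xapiOptions]"
            found_section = found_section or in_section
        elif in_section and re.match(r"restartHostTimeout\s*=", stripped):
            # Overwrite an existing (uncommented) value in the section.
            out.append(new_line)
            replaced = True
            continue
        out.append(line)
    if in_section and not replaced:
        # [xapiOptions] was the last section and lacked the key.
        out.append(new_line)
    if not found_section:
        # No [xapiOptions] section at all: append one at the end.
        if out:
            out.append("")
        out += ["[xapiOptions]", new_line]
    return "\n".join(out) + "\n"
```

Keeping the edit as a pure text-to-text function makes it easy to run on every rebuild without stacking duplicate keys.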

Thanks all.

-

Just installed the latest updates, and the rolling update was canceled by the timeout again. Since that never happened before, I think it began after some updates about 2-3 months ago.

-

It's hard to give an answer because we are not inside your infrastructure. How long did your host take to reboot in the end?

-

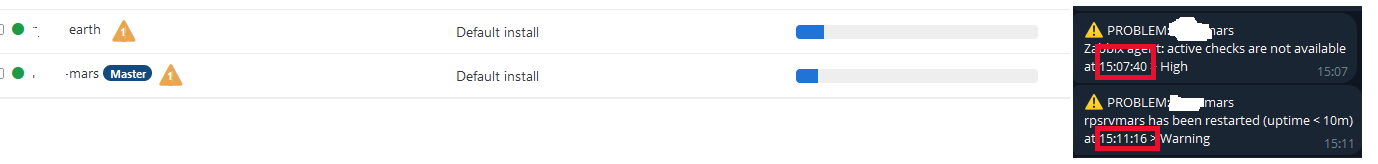

@olivierlambert According to monitoring, it takes 10 minutes. >.<

Maaaybe some disabled VMs were started after the reboot, so there wasn't enough memory for the rolling update. But last time, the reboot really did take very long.

I see a lack of logs here. Nothing tells me that the rolling update was canceled.

-

We are introducing an XO task to monitor the RPU process. That will make it easier to track the whole process.
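XO's actual RPU code isn't shown in this thread, but a restart timeout generally boils down to a wait-with-deadline loop. Below is a generic sketch of such a loop. `is_host_up` is a hypothetical health check (not an XO or XAPI function). The clock and sleep functions are injectable for testing. The loop logs explicitly when the deadline expires, which is the kind of trace that was missing here.

```python
import logging
import time

log = logging.getLogger("rpu-sketch")


class HostRestartTimeout(Exception):
    """Raised when the host is not back before the deadline."""


def wait_for_host(is_host_up, timeout_s, poll_s=30.0,
                  clock=time.monotonic, sleep=time.sleep):
    """Poll is_host_up() until it returns True or timeout_s seconds elapse.

    Returns the elapsed seconds on success; raises HostRestartTimeout
    (after logging an error) once the deadline is reached.
    """
    start = clock()
    deadline = start + timeout_s
    while True:
        if is_host_up():
            elapsed = clock() - start
            log.info("host back after %.0f s", elapsed)
            return elapsed
        now = clock()
        if now >= deadline:
            # Log before giving up so a canceled rolling update leaves a trace.
            log.error("host not back after %.0f s (timeout %.0f s), stopping",
                      now - start, timeout_s)
            raise HostRestartTimeout(f"host did not restart within {timeout_s} s")
        # Never sleep past the deadline.
        sleep(min(poll_s, deadline - now))
```

Using a monotonic clock keeps the deadline correct even if the wall clock is adjusted while the host reboots.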

-

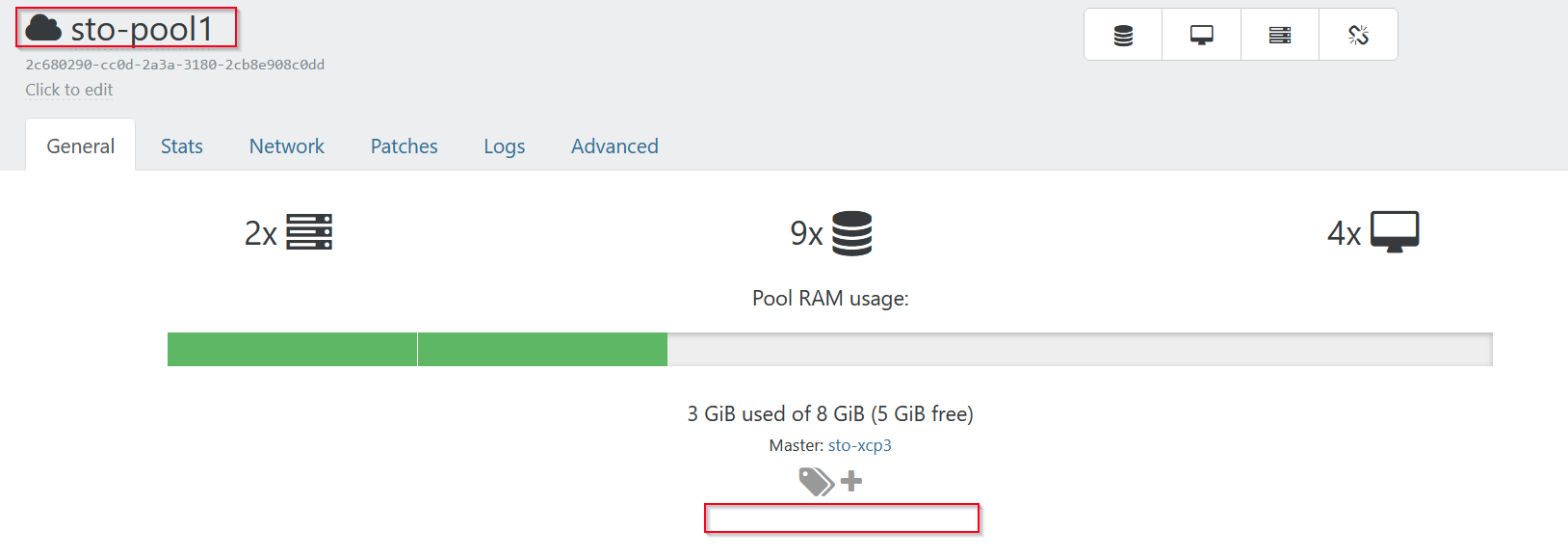

Next pool: almost empty, enough memory for the rolling update, and the reboot takes 5 minutes.

The 2nd host was not updated.

-

@olivierlambert said in Rolling Pool Update - host took too long to restart:

We are introducing an XO task to monitor the RPU process. That will make it easier to track the whole process.

This should also be displayed in the pool overview to make sure other admins don't start other tasks by mistake.

In a perfect world, there would be an indicative icon by the pool name, and also a warning in the general pool overview with some kind of notification, like "Pool upgrade in progress - Wait for it to complete before starting new tasks" or similar, in a very red/orange/other visible color that you can't miss: