CBT: the thread to centralize your feedback

-

Can you show me the exact UI steps you do in XO to do the migration? Then, in the task, are you seeing anything outside VM migrate?

-

I saw these errors in my log today after starting a replication job on commit 530c3. I have not migrated these VMs to a new host or an SR.

Sep 18 08:17:12 xo-server[6199]: 2024-09-18T12:17:12.861Z xo:xapi:vdi INFO can't get changed block {
Sep 18 08:17:12 xo-server[6199]: error: XapiError: SR_BACKEND_FAILURE_460(, Failed to calculate changed blocks for given VDIs. [opterr=Source and target VDI are unrelated], )
Sep 18 08:17:12 xo-server[6199]: at XapiError.wrap (file:///opt/xo/xo-builds/xen-orchestra-202409180806/packages/xen-api/_XapiError.mjs:16:12)
Sep 18 08:17:12 xo-server[6199]: at file:///opt/xo/xo-builds/xen-orchestra-202409180806/packages/xen-api/transports/json-rpc.mjs:38:21
Sep 18 08:17:12 xo-server[6199]: at process.processTicksAndRejections (node:internal/process/task_queues:95:5) {
Sep 18 08:17:12 xo-server[6199]: code: 'SR_BACKEND_FAILURE_460',
Sep 18 08:17:12 xo-server[6199]: params: [
Sep 18 08:17:12 xo-server[6199]: '',
Sep 18 08:17:12 xo-server[6199]: 'Failed to calculate changed blocks for given VDIs. [opterr=Source and target VDI are unrelated]',
Sep 18 08:17:12 xo-server[6199]: ''
Sep 18 08:17:12 xo-server[6199]: ],
Sep 18 08:17:12 xo-server[6199]: call: { method: 'VDI.list_changed_blocks', params: [Array] },
Sep 18 08:17:12 xo-server[6199]: url: undefined,
Sep 18 08:17:12 xo-server[6199]: task: undefined
Sep 18 08:17:12 xo-server[6199]: },
Sep 18 08:17:12 xo-server[6199]: ref: 'OpaqueRef:a7c534ef-d1d5-0578-a564-05b2c36de7be',
Sep 18 08:17:12 xo-server[6199]: baseRef: 'OpaqueRef:5d4109f0-5278-64d8-233d-6cd73c8c6d6a'
Sep 18 08:17:12 xo-server[6199]: }
Sep 18 08:17:14 xo-server[6199]: 2024-09-18T12:17:14.459Z xo:xapi:vdi INFO can't get changed block {
Sep 18 08:17:14 xo-server[6199]: error: XapiError: SR_BACKEND_FAILURE_460(, Failed to calculate changed blocks for given VDIs. [opterr=Source and target VDI are unrelated], )
Sep 18 08:17:14 xo-server[6199]: at XapiError.wrap (file:///opt/xo/xo-builds/xen-orchestra-202409180806/packages/xen-api/_XapiError.mjs:16:12)
Sep 18 08:17:14 xo-server[6199]: at file:///opt/xo/xo-builds/xen-orchestra-202409180806/packages/xen-api/transports/json-rpc.mjs:38:21
Sep 18 08:17:14 xo-server[6199]: at process.processTicksAndRejections (node:internal/process/task_queues:95:5) {
Sep 18 08:17:14 xo-server[6199]: code: 'SR_BACKEND_FAILURE_460',
Sep 18 08:17:14 xo-server[6199]: params: [
Sep 18 08:17:14 xo-server[6199]: '',
Sep 18 08:17:14 xo-server[6199]: 'Failed to calculate changed blocks for given VDIs. [opterr=Source and target VDI are unrelated]',
Sep 18 08:17:14 xo-server[6199]: ''
Sep 18 08:17:14 xo-server[6199]: ],
Sep 18 08:17:14 xo-server[6199]: call: { method: 'VDI.list_changed_blocks', params: [Array] },
Sep 18 08:17:14 xo-server[6199]: url: undefined,
Sep 18 08:17:14 xo-server[6199]: task: undefined
Sep 18 08:17:14 xo-server[6199]: },

-

After changing NBD back to 1, I haven't seen any additional attached disks. However, the backup that originally succeeded with an attached disk has now failed. It's odd that it initially works with an attached disk but then fails with one.

-

I still have one VM stuck without a backup. I already restarted its host and halted the VM itself. SMlog has no records during the 5 minutes of that task.

"result": {
  "code": "VDI_IN_USE",
  "params": [
    "OpaqueRef:1f96d4e7-5ca6-4070-b686-b34dd83e5442",
    "destroy"
  ],
  "task": {
    "uuid": "81a60e3a-c887-13f3-fedc-36eae232a6df",
    "name_label": "Async.VDI.destroy",
    "name_description": "",
    "allowed_operations": [],
    "current_operations": {},
    "created": "20240918T18:03:28Z",
    "finished": "20240918T18:03:28Z",
    "status": "failure",
    "resident_on": "OpaqueRef:223881b6-1309-40e6-9e42-5ad74a274d2d",
    "progress": 1,
    "type": "<none/>",
    "result": "",
    "error_info": [
      "VDI_IN_USE",
      "OpaqueRef:1f96d4e7-5ca6-4070-b686-b34dd83e5442",
      "destroy"
    ],
    "other_config": {},
    "subtask_of": "OpaqueRef:NULL",
    "subtasks": [],
    "backtrace": "(((process xapi)(filename ocaml/xapi/message_forwarding.ml)(line 4711))((process xapi)(filename lib/xapi-stdext-pervasives/pervasiveext.ml)(line 24))((process xapi)(filename ocaml/xapi/rbac.ml)(line 205))((process xapi)(filename ocaml/xapi/server_helpers.ml)(line 95)))"
  },
  "message": "VDI_IN_USE(OpaqueRef:1f96d4e7-5ca6-4070-b686-b34dd83e5442, destroy)",
  "name": "XapiError",
  "stack": "XapiError: VDI_IN_USE(OpaqueRef:1f96d4e7-5ca6-4070-b686-b34dd83e5442, destroy)\n at XapiError.wrap (file:///opt/xo/xo-builds/xen-orchestra-202409111040/packages/xen-api/_XapiError.mjs:16:12)\n at default (file:///opt/xo/xo-builds/xen-orchestra-202409111040/packages/xen-api/_getTaskResult.mjs:13:29)\n at Xapi._addRecordToCache (file:///opt/xo/xo-builds/xen-orchestra-202409111040/packages/xen-api/index.mjs:1041:24)\n at file:///opt/xo/xo-builds/xen-orchestra-202409111040/packages/xen-api/index.mjs:1075:14\n at Array.forEach (<anonymous>)\n at Xapi._processEvents (file:///opt/xo/xo-builds/xen-orchestra-202409111040/packages/xen-api/index.mjs:1065:12)\n at Xapi._watchEvents (file:///opt/xo/xo-builds/xen-orchestra-202409111040/packages/xen-api/index.mjs:1238:14)\n at process.processTicksAndRejections (node:internal/process/task_queues:95:5)"
}
},

and I often get "Job canceled to protect the VDI chain" errors for the others. This has been going on since the bad CBT commit.

-

It looks like all of my backups have started erroring with "can't create a stream from a metadata VDI, fall back to a base". I am using 1 NBD connection and I am on commit 530c3. I have attached the logs of a delta backup and a replication.

2024-09-19T16_00_00.002Z - backup NG.json.txt

2024-09-19T04_00_00.001Z - backup NG.json.txt

I am seeing this in the journal logs.

Sep 19 12:01:39 hostname xo-server[11597]: error: XapiError: SR_BACKEND_FAILURE_460(, Failed to calculate changed blocks for given VDIs. [opterr=Source and target VDI are unrelated], )
Sep 19 12:01:39 hostname xo-server[11597]: at XapiError.wrap (file:///opt/xo/xo-builds/xen-orchestra-202409180806/packages/xen-api/_XapiError.mjs:16:12)
Sep 19 12:01:39 hostname xo-server[11597]: at file:///opt/xo/xo-builds/xen-orchestra-202409180806/packages/xen-api/transports/json-rpc.mjs:38:21
Sep 19 12:01:39 hostname xo-server[11597]: at process.processTicksAndRejections (node:internal/process/task_queues:95:5) {
Sep 19 12:01:39 hostname xo-server[11597]: code: 'SR_BACKEND_FAILURE_460',
Sep 19 12:01:39 hostname xo-server[11597]: params: [
Sep 19 12:01:39 hostname xo-server[11597]: '',
Sep 19 12:01:39 hostname xo-server[11597]: 'Failed to calculate changed blocks for given VDIs. [opterr=Source and target VDI are unrelated]',
Sep 19 12:01:39 hostname xo-server[11597]: ''
Sep 19 12:01:39 hostname xo-server[11597]: ],
Sep 19 12:01:39 hostname xo-server[11597]: call: { method: 'VDI.list_changed_blocks', params: [Array] },
Sep 19 12:01:39 hostname xo-server[11597]: url: undefined,
Sep 19 12:01:39 hostname xo-server[11597]: task: undefined
Sep 19 12:01:39 hostname xo-server[11597]: },
Sep 19 12:01:39 hostname xo-server[11597]: ref: 'OpaqueRef:0438087b-5cbc-a458-a8a0-4eaa6ce74d19',
Sep 19 12:01:39 hostname xo-server[11597]: baseRef: 'OpaqueRef:ae1330a2-0f95-6c16-6878-f6c05373a2f2'
Sep 19 12:01:39 hostname xo-server[11597]: }
Sep 19 12:01:43 hostname xo-server[11597]: 2024-09-19T16:01:43.015Z xo:xapi:vdi INFO OpaqueRef:b6f65ae4-bee8-b179-a06c-2bb4956214ba has been disconnected from dom0 {
Sep 19 12:01:43 hostname xo-server[11597]: vdiRef: 'OpaqueRef:0438087b-5cbc-a458-a8a0-4eaa6ce74d19',
Sep 19 12:01:43 hostname xo-server[11597]: vbdRef: 'OpaqueRef:b6f65ae4-bee8-b179-a06c-2bb4956214ba'
Sep 19 12:01:43 hostname xo-server[11597]: }
Sep 19 12:02:29 hostname xo-server[11597]: 2024-09-19T16:02:29.855Z xo:xapi:vdi INFO can't get changed block {
Sep 19 12:02:29 hostname xo-server[11597]: error: XapiError: SR_BACKEND_FAILURE_460(, Failed to calculate changed blocks for given VDIs. [opterr=Source and target VDI are unrelated], )
Sep 19 12:02:29 hostname xo-server[11597]: at XapiError.wrap (file:///opt/xo/xo-builds/xen-orchestra-202409180806/packages/xen-api/_XapiError.mjs:16:12)
Sep 19 12:02:29 hostname xo-server[11597]: at file:///opt/xo/xo-builds/xen-orchestra-202409180806/packages/xen-api/transports/json-rpc.mjs:38:21
Sep 19 12:02:29 hostname xo-server[11597]: at process.processTicksAndRejections (node:internal/process/task_queues:95:5) {
Sep 19 12:02:29 hostname xo-server[11597]: code: 'SR_BACKEND_FAILURE_460',
Sep 19 12:02:29 hostname xo-server[11597]: params: [
Sep 19 12:02:29 hostname xo-server[11597]: '',
Sep 19 12:02:29 hostname xo-server[11597]: 'Failed to calculate changed blocks for given VDIs. [opterr=Source and target VDI are unrelated]',

-

@Delgado this error sounds like an issue with CBT data that became invalid. Could it be that you had a host crash or a storage issue? Does a retry create a working full backup?
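For anyone who wants to see how often this hits and with which `opterr`, here is a rough sketch that groups the journal lines quoted earlier in the thread by error code. The regular expression is only based on the line shape visible in those logs, and `summarize_xapi_errors` is a made-up helper name, not anything from XO.

```python
import re
from collections import Counter

# Matches the XapiError summary as it appears in the journal lines quoted
# in this thread, e.g.
#   XapiError: SR_BACKEND_FAILURE_460(, Failed to ... [opterr=...], )
# Assumption: other XO versions may format this line differently.
XAPI_ERROR_RE = re.compile(
    r"XapiError: (?P<code>[A-Z0-9_]+)\(.*?\[opterr=(?P<opterr>[^\]]*)\]"
)


def summarize_xapi_errors(lines):
    """Count (code, opterr) pairs found in an iterable of log lines."""
    counts = Counter()
    for line in lines:
        match = XAPI_ERROR_RE.search(line)
        if match:
            counts[(match.group("code"), match.group("opterr"))] += 1
    return counts


sample = [
    "Sep 19 12:01:39 hostname xo-server[11597]: error: XapiError: "
    "SR_BACKEND_FAILURE_460(, Failed to calculate changed blocks for given VDIs. "
    "[opterr=Source and target VDI are unrelated], )",
    # Lines without the "XapiError: " prefix are ignored on purpose.
    "Sep 19 12:01:39 hostname xo-server[11597]: code: 'SR_BACKEND_FAILURE_460',",
    "Sep 19 12:02:29 hostname xo-server[11597]: error: XapiError: "
    "SR_BACKEND_FAILURE_460(, Failed to calculate changed blocks for given VDIs. "
    "[opterr=Source and target VDI are unrelated], )",
]

for (code, opterr), n in summarize_xapi_errors(sample).items():
    print(f"{n}x {code}: {opterr}")
```

Feeding it the full journal output instead of `sample` should show whether the problem is limited to a few VDIs or affects every disk in the job.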

-

@rtjdamen I haven't had any hosts crash recently or any storage issue from what I can tell. The "type" in the log says delta, but the backups are definitely the size of full backups. They're also labelled as key when I look at the restore points for delta backups.

-

@Delgado I believe this error message is incorrect; it should be something like "CBT invalid, fall back to base". I have seen it randomly once in a while on a VM, and also when there were issues with a host or a specific storage pool.
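To make the "fall back to base" idea concrete, here is a minimal sketch of the pattern as I understand it from the logs: ask for the changed blocks, and if XAPI answers with `SR_BACKEND_FAILURE_460`, export a full (key) disk instead of a delta. This is not XO's actual code. `XapiError` here is a stand-in class, and `plan_export` and the `list_changed_blocks` callable are hypothetical names wrapping the `VDI.list_changed_blocks` call seen in the logs.

```python
class XapiError(Exception):
    """Stand-in for the error XAPI returns; only `code` is used here."""

    def __init__(self, code, message=""):
        super().__init__(f"{code}: {message}")
        self.code = code


def plan_export(list_changed_blocks, base_ref, snapshot_ref):
    """Return ("delta", blocks) when CBT data is usable, else ("full", None).

    `list_changed_blocks` is a hypothetical callable wrapping
    VDI.list_changed_blocks; it raises XapiError when XAPI refuses.
    """
    try:
        blocks = list_changed_blocks(base_ref, snapshot_ref)
    except XapiError as err:
        if err.code == "SR_BACKEND_FAILURE_460":
            # CBT can no longer relate base and snapshot: export a full (key) disk.
            return ("full", None)
        raise  # Any other error is not a CBT problem, so let it surface.
    return ("delta", blocks)


def unrelated(_base, _snapshot):
    # Simulates the failure quoted in the journal logs.
    raise XapiError("SR_BACKEND_FAILURE_460", "Source and target VDI are unrelated")


print(plan_export(unrelated, "OpaqueRef:base", "OpaqueRef:snap"))
print(plan_export(lambda base, snap: "AAAA", "OpaqueRef:base", "OpaqueRef:snap"))
```

If XO behaves roughly like this, it would fit what Delgado describes: the job type still says delta, but the restore points are keys with the size of a full backup.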

-

I'm not sure whether this is CBT related; I've never seen it before. The VM backup failed after 1 minute, as always, but the task still shows as active.

-

@olivierlambert Any progress on the attached disks and multiple NBD connections issue?

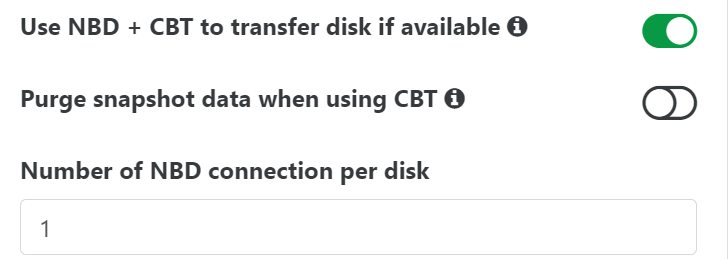

Related, should we see any performance difference related to the number of NBD connections? I went from 4 to 1 and my backups are still taking the same amount of time.

-

I'm not the right person to ask; I'm not tracking this in detail. In our own prod, we use more concurrency with 1x NBD connection, and that's the best combo I've found so far.

-

@olivierlambert Is there a specific person we should ping or link to watch to get updates on the status?

-

@florent is the main backup guy, but he's ultra busy. No guarantee, so it's best not to ping anyone in particular and see if you get some feedback. If it's a priority, go directly to pro support. We'll do our best to answer here, but it can't take priority over support tickets.

-

As of today's commit https://github.com/vatesfr/xen-orchestra/commit/ad8cd3791b9459b06d754defa657c97b66261eb3, migration is still failing.

-

@Tristis-Oris Can you be more specific? What output exactly do you get?

-

vdi.migrate
{
  "id": "1d536c76-1ee7-41aa-93ff-7c7a297e2e80",
  "sr_id": "9a80cc74-a807-0475-1cc9-b0e42ffc7bf9"
}
{
  "code": "SR_BACKEND_FAILURE_46",
  "params": [
    "",
    "The VDI is not available [opterr=Error scanning VDI b3e09a17-9b08-48e5-8b47-93f16979b045]",
    ""
  ],
  "task": {
    "uuid": "a6db64c5-b9d2-946c-3cfd-59cd8c4c4586",
    "name_label": "Async.VDI.pool_migrate",
    "name_description": "",
    "allowed_operations": [],
    "current_operations": {},
    "created": "20240930T07:54:12Z",
    "finished": "20240930T07:54:30Z",
    "status": "failure",
    "resident_on": "OpaqueRef:223881b6-1309-40e6-9e42-5ad74a274d2d",
    "progress": 1,
    "type": "<none/>",
    "result": "",
    "error_info": [
      "SR_BACKEND_FAILURE_46",
      "",
      "The VDI is not available [opterr=Error scanning VDI b3e09a17-9b08-48e5-8b47-93f16979b045]",
      ""
    ],
    "other_config": {},
    "subtask_of": "OpaqueRef:NULL",
    "subtasks": [],
    "backtrace": "(((process xapi)(filename ocaml/xapi-client/client.ml)(line 7))((process xapi)(filename ocaml/xapi-client/client.ml)(line 19))((process xapi)(filename ocaml/xapi-client/client.ml)(line 12359))((process xapi)(filename lib/xapi-stdext-pervasives/pervasiveext.ml)(line 24))((process xapi)(filename lib/xapi-stdext-pervasives/pervasiveext.ml)(line 35))((process xapi)(filename ocaml/xapi/message_forwarding.ml)(line 134))((process xapi)(filename lib/xapi-stdext-pervasives/pervasiveext.ml)(line 24))((process xapi)(filename lib/xapi-stdext-pervasives/pervasiveext.ml)(line 35))((process xapi)(filename lib/xapi-stdext-pervasives/pervasiveext.ml)(line 24))((process xapi)(filename ocaml/xapi/rbac.ml)(line 205))((process xapi)(filename ocaml/xapi/server_helpers.ml)(line 95)))"
  },
  "message": "SR_BACKEND_FAILURE_46(, The VDI is not available [opterr=Error scanning VDI b3e09a17-9b08-48e5-8b47-93f16979b045], )",
  "name": "XapiError",
  "stack": "XapiError: SR_BACKEND_FAILURE_46(, The VDI is not available [opterr=Error scanning VDI b3e09a17-9b08-48e5-8b47-93f16979b045], ) at Function.wrap (file:///opt/xo/xo-builds/xen-orchestra-202409301043/packages/xen-api/_XapiError.mjs:16:12) at default (file:///opt/xo/xo-builds/xen-orchestra-202409301043/packages/xen-api/_getTaskResult.mjs:13:29) at Xapi._addRecordToCache (file:///opt/xo/xo-builds/xen-orchestra-202409301043/packages/xen-api/index.mjs:1041:24) at file:///opt/xo/xo-builds/xen-orchestra-202409301043/packages/xen-api/index.mjs:1075:14 at Array.forEach (<anonymous>) at Xapi._processEvents (file:///opt/xo/xo-builds/xen-orchestra-202409301043/packages/xen-api/index.mjs:1065:12) at Xapi._watchEvents (file:///opt/xo/xo-builds/xen-orchestra-202409301043/packages/xen-api/index.mjs:1238:14)"
}

SMlog

Sep 30 10:54:11 srv SM: [20535] lock: opening lock file /var/lock/sm/1d536c76-1ee7-41aa-93ff-7c7a297e2e80/vdi
Sep 30 10:54:11 srv SM: [20535] lock: acquired /var/lock/sm/1d536c76-1ee7-41aa-93ff-7c7a297e2e80/vdi
Sep 30 10:54:11 srv SM: [20535] Pause for 1d536c76-1ee7-41aa-93ff-7c7a297e2e80
Sep 30 10:54:11 srv SM: [20535] Calling tap pause with minor 2
Sep 30 10:54:11 srv SM: [20535] ['/usr/sbin/tap-ctl', 'pause', '-p', '12281', '-m', '2']
Sep 30 10:54:11 srv SM: [20535] = 0
Sep 30 10:54:11 srv SM: [20535] lock: released /var/lock/sm/1d536c76-1ee7-41aa-93ff-7c7a297e2e80/vdi
Sep 30 10:54:12 srv SM: [20545] lock: opening lock file /var/lock/sm/1d536c76-1ee7-41aa-93ff-7c7a297e2e80/vdi
Sep 30 10:54:12 srv SM: [20545] lock: acquired /var/lock/sm/1d536c76-1ee7-41aa-93ff-7c7a297e2e80/vdi
Sep 30 10:54:12 srv SM: [20545] Unpause for 1d536c76-1ee7-41aa-93ff-7c7a297e2e80
Sep 30 10:54:12 srv SM: [20545] Realpath: /dev/VG_XenStorage-d8c3a5f0-6446-6bc0-79d0-749a3a138662/VHD-1d536c76-1ee7-41aa-93ff-7c7a297e2e80
Sep 30 10:54:12 srv SM: [20545] Setting LVM_DEVICE to /dev/disk/by-scsid/3600c0ff000524e513777c56301000000
Sep 30 10:54:12 srv SM: [20545] lock: opening lock file /var/lock/sm/d8c3a5f0-6446-6bc0-79d0-749a3a138662/sr
Sep 30 10:54:12 srv SM: [20545] LVMCache created for VG_XenStorage-d8c3a5f0-6446-6bc0-79d0-749a3a138662
Sep 30 10:54:12 srv SM: [20545] lock: opening lock file /var/lock/sm/.nil/lvm
Sep 30 10:54:12 srv SM: [20545] ['/sbin/vgs', '--readonly', 'VG_XenStorage-d8c3a5f0-6446-6bc0-79d0-749a3a138662']
Sep 30 10:54:12 srv SM: [20545] pread SUCCESS
Sep 30 10:54:12 srv SM: [20545] Entering _checkMetadataVolume
Sep 30 10:54:12 srv SM: [20545] LVMCache: will initialize now
Sep 30 10:54:12 srv SM: [20545] LVMCache: refreshing
Sep 30 10:54:12 srv SM: [20545] lock: acquired /var/lock/sm/.nil/lvm
Sep 30 10:54:12 srv SM: [20545] ['/sbin/lvs', '--noheadings', '--units', 'b', '-o', '+lv_tags', '/dev/VG_XenStorage-d8c3a5f0-6446-6bc0-79d0-749a3a138662']
Sep 30 10:54:12 srv SM: [20545] pread SUCCESS
Sep 30 10:54:12 srv SM: [20545] lock: released /var/lock/sm/.nil/lvm
Sep 30 10:54:12 srv SM: [20545] lock: acquired /var/lock/sm/.nil/lvm
Sep 30 10:54:12 srv SM: [20545] lock: released /var/lock/sm/.nil/lvm
Sep 30 10:54:12 srv SM: [20545] Calling tap unpause with minor 2
Sep 30 10:54:12 srv SM: [20545] ['/usr/sbin/tap-ctl', 'unpause', '-p', '12281', '-m', '2', '-a', 'vhd:/dev/VG_XenStorage-d8c3a5f0-6446-6bc0-79d0-749a3a138662/VHD-1d536c76-1ee7-41aa-93ff-7c7a297e2e80']
Sep 30 10:54:12 srv SM: [20545] = 0
Sep 30 10:54:12 srv SM: [20545] lock: released /var/lock/sm/1d536c76-1ee7-41aa-93ff-7c7a297e2e80/vdi

-

Your issue seems to be related to a storage problem with the VDI b3e09a17-9b08-48e5-8b47-93f16979b045. If your SR cannot be scanned, whatever the cause, you won't be able to do any operation on it, whether snapshot or migration. I have the impression this problem isn't related to CBT at all.

-

@olivierlambert You're probably right. I get that error with both of this pool's physical SRs, but disk migration works fine on other pools. So, iSCSI problems again?
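To pin down which VDI the scan chokes on, the `error_info` of the failed task can be picked apart. A small sketch, assuming the task has the same shape as the `vdi.migrate` output above; `describe_task_error` is just an illustrative helper name.

```python
import re

# Plain UUID pattern, as used for VDI and SR identifiers in the logs above.
UUID_RE = re.compile(
    r"[0-9a-f]{8}-[0-9a-f]{4}-[0-9a-f]{4}-[0-9a-f]{4}-[0-9a-f]{12}"
)


def describe_task_error(task):
    """Pull the error code, opterr text and any UUIDs out of a failed task dict."""
    info = task.get("error_info") or []
    code = info[0] if info else None
    # The remaining entries are free text; empty strings carry no information.
    text = " ".join(part for part in info[1:] if part)
    opterr = re.search(r"\[opterr=([^\]]*)\]", text)
    return {
        "code": code,
        "opterr": opterr.group(1) if opterr else None,
        "uuids": UUID_RE.findall(text),
    }


# Trimmed copy of the failed task from the vdi.migrate output in this thread.
task = {
    "name_label": "Async.VDI.pool_migrate",
    "status": "failure",
    "error_info": [
        "SR_BACKEND_FAILURE_46",
        "",
        "The VDI is not available [opterr=Error scanning VDI "
        "b3e09a17-9b08-48e5-8b47-93f16979b045]",
        "",
    ],
}

print(describe_task_error(task))
```

With the VDI UUID in hand, running `xe sr-scan uuid=<SR uuid>` on the pool and watching SMlog at the same time should show whether the scan itself is what fails.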

-

@olivierlambert There's a current workaround with NBD connections set to 1 so it's not a priority. I was just looking for a way to keep an eye on the status of any work on it so I can help test, etc.

-

Hi!

Great work with the CBT feature. I noticed it is now included in the "stable" branch which is good news. Is there a summary of the different settings, how they relate and the considerations to take when choosing these options? The XOA documentation, I think, isn't updated yet (searched for CBT with no results).

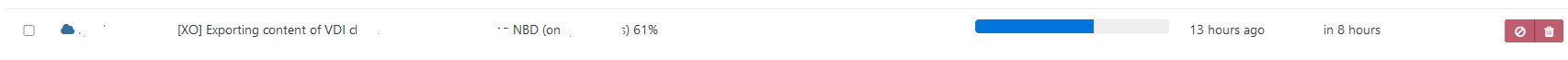

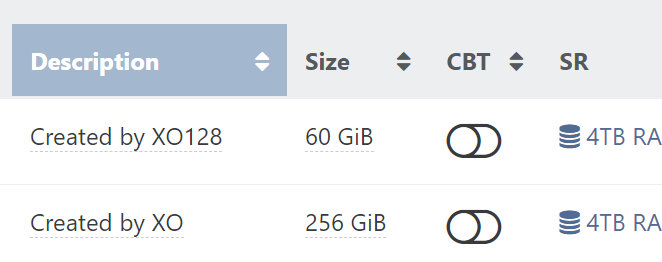

Backup view

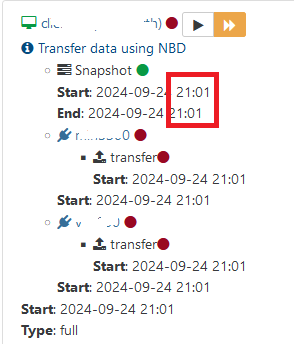

VM disk view

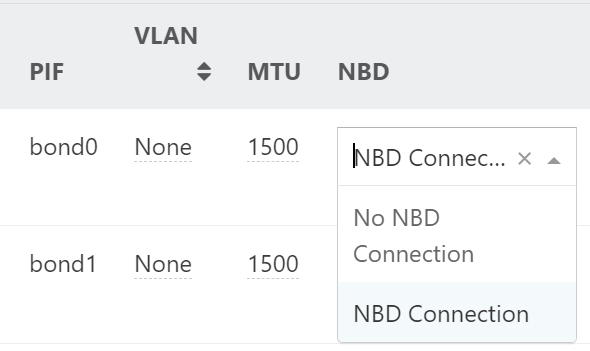

Pool network view