CBT: the thread to centralize your feedback

-

As of today's commit https://github.com/vatesfr/xen-orchestra/commit/ad8cd3791b9459b06d754defa657c97b66261eb3, migration is still failing.

-

@Tristis-Oris Can you be more specific? What output do you get exactly?

-

vdi.migrate { "id": "1d536c76-1ee7-41aa-93ff-7c7a297e2e80", "sr_id": "9a80cc74-a807-0475-1cc9-b0e42ffc7bf9" } { "code": "SR_BACKEND_FAILURE_46", "params": [ "", "The VDI is not available [opterr=Error scanning VDI b3e09a17-9b08-48e5-8b47-93f16979b045]", "" ], "task": { "uuid": "a6db64c5-b9d2-946c-3cfd-59cd8c4c4586", "name_label": "Async.VDI.pool_migrate", "name_description": "", "allowed_operations": [], "current_operations": {}, "created": "20240930T07:54:12Z", "finished": "20240930T07:54:30Z", "status": "failure", "resident_on": "OpaqueRef:223881b6-1309-40e6-9e42-5ad74a274d2d", "progress": 1, "type": "<none/>", "result": "", "error_info": [ "SR_BACKEND_FAILURE_46", "", "The VDI is not available [opterr=Error scanning VDI b3e09a17-9b08-48e5-8b47-93f16979b045]", "" ], "other_config": {}, "subtask_of": "OpaqueRef:NULL", "subtasks": [], "backtrace": "(((process xapi)(filename ocaml/xapi-client/client.ml)(line 7))((process xapi)(filename ocaml/xapi-client/client.ml)(line 19))((process xapi)(filename ocaml/xapi-client/client.ml)(line 12359))((process xapi)(filename lib/xapi-stdext-pervasives/pervasiveext.ml)(line 24))((process xapi)(filename lib/xapi-stdext-pervasives/pervasiveext.ml)(line 35))((process xapi)(filename ocaml/xapi/message_forwarding.ml)(line 134))((process xapi)(filename lib/xapi-stdext-pervasives/pervasiveext.ml)(line 24))((process xapi)(filename lib/xapi-stdext-pervasives/pervasiveext.ml)(line 35))((process xapi)(filename lib/xapi-stdext-pervasives/pervasiveext.ml)(line 24))((process xapi)(filename ocaml/xapi/rbac.ml)(line 205))((process xapi)(filename ocaml/xapi/server_helpers.ml)(line 95)))" }, "message": "SR_BACKEND_FAILURE_46(, The VDI is not available [opterr=Error scanning VDI b3e09a17-9b08-48e5-8b47-93f16979b045], )", "name": "XapiError", "stack": "XapiError: SR_BACKEND_FAILURE_46(, The VDI is not available [opterr=Error scanning VDI b3e09a17-9b08-48e5-8b47-93f16979b045], ) at Function.wrap 
(file:///opt/xo/xo-builds/xen-orchestra-202409301043/packages/xen-api/_XapiError.mjs:16:12) at default (file:///opt/xo/xo-builds/xen-orchestra-202409301043/packages/xen-api/_getTaskResult.mjs:13:29) at Xapi._addRecordToCache (file:///opt/xo/xo-builds/xen-orchestra-202409301043/packages/xen-api/index.mjs:1041:24) at file:///opt/xo/xo-builds/xen-orchestra-202409301043/packages/xen-api/index.mjs:1075:14 at Array.forEach (<anonymous>) at Xapi._processEvents (file:///opt/xo/xo-builds/xen-orchestra-202409301043/packages/xen-api/index.mjs:1065:12) at Xapi._watchEvents (file:///opt/xo/xo-builds/xen-orchestra-202409301043/packages/xen-api/index.mjs:1238:14)" }

SMlog:

Sep 30 10:54:11 srv SM: [20535] lock: opening lock file /var/lock/sm/1d536c76-1ee7-41aa-93ff-7c7a297e2e80/vdi Sep 30 10:54:11 srv SM: [20535] lock: acquired /var/lock/sm/1d536c76-1ee7-41aa-93ff-7c7a297e2e80/vdi Sep 30 10:54:11 srv SM: [20535] Pause for 1d536c76-1ee7-41aa-93ff-7c7a297e2e80 Sep 30 10:54:11 srv SM: [20535] Calling tap pause with minor 2 Sep 30 10:54:11 srv SM: [20535] ['/usr/sbin/tap-ctl', 'pause', '-p', '12281', '-m', '2'] Sep 30 10:54:11 srv SM: [20535] = 0 Sep 30 10:54:11 srv SM: [20535] lock: released /var/lock/sm/1d536c76-1ee7-41aa-93ff-7c7a297e2e80/vdi Sep 30 10:54:12 srv SM: [20545] lock: opening lock file /var/lock/sm/1d536c76-1ee7-41aa-93ff-7c7a297e2e80/vdi Sep 30 10:54:12 srv SM: [20545] lock: acquired /var/lock/sm/1d536c76-1ee7-41aa-93ff-7c7a297e2e80/vdi Sep 30 10:54:12 srv SM: [20545] Unpause for 1d536c76-1ee7-41aa-93ff-7c7a297e2e80 Sep 30 10:54:12 srv SM: [20545] Realpath: /dev/VG_XenStorage-d8c3a5f0-6446-6bc0-79d0-749a3a138662/VHD-1d536c76-1ee7-41aa-93ff-7c7a297e2e80 Sep 30 10:54:12 srv SM: [20545] Setting LVM_DEVICE to /dev/disk/by-scsid/3600c0ff000524e513777c56301000000 Sep 30 10:54:12 srv SM: [20545] lock: opening lock file /var/lock/sm/d8c3a5f0-6446-6bc0-79d0-749a3a138662/sr Sep 30 10:54:12 srv SM: [20545] LVMCache created for VG_XenStorage-d8c3a5f0-6446-6bc0-79d0-749a3a138662 Sep 30 10:54:12 srv SM: [20545] lock: opening lock file /var/lock/sm/.nil/lvm Sep 30 10:54:12 srv SM: [20545] ['/sbin/vgs', '--readonly', 'VG_XenStorage-d8c3a5f0-6446-6bc0-79d0-749a3a138662'] Sep 30 10:54:12 srv SM: [20545] pread SUCCESS Sep 30 10:54:12 srv SM: [20545] Entering _checkMetadataVolume Sep 30 10:54:12 srv SM: [20545] LVMCache: will initialize now Sep 30 10:54:12 srv SM: [20545] LVMCache: refreshing Sep 30 10:54:12 srv SM: [20545] lock: acquired /var/lock/sm/.nil/lvm Sep 30 10:54:12 srv SM: [20545] ['/sbin/lvs', '--noheadings', '--units', 'b', '-o', '+lv_tags', '/dev/VG_XenStorage-d8c3a5f0-6446-6bc0-79d0-749a3a138662'] Sep 30 10:54:12 srv SM: 
[20545] pread SUCCESS Sep 30 10:54:12 srv SM: [20545] lock: released /var/lock/sm/.nil/lvm Sep 30 10:54:12 srv SM: [20545] lock: acquired /var/lock/sm/.nil/lvm Sep 30 10:54:12 srv SM: [20545] lock: released /var/lock/sm/.nil/lvm Sep 30 10:54:12 srv SM: [20545] Calling tap unpause with minor 2 Sep 30 10:54:12 srv SM: [20545] ['/usr/sbin/tap-ctl', 'unpause', '-p', '12281', '-m', '2', '-a', 'vhd:/dev/VG_XenStorage-d8c3a5f0-6446-6bc0-79d0-749a3a138662/VHD-1d536c76-1ee7-41aa-93ff-7c7a297e2e80'] Sep 30 10:54:12 srv SM: [20545] = 0 Sep 30 10:54:12 srv SM: [20545] lock: released /var/lock/sm/1d536c76-1ee7-41aa-93ff-7c7a297e2e80/vdi

-

Your issue seems to be related to a storage problem with the VDI b3e09a17-9b08-48e5-8b47-93f16979b045. If your SR cannot be scanned, whatever the underlying cause, you won't be able to do any operation on it, whether snapshot or migration. My impression is that this problem isn't related to CBT at all.

-

@olivierlambert You're probably right. I get that error with both of this pool's physical SRs, but disk migration works fine on other pools. So iSCSI problems again?

-

@olivierlambert There's a current workaround with NBD connections set to 1 so it's not a priority. I was just looking for a way to keep an eye on the status of any work on it so I can help test, etc.

-

Hi!

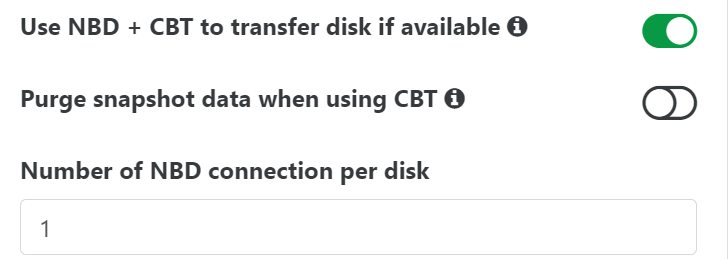

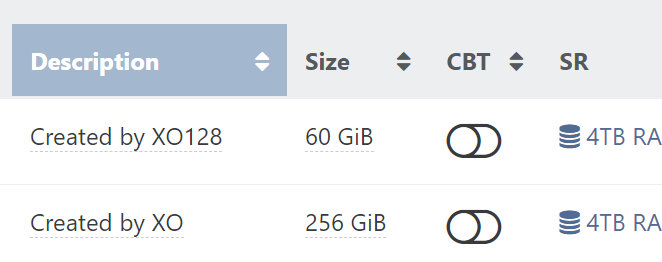

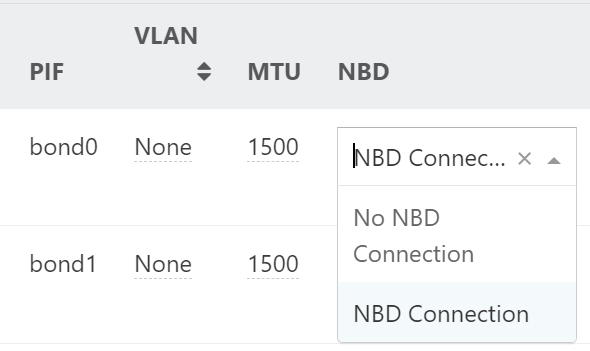

Great work with the CBT feature. I noticed it is now included in the "stable" branch which is good news. Is there a summary of the different settings, how they relate and the considerations to take when choosing these options? The XOA documentation, I think, isn't updated yet (searched for CBT with no results).

Backup view

VM disk view

Pool network view

-

- If you tick the box, CBT will be used on any NBD-enabled network (otherwise it falls back to a regular VHD export). If the box is not ticked, NBD will never be used.

- If you tick the "purge snapshot" box, the snapshot will be removed and the delta will rely on CBT metadata. Otherwise, the snapshot will be kept.

- NBD connections per disk: we can try to download multiple NBD blocks in parallel to speed things up, but in the end it seems to cause more issues than it improves the transfer speed.
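To make the way these options combine easier to follow, here is a rough sketch in Python. It is not taken from Xen Orchestra: `BackupSettings`, `plan_transfer` and the returned labels are hypothetical names, and the assumption that "purge snapshot" only takes effect when CBT is actually in use is mine.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class BackupSettings:
    # Hypothetical names for the options discussed above.
    use_nbd_cbt: bool         # the NBD + CBT checkbox
    purge_snapshot: bool      # the "purge snapshot" checkbox
    nbd_connections: int = 1  # NBD connections per disk


def plan_transfer(settings: BackupSettings, network_has_nbd: bool) -> dict:
    """Return how a delta would be transferred under the rules described above."""
    # CBT over NBD is only used when the box is ticked AND the network has NBD enabled;
    # otherwise the job falls back to a regular VHD export.
    if settings.use_nbd_cbt and network_has_nbd:
        transport = "nbd+cbt"
    else:
        transport = "vhd-export"

    # Assumption: purging only makes sense when CBT metadata can replace the snapshot.
    purge = settings.purge_snapshot and transport == "nbd+cbt"

    return {
        "transport": transport,
        "keep_snapshot": not purge,
        "delta_source": "cbt-metadata" if purge else "snapshot",
        # Parallel connections only matter for NBD transfers.
        "connections": settings.nbd_connections if transport == "nbd+cbt" else 1,
    }


if __name__ == "__main__":
    print(plan_transfer(BackupSettings(True, True), network_has_nbd=True))
    print(plan_transfer(BackupSettings(True, True), network_has_nbd=False))
```

Keeping `nbd_connections` at 1 matches the workaround mentioned earlier in the thread.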

-

To follow up on the backups that break, I have been able to create a reproducible scenario for causing backups to fail with the error message... "can't create a stream from a metadata VDI, fall back to a base"

Hardware:

- 4 XCP-NG hosts (Error is reproducible regardless of which host is hosting the VM.)

- 1 TrueNAS file server providing the NFS share the XCP-NG hosts use for running the VMs.

- 1 TrueNAS file server providing the NFS share for backing up VMs in Continuous Replication mode.

- 1 TrueNAS file server providing the NFS share for backing up VMs in Delta Backups.

Backup Order:

- Full backup in Continuous Replication mode

- Full backup in Delta Backup mode

- Multiple incremental backups in Continuous Replication mode (Not sure of the exact minimum but my servers ran the backup 7 times yesterday.)

- At this point, running an incremental backup of a Delta backup will fail with "can't create a stream from a metadata VDI, fall back to a base"

After this backup has failed, attempting to run any incremental backup will fail. Even the Continuous Replication backups that were working correctly prior to the Delta Backup attempt.

- NBD connections can be any amount.

- With NBD + CBT = True

- Purge snapshots when using CBT = True

Not purging snapshots generates the same error but also causes problems with the VDI chain when it happens.

All retention policies are set to 1.

This was tested on the current latest version of XCP-NG on all hosts and with commit 0a28a and multiple commits prior to it of the XO Community Edition.

-

@florent feedback for testing this

-

Hi,

I have a problem with a single VM running AlmaLinux 9. The VM has 2 disks: one of 50GB and one of 250GB.

Every time I run a Delta backup, the backup does succeed, but the larger disk is ALWAYS left stuck on the Control Domain and I have to manually "forget" it, or the next backup will fail, which is annoying. This does not occur with a full backup, only with delta.

I removed all previous snapshots and backups and retried, and the same thing happens.

Using XO with latest patches: commit a5967.

This issue started occurring straight after the CBT feature was added.

Using NBD = 1

Here is XO LOG for the backup:

Omitted attempts 2-7.... since they don't fit hereOct 7 11:33:43 xo-ce xo-server[11768]: 2024-10-07T11:33:43.770Z xo:backups:worker INFO starting backup Oct 7 11:33:49 xo-ce xo-server[11768]: 2024-10-07T11:33:49.717Z xo:xapi:vdi INFO found changed blocks { Oct 7 11:33:49 xo-ce xo-server[11768]: changedBlocks: <Buffer 80 00 00 00 00 00 00 00 00 00 00 00 00 00 00 00 00 00 00 00 00 00 00 00 00 00 00 00 00 00 00 00 00 00 00 00 00 00 00 00 00 00 00 00 00 00 00 00 00 00 ... 511950 more bytes> Oct 7 11:33:49 xo-ce xo-server[11768]: } Oct 7 11:34:09 xo-ce xo-server[11768]: 2024-10-07T11:34:09.098Z xo:xapi:vdi INFO found changed blocks { Oct 7 11:34:09 xo-ce xo-server[11768]: changedBlocks: <Buffer 00 00 00 00 00 00 00 00 00 00 00 00 00 00 00 00 00 00 00 00 00 00 00 00 00 00 00 00 00 00 00 00 00 00 00 00 00 00 00 00 00 00 00 00 00 00 00 00 00 00 ... 102353 more bytes> Oct 7 11:34:09 xo-ce xo-server[11768]: } Oct 7 11:34:11 xo-ce xo-server[11768]: 2024-10-07T11:34:11.070Z xo:xapi:vdi INFO OpaqueRef:c1f4b3cf-996d-45ba-80ae-59ef7c9cb8ef has been disconnected from dom0 { Oct 7 11:34:11 xo-ce xo-server[11768]: vdiRef: 'OpaqueRef:2fd1d459-46b5-473a-9549-a3e0b401209f', Oct 7 11:34:11 xo-ce xo-server[11768]: vbdRef: 'OpaqueRef:c1f4b3cf-996d-45ba-80ae-59ef7c9cb8ef' Oct 7 11:34:11 xo-ce xo-server[11768]: } Oct 7 11:40:47 xo-ce xo-server[11768]: 2024-10-07T11:40:47.534Z xo:xapi:vdi INFO OpaqueRef:7e94c0da-be46-45f8-9a6b-7e9eb04928b1 has been disconnected from dom0 { Oct 7 11:40:47 xo-ce xo-server[11768]: vdiRef: 'OpaqueRef:f8be3361-0c5c-48b4-bf51-baee4d02cb20', Oct 7 11:40:47 xo-ce xo-server[11768]: vbdRef: 'OpaqueRef:7e94c0da-be46-45f8-9a6b-7e9eb04928b1' Oct 7 11:40:47 xo-ce xo-server[11768]: } Oct 7 11:41:59 xo-ce xo-server[11768]: 2024-10-07T11:41:59.303Z xo:xapi:vdi INFO OpaqueRef:2e1231be-f65b-4fa4-bb8d-3871ddabfa87 has been disconnected from dom0 { Oct 7 11:41:59 xo-ce xo-server[11768]: vdiRef: 'OpaqueRef:cb2af589-ae7e-4b5c-b657-0c2636b3677f', Oct 7 11:41:59 xo-ce 
xo-server[11768]: vbdRef: 'OpaqueRef:2e1231be-f65b-4fa4-bb8d-3871ddabfa87' Oct 7 11:41:59 xo-ce xo-server[11768]: } Oct 7 11:42:11 xo-ce xo-server[11768]: 2024-10-07T11:42:11.742Z xo:xapi WARN retry { Oct 7 11:42:11 xo-ce xo-server[11768]: attemptNumber: 0, Oct 7 11:42:11 xo-ce xo-server[11768]: delay: 5000, Oct 7 11:42:11 xo-ce xo-server[11768]: error: XapiError: VDI_IN_USE(OpaqueRef:8673586d-a9fc-4651-883a-ad333c13dc92, destroy) Oct 7 11:42:11 xo-ce xo-server[11768]: at XapiError.wrap (file:///opt/xo/xo-builds/xen-orchestra-202410070635/packages/xen-api/_XapiError.mjs:16:12) Oct 7 11:42:11 xo-ce xo-server[11768]: at default (file:///opt/xo/xo-builds/xen-orchestra-202410070635/packages/xen-api/_getTaskResult.mjs:13:29) Oct 7 11:42:11 xo-ce xo-server[11768]: at Xapi._addRecordToCache (file:///opt/xo/xo-builds/xen-orchestra-202410070635/packages/xen-api/index.mjs:1041:24) Oct 7 11:42:11 xo-ce xo-server[11768]: at file:///opt/xo/xo-builds/xen-orchestra-202410070635/packages/xen-api/index.mjs:1075:14 Oct 7 11:42:11 xo-ce xo-server[11768]: at Array.forEach (<anonymous>) Oct 7 11:42:11 xo-ce xo-server[11768]: at Xapi._processEvents (file:///opt/xo/xo-builds/xen-orchestra-202410070635/packages/xen-api/index.mjs:1065:12) Oct 7 11:42:11 xo-ce xo-server[11768]: at Xapi._watchEvents (file:///opt/xo/xo-builds/xen-orchestra-202410070635/packages/xen-api/index.mjs:1238:14) Oct 7 11:42:11 xo-ce xo-server[11768]: at process.processTicksAndRejections (node:internal/process/task_queues:95:5) { Oct 7 11:42:11 xo-ce xo-server[11768]: code: 'VDI_IN_USE', Oct 7 11:42:11 xo-ce xo-server[11768]: params: [ 'OpaqueRef:8673586d-a9fc-4651-883a-ad333c13dc92', 'destroy' ], Oct 7 11:42:11 xo-ce xo-server[11768]: call: undefined, Oct 7 11:42:11 xo-ce xo-server[11768]: url: undefined, Oct 7 11:42:11 xo-ce xo-server[11768]: task: task { Oct 7 11:42:11 xo-ce xo-server[11768]: uuid: '7bb5adaa-5b49-2227-3ede-759420836f59', Oct 7 11:42:11 xo-ce xo-server[11768]: name_label: 'Async.VDI.destroy', Oct 7 
11:42:11 xo-ce xo-server[11768]: name_description: '', Oct 7 11:42:11 xo-ce xo-server[11768]: allowed_operations: [], Oct 7 11:42:11 xo-ce xo-server[11768]: current_operations: {}, Oct 7 11:42:11 xo-ce xo-server[11768]: created: '20241007T11:41:59Z', Oct 7 11:42:11 xo-ce xo-server[11768]: finished: '20241007T11:42:11Z', Oct 7 11:42:11 xo-ce xo-server[11768]: status: 'failure', Oct 7 11:42:11 xo-ce xo-server[11768]: resident_on: 'OpaqueRef:010eebba-be27-489f-9f87-d06c8b675f19', Oct 7 11:42:11 xo-ce xo-server[11768]: progress: 1, Oct 7 11:42:11 xo-ce xo-server[11768]: type: '<none/>', Oct 7 11:42:11 xo-ce xo-server[11768]: result: '', Oct 7 11:42:11 xo-ce xo-server[11768]: error_info: [Array], Oct 7 11:42:11 xo-ce xo-server[11768]: other_config: {}, Oct 7 11:42:11 xo-ce xo-server[11768]: subtask_of: 'OpaqueRef:NULL', Oct 7 11:42:11 xo-ce xo-server[11768]: subtasks: [], Oct 7 11:42:11 xo-ce xo-server[11768]: backtrace: '(((process xapi)(filename ocaml/xapi/message_forwarding.ml)(line 4711))((process xapi)(filename lib/xapi-stdext-pervasives/pervasiveext.ml)(line 24))((process xapi)(filename ocaml/xapi/rbac.ml)(line 205))((process xapi)(filename ocaml/xapi/server_helpers.ml)(line 95)))' Oct 7 11:42:11 xo-ce xo-server[11768]: } Oct 7 11:42:11 xo-ce xo-server[11768]: }, Oct 7 11:42:11 xo-ce xo-server[11768]: fn: 'destroy', Oct 7 11:42:11 xo-ce xo-server[11768]: arguments: [ 'OpaqueRef:8673586d-a9fc-4651-883a-ad333c13dc92' ], Oct 7 11:42:11 xo-ce xo-server[11768]: pool: { Oct 7 11:42:11 xo-ce xo-server[11768]: uuid: 'fe688bb2-b9ac-db7b-737a-cc457195f095', Oct 7 11:42:11 xo-ce xo-server[11768]: name_label: 'SGO Pool' Oct 7 11:42:11 xo-ce xo-server[11768]: } Oct 7 11:42:11 xo-ce xo-server[11768]: } Oct 7 11:42:42 xo-ce xo-server[11768]: 2024-10-07T11:42:42.061Z xo:xapi:vdi WARN Couldn't disconnect OpaqueRef:8673586d-a9fc-4651-883a-ad333c13dc92 from dom0 { Oct 7 11:42:42 xo-ce xo-server[11768]: vdiRef: 'OpaqueRef:8673586d-a9fc-4651-883a-ad333c13dc92', Oct 7 11:42:42 xo-ce 
xo-server[11768]: vbdRef: 'OpaqueRef:2b0c22e7-2794-471a-bd85-145cf302f8e4', Oct 7 11:42:42 xo-ce xo-server[11768]: err: XapiError: OPERATION_NOT_ALLOWED(VBD '0da0be96-45ef-684e-b956-7b5463cd9b09' still attached to 'f3fd6bd3-a622-4838-a7b6-e6e5e2cc6d04') Oct 7 11:42:42 xo-ce xo-server[11768]: at XapiError.wrap (file:///opt/xo/xo-builds/xen-orchestra-202410070635/packages/xen-api/_XapiError.mjs:16:12) Oct 7 11:42:42 xo-ce xo-server[11768]: at file:///opt/xo/xo-builds/xen-orchestra-202410070635/packages/xen-api/transports/json-rpc.mjs:38:21 Oct 7 11:42:42 xo-ce xo-server[11768]: at process.processTicksAndRejections (node:internal/process/task_queues:95:5) { Oct 7 11:42:42 xo-ce xo-server[11768]: code: 'OPERATION_NOT_ALLOWED', Oct 7 11:42:42 xo-ce xo-server[11768]: params: [ Oct 7 11:42:42 xo-ce xo-server[11768]: "VBD '0da0be96-45ef-684e-b956-7b5463cd9b09' still attached to 'f3fd6bd3-a622-4838-a7b6-e6e5e2cc6d04'" Oct 7 11:42:42 xo-ce xo-server[11768]: ], Oct 7 11:42:42 xo-ce xo-server[11768]: call: { method: 'VBD.destroy', params: [Array] }, Oct 7 11:42:42 xo-ce xo-server[11768]: url: undefined, Oct 7 11:42:42 xo-ce xo-server[11768]: task: undefined Oct 7 11:42:42 xo-ce xo-server[11768]: } Oct 7 11:42:42 xo-ce xo-server[11768]: } Oct 7 11:46:54 xo-ce xo-server[11768]: 2024-10-07T11:46:54.842Z xo:xapi WARN retry { Oct 7 11:46:54 xo-ce xo-server[11768]: attemptNumber: 8, Oct 7 11:46:54 xo-ce xo-server[11768]: delay: 5000, Oct 7 11:46:54 xo-ce xo-server[11768]: error: XapiError: VDI_IN_USE(OpaqueRef:8673586d-a9fc-4651-883a-ad333c13dc92, destroy) Oct 7 11:46:54 xo-ce xo-server[11768]: at XapiError.wrap (file:///opt/xo/xo-builds/xen-orchestra-202410070635/packages/xen-api/_XapiError.mjs:16:12) Oct 7 11:46:54 xo-ce xo-server[11768]: at default (file:///opt/xo/xo-builds/xen-orchestra-202410070635/packages/xen-api/_getTaskResult.mjs:13:29) Oct 7 11:46:54 xo-ce xo-server[11768]: at Xapi._addRecordToCache 
(file:///opt/xo/xo-builds/xen-orchestra-202410070635/packages/xen-api/index.mjs:1041:24) Oct 7 11:46:54 xo-ce xo-server[11768]: at file:///opt/xo/xo-builds/xen-orchestra-202410070635/packages/xen-api/index.mjs:1075:14 Oct 7 11:46:54 xo-ce xo-server[11768]: at Array.forEach (<anonymous>) Oct 7 11:46:54 xo-ce xo-server[11768]: at Xapi._processEvents (file:///opt/xo/xo-builds/xen-orchestra-202410070635/packages/xen-api/index.mjs:1065:12) Oct 7 11:46:54 xo-ce xo-server[11768]: at Xapi._watchEvents (file:///opt/xo/xo-builds/xen-orchestra-202410070635/packages/xen-api/index.mjs:1238:14) Oct 7 11:46:54 xo-ce xo-server[11768]: at process.processTicksAndRejections (node:internal/process/task_queues:95:5) { Oct 7 11:46:54 xo-ce xo-server[11768]: code: 'VDI_IN_USE', Oct 7 11:46:54 xo-ce xo-server[11768]: params: [ 'OpaqueRef:8673586d-a9fc-4651-883a-ad333c13dc92', 'destroy' ], Oct 7 11:46:54 xo-ce xo-server[11768]: call: undefined, Oct 7 11:46:54 xo-ce xo-server[11768]: url: undefined, Oct 7 11:46:54 xo-ce xo-server[11768]: task: task { Oct 7 11:46:54 xo-ce xo-server[11768]: uuid: '70afe347-815d-806b-0eed-dd6239adcf0e', Oct 7 11:46:54 xo-ce xo-server[11768]: name_label: 'Async.VDI.destroy', Oct 7 11:46:54 xo-ce xo-server[11768]: name_description: '', Oct 7 11:46:54 xo-ce xo-server[11768]: allowed_operations: [], Oct 7 11:46:54 xo-ce xo-server[11768]: current_operations: {}, Oct 7 11:46:54 xo-ce xo-server[11768]: created: '20241007T11:46:54Z', Oct 7 11:46:54 xo-ce xo-server[11768]: finished: '20241007T11:46:54Z', Oct 7 11:46:54 xo-ce xo-server[11768]: status: 'failure', Oct 7 11:46:54 xo-ce xo-server[11768]: resident_on: 'OpaqueRef:010eebba-be27-489f-9f87-d06c8b675f19', Oct 7 11:46:54 xo-ce xo-server[11768]: progress: 1, Oct 7 11:46:54 xo-ce xo-server[11768]: type: '<none/>', Oct 7 11:46:54 xo-ce xo-server[11768]: result: '', Oct 7 11:46:54 xo-ce xo-server[11768]: error_info: [Array], Oct 7 11:46:54 xo-ce xo-server[11768]: other_config: {}, Oct 7 11:46:54 xo-ce 
xo-server[11768]: subtask_of: 'OpaqueRef:NULL', Oct 7 11:46:54 xo-ce xo-server[11768]: subtasks: [], Oct 7 11:46:54 xo-ce xo-server[11768]: backtrace: '(((process xapi)(filename ocaml/xapi/message_forwarding.ml)(line 4711))((process xapi)(filename lib/xapi-stdext-pervasives/pervasiveext.ml)(line 24))((process xapi)(filename ocaml/xapi/rbac.ml)(line 205))((process xapi)(filename ocaml/xapi/server_helpers.ml)(line 95)))' Oct 7 11:46:54 xo-ce xo-server[11768]: } Oct 7 11:46:54 xo-ce xo-server[11768]: }, Oct 7 11:46:54 xo-ce xo-server[11768]: fn: 'destroy', Oct 7 11:46:54 xo-ce xo-server[11768]: arguments: [ 'OpaqueRef:8673586d-a9fc-4651-883a-ad333c13dc92' ], Oct 7 11:46:54 xo-ce xo-server[11768]: pool: { Oct 7 11:46:54 xo-ce xo-server[11768]: uuid: 'fe688bb2-b9ac-db7b-737a-cc457195f095', Oct 7 11:46:54 xo-ce xo-server[11768]: name_label: 'SGO Pool' Oct 7 11:46:54 xo-ce xo-server[11768]: } Oct 7 11:46:54 xo-ce xo-server[11768]: } Oct 7 11:47:25 xo-ce xo-server[11768]: 2024-10-07T11:47:25.194Z xo:xapi:vdi WARN Couldn't disconnect OpaqueRef:8673586d-a9fc-4651-883a-ad333c13dc92 from dom0 { Oct 7 11:47:25 xo-ce xo-server[11768]: vdiRef: 'OpaqueRef:8673586d-a9fc-4651-883a-ad333c13dc92', Oct 7 11:47:25 xo-ce xo-server[11768]: vbdRef: 'OpaqueRef:2b0c22e7-2794-471a-bd85-145cf302f8e4', Oct 7 11:47:25 xo-ce xo-server[11768]: err: XapiError: OPERATION_NOT_ALLOWED(VBD '0da0be96-45ef-684e-b956-7b5463cd9b09' still attached to 'f3fd6bd3-a622-4838-a7b6-e6e5e2cc6d04') Oct 7 11:47:25 xo-ce xo-server[11768]: at XapiError.wrap (file:///opt/xo/xo-builds/xen-orchestra-202410070635/packages/xen-api/_XapiError.mjs:16:12) Oct 7 11:47:25 xo-ce xo-server[11768]: at file:///opt/xo/xo-builds/xen-orchestra-202410070635/packages/xen-api/transports/json-rpc.mjs:38:21 Oct 7 11:47:25 xo-ce xo-server[11768]: at process.processTicksAndRejections (node:internal/process/task_queues:95:5) { Oct 7 11:47:25 xo-ce xo-server[11768]: code: 'OPERATION_NOT_ALLOWED', Oct 7 11:47:25 xo-ce xo-server[11768]: params: [ 
Oct 7 11:47:25 xo-ce xo-server[11768]: "VBD '0da0be96-45ef-684e-b956-7b5463cd9b09' still attached to 'f3fd6bd3-a622-4838-a7b6-e6e5e2cc6d04'" Oct 7 11:47:25 xo-ce xo-server[11768]: ], Oct 7 11:47:25 xo-ce xo-server[11768]: call: { method: 'VBD.destroy', params: [Array] }, Oct 7 11:47:25 xo-ce xo-server[11768]: url: undefined, Oct 7 11:47:25 xo-ce xo-server[11768]: task: undefined Oct 7 11:47:25 xo-ce xo-server[11768]: } Oct 7 11:47:25 xo-ce xo-server[11768]: } Oct 7 11:47:30 xo-ce xo-server[11768]: 2024-10-07T11:47:30.323Z xo:xapi:vm WARN VM_destroy: failed to destroy VDI { Oct 7 11:47:30 xo-ce xo-server[11768]: error: XapiError: VDI_IN_USE(OpaqueRef:8673586d-a9fc-4651-883a-ad333c13dc92, destroy) Oct 7 11:47:30 xo-ce xo-server[11768]: at XapiError.wrap (file:///opt/xo/xo-builds/xen-orchestra-202410070635/packages/xen-api/_XapiError.mjs:16:12) Oct 7 11:47:30 xo-ce xo-server[11768]: at default (file:///opt/xo/xo-builds/xen-orchestra-202410070635/packages/xen-api/_getTaskResult.mjs:13:29) Oct 7 11:47:30 xo-ce xo-server[11768]: at Xapi._addRecordToCache (file:///opt/xo/xo-builds/xen-orchestra-202410070635/packages/xen-api/index.mjs:1041:24) Oct 7 11:47:30 xo-ce xo-server[11768]: at file:///opt/xo/xo-builds/xen-orchestra-202410070635/packages/xen-api/index.mjs:1075:14 Oct 7 11:47:30 xo-ce xo-server[11768]: at Array.forEach (<anonymous>) Oct 7 11:47:30 xo-ce xo-server[11768]: at Xapi._processEvents (file:///opt/xo/xo-builds/xen-orchestra-202410070635/packages/xen-api/index.mjs:1065:12) Oct 7 11:47:30 xo-ce xo-server[11768]: at Xapi._watchEvents (file:///opt/xo/xo-builds/xen-orchestra-202410070635/packages/xen-api/index.mjs:1238:14) Oct 7 11:47:30 xo-ce xo-server[11768]: at process.processTicksAndRejections (node:internal/process/task_queues:95:5) { Oct 7 11:47:30 xo-ce xo-server[11768]: code: 'VDI_IN_USE', Oct 7 11:47:30 xo-ce xo-server[11768]: params: [ 'OpaqueRef:8673586d-a9fc-4651-883a-ad333c13dc92', 'destroy' ], Oct 7 11:47:30 xo-ce xo-server[11768]: call: undefined, 
Oct 7 11:47:30 xo-ce xo-server[11768]: url: undefined, Oct 7 11:47:30 xo-ce xo-server[11768]: task: task { Oct 7 11:47:30 xo-ce xo-server[11768]: uuid: '5c688ec4-5523-c1f1-89dc-a881abdbaee1', Oct 7 11:47:30 xo-ce xo-server[11768]: name_label: 'Async.VDI.destroy', Oct 7 11:47:30 xo-ce xo-server[11768]: name_description: '', Oct 7 11:47:30 xo-ce xo-server[11768]: allowed_operations: [], Oct 7 11:47:30 xo-ce xo-server[11768]: current_operations: {}, Oct 7 11:47:30 xo-ce xo-server[11768]: created: '20241007T11:47:30Z', Oct 7 11:47:30 xo-ce xo-server[11768]: finished: '20241007T11:47:30Z', Oct 7 11:47:30 xo-ce xo-server[11768]: status: 'failure', Oct 7 11:47:30 xo-ce xo-server[11768]: resident_on: 'OpaqueRef:010eebba-be27-489f-9f87-d06c8b675f19', Oct 7 11:47:30 xo-ce xo-server[11768]: progress: 1, Oct 7 11:47:30 xo-ce xo-server[11768]: type: '<none/>', Oct 7 11:47:30 xo-ce xo-server[11768]: result: '', Oct 7 11:47:30 xo-ce xo-server[11768]: error_info: [Array], Oct 7 11:47:30 xo-ce xo-server[11768]: other_config: {}, Oct 7 11:47:30 xo-ce xo-server[11768]: subtask_of: 'OpaqueRef:NULL', Oct 7 11:47:30 xo-ce xo-server[11768]: subtasks: [], Oct 7 11:47:30 xo-ce xo-server[11768]: backtrace: '(((process xapi)(filename ocaml/xapi/message_forwarding.ml)(line 4711))((process xapi)(filename lib/xapi-stdext-pervasives/pervasiveext.ml)(line 24))((process xapi)(filename ocaml/xapi/rbac.ml)(line 205))((process xapi)(filename ocaml/xapi/server_helpers.ml)(line 95)))' Oct 7 11:47:30 xo-ce xo-server[11768]: } Oct 7 11:47:30 xo-ce xo-server[11768]: }, Oct 7 11:47:30 xo-ce xo-server[11768]: vdiRef: 'OpaqueRef:8673586d-a9fc-4651-883a-ad333c13dc92', Oct 7 11:47:30 xo-ce xo-server[11768]: vmRef: 'OpaqueRef:239e4aa1-b3ce-4fa0-abe4-3fb6803a990e' Oct 7 11:47:30 xo-ce xo-server[11768]: } Oct 7 11:47:30 xo-ce xo-server[11768]: 2024-10-07T11:47:30.474Z xo:backups:MixinBackupWriter INFO deleting unused VHD { Oct 7 11:47:30 xo-ce xo-server[11768]: path: 
'/xo-vm-backups/d5d0334c-a7e3-b29f-51ca-1be9c211d2c1/vdis/5a7a5647-d2ca-4014-b87b-06c621c09a20/9f8da2fd-a08d-43c2-a5b1-ce6125cf52f5/20241007T111850Z.vhd' Oct 7 11:47:30 xo-ce xo-server[11768]: } Oct 7 11:47:30 xo-ce xo-server[11768]: 2024-10-07T11:47:30.475Z xo:backups:MixinBackupWriter INFO deleting unused VHD { Oct 7 11:47:30 xo-ce xo-server[11768]: path: '/xo-vm-backups/d5d0334c-a7e3-b29f-51ca-1be9c211d2c1/vdis/5a7a5647-d2ca-4014-b87b-06c621c09a20/c6853f48-4b06-4c34-9707-b68f9054e6fc/20241007T111850Z.vhd' Oct 7 11:47:30 xo-ce xo-server[11768]: } Oct 7 11:47:30 xo-ce xo-server[11768]: 2024-10-07T11:47:30.568Z xo:backups:worker INFO backup has ended Oct 7 11:48:00 xo-ce xo-server[11768]: 2024-10-07T11:48:00.630Z xo:backups:worker WARN worker process did not exit automatically, forcing... Oct 7 11:48:00 xo-ce xo-server[11768]: 2024-10-07T11:48:00.631Z xo:backups:worker INFO process will exit { Oct 7 11:48:00 xo-ce xo-server[11768]: duration: 856860228, Oct 7 11:48:00 xo-ce xo-server[11768]: exitCode: 0, Oct 7 11:48:00 xo-ce xo-server[11768]: resourceUsage: { Oct 7 11:48:00 xo-ce xo-server[11768]: userCPUTime: 771667771, Oct 7 11:48:00 xo-ce xo-server[11768]: systemCPUTime: 176351529, Oct 7 11:48:00 xo-ce xo-server[11768]: maxRSS: 0, Oct 7 11:48:00 xo-ce xo-server[11768]: sharedMemorySize: 0, Oct 7 11:48:00 xo-ce xo-server[11768]: unsharedDataSize: 0, Oct 7 11:48:00 xo-ce xo-server[11768]: unsharedStackSize: 0, Oct 7 11:48:00 xo-ce xo-server[11768]: minorPageFault: 3322393, Oct 7 11:48:00 xo-ce xo-server[11768]: majorPageFault: 0, Oct 7 11:48:00 xo-ce xo-server[11768]: swappedOut: 0, Oct 7 11:48:00 xo-ce xo-server[11768]: fsRead: 2416, Oct 7 11:48:00 xo-ce xo-server[11768]: fsWrite: 209789336, Oct 7 11:48:00 xo-ce xo-server[11768]: ipcSent: 0, Oct 7 11:48:00 xo-ce xo-server[11768]: ipcReceived: 0, Oct 7 11:48:00 xo-ce xo-server[11768]: signalsCount: 0, Oct 7 11:48:00 xo-ce xo-server[11768]: voluntaryContextSwitches: 527248, Oct 7 11:48:00 xo-ce xo-server[11768]: 
involuntaryContextSwitches: 342752 Oct 7 11:48:00 xo-ce xo-server[11768]: }, Oct 7 11:48:00 xo-ce xo-server[11768]: summary: { duration: '14m', cpuUsage: '111%', memoryUsage: '0 B' } Oct 7 11:48:00 xo-ce xo-server[11768]: }

-

Can you try with XOA on latest channel and see if you have the same behavior?

-

@olivierlambert We have the same issue. I am just updating to latest. However, I did not see any changes on GitHub regarding backups; is there a change inside this build?

-

@olivierlambert

Same behaviour on XOA: full backup is OK, delta succeeds, but the 2nd disk is stuck on the control domain.

Current version: 5.98.1 - XOA build: 20241004

PS: I just noticed that while the delta ran successfully, the backup log says:

Retry the VM backup due to an error

With message:

"The value of \"offset\" is out of range. It must be >= 0 and <= 102399. Received 102400"

The 2nd attempt ran successfully, while leaving the VDI orphaned, on both XO and XOA.
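For anyone trying to interpret the `offset` message quoted above, here is a small Python sketch of how a changed-block bitmap could be read. It rests on assumptions, not on the XO source: one bit per 64 KiB block, most significant bit first (which would fit the leading `80` byte in the `changedBlocks` buffers in the logs), and exact GiB disk sizes. The function names are hypothetical.

```python
from typing import List

BLOCK_SIZE = 64 * 1024  # assumption: each bit of the bitmap covers one 64 KiB block


def bitmap_length(disk_bytes: int) -> int:
    """Number of bitmap bytes needed to cover a disk of the given size."""
    blocks = -(-disk_bytes // BLOCK_SIZE)  # ceiling division
    return -(-blocks // 8)


def is_changed(bitmap: bytes, block: int) -> bool:
    """Tell whether a block is marked as changed (MSB-first bit order assumed)."""
    offset = block // 8
    if not 0 <= offset < len(bitmap):
        # Same kind of failure as the quoted message: the offset is outside the buffer.
        raise IndexError(
            f"offset {offset} is out of range, must be >= 0 and <= {len(bitmap) - 1}"
        )
    return bool(bitmap[offset] & (0x80 >> (block % 8)))


def changed_blocks(bitmap: bytes) -> List[int]:
    """List the indexes of every block flagged in the bitmap."""
    return [b for b in range(len(bitmap) * 8) if is_changed(bitmap, b)]


if __name__ == "__main__":
    print(bitmap_length(50 * 2**30))    # bitmap bytes for a 50 GiB disk
    print(bitmap_length(250 * 2**30))   # bitmap bytes for a 250 GiB disk
    print(changed_blocks(bytes([0x80, 0x00])))
```

Under these assumptions a 50 GiB disk needs a 102400-byte bitmap, whose last valid offset is 102399, so the message would be consistent with a read one byte past the end of that bitmap. That is only a reading of the numbers, not a confirmed diagnosis.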

Oct 07 08:44:39 xoa xo-server[4796]: 2024-10-07T13:44:39.083Z xo:backups:worker INFO starting backup Oct 07 08:54:50 xoa xo-server[4796]: 2024-10-07T13:54:50.199Z xo:xapi:vdi INFO OpaqueRef:841ceab7-91b7-48a4-a85c-f4505c1614bd has been disconnected from dom0 { Oct 07 08:54:50 xoa xo-server[4796]: vdiRef: 'OpaqueRef:01c25ee1-a119-4f1d-bfdc-f294a83fee0b', Oct 07 08:54:50 xoa xo-server[4796]: vbdRef: 'OpaqueRef:841ceab7-91b7-48a4-a85c-f4505c1614bd' Oct 07 08:54:50 xoa xo-server[4796]: } Oct 07 08:54:58 xoa xo-server[4796]: 2024-10-07T13:54:58.283Z xo:backups:worker INFO backup has ended Oct 07 08:55:09 xoa xo-server[4796]: 2024-10-07T13:55:09.682Z xo:backups:worker INFO process will exit { Oct 07 08:55:09 xoa xo-server[4796]: duration: 630597722, Oct 07 08:55:09 xoa xo-server[4796]: exitCode: 0, Oct 07 08:55:09 xoa xo-server[4796]: resourceUsage: { Oct 07 08:55:09 xoa xo-server[4796]: userCPUTime: 709081409, Oct 07 08:55:09 xoa xo-server[4796]: systemCPUTime: 152978165, Oct 07 08:55:09 xoa xo-server[4796]: maxRSS: 99824, Oct 07 08:55:09 xoa xo-server[4796]: sharedMemorySize: 0, Oct 07 08:55:09 xoa xo-server[4796]: unsharedDataSize: 0, Oct 07 08:55:09 xoa xo-server[4796]: unsharedStackSize: 0, Oct 07 08:55:09 xoa xo-server[4796]: minorPageFault: 1526509, Oct 07 08:55:09 xoa xo-server[4796]: majorPageFault: 1, Oct 07 08:55:09 xoa xo-server[4796]: swappedOut: 0, Oct 07 08:55:09 xoa xo-server[4796]: fsRead: 4736, Oct 07 08:55:09 xoa xo-server[4796]: fsWrite: 209788416, Oct 07 08:55:09 xoa xo-server[4796]: ipcSent: 0, Oct 07 08:55:09 xoa xo-server[4796]: ipcReceived: 0, Oct 07 08:55:09 xoa xo-server[4796]: signalsCount: 0, Oct 07 08:55:09 xoa xo-server[4796]: voluntaryContextSwitches: 397016, Oct 07 08:55:09 xoa xo-server[4796]: involuntaryContextSwitches: 441949 Oct 07 08:55:09 xoa xo-server[4796]: }, Oct 07 08:55:09 xoa xo-server[4796]: summary: { duration: '11m', cpuUsage: '137%', memoryUsage: '97.48 MiB' } Oct 07 08:55:09 xoa xo-server[4796]: } Oct 07 08:55:47 xoa 
xo-server[6339]: 2024-10-07T13:55:47.446Z xo:backups:worker INFO starting backup Oct 07 08:55:53 xoa xo-server[6339]: 2024-10-07T13:55:53.223Z xo:xapi:vdi INFO found changed blocks { Oct 07 08:55:53 xoa xo-server[6339]: changedBlocks: <Buffer 80 00 00 00 00 00 00 00 00 00 00 00 00 00 00 00 00 00 00 00 00 00 00 00 00 00 00 00 00 00 00 00 00 00 00 00 00 00 00 00 00 00 00 00 00 00 00 00 00 00 ... 511950 more bytes> Oct 07 08:55:53 xoa xo-server[6339]: } Oct 07 08:56:11 xoa xo-server[6339]: 2024-10-07T13:56:11.907Z xo:xapi:vdi INFO found changed blocks { Oct 07 08:56:11 xoa xo-server[6339]: changedBlocks: <Buffer 00 00 00 00 00 00 00 00 00 00 00 00 00 00 00 00 00 00 00 00 00 00 00 00 00 00 00 00 00 00 00 00 00 00 00 00 00 00 00 00 00 00 00 00 00 00 00 00 00 00 ... 102353 more bytes> Oct 07 08:56:11 xoa xo-server[6339]: } Oct 07 08:56:14 xoa xo-server[6339]: 2024-10-07T13:56:14.369Z xo:xapi:vdi INFO OpaqueRef:18023c91-93c8-489b-85e4-f2bda338258c has been disconnected from dom0 { Oct 07 08:56:14 xoa xo-server[6339]: vdiRef: 'OpaqueRef:b438dd7b-d27c-4184-99e5-fddfb44901b0', Oct 07 08:56:14 xoa xo-server[6339]: vbdRef: 'OpaqueRef:18023c91-93c8-489b-85e4-f2bda338258c' Oct 07 08:56:14 xoa xo-server[6339]: } Oct 07 09:07:42 xoa xo-server[6339]: 2024-10-07T14:07:42.981Z xo:xapi WARN retry { Oct 07 09:07:42 xoa xo-server[6339]: attemptNumber: 0, Oct 07 09:07:42 xoa xo-server[6339]: delay: 5000, Oct 07 09:07:42 xoa xo-server[6339]: error: XapiError: VDI_IN_USE(OpaqueRef:69e0e65f-94ee-468a-b919-2b22d3db670a, destroy) Oct 07 09:07:42 xoa xo-server[6339]: at XapiError.wrap (file:///usr/local/lib/node_modules/xo-server/node_modules/xen-api/_XapiError.mjs:16:12) Oct 07 09:07:42 xoa xo-server[6339]: at default (file:///usr/local/lib/node_modules/xo-server/node_modules/xen-api/_getTaskResult.mjs:13:29) Oct 07 09:07:42 xoa xo-server[6339]: at Xapi._addRecordToCache (file:///usr/local/lib/node_modules/xo-server/node_modules/xen-api/index.mjs:1076:24) Oct 07 09:07:42 xoa xo-server[6339]: 
at file:///usr/local/lib/node_modules/xo-server/node_modules/xen-api/index.mjs:1110:14 Oct 07 09:07:42 xoa xo-server[6339]: at Array.forEach (<anonymous>) Oct 07 09:07:42 xoa xo-server[6339]: at Xapi._processEvents (file:///usr/local/lib/node_modules/xo-server/node_modules/xen-api/index.mjs:1100:12) Oct 07 09:07:42 xoa xo-server[6339]: at Xapi._watchEvents (file:///usr/local/lib/node_modules/xo-server/node_modules/xen-api/index.mjs:1273:14) Oct 07 09:07:42 xoa xo-server[6339]: at process.processTicksAndRejections (node:internal/process/task_queues:95:5) { Oct 07 09:07:42 xoa xo-server[6339]: code: 'VDI_IN_USE', Oct 07 09:07:42 xoa xo-server[6339]: params: [ 'OpaqueRef:69e0e65f-94ee-468a-b919-2b22d3db670a', 'destroy' ], Oct 07 09:07:42 xoa xo-server[6339]: call: undefined, Oct 07 09:07:42 xoa xo-server[6339]: url: undefined, Oct 07 09:07:42 xoa xo-server[6339]: task: task { Oct 07 09:07:42 xoa xo-server[6339]: uuid: '30d84ccc-fe22-fd16-6697-4615df096ee6', Oct 07 09:07:42 xoa xo-server[6339]: name_label: 'Async.VDI.destroy', Oct 07 09:07:42 xoa xo-server[6339]: name_description: '', Oct 07 09:07:42 xoa xo-server[6339]: allowed_operations: [], Oct 07 09:07:42 xoa xo-server[6339]: current_operations: {}, Oct 07 09:07:42 xoa xo-server[6339]: created: '20241007T14:07:33Z', Oct 07 09:07:42 xoa xo-server[6339]: finished: '20241007T14:07:42Z', Oct 07 09:07:42 xoa xo-server[6339]: status: 'failure', Oct 07 09:07:42 xoa xo-server[6339]: resident_on: 'OpaqueRef:010eebba-be27-489f-9f87-d06c8b675f19', Oct 07 09:07:42 xoa xo-server[6339]: progress: 1, Oct 07 09:07:42 xoa xo-server[6339]: type: '<none/>', Oct 07 09:07:42 xoa xo-server[6339]: result: '', Oct 07 09:07:42 xoa xo-server[6339]: error_info: [Array], Oct 07 09:07:42 xoa xo-server[6339]: other_config: {}, Oct 07 09:07:42 xoa xo-server[6339]: subtask_of: 'OpaqueRef:NULL', Oct 07 09:07:42 xoa xo-server[6339]: subtasks: [], Oct 07 09:07:42 xoa xo-server[6339]: backtrace: '(((process xapi)(filename 
ocaml/xapi/message_forwarding.ml)(line 4711))((process xapi)(filename lib/xapi-stdext-pervasives/pervasiveext.ml)(line 24))((process xapi)(filename ocaml/xapi/rbac.ml)(line 205))((process xapi)(filename o> Oct 07 09:07:42 xoa xo-server[6339]: } Oct 07 09:07:42 xoa xo-server[6339]: }, Oct 07 09:07:42 xoa xo-server[6339]: fn: 'destroy', Oct 07 09:07:42 xoa xo-server[6339]: arguments: [ 'OpaqueRef:69e0e65f-94ee-468a-b919-2b22d3db670a' ], Oct 07 09:07:42 xoa xo-server[6339]: pool: { Oct 07 09:07:42 xoa xo-server[6339]: uuid: 'fe688bb2-b9ac-db7b-737a-cc457195f095', Oct 07 09:07:42 xoa xo-server[6339]: name_label: 'SGO Pool' Oct 07 09:07:42 xoa xo-server[6339]: } Oct 07 09:07:42 xoa xo-server[6339]: } Oct 07 09:08:28 xoa xo-server[6339]: 2024-10-07T14:08:28.493Z xo:xapi:vm WARN VM_destroy: failed to destroy VDI { Oct 07 09:08:28 xoa xo-server[6339]: error: XapiError: VDI_IN_USE(OpaqueRef:69e0e65f-94ee-468a-b919-2b22d3db670a, destroy) Oct 07 09:08:28 xoa xo-server[6339]: at XapiError.wrap (file:///usr/local/lib/node_modules/xo-server/node_modules/xen-api/_XapiError.mjs:16:12) Oct 07 09:08:28 xoa xo-server[6339]: at default (file:///usr/local/lib/node_modules/xo-server/node_modules/xen-api/_getTaskResult.mjs:13:29) Oct 07 09:08:28 xoa xo-server[6339]: at Xapi._addRecordToCache (file:///usr/local/lib/node_modules/xo-server/node_modules/xen-api/index.mjs:1076:24) Oct 07 09:08:28 xoa xo-server[6339]: at file:///usr/local/lib/node_modules/xo-server/node_modules/xen-api/index.mjs:1110:14 Oct 07 09:08:28 xoa xo-server[6339]: at Array.forEach (<anonymous>) Oct 07 09:08:28 xoa xo-server[6339]: at Xapi._processEvents (file:///usr/local/lib/node_modules/xo-server/node_modules/xen-api/index.mjs:1100:12) Oct 07 09:08:28 xoa xo-server[6339]: at Xapi._watchEvents (file:///usr/local/lib/node_modules/xo-server/node_modules/xen-api/index.mjs:1273:14) Oct 07 09:08:28 xoa xo-server[6339]: at process.processTicksAndRejections (node:internal/process/task_queues:95:5) { Oct 07 09:08:28 xoa 
xo-server[6339]: code: 'VDI_IN_USE', Oct 07 09:08:28 xoa xo-server[6339]: params: [ 'OpaqueRef:69e0e65f-94ee-468a-b919-2b22d3db670a', 'destroy' ], Oct 07 09:08:28 xoa xo-server[6339]: call: undefined, Oct 07 09:08:28 xoa xo-server[6339]: url: undefined, Oct 07 09:08:28 xoa xo-server[6339]: task: task { Oct 07 09:08:28 xoa xo-server[6339]: uuid: '76ceadda-070d-3e2e-282b-76c58abd13b1', Oct 07 09:08:28 xoa xo-server[6339]: name_label: 'Async.VDI.destroy', Oct 07 09:08:28 xoa xo-server[6339]: name_description: '', Oct 07 09:08:28 xoa xo-server[6339]: allowed_operations: [], Oct 07 09:08:28 xoa xo-server[6339]: current_operations: {}, Oct 07 09:08:28 xoa xo-server[6339]: created: '20241007T14:08:28Z', Oct 07 09:08:28 xoa xo-server[6339]: finished: '20241007T14:08:28Z', Oct 07 09:08:28 xoa xo-server[6339]: status: 'failure', Oct 07 09:08:28 xoa xo-server[6339]: resident_on: 'OpaqueRef:010eebba-be27-489f-9f87-d06c8b675f19', Oct 07 09:08:28 xoa xo-server[6339]: progress: 1, Oct 07 09:08:28 xoa xo-server[6339]: type: '<none/>', Oct 07 09:08:28 xoa xo-server[6339]: result: '', Oct 07 09:08:28 xoa xo-server[6339]: error_info: [Array], Oct 07 09:08:28 xoa xo-server[6339]: other_config: {}, Oct 07 09:08:28 xoa xo-server[6339]: subtask_of: 'OpaqueRef:NULL', Oct 07 09:08:28 xoa xo-server[6339]: subtasks: [], Oct 07 09:08:28 xoa xo-server[6339]: backtrace: '(((process xapi)(filename ocaml/xapi/message_forwarding.ml)(line 4711))((process xapi)(filename lib/xapi-stdext-pervasives/pervasiveext.ml)(line 24))((process xapi)(filename ocaml/xapi/rbac.ml)(line 205))((process xapi)(filename o> Oct 07 09:08:28 xoa xo-server[6339]: } Oct 07 09:08:28 xoa xo-server[6339]: }, Oct 07 09:08:28 xoa xo-server[6339]: vdiRef: 'OpaqueRef:69e0e65f-94ee-468a-b919-2b22d3db670a', Oct 07 09:08:28 xoa xo-server[6339]: vmRef: 'OpaqueRef:c246a561-2409-4e72-900c-b2d259707e22' Oct 07 09:08:28 xoa xo-server[6339]: } Oct 07 09:08:28 xoa xo-server[6339]: 2024-10-07T14:08:28.677Z xo:backups:MixinBackupWriter INFO 
deleting unused VHD { Oct 07 09:08:28 xoa xo-server[6339]: path: '/xo-vm-backups/d5d0334c-a7e3-b29f-51ca-1be9c211d2c1/vdis/05ce27a6-b447-4b12-b963-7e8d4978c95a/c6853f48-4b06-4c34-9707-b68f9054e6fc/20241007T134447Z.vhd' Oct 07 09:08:28 xoa xo-server[6339]: } Oct 07 09:08:28 xoa xo-server[6339]: 2024-10-07T14:08:28.678Z xo:backups:MixinBackupWriter INFO deleting unused VHD { Oct 07 09:08:28 xoa xo-server[6339]: path: '/xo-vm-backups/d5d0334c-a7e3-b29f-51ca-1be9c211d2c1/vdis/05ce27a6-b447-4b12-b963-7e8d4978c95a/9f8da2fd-a08d-43c2-a5b1-ce6125cf52f5/20241007T134447Z.vhd' Oct 07 09:08:28 xoa xo-server[6339]: } Oct 07 09:08:28 xoa xo-server[6339]: 2024-10-07T14:08:28.788Z xo:backups:worker INFO backup has ended -

Hi,

Same error here. Current version: 5.98.1

I disabled the purge data option, but I still get the error.

I opened a support ticket #7729749

-

And what about the latest channel? Same behavior?

-

@olivierlambert We are still facing issues with VDI_IN_USE and "can't create a stream from a metadata vdi". These errors seem to have become more frequent since the latest version was installed. I have already shared this with support. I believe many of the issues we are all facing are related to XAPI tasks that are still running or timing out.

-

I can't test on the latest channel right now because long backup jobs are running.

-

I'm also facing "can't create a stream from a metadata VDI, fall back to a base" on delta backups after upgrading XO.

Below the logs from failed jobs:{ "data": { "mode": "delta", "reportWhen": "failure" }, "id": "1728295200005", "jobId": "af0c1c01-8101-4dc6-806f-a4d3cf381cf7", "jobName": "ISO", "message": "backup", "scheduleId": "340b6832-4e2b-485b-87b5-5d21b30b4ddf", "start": 1728295200005, "status": "failure", "infos": [ { "data": { "vms": [ "81dbf29f-adfa-5bc4-9bc1-021f8eb46b9e", "fc1c7067-69f3-8949-ab68-780024a49a75", "07c32e8d-ea85-ffaa-3509-2dabff58e4af", "66949c31-5544-88fe-5e49-f0e0c7946347" ] }, "message": "vms" } ], "tasks": [ { "data": { "type": "VM", "id": "81dbf29f-adfa-5bc4-9bc1-021f8eb46b9e", "name_label": "MX" }, "id": "1728295200766", "message": "backup VM", "start": 1728295200766, "status": "failure", "tasks": [ { "id": "1728295200804", "message": "clean-vm", "start": 1728295200804, "status": "success", "end": 1728295200839, "result": { "merge": false } }, { "id": "1728295200981", "message": "snapshot", "start": 1728295200981, "status": "success", "end": 1728295202424, "result": "21700914-d8d4-86a9-0035-409406b214e4" }, { "data": { "id": "22d6a348-ae2b-4783-a77d-a456e508ba64", "isFull": false, "type": "remote" }, "id": "1728295202425", "message": "export", "start": 1728295202425, "status": "success", "tasks": [ { "id": "1728295214099", "message": "clean-vm", "start": 1728295214099, "status": "success", "end": 1728295214130, "result": { "merge": false } } ], "end": 1728295214135 } ], "infos": [ { "message": "will delete snapshot data" }, { "data": { "vdiRef": "OpaqueRef:8eeda3b4-5eb9-4bf5-9d09-c76a3d729054" }, "message": "Snapshot data has been deleted" }, { "data": { "vdiRef": "OpaqueRef:5431ba31-88d3-4421-8f35-90c943f3b6eb" }, "message": "Snapshot data has been deleted" } ], "end": 1728295214135, "result": { "message": "can't create a stream from a metadata VDI, fall back to a base ", "name": "Error", "stack": "Error: can't create a stream from a metadata VDI, fall back to a base \n at Xapi.exportContent 
(file:///etc/xen-orchestra/@xen-orchestra/xapi/vdi.mjs:251:15)\n at process.processTicksAndRejections (node:internal/process/task_queues:95:5)\n at async file:///etc/xen-orchestra/@xen-orchestra/backups/_incrementalVm.mjs:56:32\n at async Promise.all (index 0)\n at async cancelableMap (file:///etc/xen-orchestra/@xen-orchestra/backups/_cancelableMap.mjs:11:12)\n at async exportIncrementalVm (file:///etc/xen-orchestra/@xen-orchestra/backups/_incrementalVm.mjs:25:3)\n at async IncrementalXapiVmBackupRunner._copy (file:///etc/xen-orchestra/@xen-orchestra/backups/_runners/_vmRunners/IncrementalXapi.mjs:44:25)\n at async IncrementalXapiVmBackupRunner.run (file:///etc/xen-orchestra/@xen-orchestra/backups/_runners/_vmRunners/_AbstractXapi.mjs:379:9)\n at async file:///etc/xen-orchestra/@xen-orchestra/backups/_runners/VmsXapi.mjs:166:38" } }, { "data": { "type": "VM", "id": "fc1c7067-69f3-8949-ab68-780024a49a75", "name_label": "ISH" }, "id": "1728295214142", "message": "backup VM", "start": 1728295214142, "status": "failure", "tasks": [ { "id": "1728295214147", "message": "clean-vm", "start": 1728295214147, "status": "success", "end": 1728295214171, "result": { "merge": false } }, { "id": "1728295214288", "message": "snapshot", "start": 1728295214288, "status": "success", "end": 1728295215179, "result": "b1e43127-c471-cf4b-bb96-d168b3d6cd18" }, { "data": { "id": "22d6a348-ae2b-4783-a77d-a456e508ba64", "isFull": false, "type": "remote" }, "id": "1728295215179:0", "message": "export", "start": 1728295215179, "status": "success", "tasks": [ { "id": "1728295215992", "message": "clean-vm", "start": 1728295215992, "status": "success", "end": 1728295216011, "result": { "merge": false } } ], "end": 1728295216012 } ], "infos": [ { "message": "will delete snapshot data" }, { "data": { "vdiRef": "OpaqueRef:e17c3e37-5e9d-4296-8108-642e7ec2c5e2" }, "message": "Snapshot data has been deleted" } ], "end": 1728295216012, "result": { "message": "can't create a stream from a metadata VDI, 
fall back to a base ", "name": "Error", "stack": "Error: can't create a stream from a metadata VDI, fall back to a base \n at Xapi.exportContent (file:///etc/xen-orchestra/@xen-orchestra/xapi/vdi.mjs:251:15)\n at process.processTicksAndRejections (node:internal/process/task_queues:95:5)\n at async file:///etc/xen-orchestra/@xen-orchestra/backups/_incrementalVm.mjs:56:32\n at async Promise.all (index 0)\n at async cancelableMap (file:///etc/xen-orchestra/@xen-orchestra/backups/_cancelableMap.mjs:11:12)\n at async exportIncrementalVm (file:///etc/xen-orchestra/@xen-orchestra/backups/_incrementalVm.mjs:25:3)\n at async IncrementalXapiVmBackupRunner._copy (file:///etc/xen-orchestra/@xen-orchestra/backups/_runners/_vmRunners/IncrementalXapi.mjs:44:25)\n at async IncrementalXapiVmBackupRunner.run (file:///etc/xen-orchestra/@xen-orchestra/backups/_runners/_vmRunners/_AbstractXapi.mjs:379:9)\n at async file:///etc/xen-orchestra/@xen-orchestra/backups/_runners/VmsXapi.mjs:166:38" } }, { "data": { "type": "VM", "id": "07c32e8d-ea85-ffaa-3509-2dabff58e4af", "name_label": "VPS1" }, "id": "1728295216015", "message": "backup VM", "start": 1728295216015, "status": "failure", "tasks": [ { "id": "1728295216019", "message": "clean-vm", "start": 1728295216019, "status": "success", "end": 1728295216054, "result": { "merge": false } }, { "id": "1728295216173", "message": "snapshot", "start": 1728295216173, "status": "success", "end": 1728295216808, "result": "3f1b009a-534b-cb40-b1d3-b8fad305bb73" }, { "data": { "id": "22d6a348-ae2b-4783-a77d-a456e508ba64", "isFull": false, "type": "remote" }, "id": "1728295216808:0", "message": "export", "start": 1728295216808, "status": "success", "tasks": [ { "id": "1728295217406", "message": "clean-vm", "start": 1728295217406, "status": "success", "end": 1728295217432, "result": { "merge": false } } ], "end": 1728295217434 } ], "infos": [ { "message": "will delete snapshot data" }, { "data": { "vdiRef": 
"OpaqueRef:56a92275-7bbe-47d0-970e-f32c136fe870" }, "message": "Snapshot data has been deleted" } ], "end": 1728295217434, "result": { "message": "can't create a stream from a metadata VDI, fall back to a base ", "name": "Error", "stack": "Error: can't create a stream from a metadata VDI, fall back to a base \n at Xapi.exportContent (file:///etc/xen-orchestra/@xen-orchestra/xapi/vdi.mjs:251:15)\n at process.processTicksAndRejections (node:internal/process/task_queues:95:5)\n at async file:///etc/xen-orchestra/@xen-orchestra/backups/_incrementalVm.mjs:56:32\n at async Promise.all (index 0)\n at async cancelableMap (file:///etc/xen-orchestra/@xen-orchestra/backups/_cancelableMap.mjs:11:12)\n at async exportIncrementalVm (file:///etc/xen-orchestra/@xen-orchestra/backups/_incrementalVm.mjs:25:3)\n at async IncrementalXapiVmBackupRunner._copy (file:///etc/xen-orchestra/@xen-orchestra/backups/_runners/_vmRunners/IncrementalXapi.mjs:44:25)\n at async IncrementalXapiVmBackupRunner.run (file:///etc/xen-orchestra/@xen-orchestra/backups/_runners/_vmRunners/_AbstractXapi.mjs:379:9)\n at async file:///etc/xen-orchestra/@xen-orchestra/backups/_runners/VmsXapi.mjs:166:38" } }, { "data": { "type": "VM", "id": "66949c31-5544-88fe-5e49-f0e0c7946347", "name_label": "DNS" }, "id": "1728295217436", "message": "backup VM", "start": 1728295217436, "status": "failure", "tasks": [ { "id": "1728295217440", "message": "clean-vm", "start": 1728295217440, "status": "success", "end": 1728295217462, "result": { "merge": false } }, { "id": "1728295217599", "message": "snapshot", "start": 1728295217599, "status": "success", "end": 1728295218238, "result": "950ff33c-b7e9-b202-c2b3-53b2a8e8c416" }, { "data": { "id": "22d6a348-ae2b-4783-a77d-a456e508ba64", "isFull": false, "type": "remote" }, "id": "1728295218238:0", "message": "export", "start": 1728295218238, "status": "success", "tasks": [ { "id": "1728295218833", "message": "clean-vm", "start": 1728295218833, "status": "success", "end": 
1728295218862, "result": { "merge": false } } ], "end": 1728295218863 } ], "infos": [ { "message": "will delete snapshot data" }, { "data": { "vdiRef": "OpaqueRef:7907be71-6b11-4240-a2a7-6043aea4ee05" }, "message": "Snapshot data has been deleted" } ], "end": 1728295218864, "result": { "message": "can't create a stream from a metadata VDI, fall back to a base ", "name": "Error", "stack": "Error: can't create a stream from a metadata VDI, fall back to a base \n at Xapi.exportContent (file:///etc/xen-orchestra/@xen-orchestra/xapi/vdi.mjs:251:15)\n at process.processTicksAndRejections (node:internal/process/task_queues:95:5)\n at async file:///etc/xen-orchestra/@xen-orchestra/backups/_incrementalVm.mjs:56:32\n at async Promise.all (index 0)\n at async cancelableMap (file:///etc/xen-orchestra/@xen-orchestra/backups/_cancelableMap.mjs:11:12)\n at async exportIncrementalVm (file:///etc/xen-orchestra/@xen-orchestra/backups/_incrementalVm.mjs:25:3)\n at async IncrementalXapiVmBackupRunner._copy (file:///etc/xen-orchestra/@xen-orchestra/backups/_runners/_vmRunners/IncrementalXapi.mjs:44:25)\n at async IncrementalXapiVmBackupRunner.run (file:///etc/xen-orchestra/@xen-orchestra/backups/_runners/_vmRunners/_AbstractXapi.mjs:379:9)\n at async file:///etc/xen-orchestra/@xen-orchestra/backups/_runners/VmsXapi.mjs:166:38" } } ], "end": 1728295218864 } -
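
For anyone comparing job logs like the one above, a short script can pull out the per-VM status, the `isFull` flag of the export task, and the error message. This is only a sketch: the function name `summarize_backup_log` and the trimmed `sample_log` are made up for illustration, and the field names are simply the ones visible in the pasted log, not a documented schema.

```python
import json


def summarize_backup_log(log: dict) -> list[dict]:
    """Return one summary row per VM task found in a backup job log dict."""
    rows = []
    for vm_task in log.get("tasks", []):
        data = vm_task.get("data", {})
        if data.get("type") != "VM":
            continue

        # The "export" subtask carries the isFull flag (full vs. delta transfer).
        is_full = None
        for sub in vm_task.get("tasks", []):
            if sub.get("message") == "export":
                is_full = sub.get("data", {}).get("isFull")
                break

        # On failure, the VM task's "result" holds the error object.
        result = vm_task.get("result")
        error = None
        if vm_task.get("status") == "failure" and isinstance(result, dict):
            error = (result.get("message") or "").strip()

        rows.append(
            {
                "vm": data.get("name_label"),
                "status": vm_task.get("status"),
                "is_full": is_full,
                "error": error,
            }
        )
    return rows


# Hypothetical sample, trimmed down from the failed job log above.
sample_log = json.loads(
    """
    {
      "jobName": "ISO",
      "status": "failure",
      "tasks": [
        {
          "data": { "type": "VM", "name_label": "MX" },
          "status": "failure",
          "tasks": [
            { "message": "snapshot", "status": "success" },
            { "message": "export", "status": "success",
              "data": { "isFull": false, "type": "remote" } }
          ],
          "result": {
            "message": "can't create a stream from a metadata VDI, fall back to a base ",
            "name": "Error"
          }
        }
      ]
    }
    """
)

rows = summarize_backup_log(sample_log)
for row in rows:
    print(row)
```

Running it against a full log pasted into a file (loaded with `json.load`) gives a quick view of which VMs failed and whether the export was attempted as a delta (`isFull: false`) or as a full.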

@icompit Full backups of the same VMs are processed without a problem.

{ "data": { "mode": "delta", "reportWhen": "failure" }, "id": "1728349200005", "jobId": "af0c1c01-8101-4dc6-806f-a4d3cf381cf7", "jobName": "ISO", "message": "backup", "scheduleId": "0e073624-a7eb-47ca-aca5-5f1cd0fc996b", "start": 1728349200005, "status": "success", "infos": [ { "data": { "vms": [ "81dbf29f-adfa-5bc4-9bc1-021f8eb46b9e", "fc1c7067-69f3-8949-ab68-780024a49a75", "07c32e8d-ea85-ffaa-3509-2dabff58e4af", "66949c31-5544-88fe-5e49-f0e0c7946347" ] }, "message": "vms" } ], "tasks": [ { "data": { "type": "VM", "id": "81dbf29f-adfa-5bc4-9bc1-021f8eb46b9e", "name_label": "MX" }, "id": "1728349200726", "message": "backup VM", "start": 1728349200726, "status": "success", "tasks": [ { "id": "1728349200738", "message": "clean-vm", "start": 1728349200738, "status": "success", "end": 1728349200773, "result": { "merge": false } }, { "id": "1728349200944", "message": "snapshot", "start": 1728349200944, "status": "success", "end": 1728349202402, "result": "c9285da2-1b13-e8a7-7652-316fbfde76fd" }, { "data": { "id": "22d6a348-ae2b-4783-a77d-a456e508ba64", "isFull": true, "type": "remote" }, "id": "1728349202402:0", "message": "export", "start": 1728349202402, "status": "success", "tasks": [ { "id": "1728349202859", "message": "transfer", "start": 1728349202859, "status": "success", "end": 1728355287481, "result": { "size": 358491499520 } }, { "id": "1728355310406", "message": "health check", "start": 1728355310406, "status": "success", "tasks": [ { "id": "1728355311238", "message": "transfer", "start": 1728355311238, "status": "success", "end": 1728359446575, "result": { "size": 358491498496, "id": "17402cd8-bd52-98e1-7951-3d47a03dcbf4" } }, { "id": "1728359446575:0", "message": "vmstart", "start": 1728359446575, "status": "success", "end": 1728359477580 } ], "end": 1728359492584 }, { "id": "1728359492598", "message": "clean-vm", "start": 1728359492598, "status": "success", "end": 1728359492625, "result": { "merge": false } } ], "end": 1728359492627 } ], "infos": [ { 
"message": "will delete snapshot data" }, { "data": { "vdiRef": "OpaqueRef:dea183a0-abf2-422b-b7fd-4d0ed1d2ac12" }, "message": "Snapshot data has been deleted" }, { "data": { "vdiRef": "OpaqueRef:e1c325ac-e8c9-4a75-83d0-a0ee50289665" }, "message": "Snapshot data has been deleted" } ], "end": 1728359492628 }, { "data": { "type": "VM", "id": "fc1c7067-69f3-8949-ab68-780024a49a75", "name_label": "ISH" }, "id": "1728359492631", "message": "backup VM", "start": 1728359492631, "status": "success", "tasks": [ { "id": "1728359492636", "message": "clean-vm", "start": 1728359492636, "status": "success", "end": 1728359492649, "result": { "merge": false } }, { "id": "1728359492792", "message": "snapshot", "start": 1728359492792, "status": "success", "end": 1728359493650, "result": "8c4a61e7-9f4c-a58b-f1d7-e9edb86e73bb" }, { "data": { "id": "22d6a348-ae2b-4783-a77d-a456e508ba64", "isFull": true, "type": "remote" }, "id": "1728359493650:0", "message": "export", "start": 1728359493650, "status": "success", "tasks": [ { "id": "1728359494085", "message": "transfer", "start": 1728359494085, "status": "success", "end": 1728360044219, "result": { "size": 49962294784 } }, { "id": "1728360047118", "message": "health check", "start": 1728360047118, "status": "success", "tasks": [ { "id": "1728360047256", "message": "transfer", "start": 1728360047256, "status": "success", "end": 1728360544642, "result": { "size": 49962294272, "id": "3d7e38b1-2366-e657-884e-67f33bee9b56" } }, { "id": "1728360544642:0", "message": "vmstart", "start": 1728360544642, "status": "success", "end": 1728360567171 } ], "end": 1728360568598 }, { "id": "1728360568628", "message": "clean-vm", "start": 1728360568628, "status": "success", "end": 1728360568663, "result": { "merge": false } } ], "end": 1728360568667 } ], "infos": [ { "message": "will delete snapshot data" }, { "data": { "vdiRef": "OpaqueRef:18e03c56-b537-490c-8c1d-0f87fef61940" }, "message": "Snapshot data has been deleted" } ], "end": 1728360568667 }, { 
"data": { "type": "VM", "id": "07c32e8d-ea85-ffaa-3509-2dabff58e4af", "name_label": "VPS1" }, "id": "1728360568671", "message": "backup VM", "start": 1728360568671, "status": "success", "tasks": [ { "id": "1728360568677", "message": "clean-vm", "start": 1728360568677, "status": "success", "end": 1728360568702, "result": { "merge": false } }, { "id": "1728360568803", "message": "snapshot", "start": 1728360568803, "status": "success", "end": 1728360569642, "result": "eac56d7c-fa78-f6ae-41c3-d316fbc597a0" }, { "data": { "id": "22d6a348-ae2b-4783-a77d-a456e508ba64", "isFull": true, "type": "remote" }, "id": "1728360569643", "message": "export", "start": 1728360569643, "status": "success", "tasks": [ { "id": "1728360570055", "message": "transfer", "start": 1728360570055, "status": "success", "end": 1728360787031, "result": { "size": 15826942976 } }, { "id": "1728360788525", "message": "health check", "start": 1728360788525, "status": "success", "tasks": [ { "id": "1728360788592", "message": "transfer", "start": 1728360788592, "status": "success", "end": 1728360987674, "result": { "size": 15826942464, "id": "52962bc5-2ed4-c397-9131-8894f45523bd" } }, { "id": "1728360987675", "message": "vmstart", "start": 1728360987675, "status": "success", "end": 1728361007502 } ], "end": 1728361008575 }, { "id": "1728361008588", "message": "clean-vm", "start": 1728361008588, "status": "success", "end": 1728361008622, "result": { "merge": true } } ], "end": 1728361008636 } ], "infos": [ { "message": "will delete snapshot data" }, { "data": { "vdiRef": "OpaqueRef:00c688b0-555d-42bb-93e9-db8b09fea339" }, "message": "Snapshot data has been deleted" } ], "end": 1728361008636 }, { "data": { "type": "VM", "id": "66949c31-5544-88fe-5e49-f0e0c7946347", "name_label": "DNS" }, "id": "1728361008638", "message": "backup VM", "start": 1728361008638, "status": "success", "tasks": [ { "id": "1728361008643", "message": "clean-vm", "start": 1728361008643, "status": "success", "end": 1728361008669, 
"result": { "merge": false } }, { "id": "1728361008780", "message": "snapshot", "start": 1728361008780, "status": "success", "end": 1728361009626, "result": "20408aa8-5979-23a5-e8db-c32eb5ec52a8" }, { "data": { "id": "22d6a348-ae2b-4783-a77d-a456e508ba64", "isFull": true, "type": "remote" }, "id": "1728361009626:0", "message": "export", "start": 1728361009626, "status": "success", "tasks": [ { "id": "1728361010081", "message": "transfer", "start": 1728361010081, "status": "success", "end": 1728361179509, "result": { "size": 14742417920 } }, { "id": "1728361181070:0", "message": "health check", "start": 1728361181070, "status": "success", "tasks": [ { "id": "1728361181124", "message": "transfer", "start": 1728361181124, "status": "success", "end": 1728361321066, "result": { "size": 14742417408, "id": "cd3ce194-28ca-0c3f-8a78-6873ebac0da2" } }, { "id": "1728361321067", "message": "vmstart", "start": 1728361321067, "status": "success", "end": 1728361368268 } ], "end": 1728361369283 }, { "id": "1728361369296", "message": "clean-vm", "start": 1728361369296, "status": "success", "end": 1728361369327, "result": { "merge": true } } ], "end": 1728361369340 } ], "infos": [ { "message": "will delete snapshot data" }, { "data": { "vdiRef": "OpaqueRef:b6f2b416-83b1-4f50-84f1-662f03aa626d" }, "message": "Snapshot data has been deleted" } ], "end": 1728361369340 } ], "end": 1728361369340 }