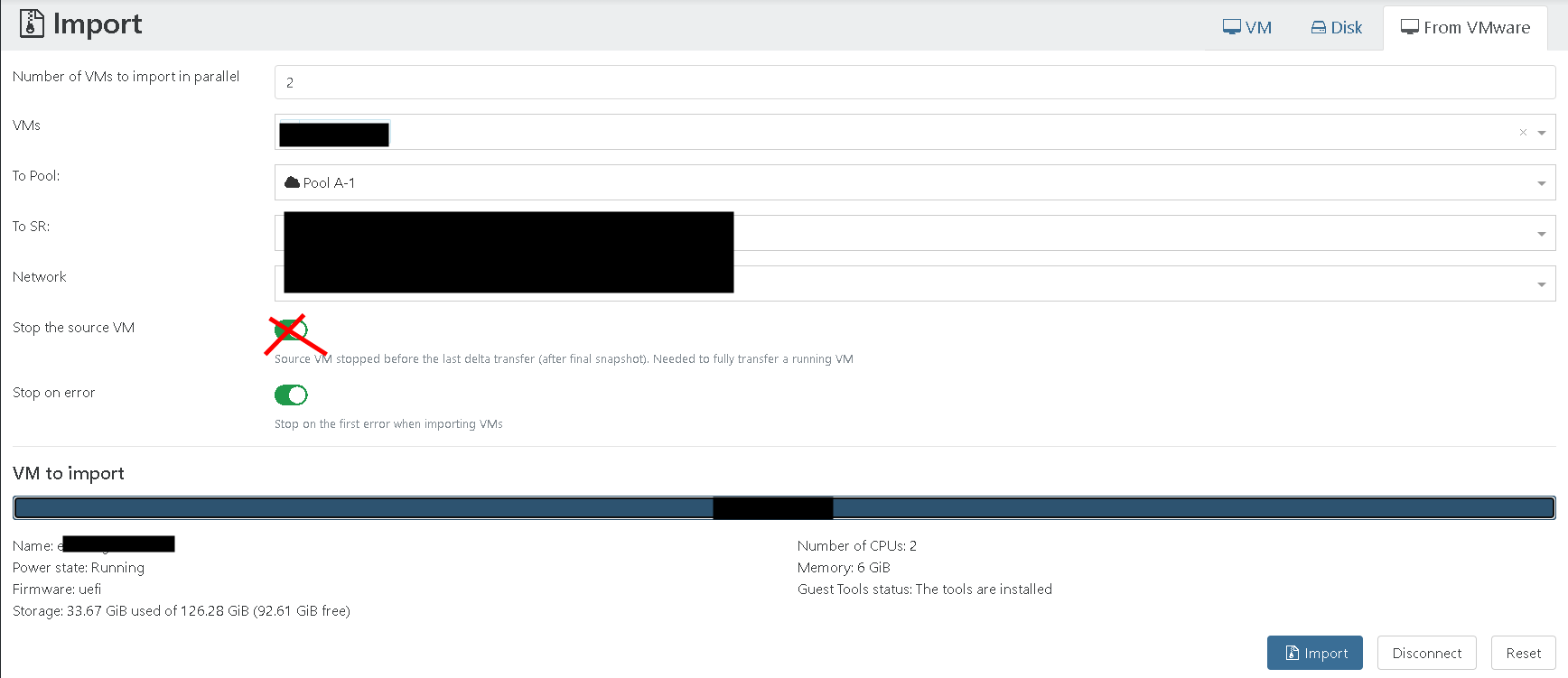

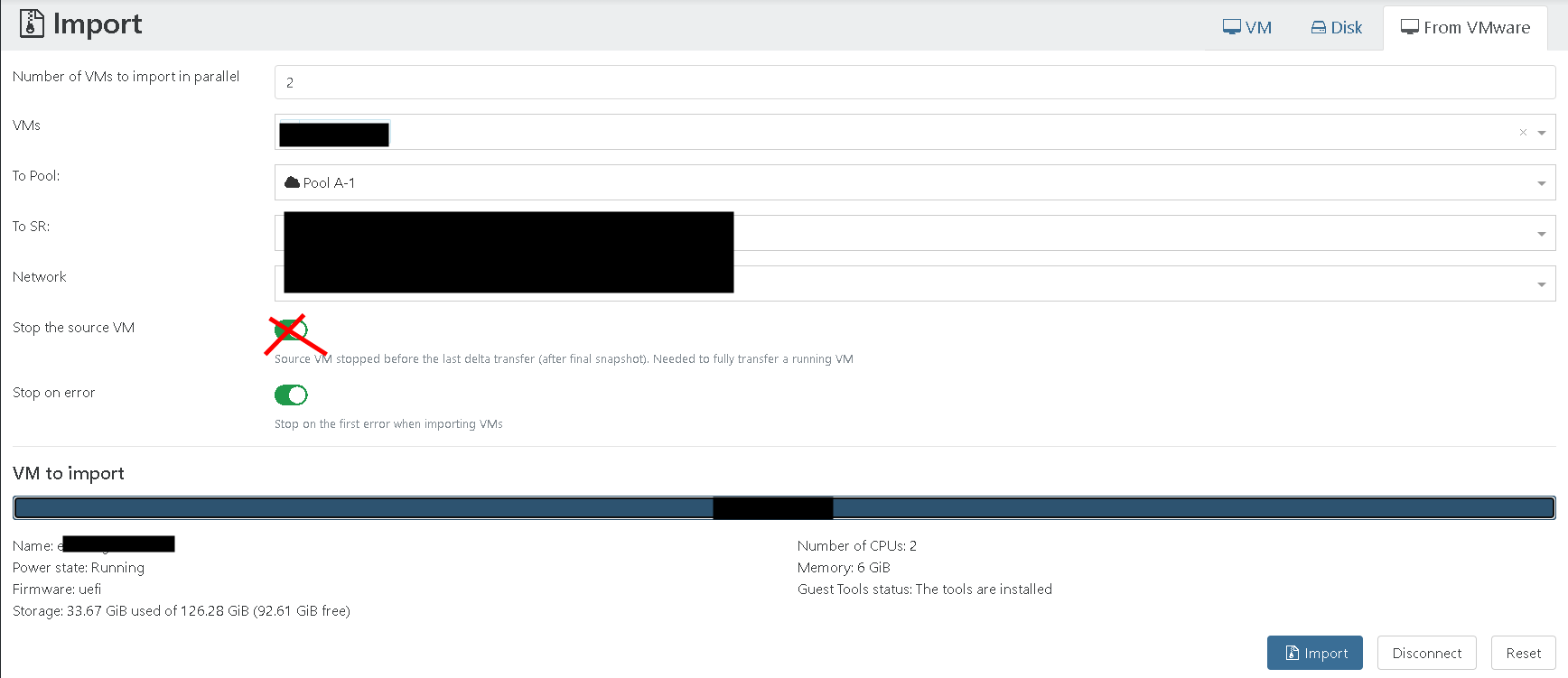

@jkissane Create the snapshot, but don't enable "stop the source VM" if your VM is already shut down, and it should work fine.

@jkissane You still need a snapshot for the migration to work. That's at least my experience.

@KPS I also patched Windows 2016 and 2022 to the latest version, with the Xen guest tools installed. Both worked fine as well. We don't have a 2019 machine to test with.

@bufanda Make sure you have a snapshot created.

@AtaxyaNetwork It works !!!! Thank you so much !!!

@AtaxyaNetwork Oh wow. That might be past my Linux skill level. I would love to try the VHD file if you can share it with me. This should get me started for sure. But please do the blog post as well.

@AtaxyaNetwork No way! That's fantastic. I'm really curious to learn the fix. It might help someone else here as well.

So please post the steps if it's not too complicated.

THANKS

Stefan

@anik I have been trying to set up Dell OpenManage Enterprise for a couple of weeks.

I imported versions 3 and 4 from ESXi. Neither works afterwards. I also tried converting the OVF, KVM and Hyper-V images. The conversion worked, but OpenManage will not boot on XCP-ng.

I have a support ticket open for this as well.

No luck so far.

@ha_tu_su Are you using vSAN on ESXi? What worked best for me was running the latest version of XOA and moving the VM to NFS storage before the migration.

Hi,

I just noticed that XenServer released updated guest tools. The latest version is from June 4, 2024.

Does anyone know what has changed? Is it worth updating the agent on already deployed machines?

Thanks

Stefan

@ha_tu_su Hi, I come from a similar setup and did the following

In XOSTOR the diskless node is called the "tie breaker". From my understanding it is very similar to the witness in vSAN.

You can also go ahead and select a dedicated network for XOSTOR. This can be done after creation as well, but I'm not sure if this will work without a switch.

I struggled a bit with the network setup. It's super easy to set up a bond, but you have to remove it again to join hosts into a pool. This was a bit challenging for us, as the Juniper switches in our co-location don't support LACP fallback and we don't have direct switch access. There was also an issue where LACP was working in 8.2 but not in 8.3. It was fixed with a firmware update.

We come from a setup where we had multiple 2-node vSAN clusters across 3 sites with vReplication for DR, running more Windows VMs than anything else. XCP-ng with XOSTOR and the built-in backup was a 1:1 replacement for us. We are very happy with how everything turned out. The VMware migration worked fine as well. Support is great too and much more responsive compared to what we had at VMware.

@nikade Keep your eyes open. Maybe reach out to support and ask if iSCSI is a feature or on the roadmap.

@nikade I just looked up some old documentation from 2019. At that time we used the vSAN iSCSI Target Service to create (multiple) LUNs. We also enabled Multipath I/O / Microsoft iSCSI in Windows and mapped those LUNs into the two VMs. We then successfully created a WSFC that presents the disks in exactly the way you have it today. It was our first design for file services and was later replaced with an active/active TrueNAS.

vSAN was used for iSCSI, but I don't remember this being limited to VMware in particular. I believe you could use any iSCSI storage system for this.

I also see that LINSTOR has a feature that allows you to create an iSCSI target. Not sure if this is available for XOSTOR yet.

https://linbit.com/blog/create-a-highly-available-iscsi-target-using-linstor-gateway/

@nikade We have dedicated VMs for each role. So SQL is only SQL.

You would have to check if your application has some built-in HA that would work without a shared disk.

We are set up in a way where multiple application servers run behind a load balancer (HAProxy) with load balancing enabled.

File services are provided by TrueNAS, which is behind a load balancer as well, in an active/backup configuration.

The app and file services files are synced by Syncthing.

We are coming from a configuration similar to yours, but had to change it to scale more and increase the redundancy. We also considered the shared disks a single point of failure.

Just take your time and look into each component. I'm sure you will find a way.

@nikade It's fully HA and the failover is even faster, as SQL Server is already started. It's too long ago, but I believe that the SQL service in your configuration was only started when it actually fails over.

Each server has local disks, and SQL Server keeps them in sync synchronously with log shipping. It requires a fast network, like vSAN does as well.

You can failover the server or individual databases at any time.

We currently have server1 on XCP-ng and server2 on a vSAN cluster that has not been migrated yet.

We fail over all the time during maintenance windows for patching etc. It's very robust.

Stefan

@nikade I believe this feature has been available since SQL 2016. We are using two regular Standard licenses for this.

The limitation in Standard is that you can have only one availability group. The availability group is linked to the Windows cluster and the IP that can fail over between the nodes.

However, you can have as many databases as you want and you can fail them over independently.

We are not using the failover IP at all, so the limitation to one group is not relevant for us. We have defined both SQL servers in the SQL connection string:

server=sql1;Failover Partner=sql2;uid=username;pwd=password;database=dbname

This makes the application aware of the failover cluster and allows it to connect to the primary node.

This works very well in our case. You can also use some clever scripts if you deal with legacy applications that cannot use the connection string above.
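To illustrate what the connection string above carries, here is a minimal Python sketch that splits it into key/value pairs and lists the servers it names, primary first and failover partner second. The function names `parse_connection_string` and `candidate_servers` are made up for this example, and the parsing is deliberately naive (no quoted values). The real SQL client driver handles the failover logic itself, so treat this only as a simplified picture of the idea.

```python
def parse_connection_string(conn_str):
    """Split a 'key=value;key=value' string into a dict with lowercase keys.

    Naive on purpose: values containing ';' or quotes are not handled.
    """
    parts = {}
    for item in conn_str.split(";"):
        if not item.strip():
            continue  # skip empty segments such as a trailing ';'
        key, _, value = item.partition("=")
        parts[key.strip().lower()] = value.strip()
    return parts


def candidate_servers(conn_str):
    """Return the servers named in the string: primary first, then the partner."""
    parts = parse_connection_string(conn_str)
    servers = [parts["server"]]
    partner = parts.get("failover partner")
    if partner:
        servers.append(partner)
    return servers


# Connection string as posted above (placeholder credentials).
conn = "server=sql1;Failover Partner=sql2;uid=username;pwd=password;database=dbname"
print(candidate_servers(conn))
```

If the `Failover Partner` key is missing, the sketch simply returns the single primary server.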

https://www.linkedin.com/pulse/adding-enterprise-wing-sql-server-basic-availability-group-zheng-xu

It's something we have done before, but it's currently not needed anymore.