Thanks! Looking forward to trying it out!

Posts

-

RE: Our future backup code: test it!

@olivierlambert I just tried to build the install with that branch and got the following error.

    • Running build in 22 packages
    • Remote caching disabled
    @xen-orchestra/disk-transform:build: cache miss, executing c1d61a12721a1a1b
    @xen-orchestra/disk-transform:build: yarn run v1.22.22
    @xen-orchestra/disk-transform:build: $ tsc
    @xen-orchestra/disk-transform:build: src/SynchronizedDisk.mts(2,30): error TS2307: Cannot find module '@vates/generator-toolbox' or its corresponding type declarations.
    @xen-orchestra/disk-transform:build: error Command failed with exit code 2.

OS: Rocky 9

Yarn: 1.22.22

Node: v22.14.0

Let me know if you need any more information.
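One possible cause of a TS2307 error in a monorepo build is that the dependency exists in the checkout but has not been built yet, so the files its package.json points to are missing. A minimal sketch for checking that, assuming the package lives under `@vates/generator-toolbox` relative to the checkout root (that path is an assumption, and `missingEntryPoints` is a made-up helper name). It only looks at the `main` and `types` fields, so a package that relies on an `exports` map is not covered.

```javascript
const fs = require('node:fs');
const path = require('node:path');

// Hypothetical helper: returns the entry points declared in a package's
// package.json ("main" and "types") whose target files do not exist on disk.
function missingEntryPoints(packageDir) {
  const manifestPath = path.join(packageDir, 'package.json');
  const manifest = JSON.parse(fs.readFileSync(manifestPath, 'utf8'));
  return ['main', 'types']
    .filter(field => typeof manifest[field] === 'string')
    .map(field => ({ field, file: path.resolve(packageDir, manifest[field]) }))
    .filter(entry => !fs.existsSync(entry.file));
}

// Assumed location of the dependency inside the checkout; pass another
// directory as the first argument if the layout differs.
const dir = process.argv[2] ?? path.join('@vates', 'generator-toolbox');
if (fs.existsSync(path.join(dir, 'package.json'))) {
  console.log(missingEntryPoints(dir));
} else {
  console.log(`no package.json found in ${dir}`);
}
```

An empty array means the declared entry points are present; any listed entry points to a file that the build has not produced yet.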

-

RE: XOSTOR on 8.3?

@TheNorthernLight, based on this recent blog post: https://xcp-ng.org/blog/2025/03/14/the-future-of-xcp-ng-lts/, I'm assuming it will go GA around the time 8.3 moves into LTS. But that's just a guess.

-

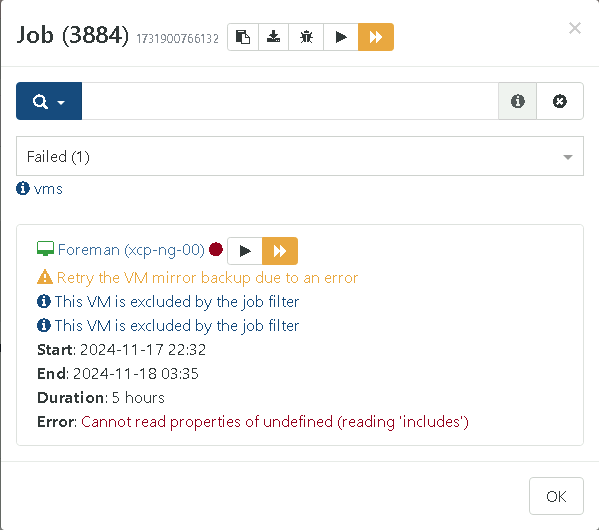

Mirror Backup Failing On Excluded VM

Hello,

I have a mirror incremental backup job that mirrors some VMs to Backblaze buckets. I am only selecting my more critical VMs, as I don't need one larger VM backed up offsite. On every backup I get an error for the one excluded VM stating "Cannot read properties of undefined (reading 'includes')". I have tried recreating the mirror job, but the error still crops up.

Commit: 99c56

Log: 2024-11-18T03_32_46.132Z - backup NG.json.txt

Screenshot below:

Let me know if you need any more information.

-

Question About Backup Sequences

Hello,

I saw that backup sequences were added with the new release (and it looks like an awesome feature, by the way!). I have one quick question: does the backup sequence schedule take precedence over the normal schedule for a job? My thought process was to disable the schedule on the normal job but keep it in place for things such as health checks, and let the backup sequence start the job.

-

RE: CBT: the thread to centralize your feedback

@rtjdamen I haven't had any hosts crash recently or any storage issues from what I can tell. The "type" in the log says delta, but the sizes of the backups definitely look like full backups. They're also labelled as key when I look at the restore points for delta backups.

-

RE: CBT: the thread to centralize your feedback

It looks like all of my backups have started erroring with "can't create a stream from a metadata VDI, fall back to a base". I am using 1 NBD connection and I am on commit 530c3. I have attached the logs of a delta backup and a replication.

2024-09-19T16_00_00.002Z - backup NG.json.txt

2024-09-19T04_00_00.001Z - backup NG.json.txt

I am seeing this in the journal logs.

    Sep 19 12:01:39 hostname xo-server[11597]: error: XapiError: SR_BACKEND_FAILURE_460(, Failed to calculate changed blocks for given VDIs. [opterr=Source and target VDI are unrelated], )
    Sep 19 12:01:39 hostname xo-server[11597]: at XapiError.wrap (file:///opt/xo/xo-builds/xen-orchestra-202409180806/packages/xen-api/_XapiError.mjs:16:12)
    Sep 19 12:01:39 hostname xo-server[11597]: at file:///opt/xo/xo-builds/xen-orchestra-202409180806/packages/xen-api/transports/json-rpc.mjs:38:21
    Sep 19 12:01:39 hostname xo-server[11597]: at process.processTicksAndRejections (node:internal/process/task_queues:95:5) {
    Sep 19 12:01:39 hostname xo-server[11597]: code: 'SR_BACKEND_FAILURE_460',
    Sep 19 12:01:39 hostname xo-server[11597]: params: [
    Sep 19 12:01:39 hostname xo-server[11597]: '',
    Sep 19 12:01:39 hostname xo-server[11597]: 'Failed to calculate changed blocks for given VDIs. [opterr=Source and target VDI are unrelated]',
    Sep 19 12:01:39 hostname xo-server[11597]: ''
    Sep 19 12:01:39 hostname xo-server[11597]: ],
    Sep 19 12:01:39 hostname xo-server[11597]: call: { method: 'VDI.list_changed_blocks', params: [Array] },
    Sep 19 12:01:39 hostname xo-server[11597]: url: undefined,
    Sep 19 12:01:39 hostname xo-server[11597]: task: undefined
    Sep 19 12:01:39 hostname xo-server[11597]: },
    Sep 19 12:01:39 hostname xo-server[11597]: ref: 'OpaqueRef:0438087b-5cbc-a458-a8a0-4eaa6ce74d19',
    Sep 19 12:01:39 hostname xo-server[11597]: baseRef: 'OpaqueRef:ae1330a2-0f95-6c16-6878-f6c05373a2f2'
    Sep 19 12:01:39 hostname xo-server[11597]: }
    Sep 19 12:01:43 hostname xo-server[11597]: 2024-09-19T16:01:43.015Z xo:xapi:vdi INFO OpaqueRef:b6f65ae4-bee8-b179-a06c-2bb4956214ba has been disconnected from dom0 {
    Sep 19 12:01:43 hostname xo-server[11597]: vdiRef: 'OpaqueRef:0438087b-5cbc-a458-a8a0-4eaa6ce74d19',
    Sep 19 12:01:43 hostname xo-server[11597]: vbdRef: 'OpaqueRef:b6f65ae4-bee8-b179-a06c-2bb4956214ba'
    Sep 19 12:01:43 hostname xo-server[11597]: }
    Sep 19 12:02:29 hostname xo-server[11597]: 2024-09-19T16:02:29.855Z xo:xapi:vdi INFO can't get changed block {
    Sep 19 12:02:29 hostname xo-server[11597]: error: XapiError: SR_BACKEND_FAILURE_460(, Failed to calculate changed blocks for given VDIs. [opterr=Source and target VDI are unrelated], )
    Sep 19 12:02:29 hostname xo-server[11597]: at XapiError.wrap (file:///opt/xo/xo-builds/xen-orchestra-202409180806/packages/xen-api/_XapiError.mjs:16:12)
    Sep 19 12:02:29 hostname xo-server[11597]: at file:///opt/xo/xo-builds/xen-orchestra-202409180806/packages/xen-api/transports/json-rpc.mjs:38:21
    Sep 19 12:02:29 hostname xo-server[11597]: at process.processTicksAndRejections (node:internal/process/task_queues:95:5) {
    Sep 19 12:02:29 hostname xo-server[11597]: code: 'SR_BACKEND_FAILURE_460',
    Sep 19 12:02:29 hostname xo-server[11597]: params: [
    Sep 19 12:02:29 hostname xo-server[11597]: '',
    Sep 19 12:02:29 hostname xo-server[11597]: 'Failed to calculate changed blocks for given VDIs. [opterr=Source and target VDI are unrelated]',

-

RE: CBT: the thread to centralize your feedback

I saw these errors in my log today after starting a replication job on commit 530c3. I have not migrated these VMs to a new host or an SR.

    Sep 18 08:17:12 xo-server[6199]: 2024-09-18T12:17:12.861Z xo:xapi:vdi INFO can't get changed block {
    Sep 18 08:17:12 xo-server[6199]: error: XapiError: SR_BACKEND_FAILURE_460(, Failed to calculate changed blocks for given VDIs. [opterr=Source and target VDI are unrelated], )
    Sep 18 08:17:12 xo-server[6199]: at XapiError.wrap (file:///opt/xo/xo-builds/xen-orchestra-202409180806/packages/xen-api/_XapiError.mjs:16:12)
    Sep 18 08:17:12 xo-server[6199]: at file:///opt/xo/xo-builds/xen-orchestra-202409180806/packages/xen-api/transports/json-rpc.mjs:38:21
    Sep 18 08:17:12 xo-server[6199]: at process.processTicksAndRejections (node:internal/process/task_queues:95:5) {
    Sep 18 08:17:12 xo-server[6199]: code: 'SR_BACKEND_FAILURE_460',
    Sep 18 08:17:12 xo-server[6199]: params: [
    Sep 18 08:17:12 xo-server[6199]: '',
    Sep 18 08:17:12 xo-server[6199]: 'Failed to calculate changed blocks for given VDIs. [opterr=Source and target VDI are unrelated], )
    Sep 18 08:17:12 xo-server[6199]: ''
    Sep 18 08:17:12 xo-server[6199]: ],
    Sep 18 08:17:12 xo-server[6199]: call: { method: 'VDI.list_changed_blocks', params: [Array] },
    Sep 18 08:17:12 xo-server[6199]: url: undefined,
    Sep 18 08:17:12 xo-server[6199]: task: undefined
    Sep 18 08:17:12 xo-server[6199]: },
    Sep 18 08:17:12 xo-server[6199]: ref: 'OpaqueRef:a7c534ef-d1d5-0578-a564-05b2c36de7be',
    Sep 18 08:17:12 xo-server[6199]: baseRef: 'OpaqueRef:5d4109f0-5278-64d8-233d-6cd73c8c6d6a'
    Sep 18 08:17:12 xo-server[6199]: }
    Sep 18 08:17:14 xo-server[6199]: 2024-09-18T12:17:14.459Z xo:xapi:vdi INFO can't get changed block {
    Sep 18 08:17:14 xo-server[6199]: error: XapiError: SR_BACKEND_FAILURE_460(, Failed to calculate changed blocks for given VDIs. [opterr=Source and target VDI are unrelated], )
    Sep 18 08:17:14 xo-server[6199]: at XapiError.wrap (file:///opt/xo/xo-builds/xen-orchestra-202409180806/packages/xen-api/_XapiError.mjs:16:12)
    Sep 18 08:17:14 xo-server[6199]: at file:///opt/xo/xo-builds/xen-orchestra-202409180806/packages/xen-api/transports/json-rpc.mjs:38:21
    Sep 18 08:17:14 xo-server[6199]: at process.processTicksAndRejections (node:internal/process/task_queues:95:5) {
    Sep 18 08:17:14 xo-server[6199]: code: 'SR_BACKEND_FAILURE_460',
    Sep 18 08:17:14 xo-server[6199]: params: [
    Sep 18 08:17:14 xo-server[6199]: '',
    Sep 18 08:17:14 xo-server[6199]: 'Failed to calculate changed blocks for given VDIs. [opterr=Source and target VDI are unrelated]',
    Sep 18 08:17:14 xo-server[6199]: ''
    Sep 18 08:17:14 xo-server[6199]: ],
    Sep 18 08:17:14 xo-server[6199]: call: { method: 'VDI.list_changed_blocks', params: [Array] },
    Sep 18 08:17:14 xo-server[6199]: url: undefined,
    Sep 18 08:17:14 xo-server[6199]: task: undefined
    Sep 18 08:17:14 xo-server[6199]: },

-

RE: Backup Fail: Trying to add data in unsupported state

Hello,

My VMs are about 150G each. I was using compression when I backed up the VM to the remote before mirroring it to the S3 bucket. I did end up changing to delta backups and the error went away, but I can create another normal backup and mirror it to the bucket again to see if I get the same results.

-

RE: Backup Fail: Trying to add data in unsupported state

I can confirm I am seeing the same thing with a mirror full backup job to Backblaze B2 utilizing encryption. I am on Master, commit 732ca, in my homelab. I have attached the backup log as well.

2024-08-28T22_32_37.271Z - backup NG.json.txt

-

RE: CBT: the thread to centralize your feedback

I have been seeing this error recently: "VDI must be free or attached to exactly one VM", with quite a few snapshots attached to the control domain when I look at the health dashboard. I am not sure if this is related to the CBT backups but wanted to ask. It seems to be happening only on my delta backups that have CBT enabled.

-

RE: Continuous Replication Job Causing XO to Crash

@olivierlambert Thanks. I will do that. I did bump the memory up to 12GiB and the backup ran successfully but I will pursue a ticket with the 3rd party script maker as well. Thank you for your time!

-

RE: Continuous Replication Job Causing XO to Crash

I increased the memory of my XO VM to 12 GiB and so far everything seems to be working. I will keep an eye on things.

-

RE: Continuous Replication Job Causing XO to Crash

@olivierlambert I am currently using a 3rd party script. I can follow the documentation and rebuild by hand to see what happens.

Here is a more telling error.

    Jul 23 14:30:23 xo-server[85312]: <--- Last few GCs --->
    Jul 23 14:30:23 xo-server[85312]: [85312:0x64ad290] 70880873 ms: Mark-Compact 2002.7 (2082.8) -> 1988.6 (2082.5) MB, 807.86 / 0.07 ms (average mu = 0.144, current mu >
    Jul 23 14:30:23 xo-server[85312]: [85312:0x64ad290] 70881716 ms: Mark-Compact 2002.9 (2083.2) -> 1986.5 (2081.8) MB, 721.13 / 0.11 ms (average mu = 0.144, current mu >
    Jul 23 14:30:23 xo-server[85312]: <--- JS stacktrace --->
    Jul 23 14:30:23 xo-server[85312]: FATAL ERROR: Ineffective mark-compacts near heap limit Allocation failed - JavaScript heap out of memory
    Jul 23 14:30:23 xo-server[85312]: ----- Native stack trace -----e913272/20240716T110135Z.vhd',
    Jul 23 14:30:23 xo-server[85312]: 1: 0xb80c98 node::OOMErrorHandler(char const*, v8::OOMDetails const&) [node]
    Jul 23 14:30:23 xo-server[85312]: 2: 0xeede90 v8::Utils::ReportOOMFailure(v8::internal::Isolate*, char const*, v8::OOMDetails const&) [node]
    Jul 23 14:30:23 xo-server[85312]: 3: 0xeee177 v8::internal::V8::FatalProcessOutOfMemory(v8::internal::Isolate*, char const*, v8::OOMDetails const&) [node]
    Jul 23 14:30:23 xo-server[85312]: 4: 0x10ffd15 [node]
    Jul 23 14:30:23 xo-server[85312]: 5: 0x11002a4 v8::internal::Heap::RecomputeLimits(v8::internal::GarbageCollector) [node]
    Jul 23 14:30:23 xo-server[85312]: 6: 0x1117194 v8::internal::Heap::PerformGarbageCollection(v8::internal::GarbageCollector, v8::internal::GarbageCollectionReason, cha>
    Jul 23 14:30:23 xo-server[85312]: 7: 0x11179ac v8::internal::Heap::CollectGarbage(v8::internal::AllocationSpace, v8::internal::GarbageCollectionReason, v8::GCCallback> 0.044) task; scavenge might not succeed
    Jul 23 14:30:23 xo-server[85312]: 8: 0x117076c v8::internal::MinorGCJob::Task::RunInternal() [node] 0.145) task; scavenge might not succeed
    Jul 23 14:30:23 xo-server[85312]: 9: 0xd368e6 [node]
    Jul 23 14:30:23 xo-server[85312]: 10: 0xd39e8f node::PerIsolatePlatformData::FlushForegroundTasksInternal() [node]
    Jul 23 14:30:23 xo-server[85312]: 11: 0x18af2d3 [node]
    Jul 23 14:30:23 xo-server[85312]: 12: 0x18c3d4b [node]
    Jul 23 14:30:23 xo-server[85312]: 13: 0x18afff7 uv_run [node]
    Jul 23 14:30:23 xo-server[85312]: 14: 0xbc7be6 node::SpinEventLoopInternal(node::Environment*) [node]
    Jul 23 14:30:23 xo-server[85312]: 15: 0xd0ae44 [node]
    Jul 23 14:30:23 xo-server[85312]: 16: 0xd0b8dd node::NodeMainInstance::Run() [node]
    Jul 23 14:30:23 xo-server[85312]: 17: 0xc6fc8f node::Start(int, char**) [node] const*) [node]
    Jul 23 14:30:23 xo-server[85312]: 18: 0x7f004c029590 [/lib64/libc.so.6]lags) [node]
    Jul 23 14:30:23 xo-server[85312]: 19: 0x7f004c029640 __libc_start_main [/lib64/libc.so.6]
    Jul 23 14:30:23 xo-server[85312]: 20: 0xbc430e _start [node]
    Jul 23 14:30:23 systemd-coredump[89957]: [] Process 85312 (node) of user 0 dumped core.
    Jul 23 14:30:23 systemd[1]: xo-server.service: Main process exited, code=dumped, status=6/ABRT
    Jul 23 14:30:23 systemd[1]: xo-server.service: Failed with result 'core-dump'.

My XO VM currently has 6 gigs allocated to it.
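For anyone hitting the same crash: the Mark-Compact lines show the heap at roughly 2000 MB when the process aborted, so the limit being reached may be the Node.js (V8) heap ceiling rather than the VM's total RAM. As a rough check, a small script like the one below prints the heap ceiling of whatever Node.js process runs it. This is only a sketch: `heapLimitMiB` and `heapUsedMiB` are made-up helper names, and it says nothing about how xo-server itself is launched on a given install.

```javascript
const v8 = require('node:v8');

// Hypothetical helper: V8 heap ceiling of the current process, in MiB.
// heap_size_limit is reported by Node.js in bytes.
function heapLimitMiB() {
  return Math.round(v8.getHeapStatistics().heap_size_limit / (1024 * 1024));
}

// Hypothetical helper: heap currently in use by this process, in MiB.
function heapUsedMiB() {
  return Math.round(process.memoryUsage().heapUsed / (1024 * 1024));
}

console.log(`heap limit: ${heapLimitMiB()} MiB, heap used: ${heapUsedMiB()} MiB`);
```

Saved to a file and run with Node's `--max-old-space-size=4096` flag, the reported limit should come out at roughly that size, which is one way to confirm that the flag is being picked up.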

-

Continuous Replication Job Causing XO to Crash

Hello,

I have been messing around with the continuous replication part of backups. I created my job and all was running well, then my XO crashed. Now the VMs that were replicating when XO crashed show an "operation blocked" error when I try to migrate them to another host. Please see below for more information.

XO commit: 919d2

nodejs version: 20.15.1

OS: Rocky Linux 9

Crash that I saw in the log:

    xo:plugin INFO Cannot find module '/opt/xo/xo-builds/xen-orchestra-202407221847/packages/xo-server-test/dist'. Please verify that the package.json has a valid "main" entry {
      error: Error: Cannot find module '/opt/xo/xo-builds/xen-orchestra-202407221847/packages/xo-server-test/dist'. Please verify that the package.json has a valid "main" entry
          at tryPackage (node:internal/modules/cjs/loader:445:19)
          at Function.Module._findPath (node:internal/modules/cjs/loader:716:18)
          at Function.Module._resolveFilename (node:internal/modules/cjs/loader:1131:27)
          at requireResolve (node:internal/modules/helpers:190:19)
          at Xo.call (file:///opt/xo/xo-builds/xen-orchestra-202407221847/packages/xo-server/src/index.mjs:354:32)
          at Xo.call (file:///opt/xo/xo-builds/xen-orchestra-202407221847/packages/xo-server/src/index.mjs:406:25)
          at from (file:///opt/xo/xo-builds/xen-orchestra-202407221847/packages/xo-server/src/index.mjs:442:95)
          at Function.from (<anonymous>)
          at registerPlugins (file:///opt/xo/xo-builds/xen-orchestra-202407221847/packages/xo-server/src/index.mjs:442:27)
          at main (file:///opt/xo/xo-builds/xen-orchestra-202407221847/packages/xo-server/src/index.mjs:921:5) {
        code: 'MODULE_NOT_FOUND',
        path: '/opt/xo/xo-builds/xen-orchestra-202407221847/packages/xo-server-test/package.json',
        requestPath: '/opt/xo/xo-builds/xen-orchestra-202407221847/packages/xo-server-test'
      }
    }

The backup log and the operation blocked logs have been attached as well.

Thanks for the help!

2024-07-23T18_45_30.613Z - XO.log.txt

2024-07-23T18_22_34.453Z - backup NG.json.txt

-

RE: CBT: the thread to centralize your feedback

@Andrew Interesting, thanks. I didn't disable NBD + CBT in the delta job or CBT on the disks. I just set up another job in normal full backup mode.

-

RE: CBT: the thread to centralize your feedback

@Andrew Thanks for the info! I had a feeling it may have been that one normal backup mode I ran. I'll stick to the deltas.

-

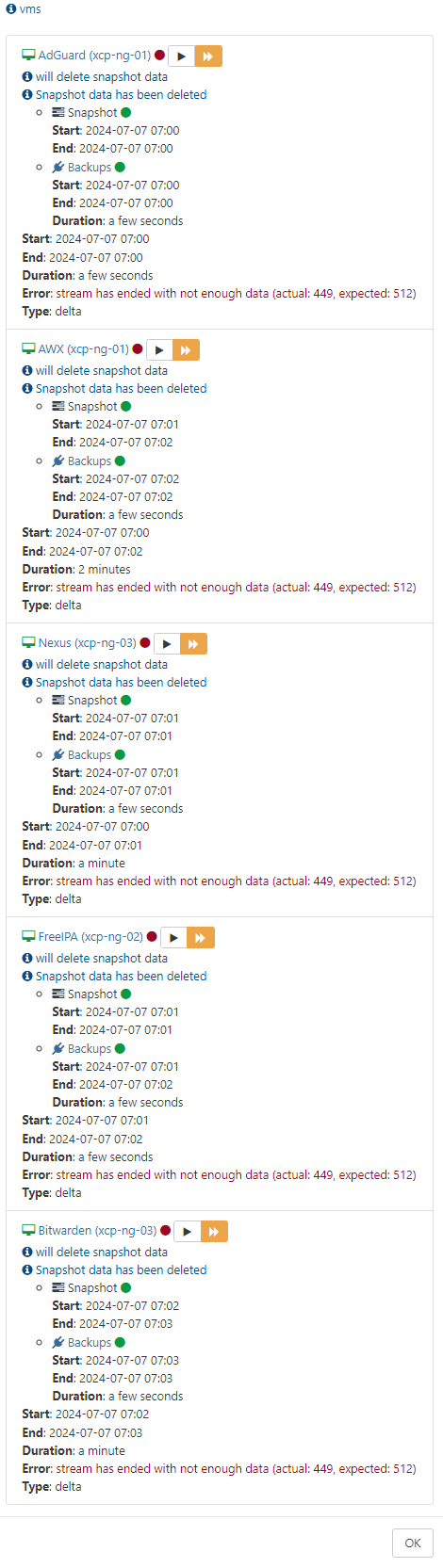

RE: CBT: the thread to centralize your feedback

I started seeing this error this afternoon.

The failed job also happened to run after a monthly backup job I have set up that does a full backup to some immutable storage. I am not sure if it is related, but I wanted to add that information. Below is the log from the failed backup.

2024-07-07T11_00_11.127Z - backup NG.json.txt