@dhiraj26683 We have production-related infrastructure set up on these hosts. Access to the hosted services gets a little slower than usual. For example, one of the services is our deployment server, which we use to deploy software from a repository hosted on that same server. We see delays in operations even when the deployment server's utilization is not that high. If we move the server to another host whose utilization is low, the service works well.

We need to restart the XCP host, and then everything works as before. So in the end, our only solution is to restart the impacted XCP hosts when memory utilization reaches its limit and the alerts start.

Posts

-

RE: Memory Consumption goes higher day by day

@dhiraj26683 I know XCP-ng Center is not supported anymore. But whenever this happens, in spite of a lot of memory being in cache, the VMs' performance is impacted.

-

RE: Memory Consumption goes higher day by day

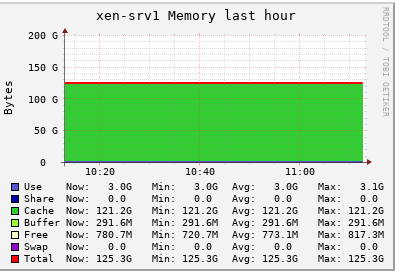

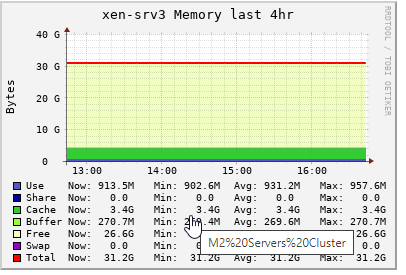

@dhiraj26683 Here we go. Memory accumulated in cache, and the XCP-ng console started reporting that about 98% of allocated memory is in use and that performance degradation might happen.

-

RE: Memory Consumption goes higher day by day

@dhiraj26683 Ah yes, we are using ha-lizard in our two-node cluster. Thanks for pointing that out, @tuxen. But we have been using it for 4-5 years with a basic configuration. I don't think we are using TGT iSCSI drivers or have enabled any kind of write cache. If that were the cause, we would have seen the same behaviour on all the nodes in this pool and in other pools.

We have configured only the parameters below for ha-lizard, and they have been unchanged since we started using it.

DISABLED_VAPPS=()

DISK_MONITOR=1

ENABLE_ALERTS=1

ENABLE_LOGGING=1

FENCE_ACTION=stop

FENCE_ENABLED=1

FENCE_FILE_LOC=/etc/ha-lizard/fence

FENCE_HA_ONFAIL=0

FENCE_HEURISTICS_IPS=10.66.0.1

FENCE_HOST_FORGET=0

FENCE_IPADDRESS=

FENCE_METHOD=POOL

FENCE_MIN_HOSTS=2

FENCE_PASSWD=

FENCE_QUORUM_REQUIRED=1

FENCE_REBOOT_LONE_HOST=0

FENCE_USE_IP_HEURISTICS=1

GLOBAL_VM_HA=0

HOST_SELECT_METHOD=0

MAIL_FROM=xen-cluster1@xxx.xx

MAIL_ON=1

MAIL_SUBJECT="SYSTEM_ALERT-FROM_HOST:$HOSTNAME"

MAIL_TO=it@xxxxx.xxx

MGT_LINK_LOSS_TOLERANCE=5

MONITOR_DELAY=15

MONITOR_KILLALL=1

MONITOR_MAX_STARTS=20

MONITOR_SCANRATE=10

OP_MODE=2

PROMOTE_SLAVE=1

SLAVE_HA=1

SLAVE_VM_STAT=0

SMTP_PASS=""

SMTP_PORT="25"

SMTP_SERVER=10.66.1.241

SMTP_USER=""

XAPI_COUNT=2

XAPI_DELAY=10

XC_FIELD_NAME='ha-lizard-enabled'

XE_TIMEOUT=10

@yann @stormi @olivierlambert We updated the ixgbe driver on one of the nodes a day ago. Let's see. I will hold off commenting on this thread unless we hit the issue again, and will update as soon as we do. Thank you so much for your help, guys.

-

RE: Memory Consumption goes higher day by day

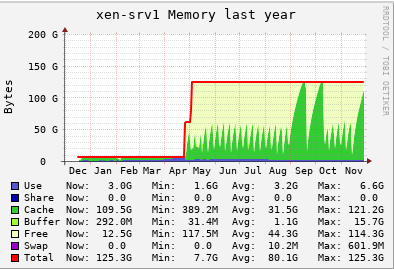

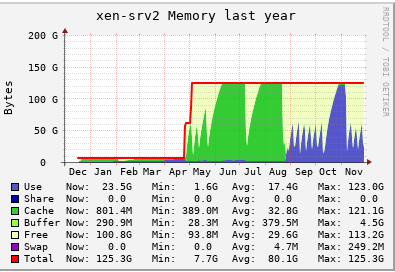

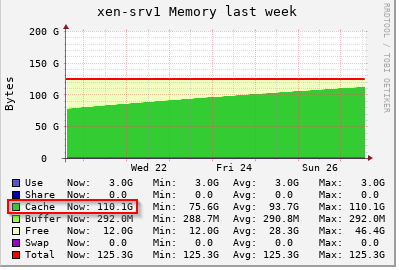

@yann @stormi We used vGPU for about 8-9 months. Initially we never faced any issue. The problem started around the time we were about to stop the vGPU workflow, and it has continued even after we stopped that workflow. We need to restart the servers to release the memory. We migrate all VMs to one server and restart the hosts one at a time. This issue started recently; we never faced anything like it before.

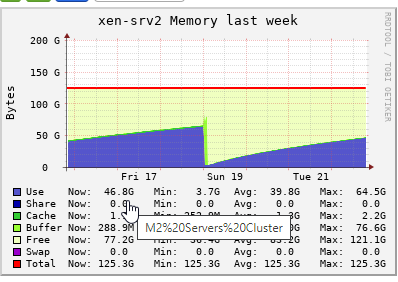

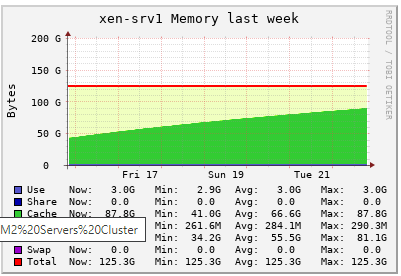

Providing a one-year graph for both servers.

But I will surely try the new drivers and see if that helps. Thanks for your input, guys. Much appreciated.

-

RE: Memory Consumption goes higher day by day

@yann I can understand the buff/cache part, but this server has 1 TB of physical memory and only three VMs running, with 8G, 32G and 64G of allocated memory. Consuming all of the memory and holding it in cache is hard to understand. If it is being cached, something must be using it. Not sure if that makes sense, though.

Initially both our XCP hosts had 16G of control domain memory. When we started to face issues and alerts, we increased it to 32G, then 64G, and then 128G, and it has been at that level for a while now.

Now that we are not using vGPU, it no longer fills up within 2 days, the point at which alerts start saying the control domain memory has reached its limit.

-

RE: Memory Consumption goes higher day by day

@stormi Sure, we can try that. Thank you

-

RE: Memory Consumption goes higher day by day

@olivierlambert I believe it's something related to the NIC drivers, as we are running network-intensive guests on both servers.

We have a third server, which is running standalone. Its configuration is below, and only one guest runs on this host, which is XOA.

CPU - AMD Ryzen Threadripper PRO 3975WX 32-Cores 3500 MHz

Memory - 320G

Ethernet - 1G Ethernet

10G Fiber

intel-ixgbe-5.9.4-1.xcpng8.2.x86_64

XOA uses the 10G Ethernet link for backup/migration operations. This host does not cache as much memory, but it does cache some. It does not end up with all memory in cache, because fewer operations happen here.

-

RE: Memory Consumption goes higher day by day

@dhiraj26683 Thanks for replying, @olivierlambert.

Nothing as such other than the ice drivers. But we have not run any virtual GPU workstations for the last 3-4 months, so that kind of load is not present on any of our XCP hosts.

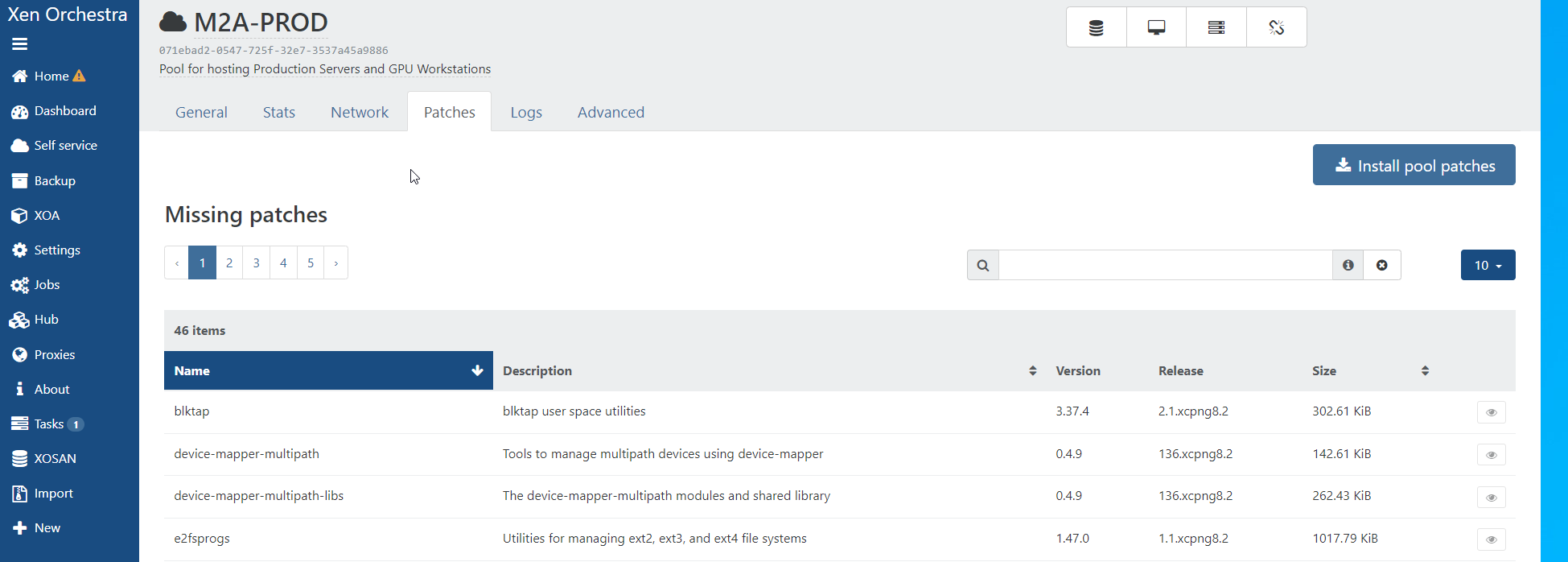

But as far as I can tell, this memory issue started recently, and the only change we make is pushing patches via XOA.

Because of this issue, where memory gets fully utilized (goes into cache) and notifications start saying that the Control Domain Load reached 100%, we have not pushed any patches for now.

-

RE: Memory Consumption goes higher day by day

@dhiraj26683 We tried to identify the process, but could not find anything. Only three guests are running on this server, and it is almost at the limit. Once all the memory goes into cache, we start getting notifications/alerts that the Control Domain Load reached 100% and that there may be service degradation.

-

RE: Memory Consumption goes higher day by day

@dhiraj26683

[14:26 xen-srv2 Dell-Drivers]$ rpm -qf /lib/modules/4.19.0+1/updates/ixgbe.ko

intel-ixgbe-5.9.4-1.xcpng8.2.x86_64

-

RE: Memory Consumption goes higher day by day

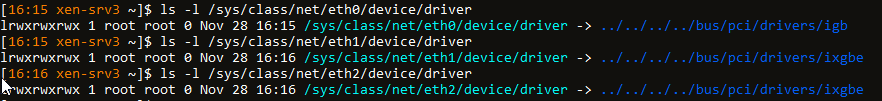

@dhiraj26683 Providing the ixgbe module info and rpm info for both servers. It's the stock driver that came with the system.

[13:59 xen-srv2 Dell-Drivers]$ modinfo ixgbe

filename: /lib/modules/4.19.0+1/updates/ixgbe.ko

version: 5.9.4

license: GPL

description: Intel(R) 10GbE PCI Express Linux Network Driver

author: Intel Corporation, linux.nics@intel.com

srcversion: AA8061C6A752528BD6CFE19

[13:45 xen-srv1 ~]$ modinfo ixgbe

filename: /lib/modules/4.19.0+1/updates/ixgbe.ko

version: 5.9.4

license: GPL

description: Intel(R) 10GbE PCI Express Linux Network Driver

author: Intel Corporation, linux.nics@intel.com

srcversion: AA8061C6A752528BD6CFE19

We tried the following ice module versions as well:

ice-1.10.1.2.2

ice-1.12.7

The behaviour is the same, so we downloaded the ice drivers from Dell and installed the available version, listed below. But it's still the same.

ice-1.11.14

-

RE: Memory Consumption goes higher day by day

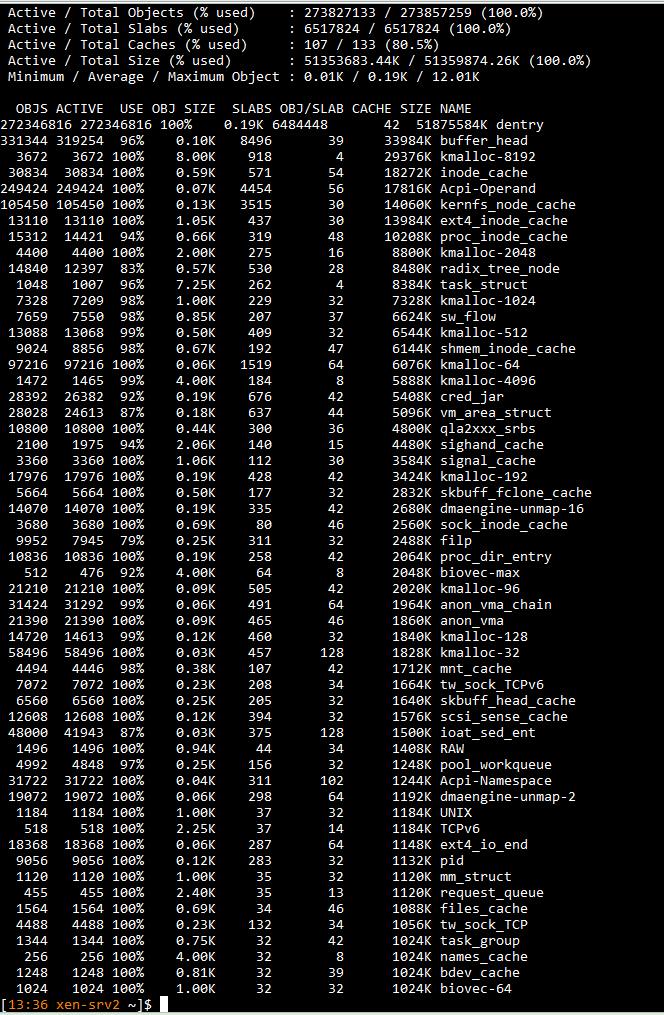

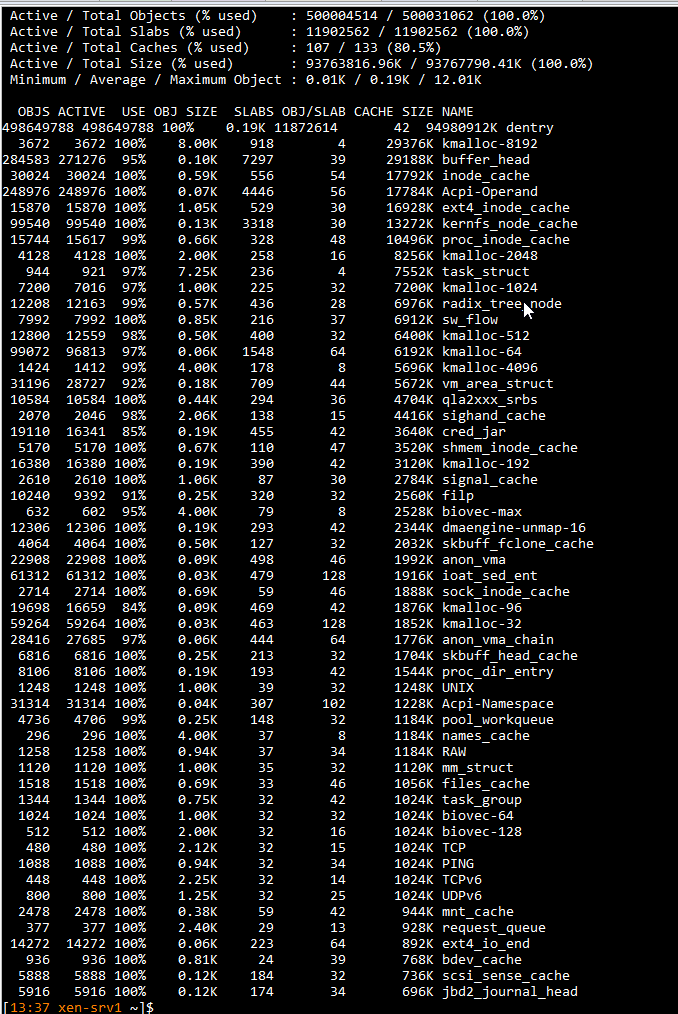

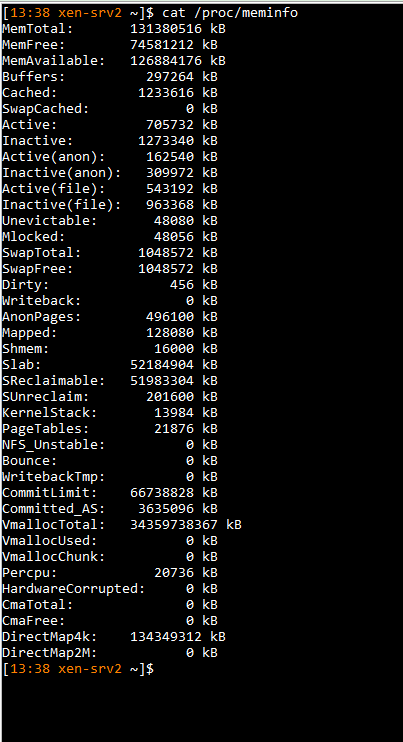

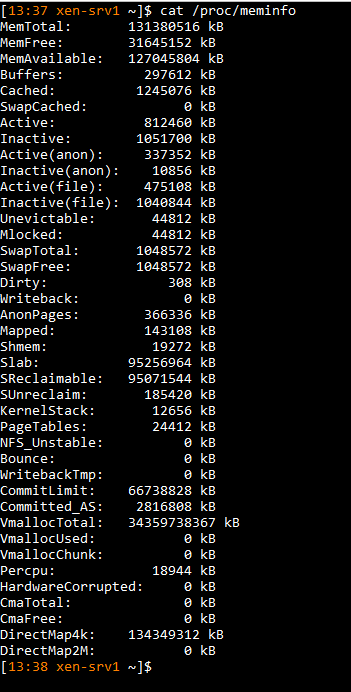

@dhiraj26683 Providing the output of the commands below:

slabtop -o -s c

cat /proc/meminfo
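When reading those two outputs together, it can help to separate page cache from kernel slab, since a driver-side leak would often show up as growing unreclaimable slab (`SUnreclaim`) rather than as page cache. The following is only a rough Python sketch: the helper names `parse_meminfo` and `breakdown` are hypothetical, and the sample values are made up for illustration, not taken from the hosts in this thread.

```python
# Illustrative /proc/meminfo excerpt. These numbers are invented for the
# example; on a real host, read the text from /proc/meminfo instead.
SAMPLE = """\
MemTotal:        1048576 kB
MemFree:          262144 kB
Buffers:           10240 kB
Cached:           512000 kB
Slab:             204800 kB
SReclaimable:     153600 kB
SUnreclaim:        51200 kB
"""


def parse_meminfo(text):
    """Return {field: value} from /proc/meminfo-style text (values in kB)."""
    values = {}
    for line in text.splitlines():
        name, sep, rest = line.partition(":")
        if not sep:
            continue  # skip lines that are not "Name: value" pairs
        parts = rest.split()
        if parts:
            values[name.strip()] = int(parts[0])
    return values


def kib_to_mib(kib):
    """Convert kB as reported by the kernel (really KiB) to whole MiB."""
    return kib // 1024


def breakdown(info):
    """Split cached memory into page cache and the two slab classes (MiB)."""
    return {
        "page_cache_mib": kib_to_mib(info.get("Cached", 0) + info.get("Buffers", 0)),
        "slab_reclaimable_mib": kib_to_mib(info.get("SReclaimable", 0)),
        "slab_unreclaimable_mib": kib_to_mib(info.get("SUnreclaim", 0)),
    }


print(breakdown(parse_meminfo(SAMPLE)))
```

Running this against snapshots taken a day apart would show which of the three figures is actually growing. If page cache dominates, the kernel should be able to reclaim it; a steadily rising `SUnreclaim` would point more towards a kernel module.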

-

RE: Memory Consumption goes higher day by day

@john-c @olivierlambert

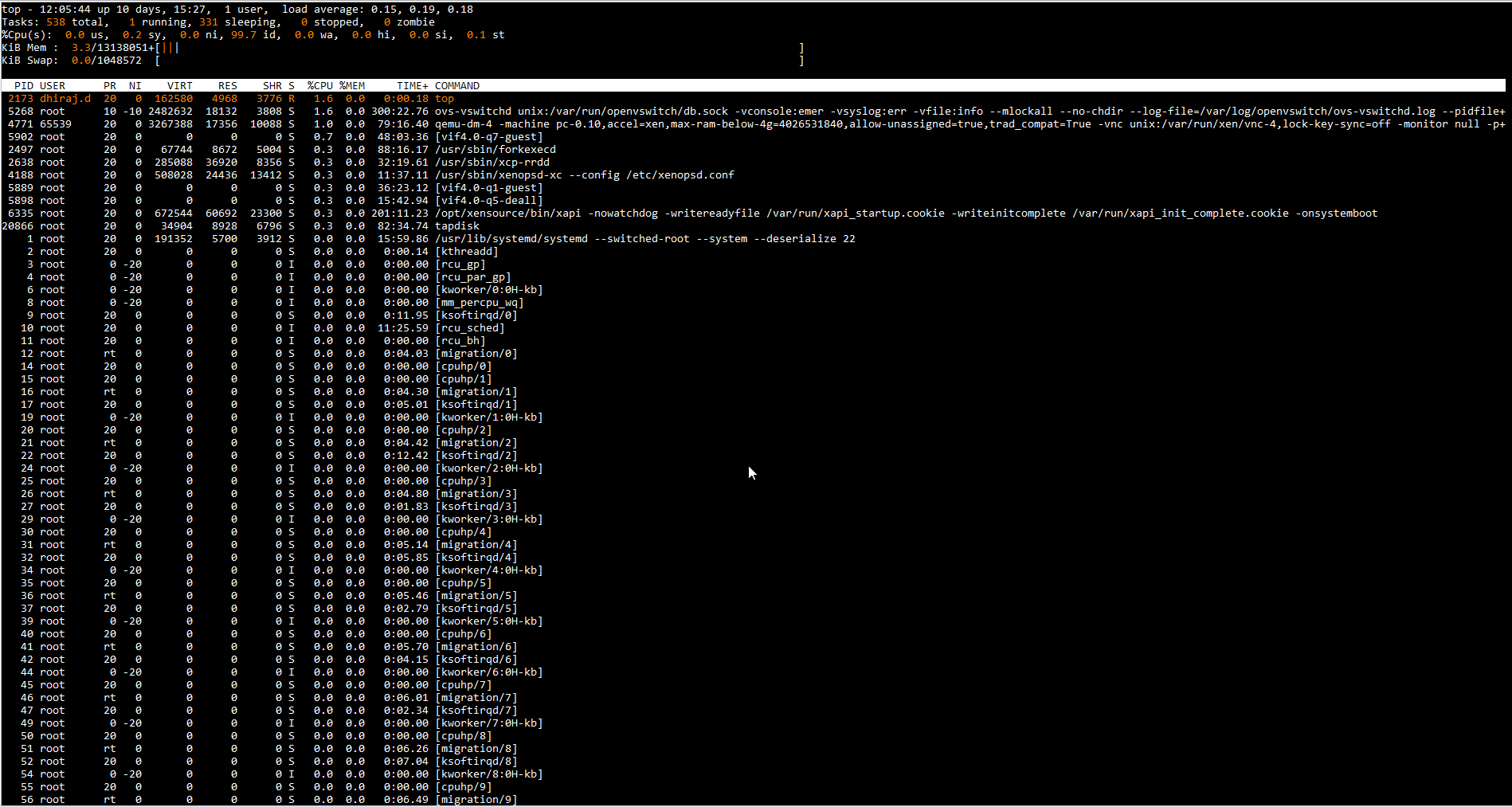

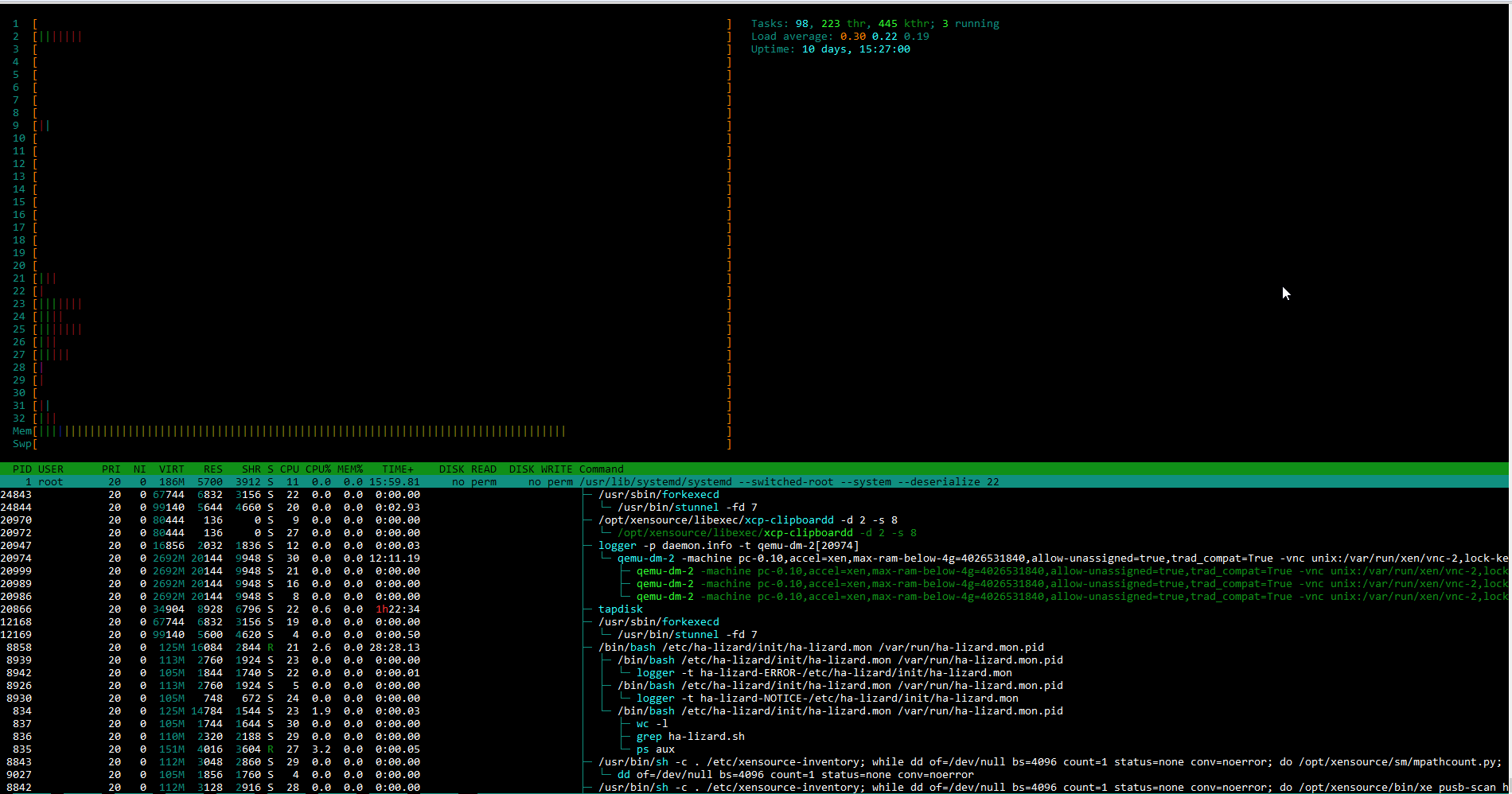

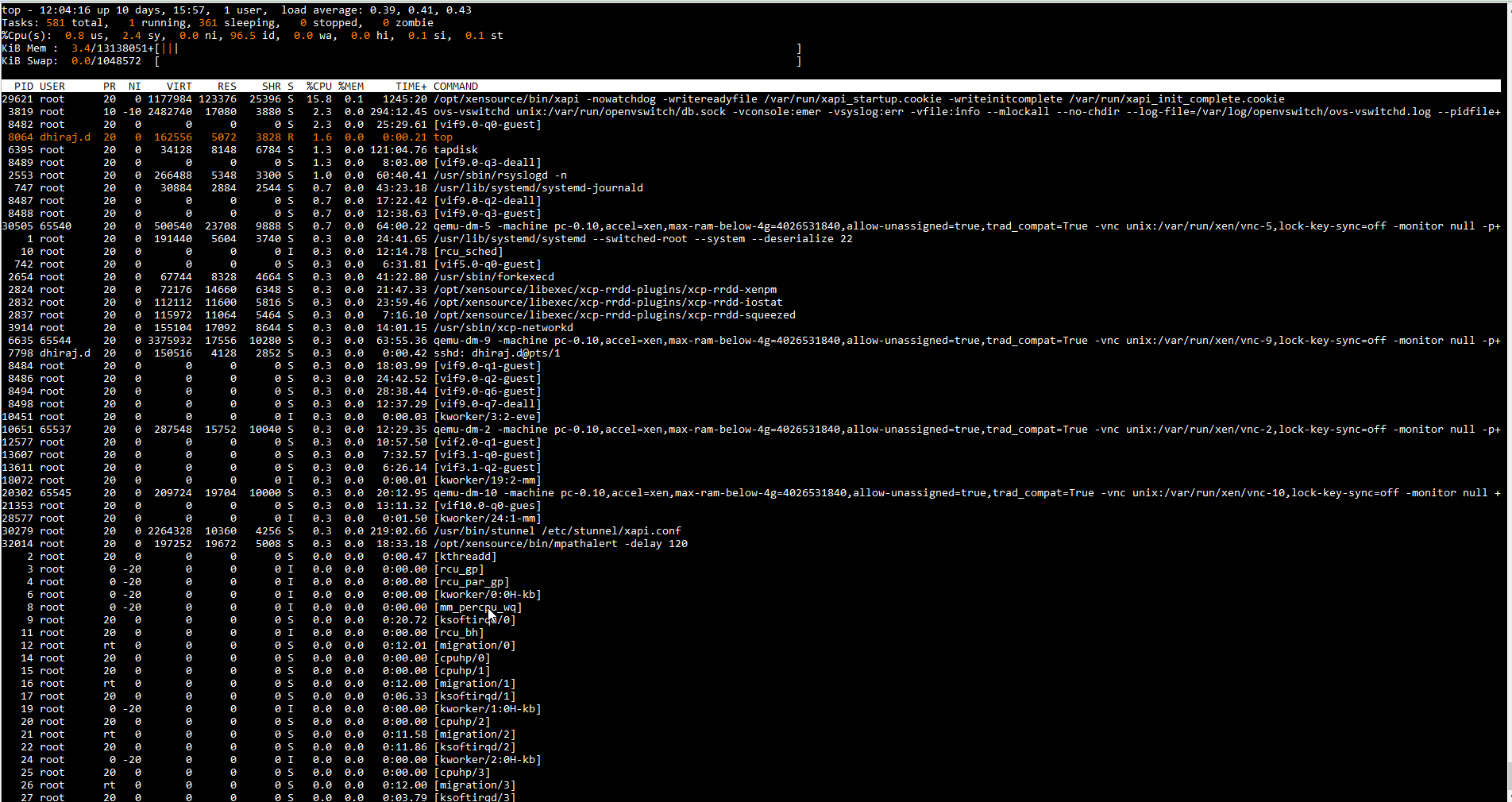

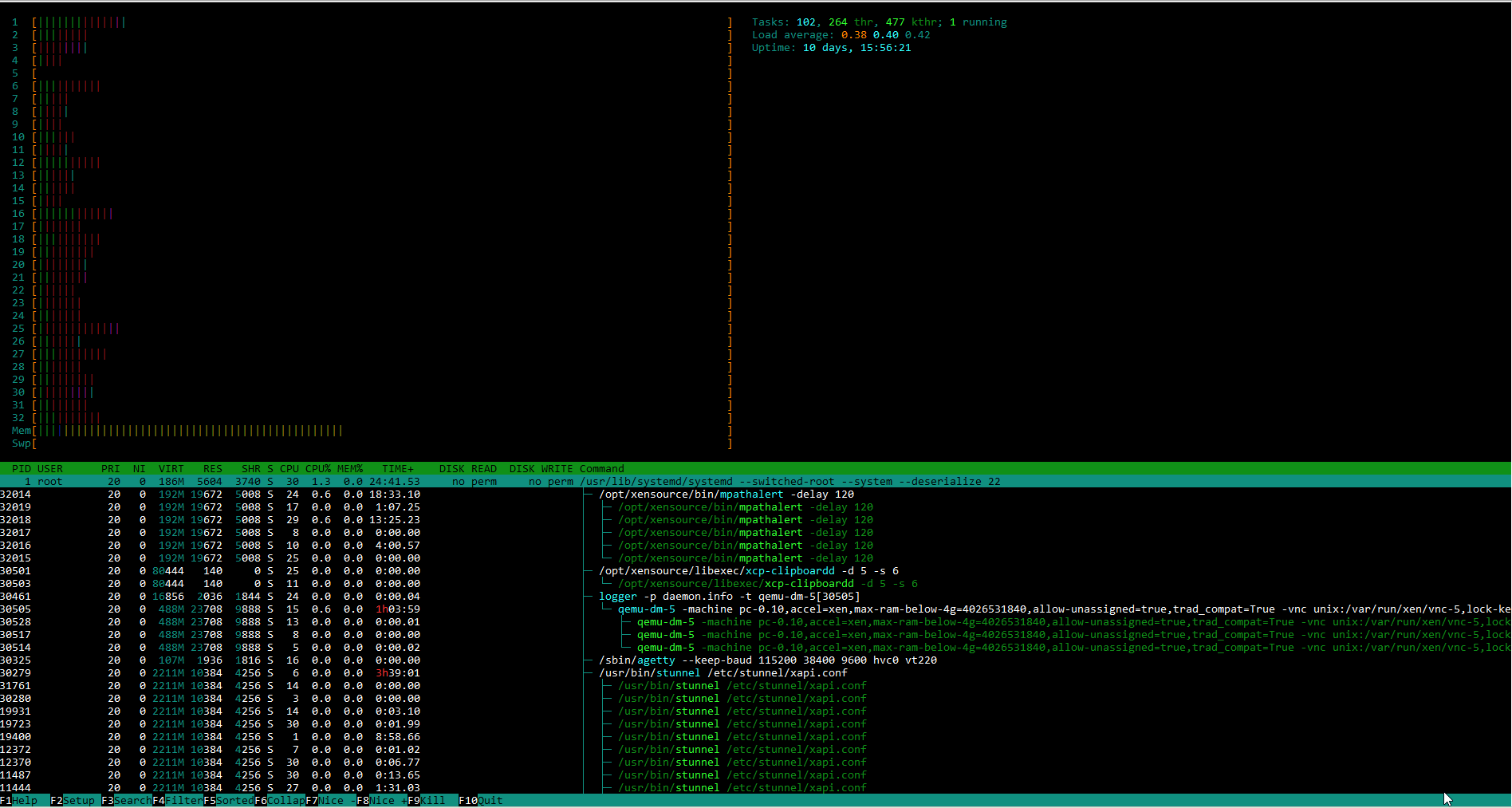

Attaching htop and top output from both servers.

-

Memory Consumption goes higher day by day

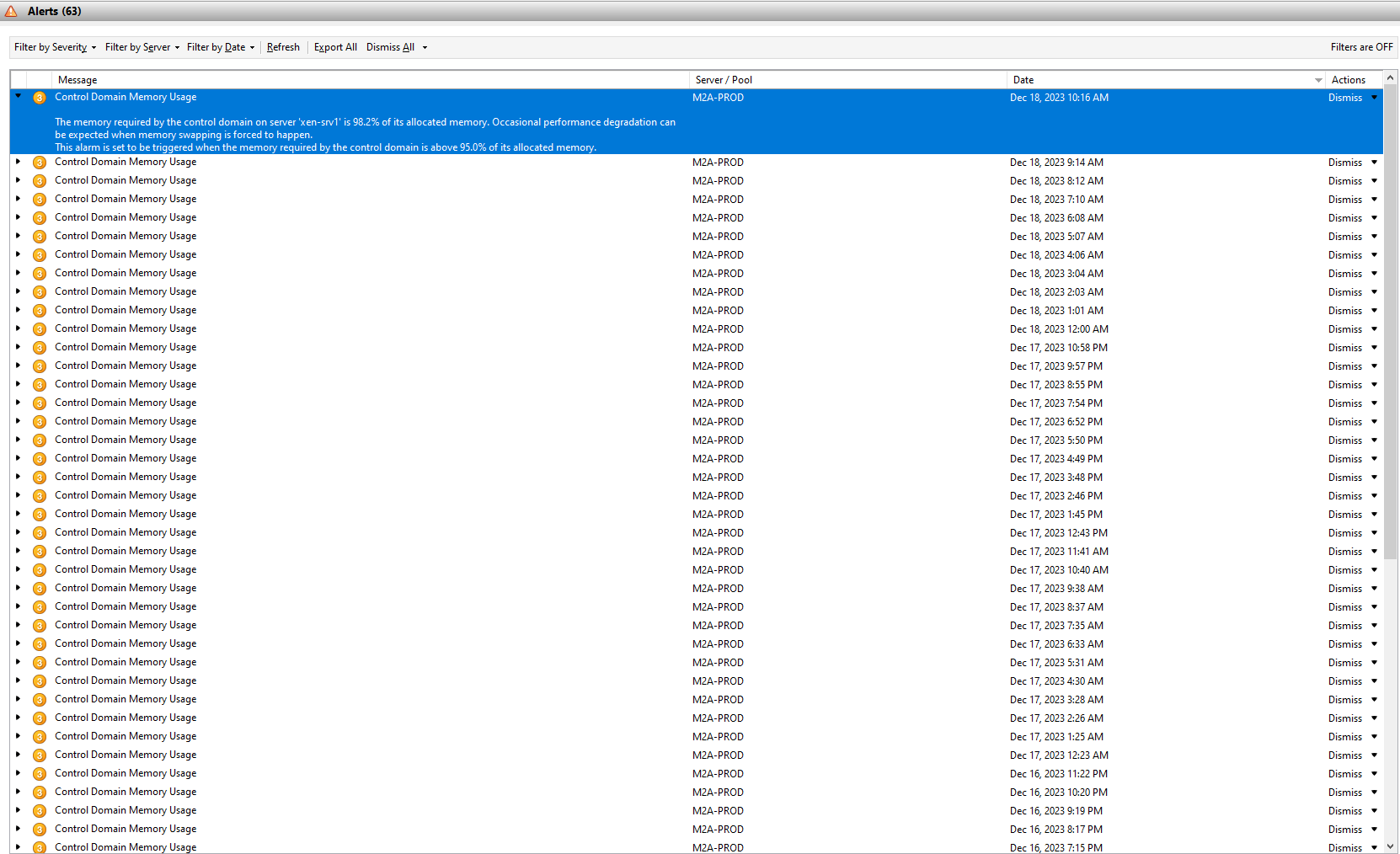

Re: Alert: Control Domain Memory Usage

Yes, it's not solving the issue. We have updated to the latest ice drivers as well. We need to restart the node to release the memory, but after a couple of days it reaches the same state.

We are on XCP-ng release 8.2.1

Network cards:

We have 1G Broadcom and Intel 10G/25G Ethernet/fiber cards. For now we are using only both 1G cards in a bond and a single fiber card with a 10G module.

[19:03 xen-srv2 ~]$ lspci | grep -i eth

04:00.0 Ethernet controller: Broadcom Inc. and subsidiaries NetXtreme BCM5720 Gigabit Ethernet PCIe

04:00.1 Ethernet controller: Broadcom Inc. and subsidiaries NetXtreme BCM5720 Gigabit Ethernet PCIe

32:00.0 Ethernet controller: Intel Corporation Ethernet Controller E810-C for SFP (rev 02)

32:00.1 Ethernet controller: Intel Corporation Ethernet Controller E810-C for SFP (rev 02)

32:00.2 Ethernet controller: Intel Corporation Ethernet Controller E810-C for SFP (rev 02)

32:00.3 Ethernet controller: Intel Corporation Ethernet Controller E810-C for SFP (rev 02)

b1:00.0 Ethernet controller: Intel Corporation Ethernet Controller E810-XXV for SFP (rev 02)

b1:00.1 Ethernet controller: Intel Corporation Ethernet Controller E810-XXV for SFP (rev 02)

b2:00.0 Ethernet controller: Intel Corporation Ethernet Controller E810-XXV for SFP (rev 02)

b2:00.1 Ethernet controller: Intel Corporation Ethernet Controller E810-XXV for SFP (rev 02)

[19:05 xen-srv2 ~]$ ls -l /sys/class/net/eth6/device/driver

lrwxrwxrwx 1 root root 0 Nov 22 18:05 /sys/class/net/eth6/device/driver -> ../../../../bus/pci/drivers/ice

[19:06 xen-srv2 ~]$ modinfo ice| less

filename: /lib/modules/4.19.0+1/updates/drivers/net/ethernet/intel/ice/ice.ko

firmware: intel/ice/ddp/ice.pkg

version: 1.11.14

license: GPL v2

description: Intel(R) Ethernet Connection E800 Series Linux Driver

author: Intel Corporation, <linux.nics@intel.com>

srcversion: ABA97333D32A1C8C8127E80

alias: pci:v00008086d00001888sv*sd*bc*sc*i*

alias: pci:v00008086d0000579Fsv*sd*bc*sc*i*

alias: pci:v00008086d0000579Esv*sd*bc*sc*i*

alias: pci:v00008086d0000579Dsv*sd*bc*sc*i*

alias: pci:v00008086d0000579Csv*sd*bc*sc*i*

alias: pci:v00008086d0000151Dsv*sd*bc*sc*i*

alias: pci:v00008086d0000124Fsv*sd*bc*sc*i*

alias: pci:v00008086d0000124Esv*sd*bc*sc*i*

alias: pci:v00008086d0000124Dsv*sd*bc*sc*i*

alias: pci:v00008086d0000124Csv*sd*bc*sc*i*

alias: pci:v00008086d0000189Asv*sd*bc*sc*i*

alias: pci:v00008086d00001899sv*sd*bc*sc*i*

alias: pci:v00008086d00001898sv*sd*bc*sc*i*

alias: pci:v00008086d00001897sv*sd*bc*sc*i*

alias: pci:v00008086d00001894sv*sd*bc*sc*i*

alias: pci:v00008086d00001893sv*sd*bc*sc*i*

alias: pci:v00008086d00001892sv*sd*bc*sc*i*

alias: pci:v00008086d00001891sv*sd*bc*sc*i*

alias: pci:v00008086d00001890sv*sd*bc*sc*i*

alias: pci:v00008086d0000188Esv*sd*bc*sc*i*

alias: pci:v00008086d0000188Dsv*sd*bc*sc*i*

alias: pci:v00008086d0000188Csv*sd*bc*sc*i*

alias: pci:v00008086d0000188Bsv*sd*bc*sc*i*

alias: pci:v00008086d0000188Asv*sd*bc*sc*i*

alias: pci:v00008086d0000159Bsv*sd*bc*sc*i*

alias: pci:v00008086d0000159Asv*sd*bc*sc*i*

alias: pci:v00008086d00001599sv*sd*bc*sc*i*

alias: pci:v00008086d00001593sv*sd*bc*sc*i*

alias: pci:v00008086d00001592sv*sd*bc*sc*i*

alias: pci:v00008086d00001591sv*sd*bc*sc*i*

depends: devlink,intel_auxiliary

retpoline: Y

name: ice

vermagic: 4.19.0+1 SMP mod_unload modversions

parm: debug:netif level (0=none,...,16=all) (int)

parm: fwlog_level:FW event level to log. All levels <= to the specified value are enabled. Values: 0=none, 1=error, 2=warning, 3=normal, 4=verbose. Invalid values: >=5 (ushort)

parm: fwlog_events:FW events to log (32-bit mask) (ulong)

[19:07 xen-srv2 ~]$ ethtool eth6

Settings for eth6:

Supported ports: [ FIBRE ]

Supported link modes: 1000baseT/Full 25000baseCR/Full 25000baseSR/Full 1000baseX/Full 10000baseCR/Full 10000baseSR/Full 10000baseLR/Full

Supported pause frame use: Symmetric

Supports auto-negotiation: No

Supported FEC modes: None

Advertised link modes: 25000baseSR/Full 10000baseSR/Full

Advertised pause frame use: No

Advertised auto-negotiation: No

Advertised FEC modes: None RS

Speed: 10000Mb/s

Duplex: Full

Port: FIBRE

PHYAD: 0

Transceiver: internal

Auto-negotiation: off

Cannot get wake-on-lan settings: Operation not permitted

Current message level: 0x00000007 (7) drv probe link

Link detected: yes

We have two servers with similar configurations (XEN-SRV1 and XEN-SRV2). The control domain memory fills up each time, so we increased it to 128G on both hosts. But strangely, that too gets used up. The VMs are not using that much memory, though.

Not sure why it grabs the memory and keeps it in cache when actual usage is not that high.

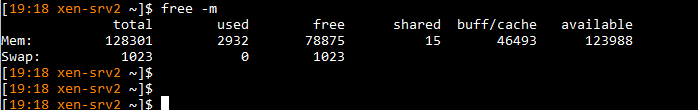

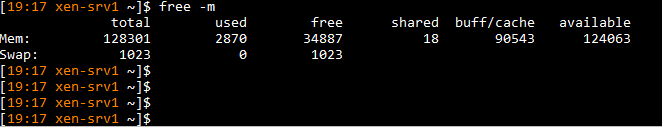

XEN-SRV2

[19:07 xen-srv2 ~]$ free -m

total used free shared buff/cache available

Mem: 128301 3012 78844 17 46444 123907

Swap: 1023 0 1023

XEN-SRV1

[14:48 xen-srv1 ~]$ free -m

total used free shared buff/cache available

Mem: 128301 2865 34909 18 90525 124068

Swap: 1023 0 1023

Attaching memory usage graph as well.
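For reading the `free -m` output above: the `available` column, rather than `free`, is the kernel's estimate of how much memory could be handed out without swapping, because most of `buff/cache` is normally reclaimable. A minimal Python sketch that turns a `Mem:` row into percentages follows; the helper name `summarize_free` is hypothetical and not part of any tool.

```python
def summarize_free(mem_row):
    """Parse the 'Mem:' row of `free -m` and return shares of total in percent."""
    fields = mem_row.split()
    if len(fields) < 7 or fields[0] != "Mem:":
        raise ValueError("expected a 'Mem:' row with six numeric columns")
    # Column order as printed by free: total used free shared buff/cache available
    total, used, free, shared, cache, available = (int(x) for x in fields[1:7])
    return {
        "used_pct": round(100 * used / total, 1),
        "cache_pct": round(100 * cache / total, 1),
        "available_pct": round(100 * available / total, 1),
    }


# Rows copied from the two hosts in this post.
srv2 = summarize_free("Mem: 128301 3012 78844 17 46444 123907")
srv1 = summarize_free("Mem: 128301 2865 34909 18 90525 124068")
print("xen-srv2", srv2)
print("xen-srv1", srv1)
```

With the figures from this post, xen-srv1 has roughly 71% of control domain memory in buff/cache while about 97% is still reported as available. That would suggest the cached memory is reclaimable rather than lost, although it does not by itself explain the alerts or the slowdown.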