From what it looks like...If XCP-ng could move to kernel 6.8+ the Intel SR-IOV option should be on the table. That's a pretty massive jump from 4.19 and I have no idea how much effort that would be for you guys.

Posts

-

RE: vGPU options for current XCP-ng?

-

RE: Native Ceph RBD SM driver for XCP-ng

Nothing fruitful to add....

But...

Oooof....

This will be somewhat messy to clean up. I'm rooting for you guys though!!

-

vGPU options for current XCP-ng?

I'm attempting to virtualize some media functions, and doing video work on raw CPU is just crazy. (I've got an app running at 80% on a second-gen 3GHz Epyc with 12 vCPUs.) I'm pretty sure that if I had a vGPU to throw at it, I could cut that down significantly.

From what I've seen, it looks like AMD is broken, NVidia works but requires expensive hardware and equally expensive licensing, and Intel isn't even remotely going to work.

Is that pretty much the state of things?

Thanks!

James

-

RE: Intel Flex GPU with SR-IOV for GPU accelarated VDIs

@olivierlambert While VDI is maybe not as vital as it once was...I'm experimenting with multimedia work in XCP-ng. Having a VM with GPU off-loading of CODEC encoding would be nice. It's a pretty big CPU hit to make that go.

-

RE: Intel Flex GPU with SR-IOV for GPU accelarated VDIs

@olivierlambert Ideally you need to be somewhere into Kernel 6. 6.12 is sticking out in my head, but I'm not positive when support got fully integrated.

-

RE: Intel Flex GPU with SR-IOV for GPU accelarated VDIs

@olivierlambert If I remember right, you should be able to see 62 VF's on that card. There might be a tool needed to define how many VF's are present like on a NIC.
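For reference, on Linux the usual way to see and set VF counts on an SR-IOV NIC is through the standard sysfs attributes `sriov_totalvfs` and `sriov_numvfs` under `/sys/bus/pci/devices/<address>/`. Below is a minimal sketch for reading them, assuming the GPU driver follows the same convention (not confirmed here for the Flex cards on XCP-ng). The PCI address is a placeholder and `read_vf_counts` is a helper name made up for this example.

```python
from pathlib import Path


def read_vf_counts(pci_address, sysfs_root="/sys/bus/pci/devices"):
    """Return (total_vfs, enabled_vfs) for a PCI device.

    Returns None if the device does not expose the SR-IOV sysfs attributes.
    """
    dev = Path(sysfs_root) / pci_address
    total = dev / "sriov_totalvfs"   # maximum VFs the device supports
    enabled = dev / "sriov_numvfs"   # VFs currently enabled
    if not (total.is_file() and enabled.is_file()):
        return None
    return int(total.read_text().strip()), int(enabled.read_text().strip())


if __name__ == "__main__":
    # Placeholder address (assumption); look up the real one with lspci.
    print(read_vf_counts("0000:00:00.0"))
```

On NICs, writing a number to `sriov_numvfs` as root is what enables that many VFs. Whether the Flex driver needs an additional tool on top of that is the open question.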

-

RE: Epyc VM to VM networking slow

@Forza said in Epyc VM to VM networking slow:

Would sr-iov with xoa help backup speeds?

If you specify the SR-IOV NIC, it will be wire-speed.

-

RE: Intel Flex GPU with SR-IOV for GPU accelarated VDIs

@olivierlambert From Intel's page:

"With up to 62 virtual functions based on hardware-enabled single-root input/output virtualization (SR-IOV) and no licensing fees, the Intel Data Center GPU Flex 140 delivers impeccable quality, flexibility, and productivity at scale."

-

RE: Intel Flex GPU with SR-IOV for GPU accelarated VDIs

Generally speaking...Just because you haven't had direct requests doesn't mean the feature isn't desired.

It's easy enough to look over the current feature list, assume it's not there because it's not listed, and move on to the next viable solution.

Proxmox and OpenShift seem to be killing it in this space with Intel Flex GPU's.

-

RE: Intel Flex GPU with SR-IOV for GPU accelarated VDIs

I can't believe that there's not much interest.

Anyway...As far as I know these are pretty well supported in newer kernels. I think you need to be fairly deep in kernel 6. Given that XCP-ng/XenServer is currently running on kernel 4 with a bunch of backports, this might be a little problematic.

Last I knew, Intel is not charging licensing fees for using vGPUs like NVidia does.

With a working driver in XCP-ng and no licensing fees, this could be a really cost-effective VDI platform.

-

RE: Nvidia P40s with XCP-ng 8.3 for inference and light training

I just added a P4 to one of my hosts for exactly this. My servers can only handle low-profile cards, so the P4 fits. Not the most powerful of GPU's but I can get my feet wet.

-

RE: Epyc VM to VM networking slow

The speeds with the latest 8.3 updates are still slower than on a 13-year-old Xeon E3 1230.

-

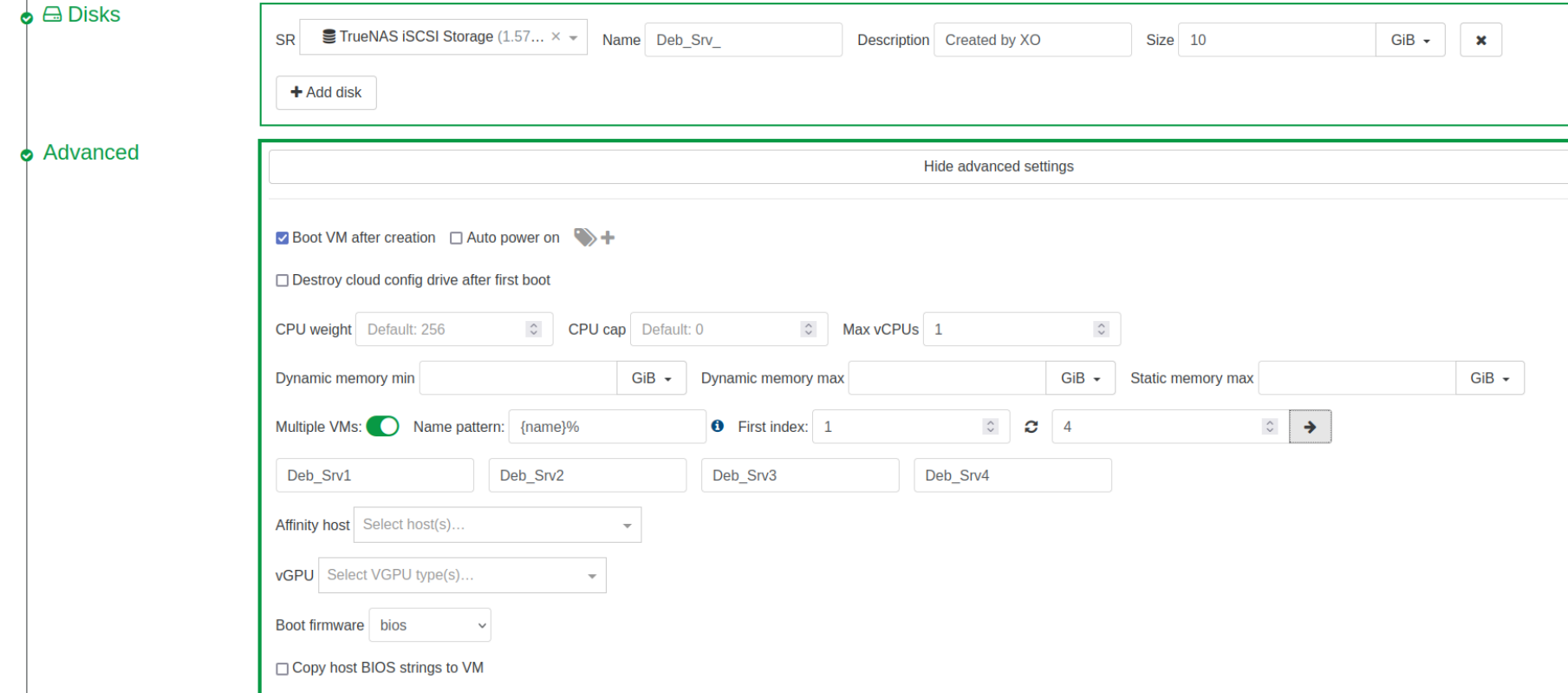

RE: XO deploy multiple VMs observation/issue

While not critical...It would be nice to have the same dialog for system disks as we do for system names in the multiple VM's section under "advanced." Seems like a really easy thing to add that would prevent someone from having to go back and rename disks later. The system is already creating a name, it's already giving us the ability to name the disks for singles, why not be able to name the disks like the systems for multiples?

-

RE: XO deploy multiple VMs observation/issue

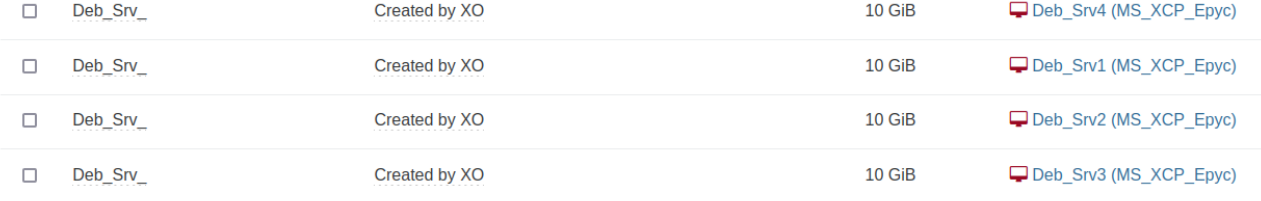

Okay...Updated to the latest commit. Still behaves the same.

-

RE: XO deploy multiple VMs observation/issue

@DustinB I'm on ee6fa, which is apparently 11 commits behind. I'm updating now and will retry a multiple VM deployment to see what happens.

My understanding is that the "name" of the disk doesn't really matter so much as that's more for us humans. The system mainly works off of UUID's which are unique.

I'll post back later.

-

XO deploy multiple VMs observation/issue

Using XO from sources (and presumably XOA) to deploy multiple VM's, there's a function to set the names for the VM's. I wish there was the same capability to name the disks.

I usually label the disks the same as the host with an _0 for first (boot) drive, and _"X" for any subsequent drives (if any).

So using XO, if I try to create three hosts with the advanced/multiple feature, I can set the host naming: Sys-VM-1, Sys-VM-2, Sys-VM-3, and I would like to define their respective system drives as Sys-VM-1_0, Sys-VM-2_0, and Sys-VM-3_0, but instead, I get three disks named the same as whatever I specified in the main VM disk creation dialog (Sys-VM-1_0). Yes...You can go back later and rename them, but it would be nice to just do it all from the main dialog and not have to go back later and clean up.
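To make the naming scheme concrete, here is a small Python sketch of the convention described above. It only illustrates the desired result. `disk_names` and its parameters are made up for this example and are not part of XO or its API.

```python
def disk_names(vm_names, disks_per_vm=1):
    """Map each VM name to disk labels of the form <vm>_<index>.

    Index 0 is the first (boot) disk; further disks count up from there.
    """
    return {vm: [f"{vm}_{i}" for i in range(disks_per_vm)] for vm in vm_names}


# Three VMs named the way the multiple-VM dialog names them.
vms = [f"Sys-VM-{n}" for n in range(1, 4)]
for vm, disks in disk_names(vms).items():
    print(vm, disks)
```

With one disk per VM this pairs Sys-VM-1 with Sys-VM-1_0, Sys-VM-2 with Sys-VM-2_0, and Sys-VM-3 with Sys-VM-3_0, instead of three disks that all carry the first name.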

-

RE: Epyc VM to VM networking slow

@Seneram If you search the forum you'll find other topics that discuss this. In January/February 2023 I reported it myself because I was trying to build a cluster that needed high-performance networking and found that the VM's couldn't do it. While researching the issue then, I seem to recall seeing other topics from a year or so prior to that.

Just because this one thread isn't two years old doesn't mean this is the only topic reporting the issue.

-

RE: Epyc VM to VM networking slow

While I'm very happy to see this getting some attention now, I am a bit disappointed that this has been reported for so long (easily two years or more) and is only now getting serious attention. Hopefully it will be resolved fairly soon.

That said...If you need high-speed networking in Epyc VM's now, SR-IOV can be your friend. Using ConnectX-4 25Gb cards I can hit 22-23Gb/s with guest VM's. Obviously SR-IOV brings along a whole other set of issues, but it's a way to get fast networking today.

-

RE: nVidia Tesla P4 for vgpu and Plex encoding

From my perspective, there's literally money on the ground for any virtualization platform to pick up VDI with Intel. The GPU's are affordable and performant for VDI work. They currently work with OpenShift, and Proxmox is at work on it.