Posts

-

RE: backup fail after last update

-

RE: backup fail after last update

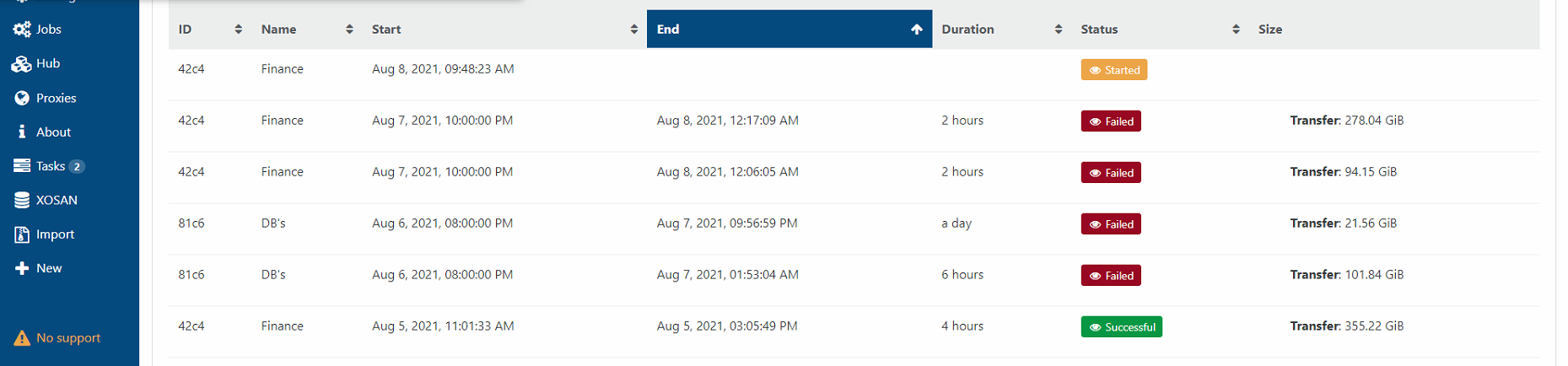

@tony the job failed, but not all the VMs failed. Some jobs have one VM fail, some have 4 VMs fail.

-

RE: backup fail after last update

@tony no, it's all my jobs. This is an example of a job that fails. I can give you all my jobs, but you'll see the same error.

If I run the jobs manually, even all together, everything works fine. But it's annoying and disturbing.

-

RE: backup fail after last update

@julien-f well, there is no other job on the VMs, and it happens on all my predefined jobs. Only the automatic (scheduled) runs have stopped working since the last update. I did not change anything in the jobs, and they worked fine for the past 4 months. I only updated the version of Xen Orchestra and the failures started.

-

backup fail after last update

All my backups are failing with 'Lock file is already being held'.

They run at night and fail.

When I start them manually they work fine... Please help.

Joe
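For anyone reading a job log like the one below, here is a minimal Python sketch that pulls out only the VM tasks that failed. It assumes the log keeps the shape shown in this post (a top-level `tasks` list whose entries carry `data.id`, `status`, and an optional `result` with `code` and `message`); the function name `failed_vm_tasks` and the trimmed sample are illustrative, not part of Xen Orchestra.

```python
import json


def failed_vm_tasks(log):
    """Return (vm_id, error_code, message) for each failed top-level VM task.

    Assumes the structure seen in the pasted log: log["tasks"] is a list of
    per-VM tasks, each with "status" and, on failure, a "result" object.
    """
    failures = []
    for task in log.get("tasks", []):
        if task.get("status") != "failure":
            continue
        result = task.get("result", {})
        failures.append((
            task.get("data", {}).get("id"),
            result.get("code"),     # e.g. "ELOCKED"; may be missing for other errors
            result.get("message"),
        ))
    return failures


# Trimmed-down sample with the same shape as the log in this post.
sample = json.loads("""
{
  "jobName": "Domain",
  "status": "failure",
  "tasks": [
    {"data": {"type": "VM", "id": "ca171dfc-261a-4ae8-0f3d-857b8e31a0e7"},
     "status": "success"},
    {"data": {"type": "VM", "id": "4203343e-3904-6d71-e207-23a9d7985112"},
     "status": "failure",
     "result": {"code": "ELOCKED",
                "message": "Lock file is already being held"}}
  ]
}
""")

for vm_id, code, message in failed_vm_tasks(sample):
    print(vm_id, code, message)
```

To use it on a real log, save the JSON to a file and pass `json.load(open(path))` instead of `sample`.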

{ "data": { "mode": "full", "reportWhen": "failure" }, "id": "1627495200006", "jobId": "d6eb656d-b0cc-4dc6-b9ef-18b6ca58b838", "jobName": "Domain", "message": "backup", "scheduleId": "d7fe0858-fc02-46f5-9626-bc380df1f993", "start": 1627495200006, "status": "failure", "infos": [ { "data": { "vms": [ "ca171dfc-261a-4ae8-0f3d-857b8e31a0e7", "3d523a78-1da6-39e6-b7ca-cec9ef3833f6", "4203343e-3904-6d71-e207-23a9d7985112", "4e1499d4-c27c-413d-6678-34a684951fc9", "68e574cb-1f7b-fb49-3c1a-4e4d4db0239f" ] }, "message": "vms" } ], "tasks": [ { "data": { "type": "VM", "id": "ca171dfc-261a-4ae8-0f3d-857b8e31a0e7" }, "id": "1627495200896", "message": "backup VM", "start": 1627495200896, "status": "success", "tasks": [ { "id": "1627495207124", "message": "snapshot", "start": 1627495207124, "status": "success", "end": 1627495266667, "result": "41d02d2b-9de4-88d8-43c8-53fc17c1cbfd" }, { "data": { "id": "f2ad79cd-6bbe-47ad-908f-bfef50d0400c", "type": "remote", "isFull": true }, "id": "1627495266715", "message": "export", "start": 1627495266715, "status": "success", "tasks": [ { "id": "1627495267668", "message": "transfer", "start": 1627495267668, "status": "success", "end": 1627503631984, "result": { "size": 128382542336 } } ], "end": 1627503633193 } ], "end": 1627503635631 }, { "data": { "type": "VM", "id": "3d523a78-1da6-39e6-b7ca-cec9ef3833f6" }, "id": "1627495200913", "message": "backup VM", "start": 1627495200913, "status": "success", "tasks": [ { "id": "1627495207126", "message": "snapshot", "start": 1627495207126, "status": "success", "end": 1627495220878, "result": "5db04304-7f98-d580-b054-90f34cdbfa81" }, { "data": { "id": "f2ad79cd-6bbe-47ad-908f-bfef50d0400c", "type": "remote", "isFull": true }, "id": "1627495220927", "message": "export", "start": 1627495220927, "status": "success", "tasks": [ { "id": "1627495220956", "message": "transfer", "start": 1627495220956, "status": "success", "end": 1627497418929, "result": { "size": 29895727616 } } ], "end": 1627497419120 } ], 
"end": 1627497421876 }, { "data": { "type": "VM", "id": "4203343e-3904-6d71-e207-23a9d7985112" }, "id": "1627497421876:0", "message": "backup VM", "start": 1627497421876, "status": "failure", "end": 1627497421901, "result": { "code": "ELOCKED", "file": "/mnt/Domain/xo-vm-backups/4203343e-3904-6d71-e207-23a9d7985112", "message": "Lock file is already being held", "name": "Error", "stack": "Error: Lock file is already being held\n at /opt/xen-orchestra/node_modules/proper-lockfile/lib/lockfile.js:68:47\n at callback (/opt/xen-orchestra/node_modules/graceful-fs/polyfills.js:299:20)\n at FSReqCallback.oncomplete (fs.js:193:5)\n at FSReqCallback.callbackTrampoline (internal/async_hooks.js:134:14)" } }, { "data": { "type": "VM", "id": "4e1499d4-c27c-413d-6678-34a684951fc9" }, "id": "1627497421901:0", "message": "backup VM", "start": 1627497421901, "status": "failure", "end": 1627497421917, "result": { "code": "ELOCKED", "file": "/mnt/Domain/xo-vm-backups/4e1499d4-c27c-413d-6678-34a684951fc9", "message": "Lock file is already being held", "name": "Error", "stack": "Error: Lock file is already being held\n at /opt/xen-orchestra/node_modules/proper-lockfile/lib/lockfile.js:68:47\n at callback (/opt/xen-orchestra/node_modules/graceful-fs/polyfills.js:299:20)\n at FSReqCallback.oncomplete (fs.js:193:5)\n at FSReqCallback.callbackTrampoline (internal/async_hooks.js:134:14)" } }, { "data": { "type": "VM", "id": "68e574cb-1f7b-fb49-3c1a-4e4d4db0239f" }, "id": "1627497421918", "message": "backup VM", "start": 1627497421918, "status": "success", "tasks": [ { "id": "1627497421964", "message": "snapshot", "start": 1627497421964, "status": "success", "end": 1627497429017, "result": "a6af0609-80f7-4f7a-e47f-6fbbbd2c5454" }, { "data": { "id": "f2ad79cd-6bbe-47ad-908f-bfef50d0400c", "type": "remote", "isFull": true }, "id": "1627497429132", "message": "export", "start": 1627497429132, "status": "success", "tasks": [ { "id": "1627497429621", "message": "transfer", "start": 1627497429621, 
"status": "success", "end": 1627500681632, "result": { "size": 38368017408 } } ], "end": 1627500682070 } ], "end": 1627500684793 } ], "end": 1627503635631 }

-

RE: Import VHD

@darkbeldin how can an issue in the browser account for an SR becoming unavailable in Xen Orchestra, or a host being detached from it? It happens even when I look at Xen Orchestra from another machine!

-

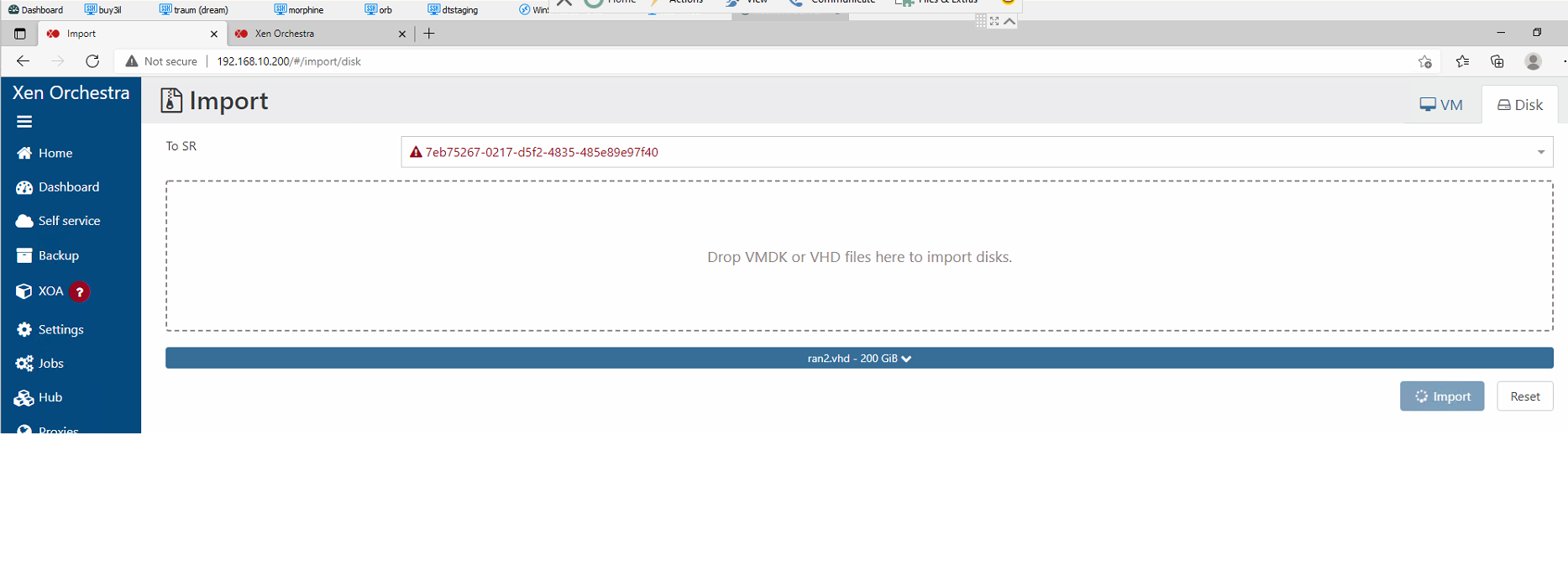

Import VHD

Hi,

I'm trying to import a VHD created by Windows Disk2vhd and I get the strangest behavior:

- In the middle of the import, the SR I'm trying to import into becomes unavailable.

- The owner host of the SR disappears from the host list.

- The import continues for a few hours and then stops, and the new disk is not available.

Attached is an example.

Joe

-

RE: VM Backup Folders

@danp I want to delete old backups and move the new one to another location.

-

VM Backup Folders

When I create a backup job with several VMs, how do I determine which folder in the NFS share belongs to each VM?
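Judging by the paths that show up in the error logs elsewhere on this page (for example `/mnt/Domain/xo-vm-backups/4203343e-3904-6d71-e207-23a9d7985112`), each VM appears to get its own folder under `xo-vm-backups` on the remote, named after the VM's UUID. On that assumption, a small Python sketch can list the folders and label them from a UUID-to-name table you fill in yourself. The function name `label_backup_folders`, the VM name `domain-controller`, and the temporary directory used for the demo are all placeholders.

```python
from pathlib import Path
import tempfile


def label_backup_folders(remote_root, vm_names):
    """Map each folder under <remote_root>/xo-vm-backups to a VM name.

    vm_names is a dict {vm_uuid: human_readable_name} supplied by the caller.
    Folders whose name is not in the dict are labelled "<unknown VM>".
    """
    backups_dir = Path(remote_root) / "xo-vm-backups"
    labelled = {}
    for entry in sorted(backups_dir.iterdir()):
        if entry.is_dir():
            labelled[entry.name] = vm_names.get(entry.name, "<unknown VM>")
    return labelled


# Demo against a throwaway directory so the sketch runs anywhere.
# On a real system remote_root would be the mount point of the NFS remote.
with tempfile.TemporaryDirectory() as root:
    for uuid in ("4203343e-3904-6d71-e207-23a9d7985112",
                 "4e1499d4-c27c-413d-6678-34a684951fc9"):
        (Path(root) / "xo-vm-backups" / uuid).mkdir(parents=True)

    names = {"4203343e-3904-6d71-e207-23a9d7985112": "domain-controller"}  # placeholder name
    demo_result = label_backup_folders(root, names)
    for folder, name in demo_result.items():
        print(folder, "->", name)
```

This only reads directory names, so it is safe to run before deciding which old backups to delete or move.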

-

RE: backup failed

@danp

The log shows only the start and stop of xo-server, 4 days ago. I tried increasing the number of lines to 1000 and got the same result: nothing about the backup.

-

RE: backup failed

@danp

Using:

journalctl -u xo-server -f -n 50

gives nothing about the backup.

-

RE: backup failed

@julien-f can you tell me how to do that? As I said, I'm a Windows guy, not Linux, so you'll have to walk me through the process...

-

RE: backup failed
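The `start` and `end` values in the log below appear to be Unix epoch times in milliseconds. On that assumption, this Python sketch converts them to readable UTC times and shows how long each VM task ran, which makes it easier to see that the failing tasks end within seconds of starting. The helper names `ms_to_utc` and `task_durations` are illustrative, and the sample is a trimmed copy of two tasks from the log.

```python
from datetime import datetime, timedelta, timezone


def ms_to_utc(ms):
    """Convert epoch milliseconds to an aware UTC datetime (integer math only)."""
    seconds, millis = divmod(ms, 1000)
    return datetime.fromtimestamp(seconds, tz=timezone.utc) + timedelta(milliseconds=millis)


def task_durations(log):
    """Return (vm_id, status, start_utc, duration_seconds) per top-level task."""
    rows = []
    for task in log.get("tasks", []):
        start, end = task.get("start"), task.get("end")
        if start is None or end is None:
            continue  # task still running or log truncated
        rows.append((task["data"]["id"], task["status"],
                     ms_to_utc(start), (end - start) / 1000))
    return rows


# Two tasks copied (trimmed) from the log in this post.
sample = {
    "start": 1623351600009,
    "tasks": [
        {"data": {"type": "VM", "id": "5402c8be-e33f-d762-0fea-c6052d831aa1"},
         "start": 1623351600765, "end": 1623351606719, "status": "failure"},
        {"data": {"type": "VM", "id": "f81f70bf-b6a7-7fa8-dfcd-6e0ff296d077"},
         "start": 1623351600780, "end": 1623351898277, "status": "success"},
    ],
}

print("job start (UTC):", ms_to_utc(sample["start"]).isoformat())
for vm_id, status, started, seconds in task_durations(sample):
    print(f"{vm_id}  {status:8}  {started:%H:%M:%S}  {seconds:.1f}s")
```

Under the epoch-milliseconds assumption the job start works out to 19:00 UTC on 2021-06-10, which would be consistent with the 22:00 schedule in the job name if the server's clock is at UTC+3 (a guess, not something the log states).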

{ "data": { "mode": "full", "reportWhen": "failure" }, "id": "1623351600009", "jobId": "05d242c4-b048-41bd-8ed7-3c32b388ab55", "jobName": "Finance (Every 3 Days at 22:00)", "message": "backup", "scheduleId": "e493f947-a723-4ba1-95c2-5df1bdd762f5", "start": 1623351600009, "status": "failure", "infos": [ { "data": { "vms": [ "5402c8be-e33f-d762-0fea-c6052d831aa1", "010d5f6c-b6cc-94da-9853-3a61d542ba60", "f81f70bf-b6a7-7fa8-dfcd-6e0ff296d077", "ee5b6776-bbe1-7de2-3d8c-815d44599253", "9536ea02-0ea6-d69e-0ba6-b02672ff4d18", "ebb1bdec-e2d3-4b7e-52f7-a5e880c0644a", "21a40b8c-3c7e-16dd-cda9-bf9a4cf4a212", "4872fef3-1e2b-6169-439c-5e831859175e", "ddf2b801-f18a-89ec-b82f-5183bab519e9" ] }, "message": "vms" } ], "tasks": [ { "data": { "type": "VM", "id": "5402c8be-e33f-d762-0fea-c6052d831aa1" }, "id": "1623351600765:0", "message": "backup VM", "start": 1623351600765, "status": "failure", "end": 1623351606719, "result": { "message": "all targets have failed, step: writer.beforeBackup()", "name": "Error", "stack": "Error: all targets have failed, step: writer.beforeBackup()\n at VmBackup._callWriters (/opt/xen-orchestra/@xen-orchestra/backups/_VmBackup.js:118:13)\n at async VmBackup.run (/opt/xen-orchestra/@xen-orchestra/backups/_VmBackup.js:343:5)" } }, { "data": { "type": "VM", "id": "010d5f6c-b6cc-94da-9853-3a61d542ba60" }, "id": "1623351600780", "message": "backup VM", "start": 1623351600780, "status": "failure", "end": 1623351606719, "result": { "message": "all targets have failed, step: writer.beforeBackup()", "name": "Error", "stack": "Error: all targets have failed, step: writer.beforeBackup()\n at VmBackup._callWriters (/opt/xen-orchestra/@xen-orchestra/backups/_VmBackup.js:118:13)\n at async VmBackup.run (/opt/xen-orchestra/@xen-orchestra/backups/_VmBackup.js:343:5)" } }, { "data": { "type": "VM", "id": "f81f70bf-b6a7-7fa8-dfcd-6e0ff296d077" }, "id": "1623351600780:0", "message": "backup VM", "start": 1623351600780, "status": "success", "tasks": [ { "id": 
"1623351607155", "message": "snapshot", "start": 1623351607155, "status": "success", "end": 1623351615220, "result": "51934b74-e52c-4cf7-6301-258f05a6096c" }, { "data": { "id": "6c2bf055-1260-401f-ac8e-d0e69b03b970", "type": "remote", "isFull": true }, "id": "1623351615245", "message": "export", "start": 1623351615245, "status": "success", "tasks": [ { "id": "1623351615308", "message": "transfer", "start": 1623351615308, "status": "success", "end": 1623351893293, "result": { "size": 2739784704 } } ], "end": 1623351894456 } ], "end": 1623351898277 }, { "data": { "type": "VM", "id": "ee5b6776-bbe1-7de2-3d8c-815d44599253" }, "id": "1623351606719:0", "message": "backup VM", "start": 1623351606719, "status": "success", "tasks": [ { "id": "1623351607170", "message": "snapshot", "start": 1623351607170, "status": "success", "end": 1623351660079, "result": "1618c328-f5d0-ba0a-12f6-873b8add4ba1" }, { "data": { "id": "6c2bf055-1260-401f-ac8e-d0e69b03b970", "type": "remote", "isFull": true }, "id": "1623351660103", "message": "export", "start": 1623351660103, "status": "success", "tasks": [ { "id": "1623351660736", "message": "transfer", "start": 1623351660736, "status": "success", "end": 1623352583590, "result": { "size": 6931830272 } } ], "end": 1623352584082 } ], "end": 1623352587494 }, { "data": { "type": "VM", "id": "9536ea02-0ea6-d69e-0ba6-b02672ff4d18" }, "id": "1623351606719:2", "message": "backup VM", "start": 1623351606719, "status": "success", "tasks": [ { "id": "1623351607172", "message": "snapshot", "start": 1623351607172, "status": "success", "end": 1623351637850, "result": "55148313-ac49-073a-480d-076e7adc8215" }, { "data": { "id": "6c2bf055-1260-401f-ac8e-d0e69b03b970", "type": "remote", "isFull": true }, "id": "1623351637875", "message": "export", "start": 1623351637875, "status": "success", "tasks": [ { "id": "1623351638535", "message": "transfer", "start": 1623351638535, "status": "success", "end": 1623363752942, "result": { "size": 256111958016 } } ], 
"end": 1623363753843 } ], "end": 1623363770261 }, { "data": { "type": "VM", "id": "ebb1bdec-e2d3-4b7e-52f7-a5e880c0644a" }, "id": "1623351898278", "message": "backup VM", "start": 1623351898278, "status": "failure", "end": 1623351904526, "result": { "message": "all targets have failed, step: writer.beforeBackup()", "name": "Error", "stack": "Error: all targets have failed, step: writer.beforeBackup()\n at VmBackup._callWriters (/opt/xen-orchestra/@xen-orchestra/backups/_VmBackup.js:118:13)\n at async VmBackup.run (/opt/xen-orchestra/@xen-orchestra/backups/_VmBackup.js:343:5)" } }, { "data": { "type": "VM", "id": "21a40b8c-3c7e-16dd-cda9-bf9a4cf4a212" }, "id": "1623351904527", "message": "backup VM", "start": 1623351904527, "status": "success", "tasks": [ { "id": "1623351905474", "message": "snapshot", "start": 1623351905474, "status": "success", "end": 1623351912328, "result": "5d45e921-a934-01db-32ac-abc5a8e23322" }, { "data": { "id": "6c2bf055-1260-401f-ac8e-d0e69b03b970", "type": "remote", "isFull": true }, "id": "1623351912370", "message": "export", "start": 1623351912370, "status": "success", "tasks": [ { "id": "1623351913333", "message": "transfer", "start": 1623351913333, "status": "success", "end": 1623352708494, "result": { "size": 40308589056 } } ], "end": 1623352709017 } ], "end": 1623352710173 }, { "data": { "type": "VM", "id": "4872fef3-1e2b-6169-439c-5e831859175e" }, "id": "1623352587495", "message": "backup VM", "start": 1623352587495, "status": "failure", "end": 1623352588661, "result": { "message": "all targets have failed, step: writer.beforeBackup()", "name": "Error", "stack": "Error: all targets have failed, step: writer.beforeBackup()\n at VmBackup._callWriters (/opt/xen-orchestra/@xen-orchestra/backups/_VmBackup.js:118:13)\n at async VmBackup.run (/opt/xen-orchestra/@xen-orchestra/backups/_VmBackup.js:343:5)" } }, { "data": { "type": "VM", "id": "ddf2b801-f18a-89ec-b82f-5183bab519e9" }, "id": "1623352588661:0", "message": "backup VM", "start": 
1623352588661, "status": "success", "tasks": [ { "id": "1623352588716", "message": "snapshot", "start": 1623352588716, "status": "success", "end": 1623352670691, "result": "201861e7-142a-ebe4-61ba-b86577dfcc41" }, { "data": { "id": "6c2bf055-1260-401f-ac8e-d0e69b03b970", "type": "remote", "isFull": true }, "id": "1623352670744", "message": "export", "start": 1623352670744, "status": "success", "tasks": [ { "id": "1623352671666", "message": "transfer", "start": 1623352671666, "status": "success", "end": 1623358080755, "result": { "size": 9415387136 } } ], "end": 1623358080960 } ], "end": 1623358083272 } ], "end": 1623363770261 }

-

RE: backup failed

I'm also on Debian 10, but I'm running as root. I still have the problem; it happened again this morning. Running the backup again succeeds...