@Theoi-Meteoroi said in How to kubernetes on xcp-ng (csi?):

I've been using this with NVMe on 3 Dell 7920 boxen with PCI passthru.

https://github.com/piraeusdatastore/piraeus-operator

It worked well enough that I installed the rest of the NVMe slots to have 7TB per node. I pin the master kubernetes nodes each to a physical node, I use 3 so I can roll updates and patches. The masters serve the storage out to containers - so the workers are basically "storage-less". Those worker nodes can move around. All the networking is 10G with 4 interfaces, so I have one specifically as the backend for this.

Just one note on handing devices to the operator - I use raw NVMe disk.

There can't be any partition or PV on the device. I put a PV on, then erase it so the disk is wiped. Then the operator finds the disk usable an initializes. It tries to not use a disk that seems in use already.

I also played a bit with XOSTOR but on spinning rust. Its really robust with the DRBD backend once you get used to working with it. Figuring out object relationships will have you maybe drink more than usual.

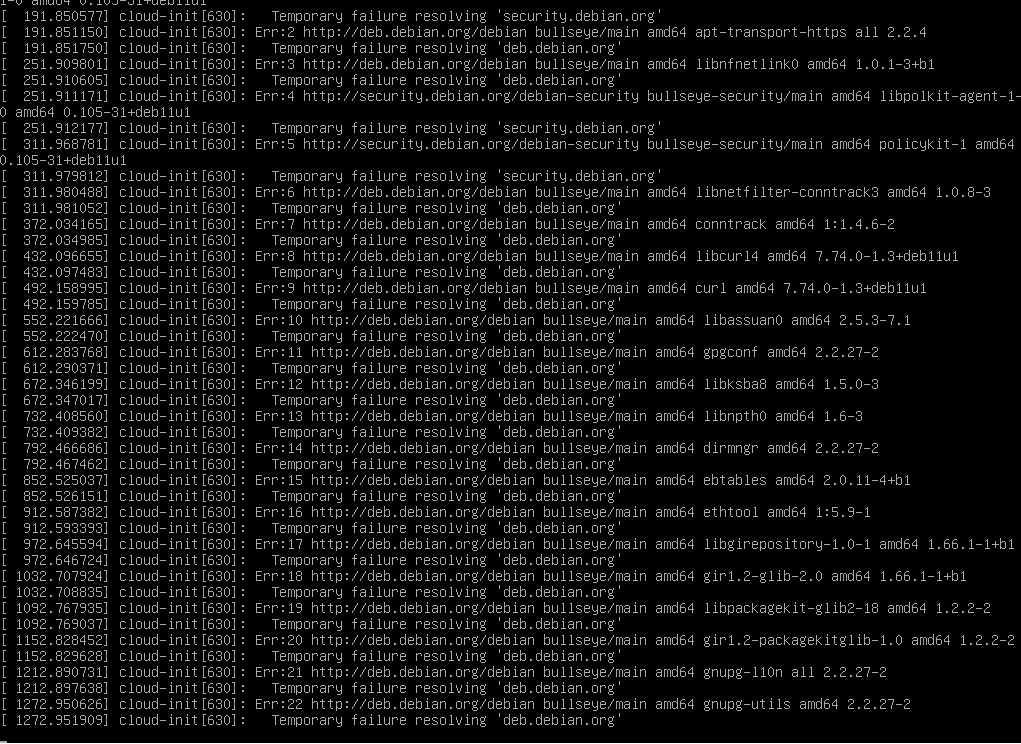

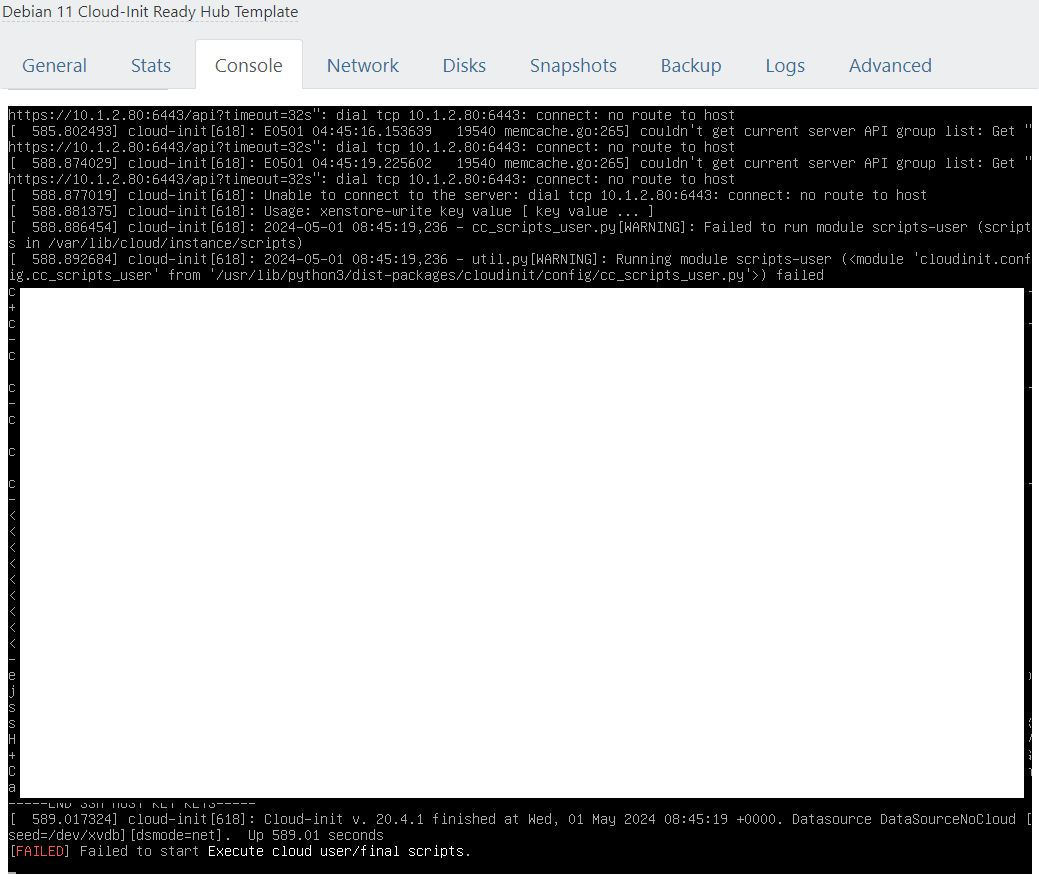

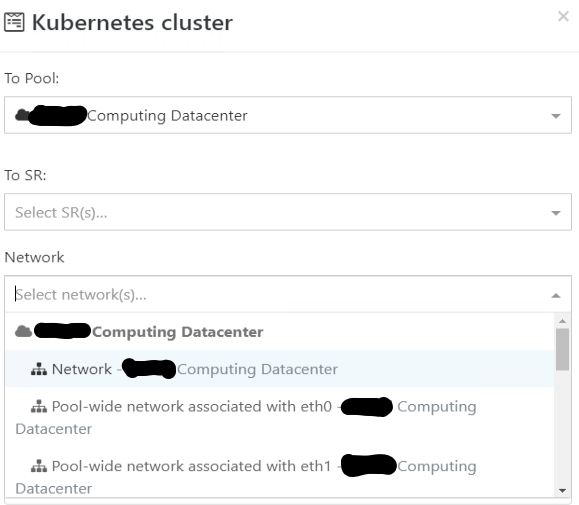

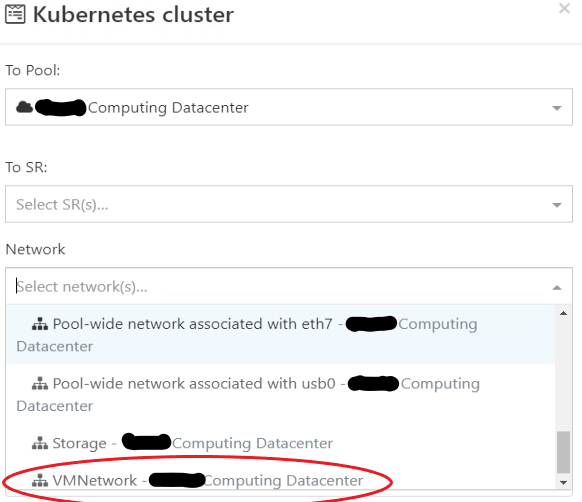

Did you use the built-in Recipes to create the kubernetes cluster? I tried NVMe, iCSCI, SSD, NFS Share. All the same thing.