@davx8342 There is a Packer plugin for XCP-ng : https://github.com/ddelnano/packer-plugin-xenserver

I use it in my homelab, and I have some public manifests on my GitHub profile if you want examples: https://github.com/ruskofd/xcp-ng-images

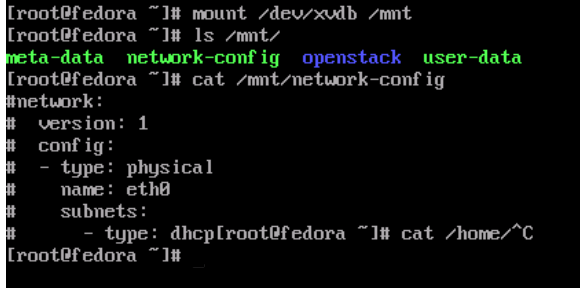

Regarding the logs on the machine where the network configuration is commented out, there is this error: 2021-06-20 21:22:59,657 - util.py[WARNING]: Getting data from <class 'cloudinit.sources.DataSourceNoCloud.DataSourceNoCloudNet'> failed

No other errors, but this one seems sufficient to break the provisioning.

Commented :

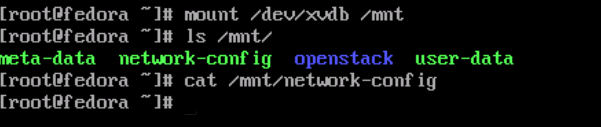

Empty :

Seems consistent imo.

I can confirm this behavior :

@olivierlambert I must be blind, no other explanation...

Hello everyone !

I have a question about memory in general with XCP-ng (pure curiosity).

I have my homelab host with 64GB of RAM, so the Dom0 memory is about 4.2GB, all fine (and it's correct according to the calculation provided by Citrix: https://docs.citrix.com/en-us/citrix-hypervisor/memory-usage.html)

But when I looked at the memory stats graphs in Xen Orchestra, they show 5.28 GB used on the host (with no VM running). Using the xe CLI, I got the same figure (using the commands provided at the link above).

Is there some kind of "overhead" or memory reservation by the Xen hypervisor itself under the hood (or something else) ? I can't find any information about this.

IIRC ESXi has a more or less similar behavior where it reserves some memory for its own operations, dunno if it's the same here with XS/XCP-ng.
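To put a number on the gap I'm asking about, here is a quick sketch of the arithmetic. It only uses the figures quoted above (64 GB host, about 4.2 GB Dom0, 5.28 GB reported as used); the variable and function names are mine, and it assumes both tools report in the same unit.

```python
# Figures quoted in the post; assumed to be in the same unit (GB).
HOST_RAM_GB = 64.0
DOM0_GB = 4.2
USED_GB = 5.28  # reported by Xen Orchestra and xe with no VM running


def unattributed_memory(used_gb: float, dom0_gb: float) -> float:
    """Memory reported as used that is not accounted for by Dom0."""
    return round(used_gb - dom0_gb, 2)


gap_gb = unattributed_memory(USED_GB, DOM0_GB)
share_pct = round(100 * gap_gb / HOST_RAM_GB, 2)

print(f"{gap_gb} GB not attributed to Dom0 ({share_pct}% of host RAM)")
```

So roughly 1 GB is used by something other than Dom0, which is the part I can't explain.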

Nested virt is quite random and sometimes not viable. I already had a case where nested virt wouldn't work on a recent i7 and worked wonderfully on an old CPU.

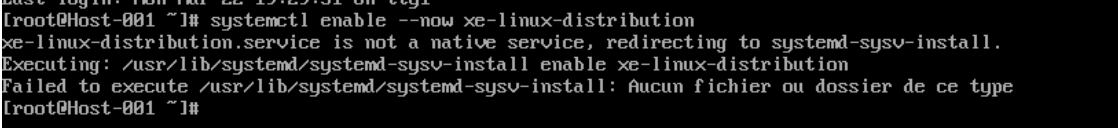

@stormi For now, only a sysvinit script is available right ? If needed for systemd, can we re-use the unit from the Fedora packagers ? Looks pretty simple :

[Unit]

Description=Linux Guest Agent

ConditionVirtualization=xen

[Service]

ExecStartPre=/usr/sbin/sysctl net.ipv4.conf.all.arp_notify=1

ExecStartPre=/usr/sbin/xe-linux-distribution /var/cache/xe-linux-distribution

ExecStart=/usr/sbin/xe-daemon

[Install]

WantedBy=multi-user.target

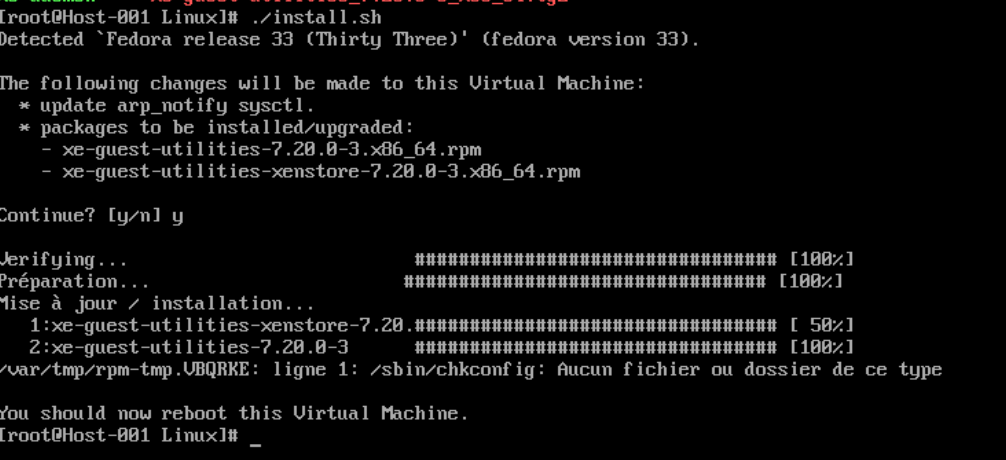

Tested on Fedora 33 and CentOS 8 Stream (can't test live migration in my homelab)

Fedora 33 : nope at all

It failed because of chkconfig requirements and the legacy init system unit => no longer supported in very recent versions of systemd (here 246 for Fedora). The official Fedora RPM, xe-guest-utilities-latest, doesn't have these issues.

CentOS 8 Stream : way better than Fedora

I didn't try the provider, but there is an option cloud_network_config, you should be able to pass network config as in Xen Orchestra UI using cloud-init.

Much, much cleaner and more readable, with a very professional look and feel. Great job guys

Yes, for this generation of CPU, you can't run an ESXi version newer than 6.5.

Far better readability, thanks @stormi

And now, CoreOS is EOL, so it won't help. Maybe something is doable with the guys behind Flatcar Linux, the fork of CoreOS, to help with support, who knows. If you want a GUI for your containers, consider Portainer. It's your best choice, and you will have way more features.

Why not use a systemd unit instead of forever?

Even with the 'xscontainer' package installed, CoreOS is moving very fast and the compatibility is not good atm. And Citrix doesn't seem to show much interest in this plugin.

Try disabling C-States, it could also improve a lot of operations, including storage.

I had an issue too yesterday with XO 5.51.1 when importing an exported VM (IMPORT_ERROR_EOF). Updating to XO 5.52 solved the problem.

Interesting news

But the improvements in export/import should be even better with zstd in XCP-ng:

The reduction in the time it takes to import or export a VM depends on the specific hardware of the machine. Reductions of 30% to 40% are common.

Wow, I didn't expect this kind of news so early after the launch of the project.

Congrats team, this is so well deserved