Latest posts made by klou

-

RE: Wasabi just wont work...

5.79.3 / 5.81.0

All of my backups work (now), and speeds range from 5 MB/s to 20 MB/s.

Most of my VMs are smaller (zstd helps a lot), though the largest archive is about 80 GB.

Also, all of my backups are sequential. I seem to remember a previous note where somebody had problems with concurrent uploads.

My XOCE VM runs with 12 GB, which was the amount that "just worked" while I was troubleshooting some of my earlier problems (and I haven't bothered to chase it down or fine-tune it further).

-

RE: backblaze b2 / amazon s3 as remote in xoa

Confirmed: bumping to 12 GB RAM allowed my "larger" VMs (60 GB+) to complete.

xo-server 5.73.0

xo-web 5.76.0

-

RE: S3 / Wasabi Backup

@nraynaud Sorry about dropping this for a while. If you want to pick it back up, let me know and I'll try to test changes as well.

-

RE: S3 / Wasabi Backup

If it helps, I'm reasonably certain that I was previously able to back up and upload similarly sized VMs without memory issues, before the 50 GB+ chunking changes.
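For context on why 50 GB is a threshold: Amazon S3 multipart uploads allow at most 10,000 parts per object, every part except the last must be at least 5 MiB, and no object may exceed 5 TiB. Since 10,000 × 5 MiB is about 48.8 GiB, anything past roughly 50 GB needs parts larger than 5 MiB, which is presumably what the chunking changes address (an assumption; I haven't checked XO's code). This minimal sketch computes the smallest allowed part size for a given object size. `min_part_size` is a hypothetical helper, and Wasabi is assumed to enforce the same limits as S3.

```python
MIB = 1024 ** 2
GIB = 1024 ** 3
TIB = 1024 ** 4

# Amazon S3 multipart upload limits (assumed to apply to Wasabi as well).
MIN_PART_SIZE = 5 * MIB    # every part except the last must be >= 5 MiB
MAX_PARTS = 10_000         # at most 10,000 parts per upload
MAX_OBJECT_SIZE = 5 * TIB  # largest object S3 accepts


def min_part_size(object_size: int) -> int:
    """Smallest part size (bytes) that fits object_size into MAX_PARTS parts."""
    if object_size > MAX_OBJECT_SIZE:
        raise ValueError("object exceeds the 5 TiB S3 object limit")
    # Integer ceiling division avoids float rounding on large sizes.
    needed = (object_size + MAX_PARTS - 1) // MAX_PARTS
    return max(MIN_PART_SIZE, needed)


if __name__ == "__main__":
    for size_gib in (10, 48, 50, 80):
        part = min_part_size(size_gib * GIB)
        print(f"{size_gib} GiB -> {part / MIB:.2f} MiB minimum part size")
```

This only gives the lower bound; an uploader is free to choose larger parts (up to 5 GiB each), and the larger the part it buffers, the more memory each in-flight part costs.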

-

RE: S3 / Wasabi Backup

Yes, Full Backup, target is S3, both with or without compression.

(Side note: I didn't realize that Delta Backups were possible with S3. This could save significant storage space. But I also assume that this is for *nix VMs only?)

-

RE: S3 / Wasabi Backup

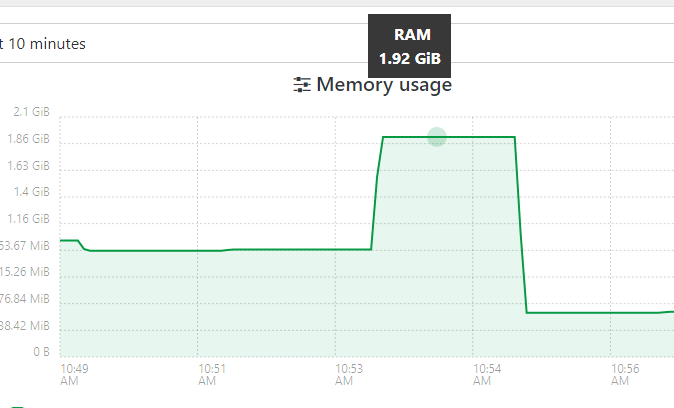

Just did: reduced it to 2 GB RAM. The pfSense backup was about the same, except that post-backup idle usage was around 900 MB.

The 2nd VM bombed out with an "Interrupted" transfer status (similar to a few posts above).

-

RE: S3 / Wasabi Backup

OK, downloaded and registered XOA, and bumped it to 6 GB just in case (VM memory only, not the Node process).

Updated to the XOA "latest" channel, 5.51.1 (5.68.0/5.72.0). (P.S. Thanks for the config backup/restore function. I needed to restart the VM or xo-server, but it retained my S3 remote settings.)

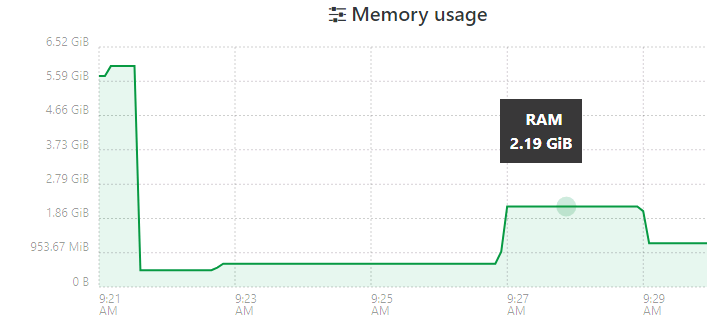

First is a pfSense VM, ~600 MB after zstd. The initial 5+ GB usage is from VM bootup.

Next is the same VM that I used for the previous tests, ~7 GB after zstd. The job started around 9:30, when the initial ramp-up occurred (during the snapshot and transfer start). Then usage jumped further to 5+ GB.

That's about as clear as I can get in the 10-minute window. It finished, dropped down to 3.5 GB, and then eventually went back to 1.4 GB.

-

RE: S3 / Wasabi Backup

@olivierlambert From sources. Latest pull was last Friday, so 5.68.0/5.72.0.

Memory usage is relatively stable around 1.4 GB (for most of this morning, with `--max-old-space-size=2560`), balloons during an S3 backup job, and then goes back down to 1.4 GB when the transfer is complete.

edit: The above was a backup without compression.

-

RE: S3 / Wasabi Backup

Again, thanks for the help with this.

Whether or not the node process is actually using that much (I've been reading that by default, it maxes around 1.4 GB, but I just increased that with

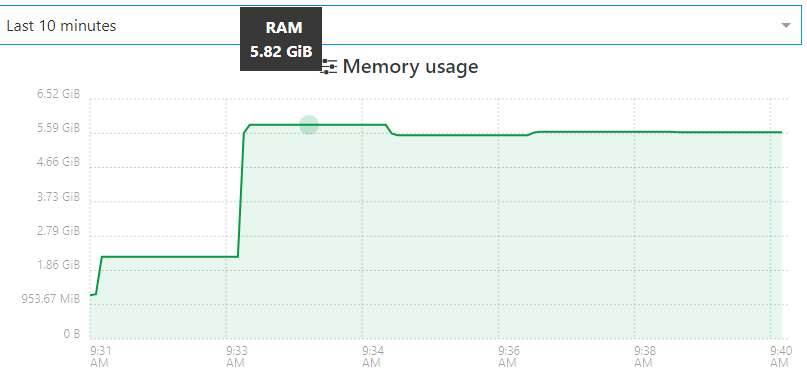

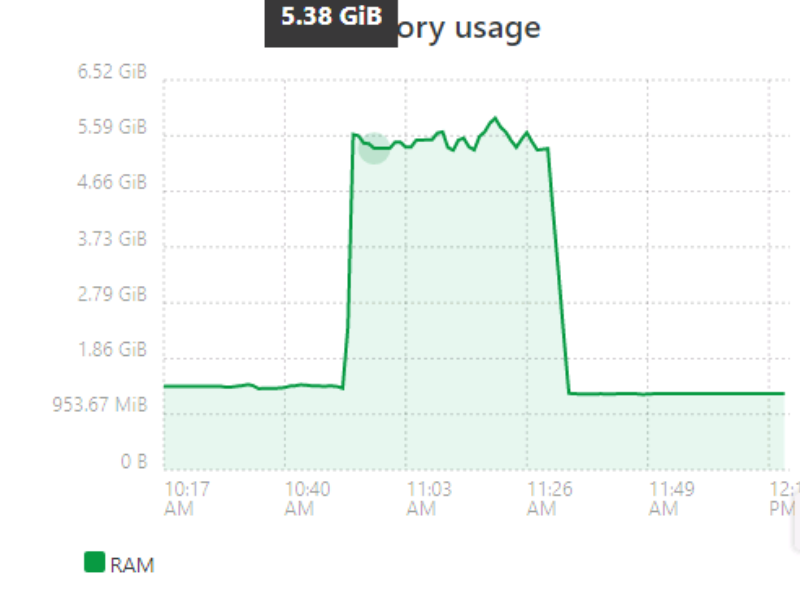

--max-old-space-size=2560), larger S3 backups/transfers are still only successful if I increase XO VM memory to > 5 GB.A recent backup run showed overall usage ballooned to 5.5+ GB during the backup, and then went back to ~1.4GB afterwards.

I don't know if this is intended behavior or something you want to fine-tune later, but leaving the VM at 6 GB works for me.

-

RE: S3 / Wasabi Backup

Thanks for the hint. The upload still eventually times out (after 12000 ms of inactivity somewhere), and I still get sporadic TimeOutErrors (see above) during the transfer.

However, the `xo-server` process no longer obviously crashes after I increased VM memory from 3 GB to 4 GB. I see that XOA defaults to 2 GB; what's recommended at this point (~30 VMs, 3 hosts)?

With both 3 GB and 4 GB, `top` shows the `node` process taking ~90% of memory during a transfer. I wonder if it's buffering the entire upload chunk in memory?

With that in mind, I increased to 5.5 GB, since the largest upload chunk should be 5 GB. The upload completed successfully, though memory usage stayed at ~90% throughout. This ended up being a 6 GB upload after zstd.
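On the buffering question: if each part is read fully into memory before it is sent, peak usage grows with the part size (times the number of parts in flight), so 5 GB parts would need roughly 5 GB on top of the process baseline. Here is a minimal sketch of that buffer-one-part-at-a-time pattern, assuming a sequential upload. It is not xo-server's code: `upload_in_parts` and the `upload_part(part_number, data)` callback are hypothetical stand-ins for whatever S3 client call is really used.

```python
import io
from typing import BinaryIO, Callable


def _read_part(source: BinaryIO, part_size: int) -> bytearray:
    """Read up to part_size bytes, looping because read() may return short."""
    buf = bytearray()
    while len(buf) < part_size:
        chunk = source.read(part_size - len(buf))
        if not chunk:  # end of stream
            break
        buf += chunk
    return buf


def upload_in_parts(
    source: BinaryIO,
    part_size: int,
    upload_part: Callable[[int, bytearray], None],  # hypothetical uploader
) -> int:
    """Send source sequentially, holding at most one part in memory at a time.

    Peak buffer usage is about part_size bytes, independent of the total
    stream length. Returns the number of parts sent.
    """
    part_number = 0
    while True:
        data = _read_part(source, part_size)
        if not data:
            break
        part_number += 1  # S3 part numbers start at 1
        upload_part(part_number, data)
    return part_number


if __name__ == "__main__":
    # Demo: a 25-byte in-memory "file" split into 10-byte parts.
    sent = []
    count = upload_in_parts(io.BytesIO(b"x" * 25), 10,
                            lambda n, part: sent.append((n, len(part))))
    print(count, sent)  # 3 [(1, 10), (2, 10), (3, 5)]
```

The trade-off is that a bigger part size means fewer requests but a larger buffer per part; streaming each part straight from the source instead of buffering it would keep memory well below the part size.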