I believe this issue was resolved when the health check system was changed to detect network connectivity at startup, so it did not need to wait for the entire VM to boot. This needs the Xen tools to be installed. I have not had an issue since this change.

Best posts made by McHenry

-

RE: Backup Issue: "timeout reached while waiting for OpaqueRef"

-

RE: Large incremental backups

The server had high memory usage so I expect lots of paging, which could explain the block writes. I've increased the mem and want to see what difference that makes.

-

RE: Job canceled to protect the VDI chain

Host started and issue resolved.

-

RE: Disaster Recovery hardware compatibility

Results are in...

Four VMs migrated. Three used warm migration and all worked. The fourth used straight migration and hit a BSOD, but worked after a reboot.

-

RE: ZFS for a backup server

Thanks Oliver. We have used GFS with Veeam previously and it will be a great addition.

-

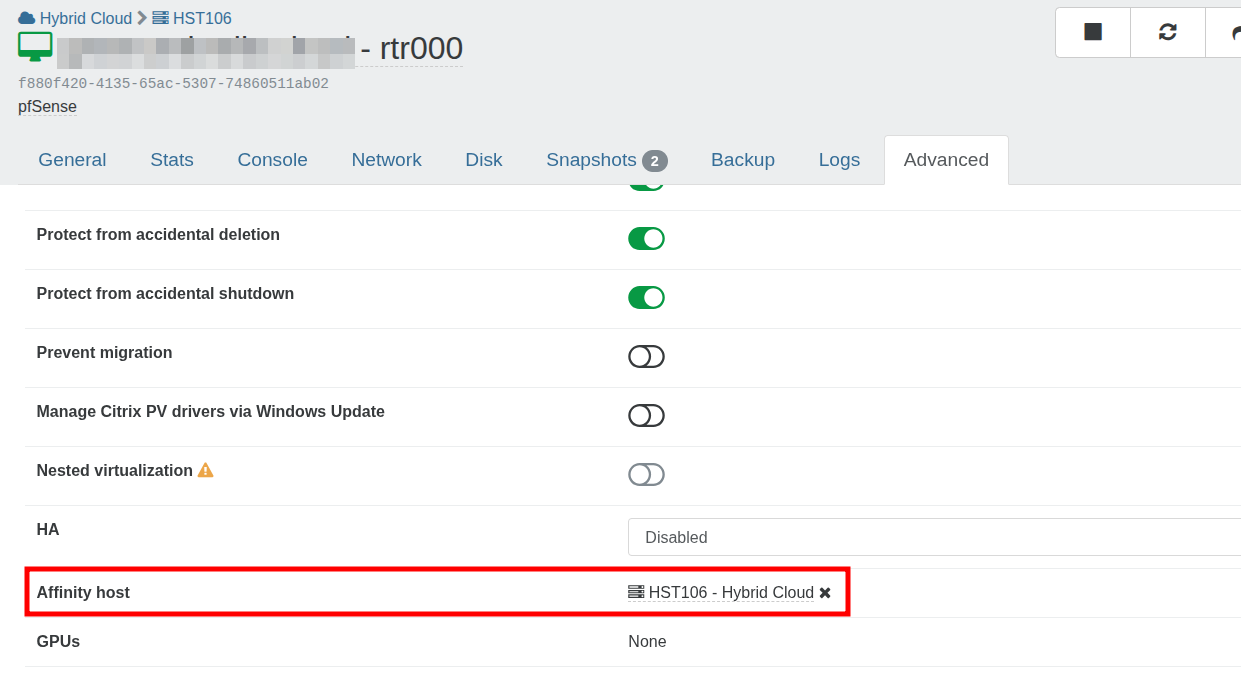

RE: VM association with shared storage

Perfect thanks. The issue is we have an IP address locked to that host so the router needs to live there. The host affinity looks like the correct solution.

Does host affinity also prevent the VM being migrated manually?

-

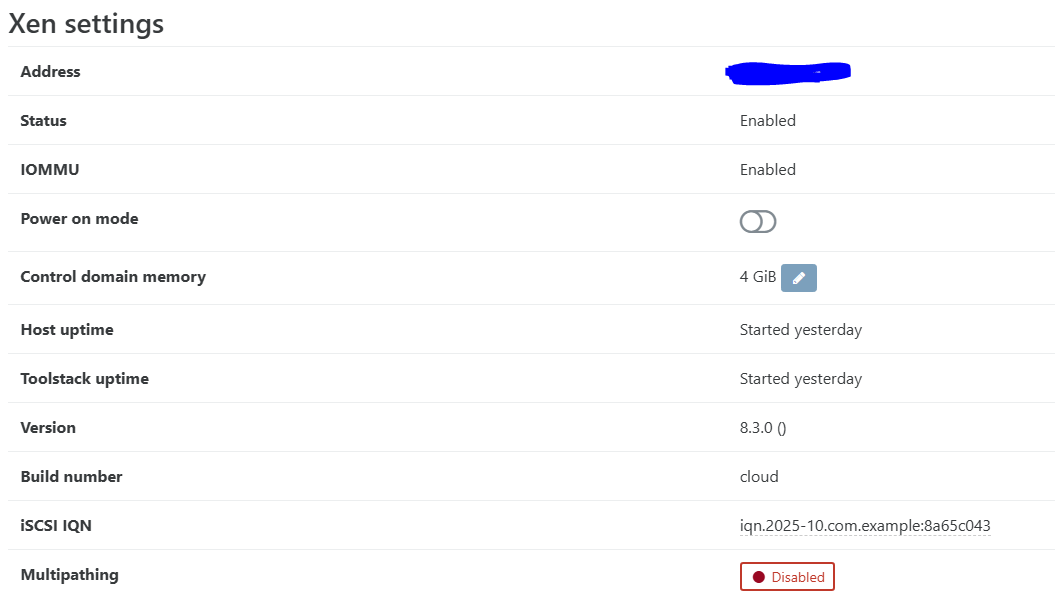

RE: Alarms in XO

This host does not run any VMs; it is just used for CR.

I've increased the dom0 ram to 4GB with no more alarms.

-

RE: Windows11 VMs failing to boot

Thank you so much. If you want me I'll be at the pub.

-

RE: Zabbix on xcp-ng

We have successfully installed using:

rpm -Uvh https://repo.zabbix.com/zabbix/7.0/rhel/7/x86_64/zabbix-release-latest.el7.noarch.rpm

yum install zabbix-agent2 zabbix-agent2-plugin-* --enablerepo=base,updates

-

RE: Migrating a single host to an existing pool

Worked perfectly. Thanks guys.

Latest posts made by McHenry

-

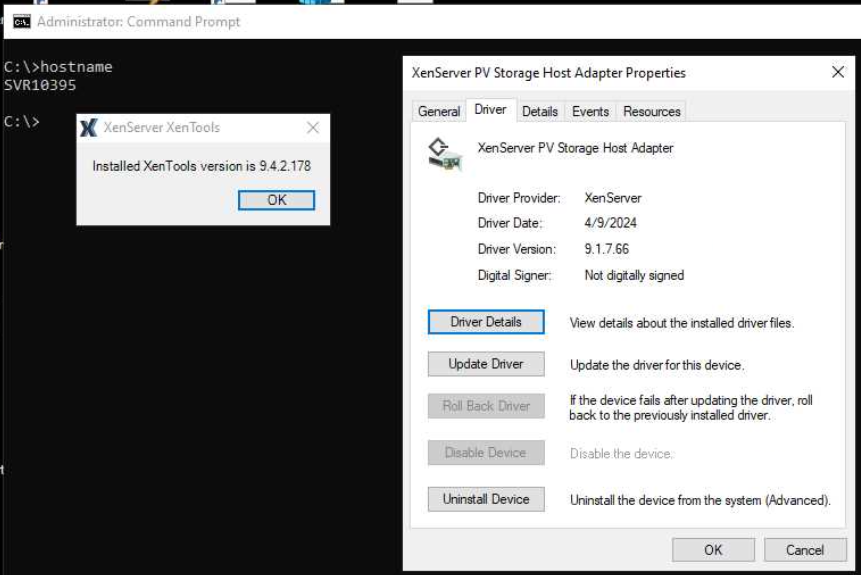

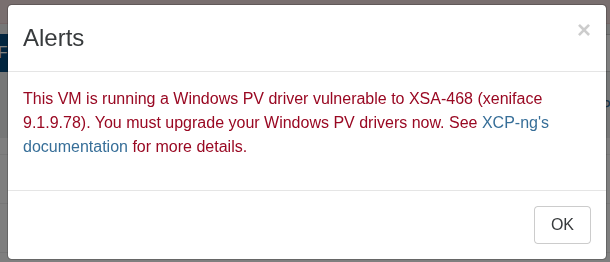

IMPORTANT! Some of your VMs are vulnerable.

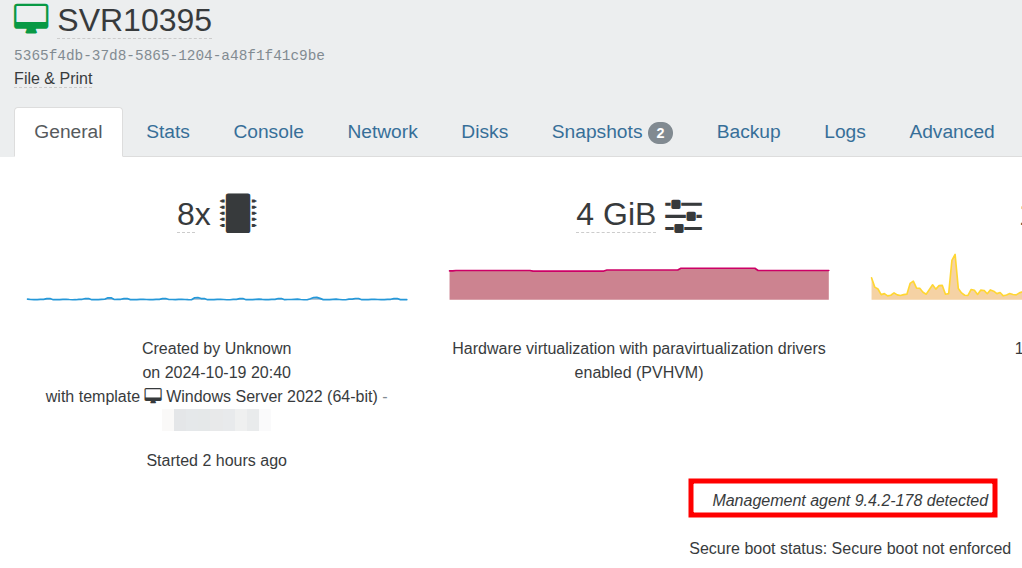

I am seeing this error on all VMs on a host.

https://docs.xcp-ng.org/vms/#xsa-468-multiple-windows-pv-driver-vulnerabilities

I have checked the Xen Tools and the latest version is installed.

-

RE: Site outage. pfSense VM offline after pool master reboot

Agreed. However, as our xcp-ng hosts are in the cloud with OVH Cloud, we cannot use a physical appliance. We are currently setting up a dedicated OVH server for pfSense.

-

RE: Site outage. pfSense VM offline after pool master reboot

I am very concerned that the pfSense VM went offline yesterday when the pool master was shut down, even though the pfSense VM was running on the slave.

If I can get an understanding of how this happened I can better mitigate it from happening again.

I have just reread this to ensure I understand the master/slave relationship:

https://xcp-ng.org/forum/topic/6986/pool-master-down-restart-makes-the-whole-pool-invisible-to-xo-till-master-is-online-again/9?_=1764739019505

Can anyone suggest a reason as to why the pfSense VM went offline when the master was down?

As the router role is crucial and there are a number of moving parts to ensure it works as a VM I am seriously considering running it as a standalone server.

-

RE: Site outage. pfSense VM offline after pool master reboot

This problem has since been resolved.

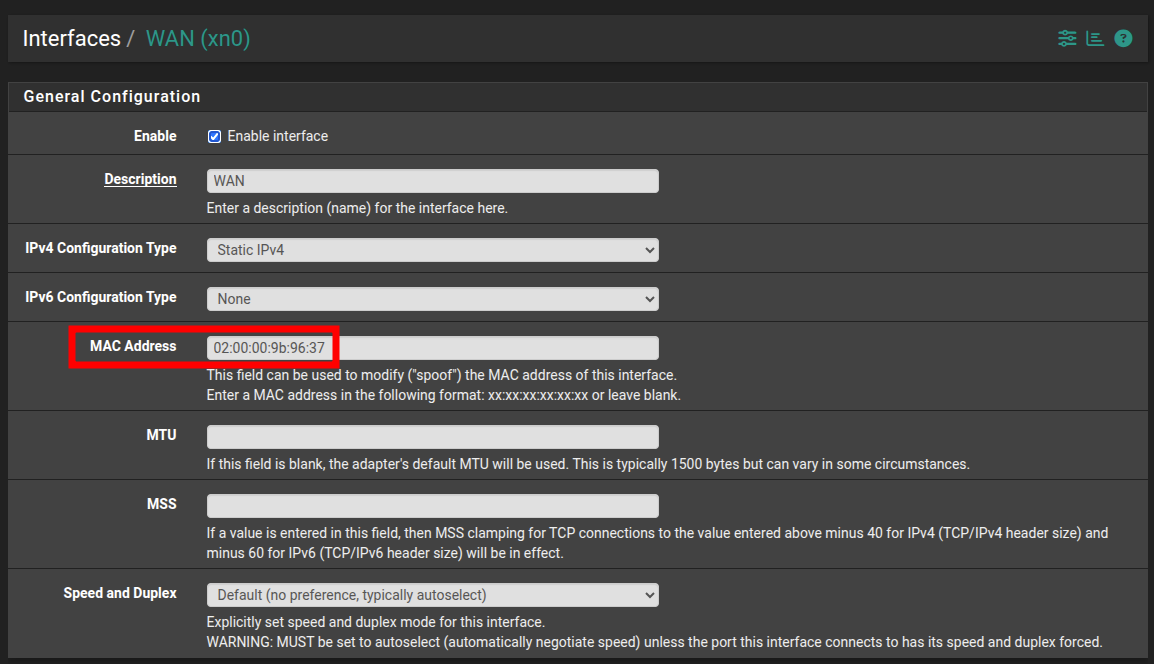

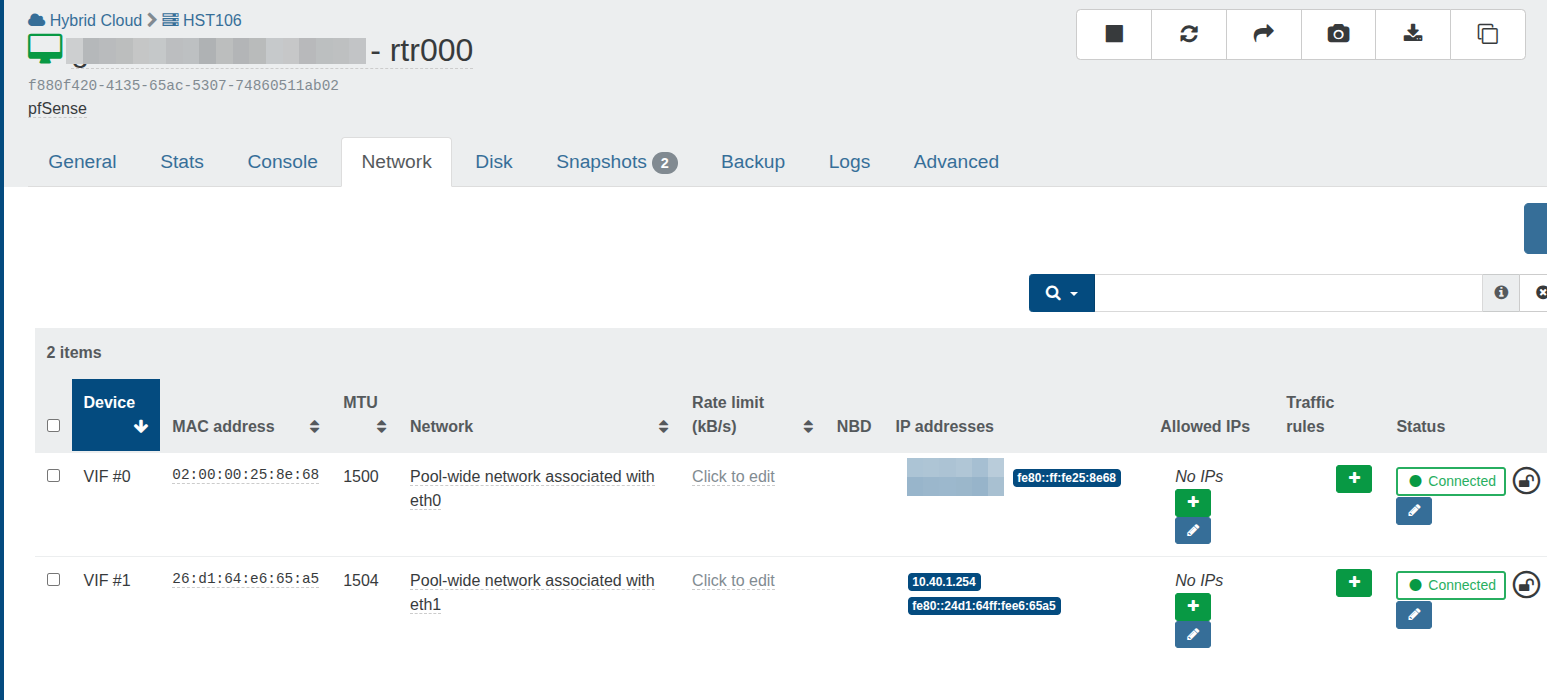

The issue was related to running pfSense as a VM when using an additional IP from OVH Cloud.

When using an OVH additional IP on pfSense the MAC address of the additional IP needs to be entered in the WAN configuration.

When we restored this pfSense VM from CR and then migrated it to our production host the MAC address had been changed in XO. Once the correct MAC address was reentered into pfSense everything worked again.
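For anyone wanting to check for this after a CR restore or a migration, the comparison can be sketched roughly as below. This is only a minimal sketch: the function names and the MAC values are made up for illustration, and looking up the MAC that OVH assigned to the additional IP and the MAC currently set on the VM is left to you.

```python
import re


def normalize_mac(mac: str) -> str:
    """Return a MAC address as lowercase, colon-separated hex pairs."""
    # Drop the usual separators (colon, dash, dot) before validating.
    digits = re.sub(r"[:\-.]", "", mac.strip())
    if not re.fullmatch(r"[0-9A-Fa-f]{12}", digits):
        raise ValueError(f"not a valid MAC address: {mac!r}")
    digits = digits.lower()
    return ":".join(digits[i:i + 2] for i in range(0, 12, 2))


def mac_matches(expected: str, actual: str) -> bool:
    """True if both strings describe the same MAC, whatever the formatting."""
    return normalize_mac(expected) == normalize_mac(actual)


if __name__ == "__main__":
    # Hypothetical values: 'expected' is the MAC tied to the additional IP,
    # 'actual' is the MAC found on the VM after the restore.
    expected = "02:00:00:AA:BB:CC"
    actual = "02-00-00-aa-bb-cd"
    if not mac_matches(expected, actual):
        print("MAC mismatch: the WAN side will not pass traffic until this is fixed")
```

A mismatch here would point at the same cause described above, namely the MAC having changed during the restore.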

This is a real gotcha when running pfSense as a VM using CR on a host that is not part of the main pool.

-

RE: Site outage. pfSense VM offline after pool master reboot

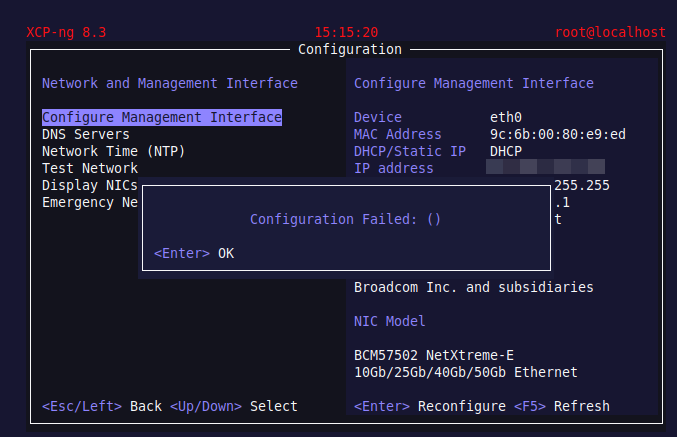

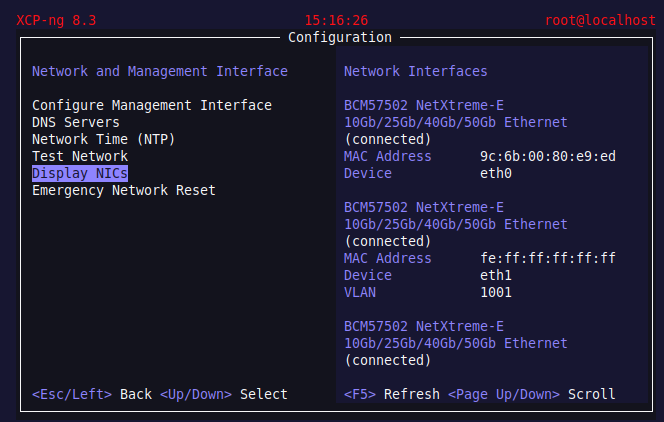

When I try to manually configure the management interface as eth0 I get this:

The NIC appears to be there and connected:

-

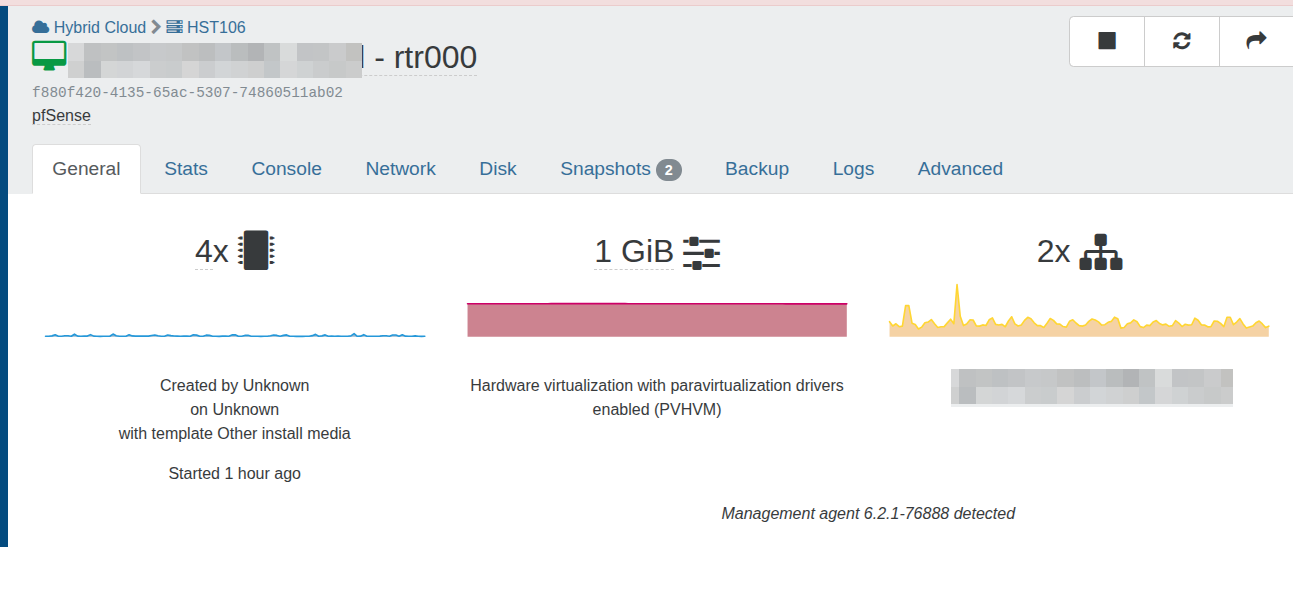

Site outage. pfSense VM offline after pool master reboot

We downed our pool master (no VMs) for testing and the router VM (pfSense) lost internet connectivity.

When we restarted the master, the router VM still had no internet connectivity.

All VMs, including the router, are online in XO.

From the pfSense console I can ping VMs on the LAN, however there is no internet connectivity.

Everything looks fine so I am at a loss as to what the issue is.

-

RE: All VMs down

What a day...

Have two hosts and needed a RAM upg so scheduled these with OVH, one for 4pm and the other for 8pm. Plan was to migrate the VMs off the host being upgraded to maintain uptime.

Got an email from OVH stating that due to parts availability the upg will not proceed and then they downed both hosts at 8pm to upg at the same time and that caused havoc today.