Thanks Marc


-

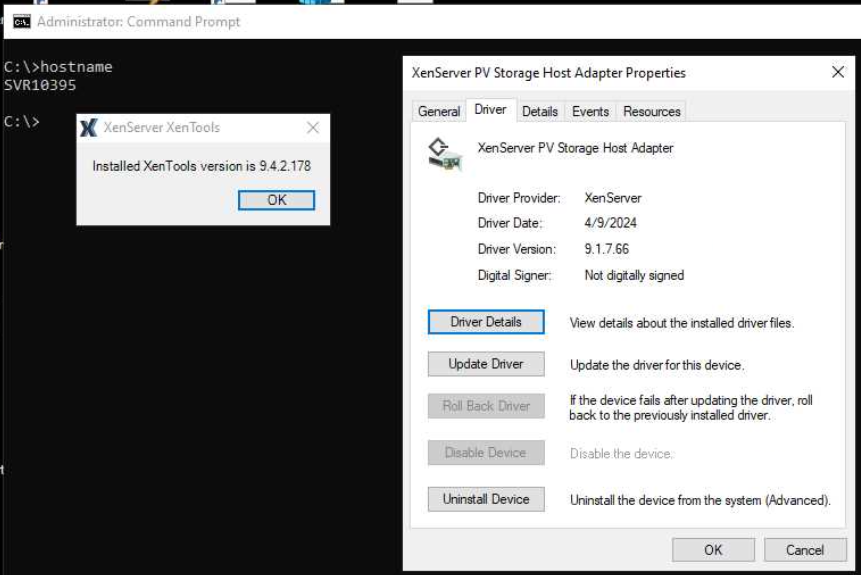

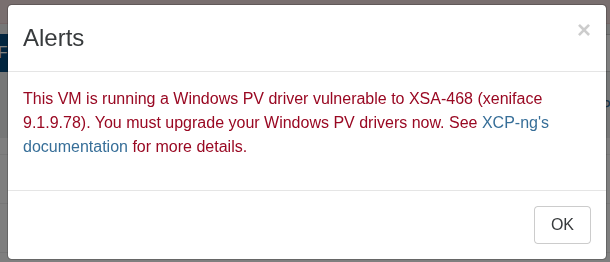

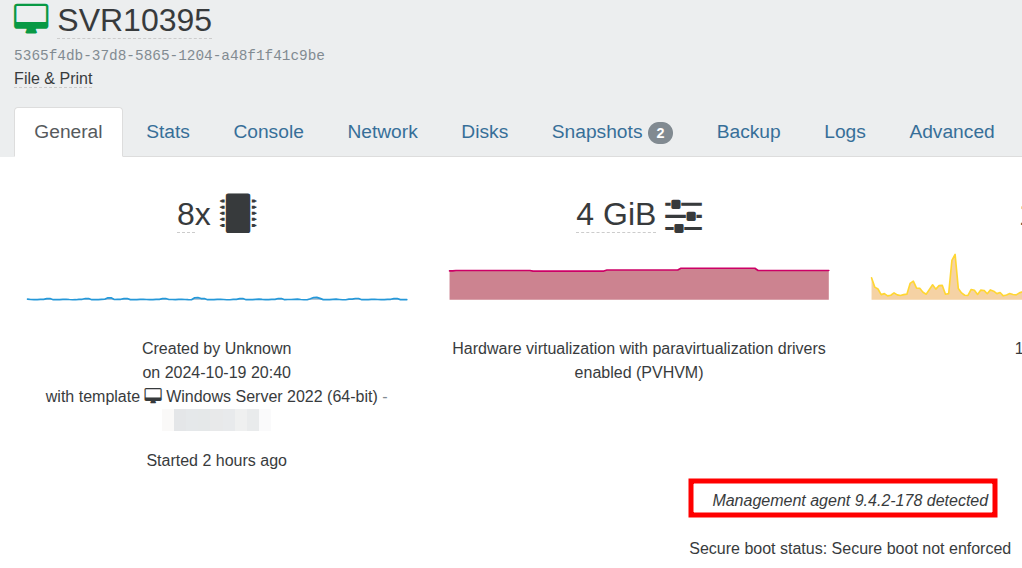

IMPORTANT! Some of your VMs are vulnerable.

I am seeing this error on all VMs on a host.

https://docs.xcp-ng.org/vms/#xsa-468-multiple-windows-pv-driver-vulnerabilities

I have checked the Xen Tools and the latest version is installed.

-

RE: Site outage. pfSense VM offline after pool master reboot

Agreed. However, as our XCP-ng hosts are in the cloud with OVH Cloud, we cannot use a physical appliance. We are currently setting up a dedicated OVH server for pfSense.

-

RE: Site outage. pfSense VM offline after pool master reboot

I am very concerned that the pfSense VM went offline yesterday when the pool master was shut down, even though the pfSense VM was running on the slave.

If I can understand how this happened, I can better prevent it from happening again.

I have just reread this to ensure I understand the master/slave relationship:

https://xcp-ng.org/forum/topic/6986/pool-master-down-restart-makes-the-whole-pool-invisible-to-xo-till-master-is-online-again/9?_=1764739019505

Can anyone suggest a reason why the pfSense VM went offline when the master was down?

As the router role is crucial and there are a number of moving parts to make it work as a VM, I am seriously considering running it as a standalone server.

-

RE: Site outage. pfSense VM offline after pool master reboot

This problem has since been resolved.

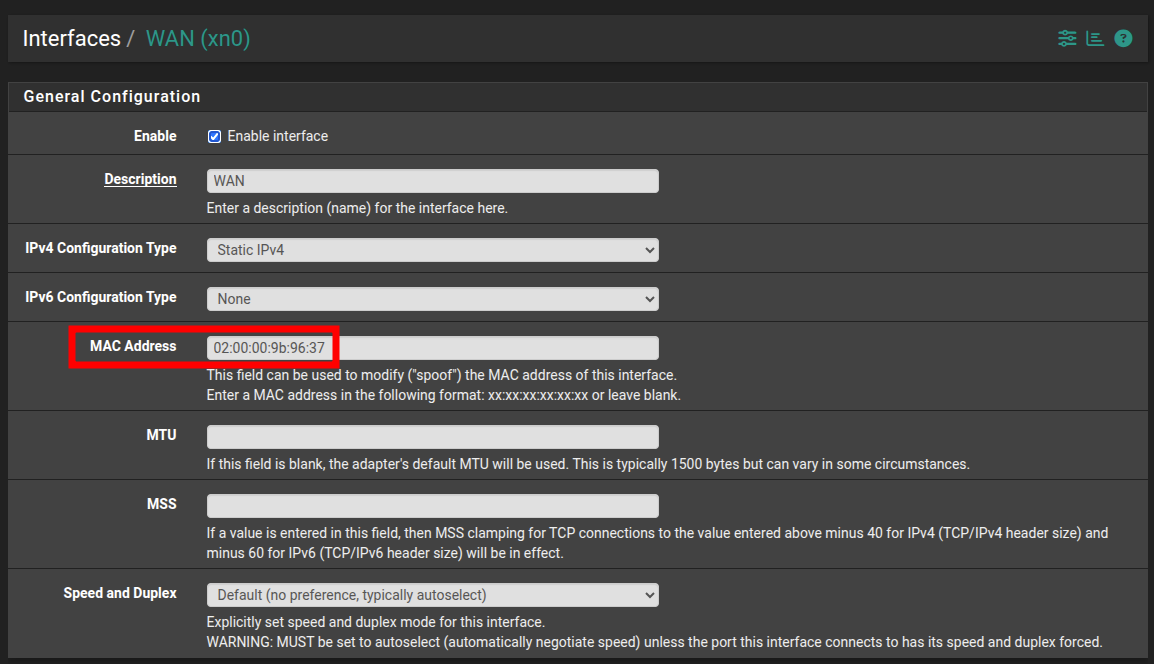

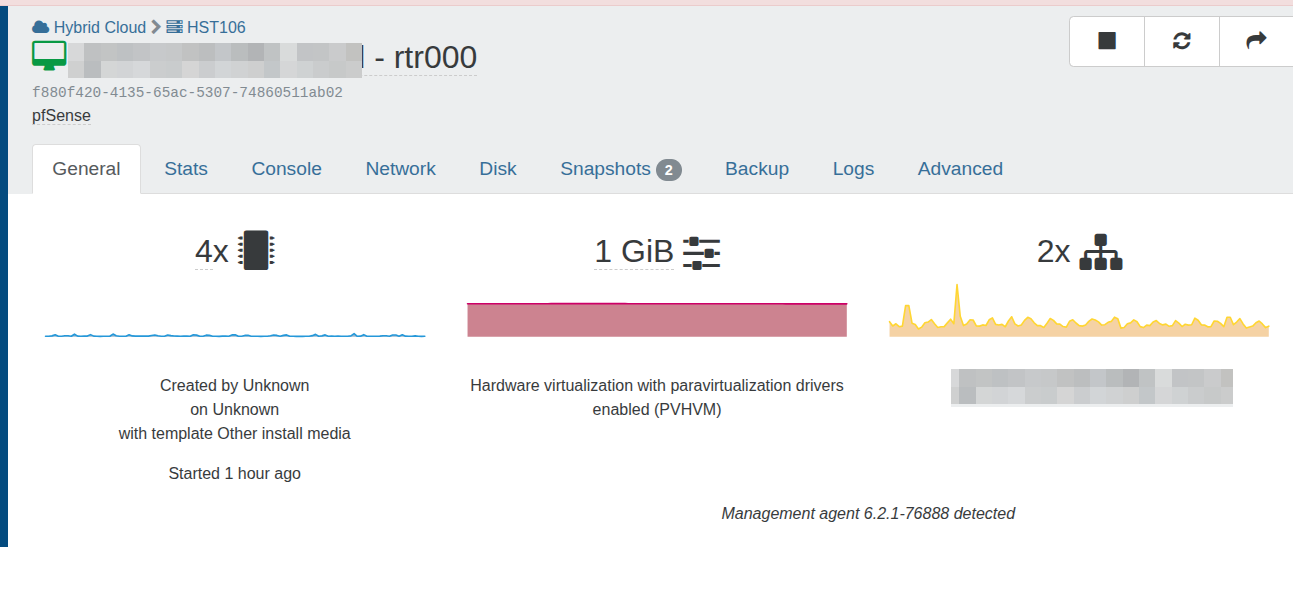

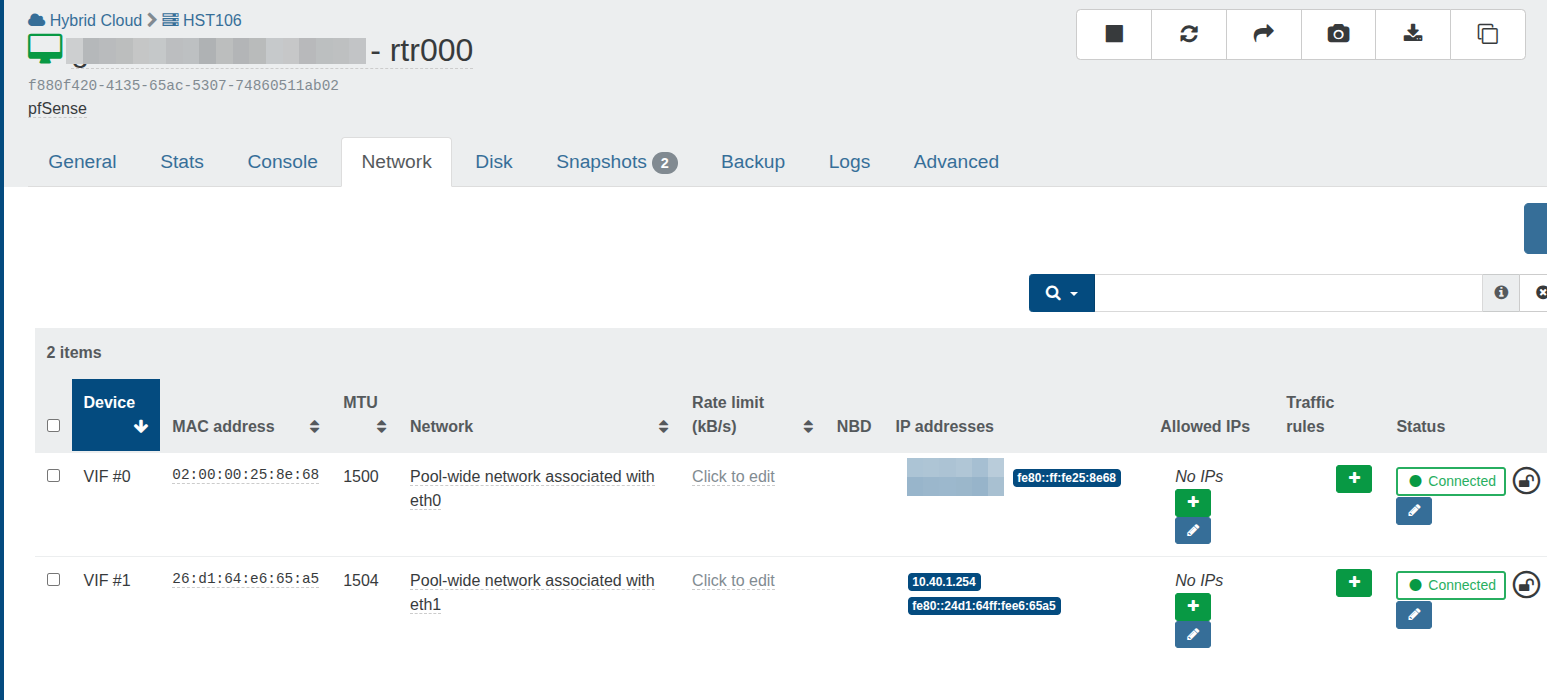

The issue was related to running pfSense as a VM when using an additional IP from OVH Cloud.

When using an OVH additional IP on pfSense, the MAC address of the additional IP needs to be entered in the WAN configuration.

When we restored this pfSense VM from CR (Continuous Replication) and then migrated it to our production host, the MAC address had been changed in XO. Once the correct MAC address was re-entered into pfSense, everything worked again.
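Because the failure came down to the restored VM no longer presenting the MAC address that OVH expects for the additional IP, a quick comparison before putting a restored CR copy into service could catch it. The Python sketch below is hypothetical: it assumes you copy the MAC that OVH assigned to the additional IP and the MAC the VM is actually using (from XO or the pfSense WAN settings) by hand, and it only normalizes and compares the two. The function names and example addresses are illustrative, not part of any OVH, XO or pfSense API.

```python
import re


def normalize_mac(mac: str) -> str:
    """Return a 48-bit MAC address as lowercase, colon-separated hex pairs.

    Accepts colon, hyphen or Cisco-style dot notation.
    """
    digits = re.sub(r"[:\-.]", "", mac.strip()).lower()
    if not re.fullmatch(r"[0-9a-f]{12}", digits):
        raise ValueError(f"not a 48-bit MAC address: {mac!r}")
    return ":".join(digits[i:i + 2] for i in range(0, 12, 2))


def wan_mac_matches(ovh_mac: str, vm_mac: str) -> bool:
    """True if the MAC OVH assigned to the additional IP equals the VM's MAC.

    Both values are assumed to be copied manually (hypothetical workflow).
    """
    return normalize_mac(ovh_mac) == normalize_mac(vm_mac)


if __name__ == "__main__":
    # Placeholder addresses for illustration only.
    print(wan_mac_matches("02:00:00:AB:CD:EF", "02-00-00-ab-cd-ef"))
```

Normalizing first means the same address copied in different notations still compares equal, so a mismatch reported here points to a genuinely different MAC, as happened after the CR restore.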

This is a real gotcha when running pfSense as a VM using CR on a host that is not part of the main pool.

-

RE: Site outage. pfSense VM offline after pool master reboot

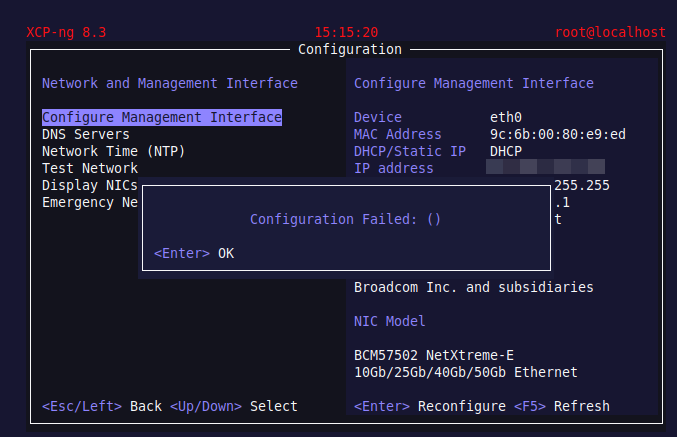

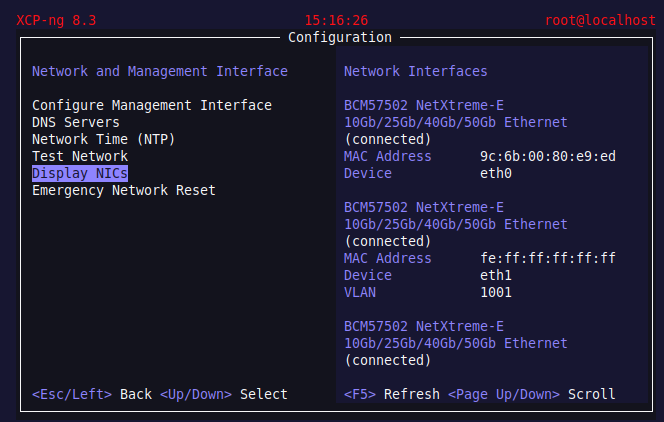

When I try to manually configure the management interface as eth0, I get this:

The NIC appears to be there and connected:

-

Site outage. pfSense VM offline after pool master reboot

We shut down our pool master (which runs no VMs) for testing, and the router VM (pfSense) lost internet connectivity.

After we restarted the master, the router VM still had no internet connectivity.

All VMs, including the router, are online in XO.

From the pfSense console I can ping VMs on the LAN; however, there is no internet connectivity.

Everything looks fine, so I am at a loss as to what the issue is.

-

RE: All VMs down

What a day...

We have two hosts that needed a RAM upgrade, so we scheduled the upgrades with OVH, one for 4pm and the other for 8pm. The plan was to migrate the VMs off each host before it was upgraded to maintain uptime.

We then got an email from OVH stating that, due to parts availability, the upgrade would not proceed. They then took both hosts down at 8pm to upgrade them at the same time, which caused havoc today.

-

All VMs down

I have an issue with VMs that appear to be locked: they show as running but are not actually running.

I am unable to stop these VMs. I have tried shutting down the host HST106.

The VMs appear to be running on HST106; however, HST106 is now powered off.

I need to start these VMs on HST107 instead.

-

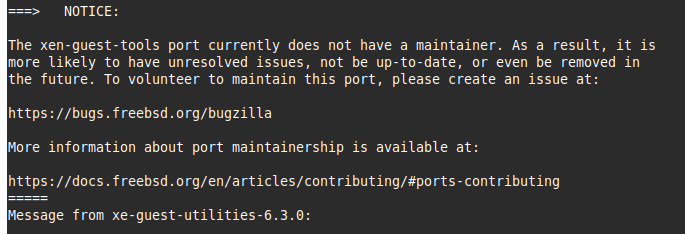

pfSense Guest Tools

I have been using pfSense with xcp-ng for a while now without installing the guest tools.

Due to some networking complications, I have decided to install the guest tools to rule them out as the cause.

Q1) Are the guest tools required on pfSense and what do they do?

Q2) Are these tools being maintained?

-

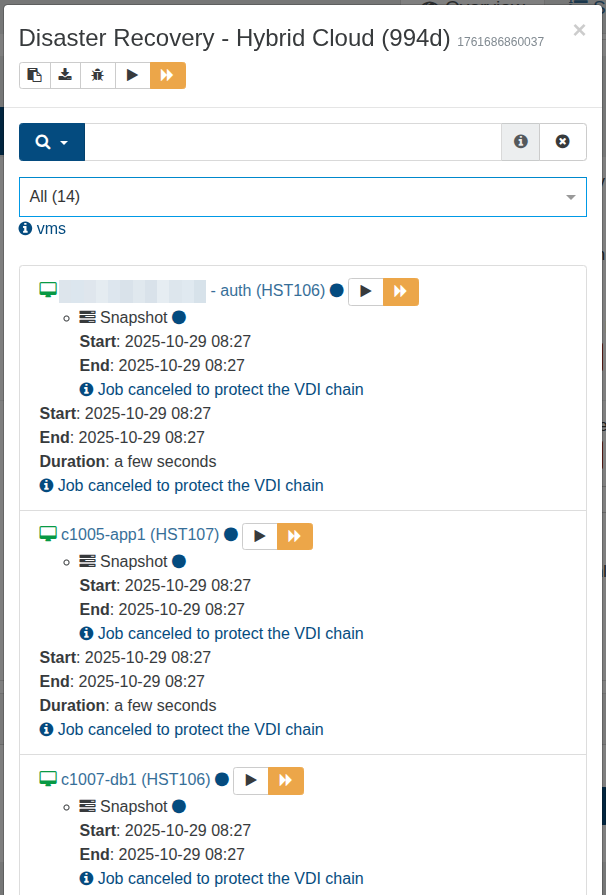

RE: Job canceled to protect the VDI chain

Host started and issue resolved.

-

RE: Job canceled to protect the VDI chain

Appears to be the same as:

https://xcp-ng.org/forum/topic/1751/smgc-stuck-with-xcp-ng-8-0?_=1761802212787

It appears this snapshot is locked by a slave host that is currently offline.

Oct 30 08:30:09 HST106 SMGC: [1866514] Checking with slave: ('OpaqueRef:16797af5-c5d1-08d5-0e26-e17149c2807b', 'nfs-on-slave', 'check'

When using shared storage, how does a snapshot become locked by a host?

In a scenario where a slave host is offline, how can this lock be cleared?

-

RE: Job canceled to protect the VDI chain

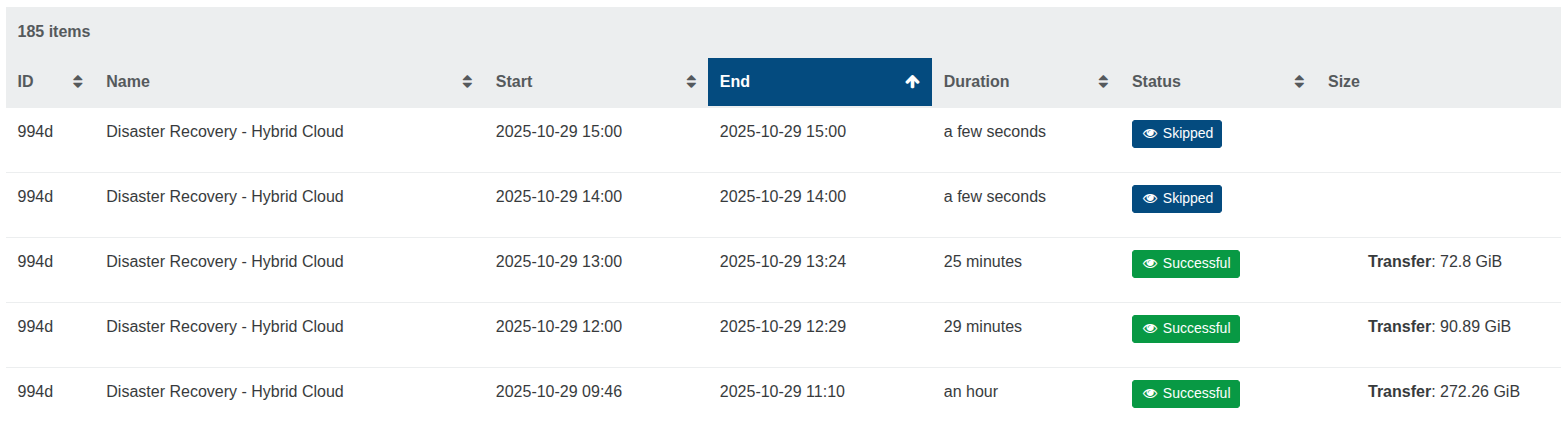

As per yesterday, the backups are still being "Skipped". Checking the logs I see the following message being repeated:

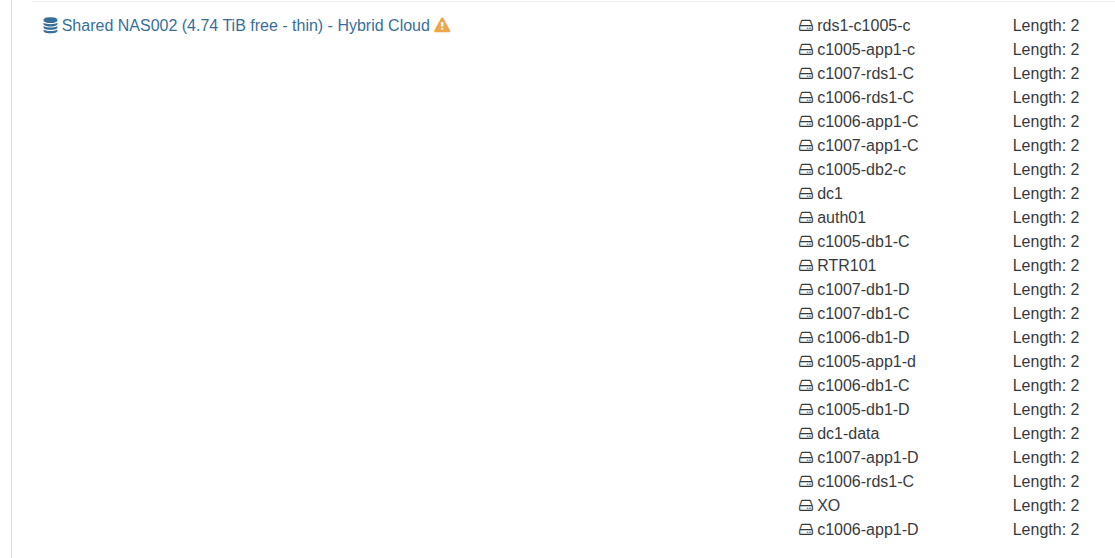

Oct 30 08:30:09 HST106 SMGC: [1866514] Found 1 orphaned vdis
Oct 30 08:30:09 HST106 SM: [1866514] lock: tried lock /var/lock/sm/be743b1c-7803-1943-0a70-baf5fcbfeaaf/sr, acquired: True (exists: True)
Oct 30 08:30:09 HST106 SMGC: [1866514] Found 1 VDIs for deletion:
Oct 30 08:30:09 HST106 SMGC: [1866514] *d4a17b38(100.000G/21.652G?)
Oct 30 08:30:09 HST106 SMGC: [1866514] Deleting unlinked VDI *d4a17b38(100.000G/21.652G?)
Oct 30 08:30:09 HST106 SMGC: [1866514] Checking with slave: ('OpaqueRef:16797af5-c5d1-08d5-0e26-e17149c2807b', 'nfs-on-slave', 'check', {'path': '/var/run/sr-mount/be743b1c-7803-1943-0a70-baf5fcbfeaaf/d4a17b38-5a3c-438a-b394-fcbb64784499.vhd'})
Oct 30 08:30:09 HST106 SM: [1866514] lock: released /var/lock/sm/be743b1c-7803-1943-0a70-baf5fcbfeaaf/sr
Oct 30 08:30:09 HST106 SM: [1866514] lock: released /var/lock/sm/be743b1c-7803-1943-0a70-baf5fcbfeaaf/running
Oct 30 08:30:09 HST106 SMGC: [1866514] GC process exiting, no work left
Oct 30 08:30:09 HST106 SM: [1866514] lock: released /var/lock/sm/be743b1c-7803-1943-0a70-baf5fcbfeaaf/gc_active
Oct 30 08:30:09 HST106 SMGC: [1866514] In cleanup
Oct 30 08:30:09 HST106 SMGC: [1866514] SR be74 ('Shared NAS002') (166 VDIs in 27 VHD trees): no changes
Oct 30 08:30:09 HST106 SM: [1866514] lock: closed /var/lock/sm/be743b1c-7803-1943-0a70-baf5fcbfeaaf/running
Oct 30 08:30:09 HST106 SM: [1866514] lock: closed /var/lock/sm/be743b1c-7803-1943-0a70-baf5fcbfeaaf/gc_active
Oct 30 08:30:09 HST106 SM: [1866514] lock: closed /var/lock/sm/be743b1c-7803-1943-0a70-baf5fcbfeaaf/sr

It appears the unlinked VDI is never deleted. Could this be blocking, and should it be deleted manually?

Deleting unlinked VDI *d4a17b38(100.000G/21.652G?)

Regarding the following line, I can identify the VM UUID; however, is the second UUID a snapshot? (d4a17b38-5a3c-438a-b394-fcbb64784499.vhd)

Oct 30 08:30:09 HST106 SMGC: [1866514] Checking with slave: ('OpaqueRef:16797af5-c5d1-08d5-0e26-e17149c2807b', 'nfs-on-slave', 'check', {'path': '/var/run/sr-mount/be743b1c-7803-1943-0a70-baf5fcbfeaaf/d4a17b38-5a3c-438a-b394-fcbb64784499.vhd'})

-
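On the UUID question in the post above: the path in the `Checking with slave` entries appears to follow the file-based SR layout `/var/run/sr-mount/<SR UUID>/<VDI UUID>.vhd`, and the log's own `SR be74 ('Shared NAS002')` line suggests `be743b1c-7803-1943-0a70-baf5fcbfeaaf` is the SR rather than a VM. The file name `d4a17b38-5a3c-438a-b394-fcbb64784499` matches the unlinked VDI `*d4a17b38` that the GC is trying to delete; whether that VDI was a snapshot is not visible from these entries. A minimal Python sketch for pulling those UUID pairs out of a saved copy of the storage manager log (on XCP-ng usually `/var/log/SMlog`) and counting how often each is checked, assuming the entry format shown above; `unlinked_vdi_checks` is a hypothetical helper name.

```python
import re
from collections import Counter

# Canonical lowercase UUID, e.g. be743b1c-7803-1943-0a70-baf5fcbfeaaf.
UUID = r"[0-9a-f]{8}(?:-[0-9a-f]{4}){3}-[0-9a-f]{12}"

# Assumes the entry format seen in the SMGC log above:
#   Checking with slave: (..., {'path': '/var/run/sr-mount/<SR>/<VDI>.vhd'})
CHECK_RE = re.compile(
    r"Checking with slave: .*?'path': "
    rf"'/var/run/sr-mount/(?P<sr>{UUID})/(?P<vdi>{UUID})\.vhd'"
)


def unlinked_vdi_checks(log_text: str) -> Counter:
    """Count 'Checking with slave' entries per (SR UUID, VDI UUID) pair."""
    return Counter(
        (m.group("sr"), m.group("vdi")) for m in CHECK_RE.finditer(log_text)
    )


if __name__ == "__main__":
    sample = (
        "Oct 30 08:30:09 HST106 SMGC: [1866514] Checking with slave: "
        "('OpaqueRef:16797af5-c5d1-08d5-0e26-e17149c2807b', 'nfs-on-slave', 'check', "
        "{'path': '/var/run/sr-mount/be743b1c-7803-1943-0a70-baf5fcbfeaaf/"
        "d4a17b38-5a3c-438a-b394-fcbb64784499.vhd'})"
    )
    for (sr, vdi), n in unlinked_vdi_checks(sample).items():
        print(f"SR {sr}  VDI {vdi}  seen {n}x")
```

Run against the full log text, a count that keeps climbing for the same pair across GC runs would match the "over and over" pattern in these entries, i.e. the GC retrying the same deletion without completing it.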

RE: Job canceled to protect the VDI chain

I have the following entry in the logs, over and over. Not sure if this is a problem:

Oct 29 15:25:08 HST106 SMGC: [1009624] Found 1 orphaned vdis
Oct 29 15:25:08 HST106 SM: [1009624] lock: tried lock /var/lock/sm/be743b1c-7803-1943-0a70-baf5fcbfeaaf/sr, acquired: True (exists: True)
Oct 29 15:25:08 HST106 SMGC: [1009624] Found 1 VDIs for deletion:
Oct 29 15:25:08 HST106 SMGC: [1009624] *d4a17b38(100.000G/21.652G?)
Oct 29 15:25:08 HST106 SMGC: [1009624] Deleting unlinked VDI *d4a17b38(100.000G/21.652G?)
Oct 29 15:25:08 HST106 SMGC: [1009624] Checking with slave: ('OpaqueRef:16797af5-c5d1-08d5-0e26-e17149c2807b', 'nfs-on-slave', 'check', {'path': '/var/run/sr-mount/be743b1c-7803-1943-0a70-baf5fcbfeaaf/d4a17b38-5a3c-438a-b394-fcbb64784499.vhd'})
Oct 29 15:25:08 HST106 SM: [1009624] lock: released /var/lock/sm/be743b1c-7803-1943-0a70-baf5fcbfeaaf/sr
Oct 29 15:25:08 HST106 SM: [1009624] lock: released /var/lock/sm/be743b1c-7803-1943-0a70-baf5fcbfeaaf/running
Oct 29 15:25:08 HST106 SMGC: [1009624] GC process exiting, no work left
Oct 29 15:25:08 HST106 SM: [1009624] lock: released /var/lock/sm/be743b1c-7803-1943-0a70-baf5fcbfeaaf/gc_active
Oct 29 15:25:08 HST106 SMGC: [1009624] In cleanup
Oct 29 15:25:08 HST106 SMGC: [1009624] SR be74 ('Shared NAS002') (166 VDIs in 27 VHD trees): no changes
Oct 29 15:25:08 HST106 SM: [1009624] lock: closed /var/lock/sm/be743b1c-7803-1943-0a70-baf5fcbfeaaf/running
Oct 29 15:25:08 HST106 SM: [1009624] lock: closed /var/lock/sm/be743b1c-7803-1943-0a70-baf5fcbfeaaf/gc_active
Oct 29 15:25:08 HST106 SM: [1009624] lock: closed /var/lock/sm/be743b1c-7803-1943-0a70-baf5fcbfeaaf/sr

-

RE: Job canceled to protect the VDI chain

I spoke too soon. The backups started working; however, the problem has returned.

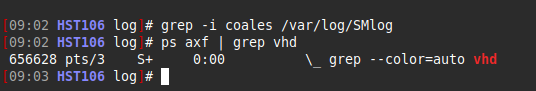

I do see 44 items waiting to coalesce. This is new; previously these would coalesce faster without causing this issue.

Is there a reason the coalesce is taking longer now or is there a way I can add resources to speed up the process?

-

RE: Job canceled to protect the VDI chain

Is it XO or XCP-ng that manages the coalescing? Can more resources be applied to speed it up?

-

RE: Job canceled to protect the VDI chain

I think you are correct. When I checked the Health view, it showed 46 to coalesce, and then the number started dropping to zero. Now the backups appear to be running again.

I have never seen this before and I am curious as to why it appeared yesterday.

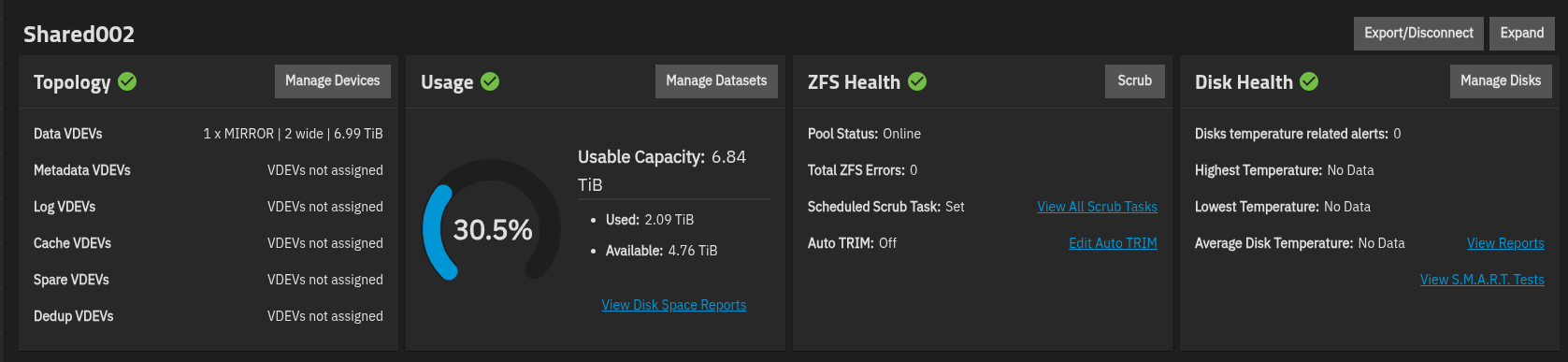

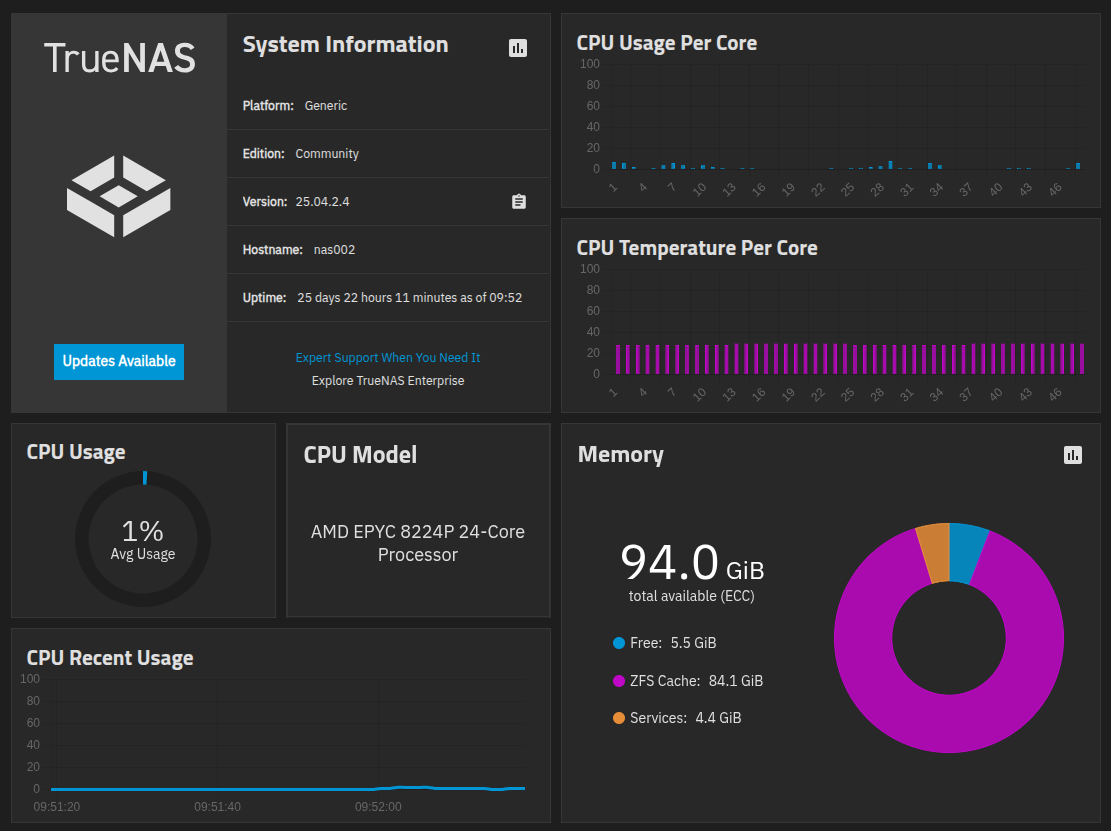

My fear was storage corruption, since with shared storage it would impact all VMs. I checked TrueNAS and everything appears to be healthy.

-

Job canceled to protect the VDI chain

Yesterday our backup job started failing for all VMs with the message:

"Job canceled to protect the VDI chain"

I have checked the docs regarding VDI chain protection:

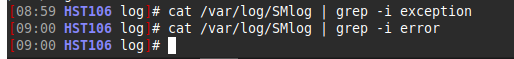

https://docs.xen-orchestra.com/backup_troubleshooting#vdi-chain-protection

The XCP-ng logs do not show any errors:

I am using TrueNAS as shared storage.