Remove backup bottleneck: please report

-

Thanks for the feedback. In your case, the Xeon D is likely causing the slowness on HTTPS. I'll update the doc to include this advice.

-

@olivierlambert Hmmm... this comes from a test lab box, no other activity occurring, VMs are powered off for the duration of these backup tests (except the XOA VM, which runs on a different box than the one being backed up), so there's zero load and I don't see the CPU ever getting over even 10% in dom0.

I have the same issue and see the same results on dual 24-core E5s. Granted, those are also 2.2 GHz. What would be the minimum acceptable CPU to overcome that 3x performance penalty of stunnel? I guess I should read up on the stunnel limitations more.

Could it be I'm not giving the XOA vm enough CPU/RAM? Or the wrong topology? I've looked for guidance on that too and haven't seen much online about it (especially in regards to speed).

Either way, I am excited that we can start taking advantage of that 3x increase in speed. Security can be handled by a VLAN/separate storage network, so really not concerned about using HTTP instead of HTTPS.

Any tips on how to configure storage/networks to take advantage of every bit of juice available would be awesome.

Thanks again for all you and your teams have done!!

-

@julien-f is doing a ton of work to rewire all the backup code so that it can be split into small bits. That will give us code that is easier to improve and benchmark relatively soon.

For example, we should have a stream "waiting" info to find which part of the chain is slowing things down. It's really hard without that to know where the bottleneck is.

Might be a limit in stunnel, but as soon as we get our "stream probe" we'll be able to pinpoint it more exactly.

Also, with and without HTTPS, are you seeing an XOA CPU usage change?

-

@olivierlambert Haven't looked at that in a while... I'll run a test here shortly.

-

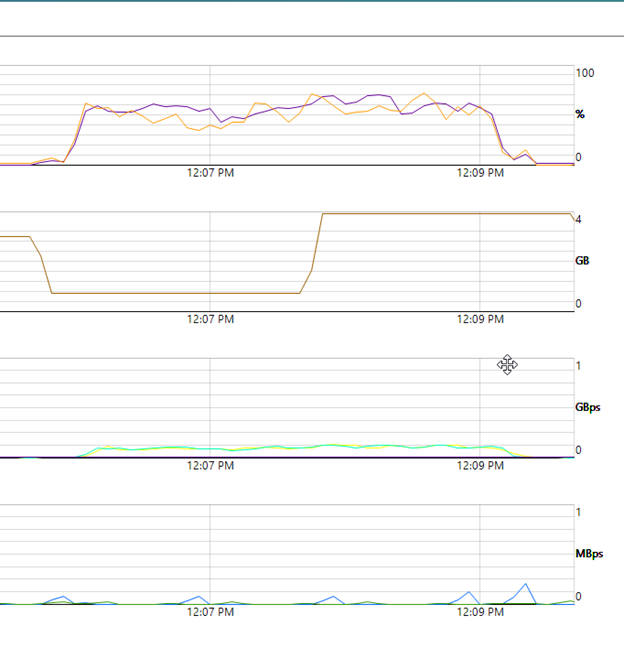

BTW, this is not quite the exact same setup as before (there are some test VMs idling in the background and I am only backing up a single VM). That said, dom0 on both the host running the VM being backed up and the host running the XOA box was in the 10%-15% range for the duration of the tests, and idling around 2-4%.

While the CPU peaks under HTTPS are slightly higher, the biggest thing I see is the wide divergence in CPU usage between the two cores under HTTPS. Under HTTP, they look to be in lock step. Also note that the RAM usage on the XOA box jumps to full about 25% of the way through the job. XOA was given 2 sockets with 1 core per socket and 4 GB RAM.

Oh, and you can see we aren't even maxing out 100 MB/s, let alone a 10 Gb link. Disks involved are single SSDs, SATA 6 Gb/s. So I don't expect to see 10 Gb/s, but still, shouldn't I expect to see way more than 50? Those SSDs can easily do 500.

HTTP / XOA performance: 3 mins for 18.89 GiB

HTTPS / XOA performance: 7 mins for 18.89 GiB
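For reference, the two durations above can be turned into average throughput with a bit of arithmetic. This is only a rough sketch: the function name is made up for illustration, and the durations are rounded to whole minutes in the post, so the results are approximate.

```python
def throughput_mib_s(size_gib: float, minutes: float) -> float:
    """Average throughput in MiB/s for size_gib GiB moved in `minutes` minutes."""
    return size_gib * 1024 / (minutes * 60)


# Figures taken from the two test runs reported above.
http = throughput_mib_s(18.89, 3)
https = throughput_mib_s(18.89, 7)

print(f"HTTP:  {http:.1f} MiB/s")
print(f"HTTPS: {https:.1f} MiB/s")
print(f"HTTP/HTTPS ratio: {http / https:.2f}x")
```

Since the size is identical in both runs, the ratio is simply 7/3, a bit over 2x for this particular test.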

-

Using Netdata would deliver far more insight into what's happening, both on the host and on XO.

-

Hello,

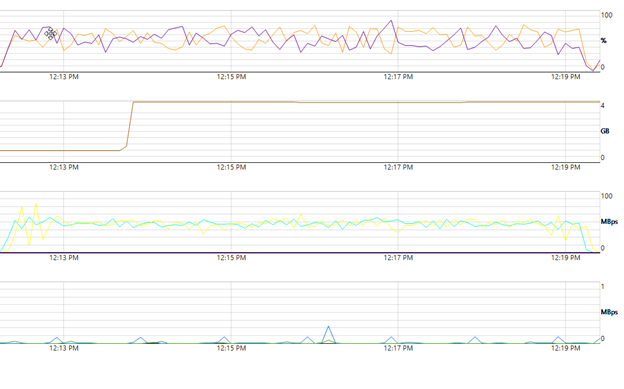

HTTP is ~7 times faster than HTTPS on our system for CR jobs. HTTPS ~50 MB/s. HTTP ~350 MB/s.

XO from source 5.60

XCP-ng 8.0

10 Gbit connection everywhere

-

@rizaemet-0 said in Remove backup bottleneck: please report:

Http ~7 times faster than https on our system for cr jobs. Https ~50MB/sec. Http ~350MB/sec.

Wow, 350 is really a nice speed!

-

For me, switching to HTTP seems to have increased the backup speed by about 3.5 times as well. However, I feel like it's still pretty slow. I went from a reported average transfer speed of 3.26 MiB/s to 11.59 MiB/s across 16 VMs. The setup is a pool of two XCP-ng 8.1 hypervisors and a Xen Orchestra Community 5.61 VM, backing up to an NFS share on a Synology RackStation over a 10 Gb/s storage network. When I test the write speed from the XO VM to the NFS share with dd, it averages about 95 MB/s.

Anything else I can do to try and figure out where the next bottleneck is?
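A dd-style sequential write test like the one mentioned above can also be sketched in Python, which makes it easy to repeat with different sizes. This is a minimal sketch, not an XO tool: the function name and defaults are assumptions, and the temp directory used at the bottom is only a stand-in that should be replaced with the actual NFS mount point.

```python
import os
import tempfile
import time


def write_throughput_mib_s(directory: str, total_mib: int = 64, block_mib: int = 1) -> float:
    """Write a temporary file into `directory` and return the average MiB/s."""
    block = os.urandom(block_mib * 1024 * 1024)  # incompressible data
    blocks = total_mib // block_mib
    fd, path = tempfile.mkstemp(dir=directory)
    try:
        start = time.perf_counter()
        with os.fdopen(fd, "wb") as f:
            for _ in range(blocks):
                f.write(block)
            f.flush()
            os.fsync(f.fileno())  # make sure data actually reached the storage
        elapsed = time.perf_counter() - start
    finally:
        os.remove(path)  # clean up the test file
    return blocks * block_mib / elapsed


if __name__ == "__main__":
    # Stand-in target: replace with the directory where the NFS share is mounted.
    target = tempfile.gettempdir()
    print(f"{write_throughput_mib_s(target):.1f} MiB/s")
```

The fsync call is there so the number reflects data that reached the storage rather than the page cache; a single sequential stream like this says nothing about how several concurrent backup streams behave.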

-

That's slow indeed.

It's not trivial. A lot of things could cause that. However, you can test by:

- changing backup concurrency to find a sweet spot (one VM, then 2 VMs, etc.)

- giving more memory to the dom0

- giving more memory to the XO VM

- having an SSD on the backup storage

- having an SSD on the storage repository

-

I will for sure try it and see how fast it is to back up only one VM at a time. Actually, I guess I don't know the answer to this: when XO does a delta backup to an NFS remote, does it attempt to transfer all VM snapshots at the same time, or does it do it sequentially, one after the other?

-

-

Thank you so much for this info, I can't believe I didn't see this in all of my research. With the information from that document I take it back. I am getting full bandwidth since 12 machines get backed up at a time. The individual VM transfer speed in the backup reports was a bit misleading to me since I didn't realize that 12 are uploaded to storage at a time.

My initial finding still stands though: going HTTP completely removed the one bottleneck that we had in our backup infrastructure and made it 3.5 times faster than before.
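To illustrate why the per-VM figure was misleading, here is a rough estimate of the aggregate rate. It assumes all 12 transfers run concurrently at the reported per-VM average for the whole job, which is an idealization, so treat the result as an upper bound rather than a measurement. The function name is made up for the example.

```python
def aggregate_mib_s(per_vm_mib_s: float, concurrency: int) -> float:
    """Idealized aggregate rate if `concurrency` VMs each transfer at per_vm_mib_s."""
    return per_vm_mib_s * concurrency


CONCURRENCY = 12  # VMs backed up at a time, as stated above

before = aggregate_mib_s(3.26, CONCURRENCY)   # reported per-VM average over HTTPS
after = aggregate_mib_s(11.59, CONCURRENCY)   # reported per-VM average over HTTP

print(f"HTTPS aggregate: {before:.1f} MiB/s")
print(f"HTTP aggregate:  {after:.1f} MiB/s")
print(f"speedup: {after / before:.2f}x")
```

The speedup is independent of the concurrency value, which is why the roughly 3.5x figure holds whether you look at per-VM or aggregate speed.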

-

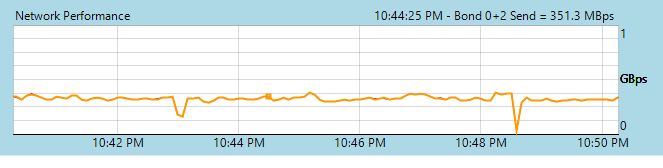

To get the complete speed, just take a look at XOA network stats while the backup is happening in… Xen Orchestra

-

Hi,

I'm very interested in trying this out.

Except I can't find how I should change the master URL. In XO, when I click on the disconnect button in the pool view, I receive an error.

-

@CIT same here. I was able to switch to HTTP at settings -> server. Remove the server and add it again with http://ip

Current backup speed without the change on XCP-ng 8.2:

Duration: 28 minutes

Size: 43.85 GiB

Speed: 26.86 MiB/s

Speed should be limited by the WD Red or by my Gigabit bandwidth to my NFS. The measured speed is far below that. x3 is expected (~80-100 MiB/s).

-

@ChuckNorrison Ah, thanks for updating... I edited my servers, hope to see some speed improvements!

-

Got about a 2x speed increase on a single thread: 60-80 MB/s > 110-120 MB/s with a 10 Gbit link.

But is this method recommended? I mean, does authorization now also work over HTTP?

-

The thread is a bit old. Since then we got NBD, which could speed things up a lot.

-

Yes, I understand, just an interesting solution.