Slow backups (updated XO source issue)

-

@julien-f @olivierlambert Current XO source (11cc299) is causing backups to run at about half speed (they take twice the normal time). This affects Continuous Replication and Delta Backups (to S3). I have not tested other backups. It looks like it may be caused by "validate VHD streams" (commit 68b2c28).

-

Adding @florent in the loop

-

@Andrew I'm surprised because I have done a lot of tests to ensure the impact would be minimal.

You can disable this check by setting the following entry in your xo-server/xo-proxy config:

    [backups.vm.defaultSettings]
    validateVhdStreams = false

If you can, it would be interesting if you could provide the duration of the backup job and the CPU usage on your XO appliance with and without the validation.

And please also provide the transferred size of the backup to ensure the difference does not come from there (an error I could very likely make ^^).

Also, we've just removed some other checks which were no longer necessary with this change: https://github.com/vatesfr/xen-orchestra/pull/6773
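
For anyone gathering the CPU figures requested above, here is a minimal sketch of one way to sample a process's CPU usage on Linux by reading `/proc/<pid>/stat`. The helper names (`read_cpu_ticks`, `cpu_percent`, `sample`) are made up for this illustration and are not part of XO; tools such as `top` report the same kind of number.

```python
import os
import time


def read_cpu_ticks(pid: int) -> int:
    """Return user + system CPU ticks consumed so far by a Linux process."""
    with open(f"/proc/{pid}/stat") as f:
        stat = f.read()
    # The command name (field 2) is parenthesised and may contain spaces,
    # so split on the last ')' and index the remaining fields from there.
    fields = stat.rsplit(")", 1)[1].split()
    utime, stime = int(fields[11]), int(fields[12])  # fields 14 and 15 of stat
    return utime + stime


def cpu_percent(delta_ticks: int, interval_s: float, ticks_per_s: int) -> float:
    """Convert a tick delta into a top-style percentage (100 = one full core)."""
    return 100.0 * delta_ticks / ticks_per_s / interval_s


def sample(pid: int, interval_s: float = 5.0) -> float:
    """Measure the average CPU usage of one process over one interval."""
    ticks_per_s = os.sysconf("SC_CLK_TCK")
    before = read_cpu_ticks(pid)
    time.sleep(interval_s)
    after = read_cpu_ticks(pid)
    return cpu_percent(after - before, interval_s, ticks_per_s)
```

Calling `sample(pid)` with the PID of the node process during a backup run, once with the validation enabled and once with it disabled, gives comparable numbers. A value that stays near 100% suggests the process is limited by a single core. If the backup runs in separate worker processes, those PIDs would need to be sampled as well.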

-

@julien-f Normally (before the issue) the CR run takes about 8-9 minutes and transfers about 10-15GiB. After the config change it has been taking longer (12-15 minutes) but is transferring more data (30-35GiB). I'll have to let it run a few more hours to see if the data size (and time) gets back to normal.
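
As a rough sanity check, the figures above can be converted to average throughput, which makes runs with different transferred sizes comparable. This is only a sketch based on the approximate ranges quoted in this post; the function name is made up for the example.

```python
GIB_IN_MIB = 1024


def throughput_mib_s(size_gib: float, minutes: float) -> float:
    """Average throughput in MiB/s for size_gib transferred in the given minutes."""
    return size_gib * GIB_IN_MIB / (minutes * 60)


# Slowest and fastest combinations of the ranges quoted above.
normal = (throughput_mib_s(10, 9), throughput_mib_s(15, 8))
after_change = (throughput_mib_s(30, 15), throughput_mib_s(35, 12))

print(f"normal runs:         {normal[0]:.0f}-{normal[1]:.0f} MiB/s")
print(f"after config change: {after_change[0]:.0f}-{after_change[1]:.0f} MiB/s")
```

With these numbers the normal runs work out to roughly 19-32 MiB/s and the runs after the config change to roughly 34-50 MiB/s, so the longer run time is consistent with the larger amount of data rather than with a lower transfer rate.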

I have been getting a lot of errors (xcp7 is the destination of the CR) too:

    root@xcp7 Xapi#putResource /import_raw_vdi/
    Error [ERR_STREAM_PREMATURE_CLOSE]: Premature close
        at new NodeError (node:internal/errors:399:5)
        at ClientRequest.onclose (node:internal/streams/end-of-stream:154:30)
        at ClientRequest.emit (node:events:525:35)
        at ClientRequest.patchedEmit [as emit] (/opt/xo/xo-builds/xen-orchestra-202304161246/@xen-orchestra/log/configure.js:52:17)
        at TLSSocket.socketCloseListener (node:_http_client:465:9)
        at TLSSocket.emit (node:events:525:35)
        at TLSSocket.patchedEmit [as emit] (/opt/xo/xo-builds/xen-orchestra-202304161246/@xen-orchestra/log/configure.js:52:17)
        at node:net:322:12
        at TCP.done (node:_tls_wrap:588:7)
        at TCP.callbackTrampoline (node:internal/async_hooks:130:17) {
      code: 'ERR_STREAM_PREMATURE_CLOSE'
    }

-

@Andrew This change should absolutely not change the transferred size, let's see how the future runs go

-

@julien-f The time it takes for CR to run seems to be back to normal with the added config:

    [backups.vm.defaultSettings]
    validateVhdStreams = false

I have Concurrency set to 4.

There's more than enough local ethernet bandwidth between machines and XO is running on the pool master. There are lots of cores and plenty of memory for XO. But with `validateVhdStreams = true` there seem to be some single-threaded issues with node because it uses 90%-120% CPU.

With `validateVhdStreams = false` the CPU usage is about 110%-150%, so there are still some single-threaded issues, but a lot less, as node is able to use more than one core (but not all of them).

Also... I see a warning that appeared with an earlier commit; it does not cause problems:

    transfer Suspend VDI not available for this suspended VM

-

@Andrew The suspend VDI warning is a bug introduced by https://github.com/vatesfr/xen-orchestra/pull/6774

It has no consequences apart from this message; I'll fix it ASAP.

-

I had the same problem. I have a CR that, until I applied the setting, took up to 5 days to make a backup (I interrupted the backup to see where the problem was). Now, with the setting, it takes 12 hours.

But the stability of the transfer is better by at least 15% compared to commit afadc8f95adf741611d1f298dfe77cbf1f895231.

-

@Andrew https://github.com/vatesfr/xen-orchestra/commit/136718df7e28d63f340c700564e5c1a90d1bf05e

Regarding the perf issue, I will work on it as soon as I'm able to reproduce on my side

If the impact is too big, we'll disable it by default…

-

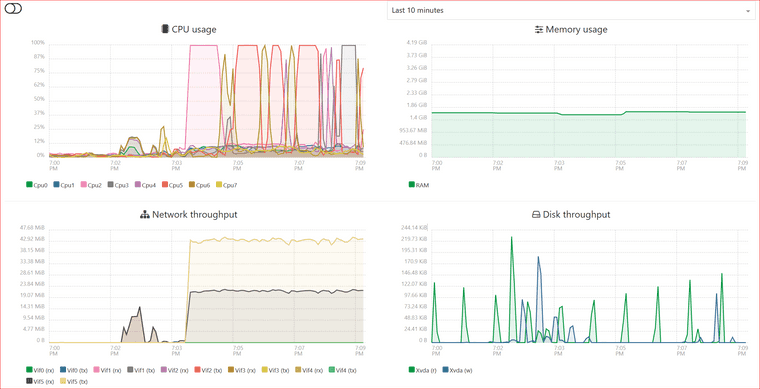

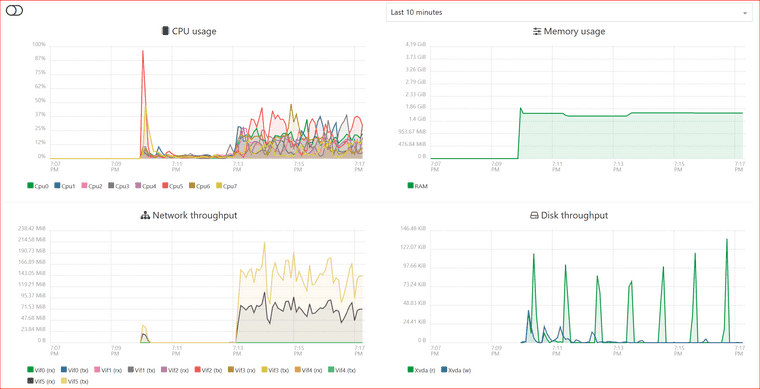

From what I've seen:

- when nothing is set, XOCE drives a single vCPU to the maximum and the rest to 5% at most. The transfer rate peaks at 23Mb/s.

- when the setting exists, XOCE uses all 12 vCPUs at between 30% and 45%. The transfer rate is between 145Mb/s and 350Mb/s.

In both cases, the transfer is done on a 10Gbit LAN and I use HTTP mode for connecting to servers.
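
To put the two observations side by side, here is a small sketch that adds up the per-vCPU figures into a top-style total and computes the ratio between the transfer rates. It only uses the numbers listed above; "the setting" is assumed to mean `validateVhdStreams = false`, and the variable names are made up for the example.

```python
VCPUS = 12  # vCPU count reported above


def total_cpu_percent(per_vcpu: list) -> float:
    """Sum per-vCPU utilisation into a top-style total (100 = one full core)."""
    return float(sum(per_vcpu))


# Without the setting: one vCPU saturated, the other 11 at 5% at most.
without_setting_max = total_cpu_percent([100.0] + [5.0] * (VCPUS - 1))

# With the setting: all 12 vCPUs between 30% and 45%.
with_setting = (total_cpu_percent([30.0] * VCPUS),
                total_cpu_percent([45.0] * VCPUS))

# Transfer rates, in the same unit as reported above.
rate_without = 23.0
rate_with = (145.0, 350.0)
speedup = (rate_with[0] / rate_without, rate_with[1] / rate_without)

print(f"total CPU without setting: up to {without_setting_max:.0f}%")
print(f"total CPU with setting:    {with_setting[0]:.0f}%-{with_setting[1]:.0f}%")
print(f"transfer speedup:          {speedup[0]:.1f}x to {speedup[1]:.1f}x")
```

On these figures the total CPU use goes from at most about 155% to 360%-540%, while the transfer rate improves by roughly 6x to 15x, which fits a workload that was previously limited by one core.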

-

Hmm this points to a concurrency issue with the backup workers

-

This is on a testing environment.

Version of XOCE

Transfer without setting

Transfer with setting

-

That's indeed very different. Out of curiosity, what's the CPU model?

-

Intel(R) Xeon(R) CPU E5-2620 v3 @ 2.40GHz

-

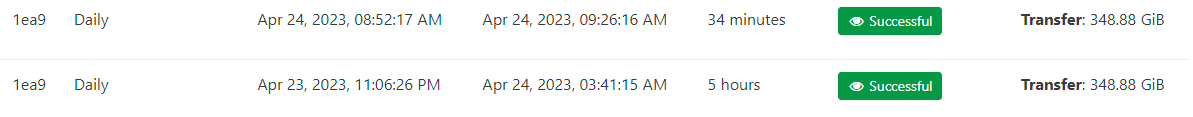

I too noticed my backup times went sky high recently. I just made the suggested switch in the config and that is the difference between before/after. My box has 2x E5-2697A v4 processors.

-

I've disabled the VHD stream validation by default for now: https://github.com/vatesfr/xen-orchestra/commit/0444cf0b3bc6902fc1a995da5cf29474b7f4f167

We'll re-enable it when we find a better solution.

Thank you all for your reports

-

@julien-f Thanks. I updated to 0444cf0 and CR times are normal again (as expected).