Updated XOA with kernel >5.3 to support nconnect nfs option

-

It would be lovely to see the stats view on your NFS server with the BR mounted before and after adding `nconnect`, ideally with different numbers of parallel TCP connections. In case you have some time to do it, that would be great!

-

Changing nconnect requires a restart of XOA (it seems a remount doesn't pick up a new nconnect value), and I'm heading home now, so I'll try some additional benchmarks later in the week.
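Because a remount apparently keeps the old value, it can help to check which `nconnect` value a mount is actually using before benchmarking. The sketch below parses `/proc/mounts`-format text. It assumes the kernel lists `nconnect=N` among the NFS mount options and treats a missing option as the default of one connection. The function name `nconnect_by_mount` is hypothetical and is not part of XO.

```python
"""Report the nconnect value each NFS mount is actually using.

Sketch only: assumes the kernel lists nconnect=N among the mount options
in /proc/mounts (absent is treated as the default of 1 connection).
"""
from pathlib import Path


def nconnect_by_mount(mounts_text: str) -> dict[str, int]:
    """Map each NFS mount point to its nconnect value from /proc/mounts-format text."""
    result: dict[str, int] = {}
    for line in mounts_text.splitlines():
        fields = line.split()
        # /proc/mounts columns: device, mount point, fs type, options, dump, pass
        if len(fields) < 4 or not fields[2].startswith("nfs"):
            continue
        mount_point, options = fields[1], fields[3].split(",")
        value = 1  # assumption: no nconnect option means a single TCP connection
        for opt in options:
            if opt.startswith("nconnect="):
                value = int(opt.split("=", 1)[1])
        result[mount_point] = value
    return result


if __name__ == "__main__":
    mounts = Path("/proc/mounts")
    if mounts.exists():
        for mount_point, n in nconnect_by_mount(mounts.read_text()).items():
            print(f"{mount_point}: nconnect={n}")
```

Suppose the value printed after a remount still shows the old connection count. That would be consistent with the observation above that a full XOA restart was needed.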

Btw, does XCP-ng 8.3 support nconnect? The 8.2 I'm using does not.

-

Nope, it will only apply to the traffic between XO and the Backup Repository (BR).

-

Ah, that's a shame, but reasonable for a point release. Maybe next major release?

This was an interesting read regarding nconnect on Azure https://learn.microsoft.com/en-us/azure/storage/files/nfs-performance

-

Yes, XCP-ng 9.x will likely be able to use it.

-

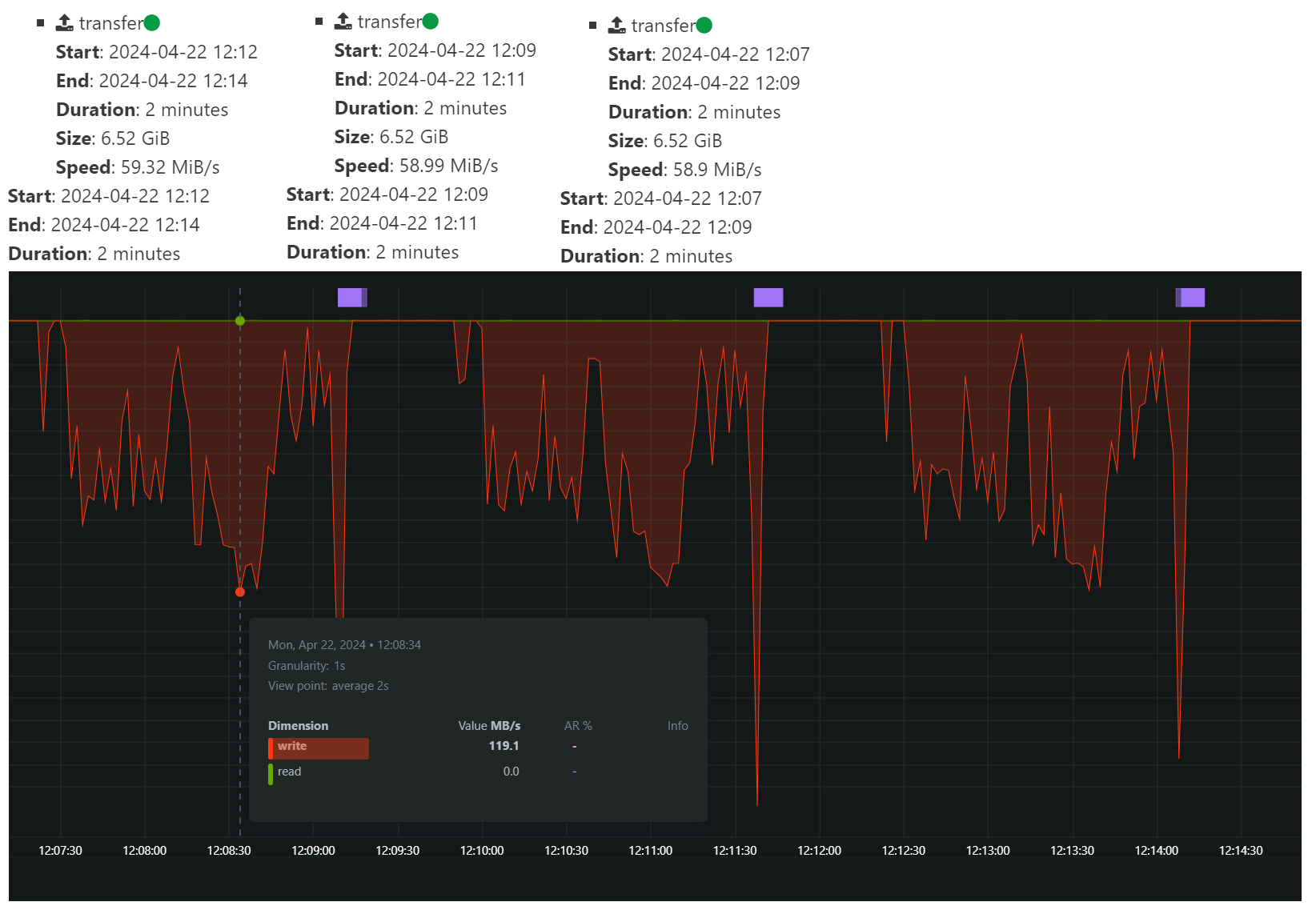

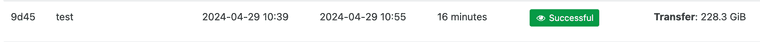

I ran 3 backups of a single VM stored on an SSD on the same host that XOA runs on, which is also the pool master.

This is with `nconnect=1`:

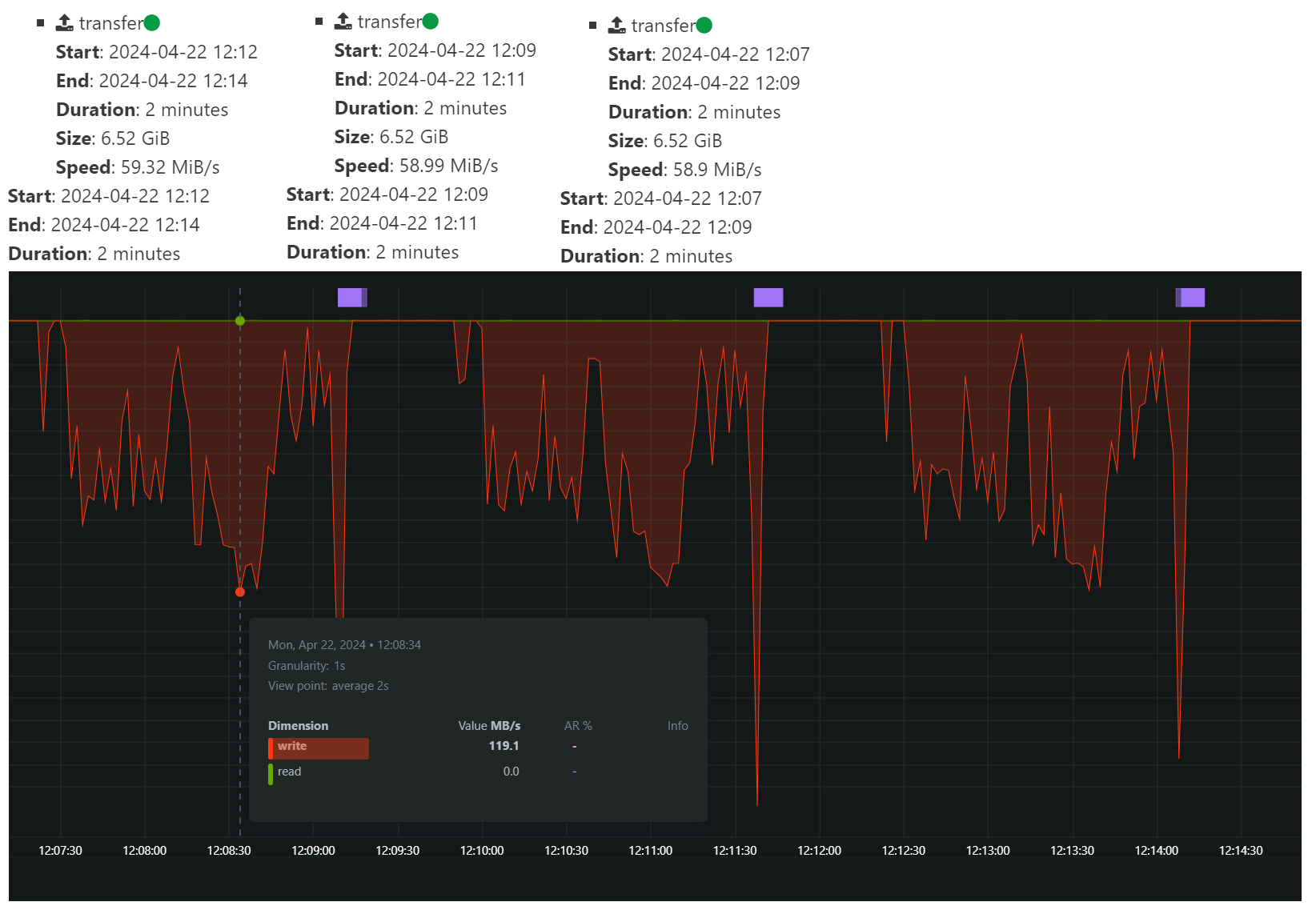

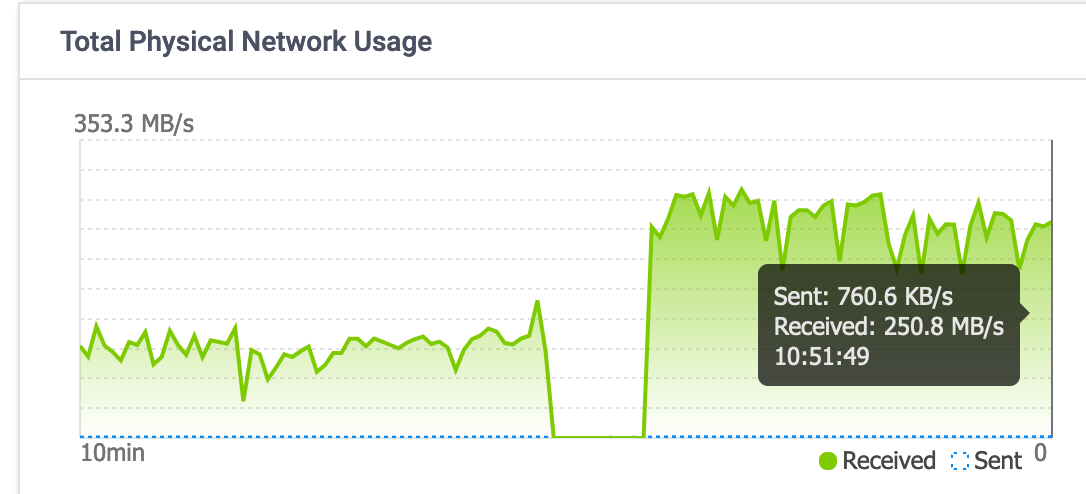

This is with `nconnect=16`:

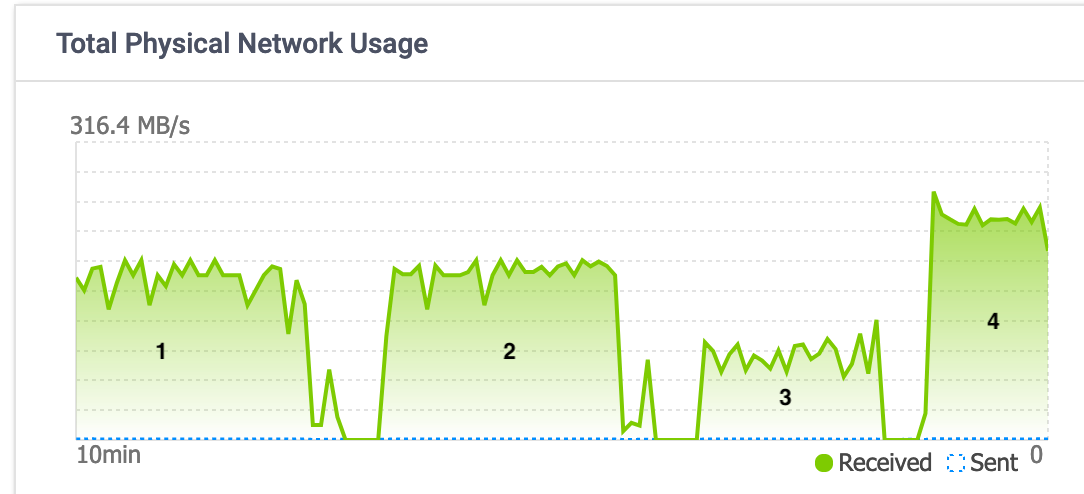

The transfer speed reported by XOA is slightly lower, but the bandwidth graph suggests that the LACP-bonded network on the storage server reaches a higher maximum throughput.
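One possible explanation for the higher peak involves how LACP bonds usually spread traffic. They distribute it per flow, hashing addresses and ports, so a single TCP connection stays on one bond member. Several connections can land on different members. The sketch below is a simplified model loosely based on the Linux bonding driver's `layer3+4` transmit hash. It does not reproduce the kernel or any switch exactly, and the IPs and ports are made up. It does not assume anything about how this particular storage server's bond is configured.

```python
"""Simplified model of per-flow link selection on an LACP bond.

Assumption: a layer3+4-style hash over IPs and ports, loosely modelled on
the Linux bonding driver's xmit_hash_policy=layer3+4; real switches and
kernels differ in detail. Addresses and ports below are made up.
"""
import ipaddress


def pick_link(src_ip: str, dst_ip: str, sport: int, dport: int, n_links: int) -> int:
    """Return the bond member index a given TCP flow would be hashed onto."""
    h = sport ^ dport
    h ^= int(ipaddress.IPv4Address(src_ip)) ^ int(ipaddress.IPv4Address(dst_ip))
    h ^= h >> 16
    h ^= h >> 8
    return h % n_links


def links_used(n_connections: int, n_links: int = 2) -> set[int]:
    """Links used by n parallel NFS connections (each with its own client source port)."""
    client, server, nfs_port = "10.0.0.10", "10.0.0.20", 2049
    return {
        pick_link(client, server, 50000 + i, nfs_port, n_links)
        for i in range(n_connections)
    }


print(links_used(1))   # a single flow always lands on exactly one link
print(links_used(16))  # parallel flows can be spread over several links
```

Under this model, `nconnect=1` can never use more than one bond member. Whether that explains the graphs depends on the hash policy actually configured on the bond and the switch.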

I will test some more with incremental backups and see if there's a difference with them.

If we ignore `nconnect` for a second and just look at the graphs, it seems there is a lot of room to improve backup performance if we could make the transfer more even. What causes this kind of pattern? I don't believe any coalesce was happening during this test.

-

That's a question for @florent

-

On the read side:

- legacy mode: XAPI builds an export from the VHD chain

- NBD: we read individual blocks from the storage repository

On the write side:

- default file mode: we write one big file per disk

- block mode: we write one compressed block per 2 MB of data

We don't have much room for improvement in legacy mode. NBD + block mode gives us more freedom and, instinctively, should gain more from nconnect, since we read and write many small blocks in parallel.
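To illustrate why block mode lends itself to parallel transfers, here is a toy model in which disk data is cut into fixed-size blocks and each block is compressed independently by a small thread pool. It is a sketch under stated assumptions, not XO's implementation. The standard library's zlib stands in for the real codec, "2 MB" is taken as 2 MiB, and blocks are kept in a dict instead of being written to a remote. All function names are hypothetical.

```python
"""Toy model of "block mode": one independently compressed block per 2 MiB of disk data.

Sketch under assumptions: zlib stands in for the real codec, blocks are
kept in a dict instead of being written to the remote, and the thread pool
only illustrates that blocks can be processed (and sent) in parallel.
"""
import zlib
from concurrent.futures import ThreadPoolExecutor

BLOCK_SIZE = 2 * 1024 * 1024  # assumption: "2 MB" read as 2 MiB of raw disk data


def split_blocks(data: bytes) -> list[bytes]:
    """Cut a disk image into fixed-size blocks (the last one may be shorter)."""
    return [data[i:i + BLOCK_SIZE] for i in range(0, len(data), BLOCK_SIZE)]


def store_blocks(data: bytes, workers: int = 4) -> dict[int, bytes]:
    """Compress every block independently, several at a time."""
    blocks = split_blocks(data)
    with ThreadPoolExecutor(max_workers=workers) as pool:
        compressed = list(pool.map(zlib.compress, blocks))
    return dict(enumerate(compressed))


def restore(stored: dict[int, bytes]) -> bytes:
    """Reassemble the disk by decompressing blocks in index order."""
    return b"".join(zlib.decompress(stored[i]) for i in sorted(stored))


disk = bytes(5 * 1024 * 1024)  # 5 MiB of zeros -> blocks of 2 + 2 + 1 MiB
stored = store_blocks(disk)
print(len(stored))  # 3
assert restore(stored) == disk
```

With one big file per disk there is essentially a single sequential stream. Independent blocks can be in flight at the same time, which is where extra `nconnect` connections would have something to parallelize.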

What mode are you using?

-

@florent Hi, for the full backup test above I used normal mode with zstd enabled. There were no snapshots of the source VM, and it was stored on local SSD storage on the pool master.

-

Just replying to thank you for pointing this out. I have been having very poor backup speeds for over a month, and this sorted it out.

I have only tried nconnect=4 and nconnect=6 for my NFS shares.

-

Hi @acomav

Can you give more details on your setup and the results before/after? We might use them to update our XO guide with the new values.

-

@olivierlambert Hi Olivier. I'll see what I can do. I've spent the weekend cleaning up my backups and catching up on mirror transfers. Once that's done, I'll run a few custom backups at various nconnect values.

-

@Forza said in Updated XOA with kernel >5.3 to support nconnect nfs option:

@florent hi, for the full backup test above, I used normal mode with zstd enabled. There were no snapshots of the source VM and it was stored on local ssd storage on the pool master.

Can you test a backup on an empty remote with block mode?

-

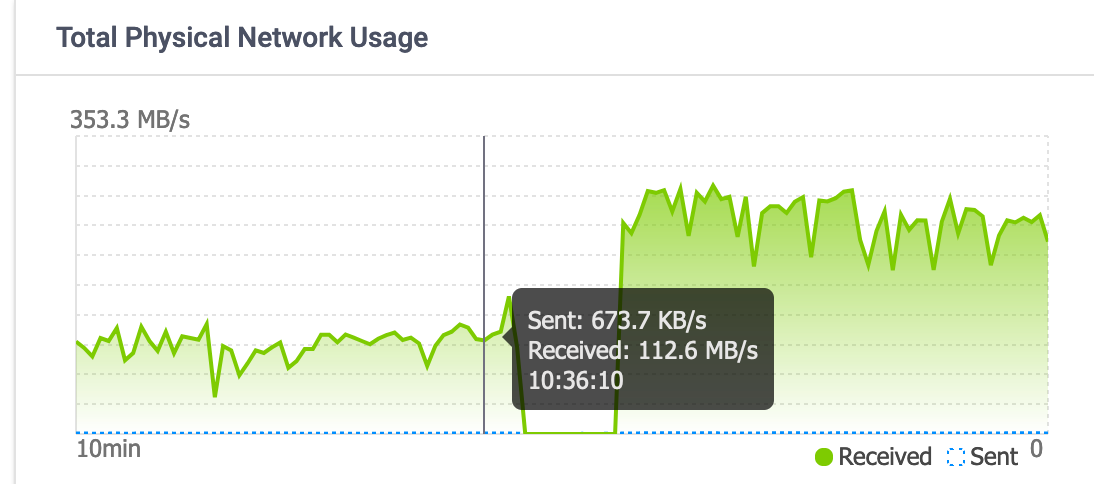

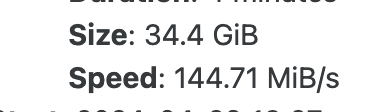

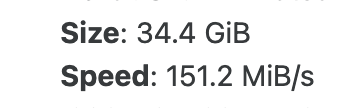

@florent Did a test on my 8.3 homelab.

Using XO on a Debian 12 VM: 2 full (delta) backups of all 10 of my VMs, using `nconnect=6`.

With block mode enabled on the remote:

Without block mode (my normal setup):

-

I'm not sure I understand your graphs. Can you write down the average values for each scenario?

-

With block mode: approx. 100-150 MB/s

Without block mode: approx. 200-300 MB/s

Values are from observed NAS traffic.

-

@manilx Do you mean `block` as in NBD mode?

-

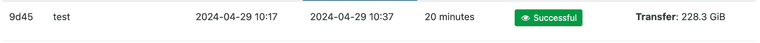

@Forza Did another test:

A: Remote as NFS share with "Store backup as multiple data blocks"

B: Same, but without multiple data blocks

1st backup to A, "Backup"

2nd backup to B, "Backup"

3rd backup to A, "Backup"

4th backup to B, "Delta Backup"

-

@manilx This was just one VM. I did not use NBD (forgot to turn it on).

-

I'm a bit confused: you are comparing block-based vs VHD, but did you also change the `nconnect` option between runs, or keep it at a specific number? (If the latter, which one?)