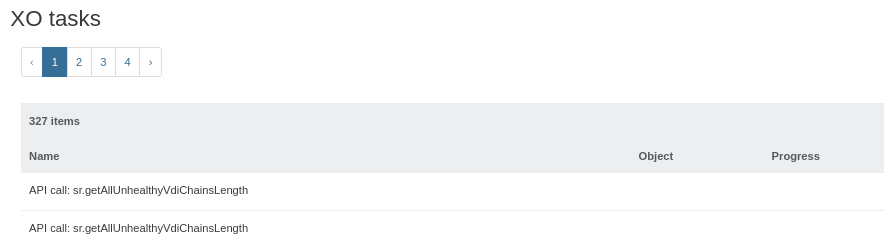

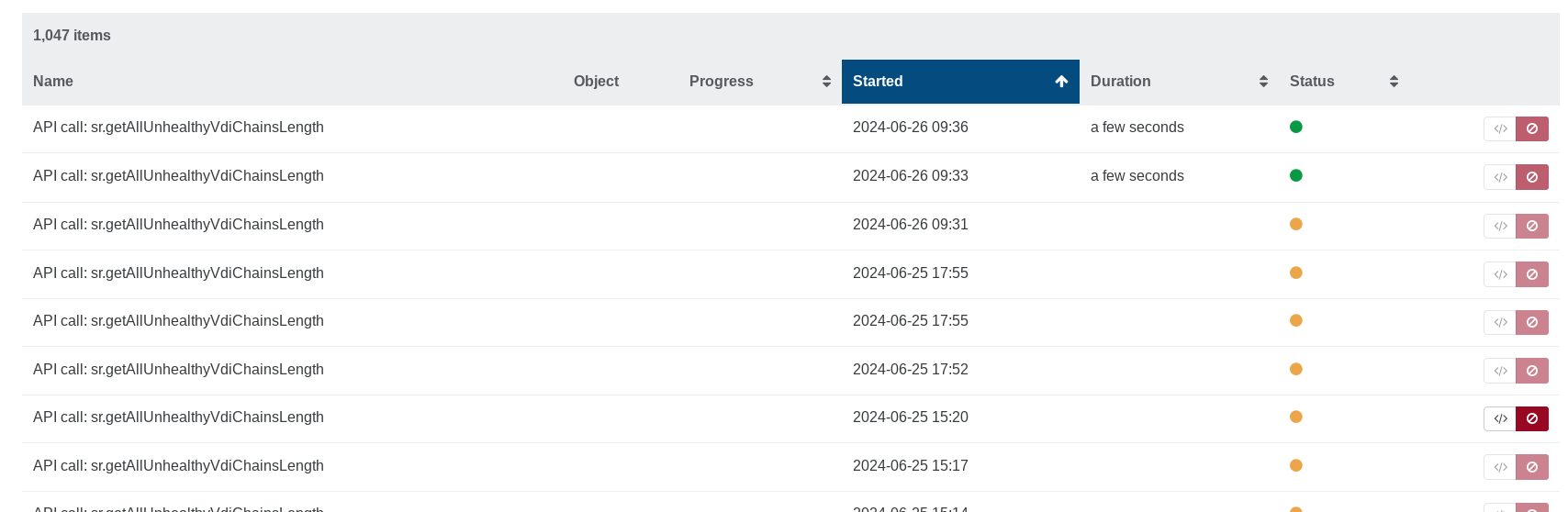

There is a huge number of `sr.getAllUnhealthyVdiChainsLength` API calls in the tasks.

-

This thread will be used to centralize all backup issues related to CBT: https://xcp-ng.org/forum/topic/9268/cbt-the-thread-to-centralize-your-feedback

-

After I saw that the CBT changes were reverted on GitHub, I updated my home server to the latest commit (253aa). I can report that my backups are now working as they should, and coalesce runs without issues, leaving a clean health check dashboard again. :) Glad to see this has been held back on XOA as well, as I was planning to stay on 5.95.1 otherwise! Looking forward to CBT eventually all the same!

-

@flakpyro Just updated, ran my delta backups, and all is fine.

-

Same problem here 300+ sessions and counting

XAPI restart did not solve the issue...

-

Doesn't seem to be respecting [NOBAK] anymore for storage repos. Tried '[NOSNAP][NOBAK] StorageName' and it still grabs it.

-

On the VDI name, right?

-

@olivierlambert I believe so. It is set in the Disks tab of a VM. [NOBAK] was working, but now the NAS is complaining about storage space. This VM has a disk on the NAS, so it is being backed up twice. I added [NOSNAP] just as a test, with the same result.

It is running a full backup if that makes any difference.
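For reference, the expected behaviour under discussion (a disk is skipped when its own VDI name contains `[NOBAK]`) can be sketched roughly as below. This is only an illustration, not Xen Orchestra's actual code: the `Vdi` class, the `vdis_to_back_up` helper, and the plain substring match are all assumptions made for the example, as is the idea that a tag placed in the SR name is not considered.

```python
from dataclasses import dataclass

NOBAK_TAG = "[NOBAK]"  # tag discussed in this thread


@dataclass
class Vdi:
    """Hypothetical stand-in for a virtual disk (not an XO type)."""
    name_label: str  # the disk name shown in the VM's Disks tab
    sr_name: str     # name of the storage repository holding the disk


def vdis_to_back_up(vdis):
    """Keep only the disks whose own name label lacks the tag.

    Assumption: only the VDI name label is inspected, never the SR name.
    """
    return [v for v in vdis if NOBAK_TAG not in v.name_label]


disks = [
    Vdi("system", "Local SSD"),
    Vdi("[NOBAK] nas-data", "NAS"),
    # Tag placed on the storage name instead of the disk name:
    Vdi("scratch", "[NOSNAP][NOBAK] StorageName"),
]

kept = vdis_to_back_up(disks)
print([v.name_label for v in kept])  # ['system', 'scratch']
```

Under these assumptions, the disk tagged in its own name is skipped, while the one whose tag sits only in the storage name is still backed up.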

-

Ping @julien-f

-

@ryan-gravel I cannot reproduce, both Full Backup and Incremental Backup correctly ignore VDIs with `[NOBAK]` in their name label.

-

@julien-f It's happening to me too... my `[NOBAK]` disk is being backed up now (commit 0e4a3) using the normal Backup (running a full).

-

With today's update (feat: release 5.96.0, master 96b7673) things are working well again. I have not seen any Orphan VDI/snapshots or stuck Control Domain VDIs (but it may take time to test). Thanks to the Vates team!

-

Hello, can you please try the `buffered-task-events` branch and let me know if that solves the issue?

Thanks a lot for everyone's help!
