CBT: the thread to centralize your feedback

-

@olivierlambert the vdi_in_use error we saw in regarding to destroy has not been occuring again. The vdi_in_use error regarding data_destroy is still occuring. Not shure if the fix did anything or that we were lucky not to see issues last night on it. I believe @florent is right and this is caused by a timing issue but maybe we need more time then 4 seconds.

-

@rtjdamen I do run from sources and I was on the latest build when I posted. One of my first steps in regards to any issue is to update XO. I do not have any speed limits configured for the job.

@olivierlambert It seems to be happening fairly regularly for me. At least every couple of days. Let me know what I can do to help troubleshoot.

It appears that the same thing has happened again. One VM got attached to the Control Domain yesterday and therefore failed to backup. What is odd is that now I have another VM listed twice as being attached to the Control Domain. Oddly, they show the same attached UUID but different VBD UUIDs.

I see that there are patches available for the pool, so I will apply those as well as update XO and remove the Control attached VMs.

-

today i got issues with one of our hosts, i needed to do a hard reset of it. Now i get the error "can't create a stream from a metadata VDI, fall back to a base " for these vms hosted on this machine. I believe this error message is caused because the CBT got invalid after the hard reset. I remember we had this kind of error messages on vmware as well when a host crashed. Maybe if this is the case the error message should be update to something like "CBT is invalid fall back to base" it makes more sense that way.

-

@CJ strange indeed, are the backups succesfull or do they fail as well?

-

@rtjdamen The one backup failed last night, but the next one hasn't run yet. However, I expect both VMs to fail. Due to issues applying the patches I haven't cleared the control attached domain yet.

-

@CJ strange issue, not something we see on our end. Maybe vates has an idea?

-

@rtjdamen Agreed. I'm waiting for them to respond regarding my patching problems so I can test the latest updates. If that still has problems then hopefully they can let me know what they need to investigate the issue.

-

I'm now updated to the latest patches and XO version. I've cleaned out the control attached VMs and the orphans. We'll see how the backup goes tonight.

-

@CJ fingers crossed!

-

Hi all, i can confirm the vdi_in_use error is resolved by https://github.com/vatesfr/xen-orchestra/pull/7960 we no longer see issues there.

Only remaining issue we see is the “ can’t create a stream from a metadata vdi fall back to base”

-

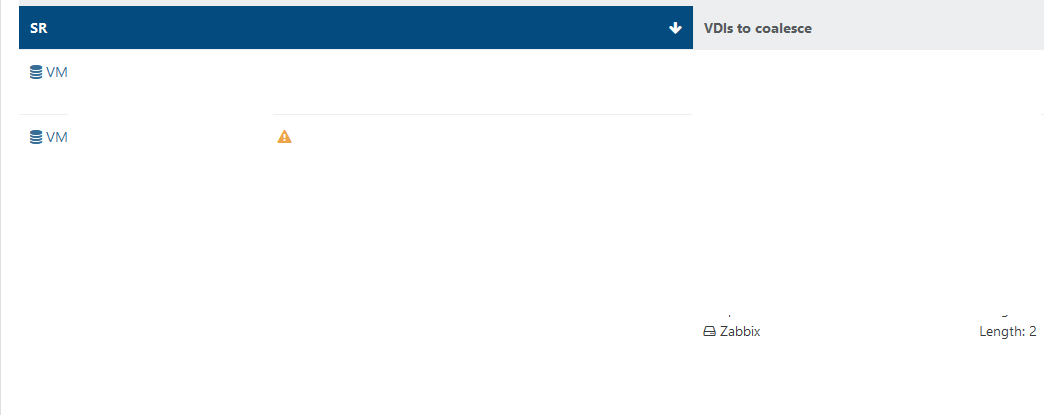

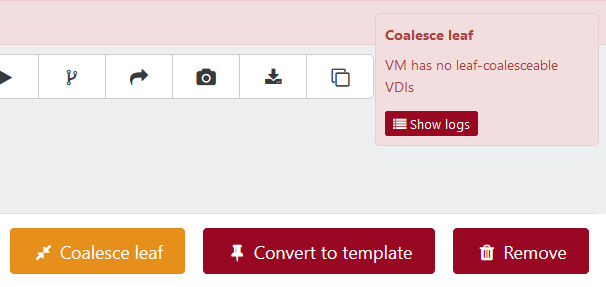

not sure is it CBT related, but 1 pool got a huge coalesce queue after i tried migrate VDI to another storage.

for example, this VM halted for a months with disabled backups. How it possible to get coalesce this way?

-

@Tristis-Oris Coalesce Leaf is only valid with no snapshots on the vm, if there is one this is just coalesce and the button does not work. Maybe check your smlog to see if there are any coalesce related errors. Also check if there are vdi's attached to the control domain, sometimes this can prevent coalesce from occuring.

-

Unfortunately, I have to report that my 3 problem VMs all have attached themselves to the control domain again.

-

Things continue to get weirder. I now have three copies of one VM attached to the control domain along with one copy each of the other two VMs but the delta backup says that it successfully completed.

Any ideas why the one VM keeps getting additional disks attached to the control domain?

-

@CJ Do you have Number of NBD connection per disk set to 1 (one), or is it set higher? If it's set higher than 1, try setting to back to 1. I have the same problem when I use higher than 1.

-

@Andrew I have it set to 4. But it's only these 3 VMs, not all of the VMs part of the backup job.

-

Try to set it to 1 first and see if you still have the same problem (after cleaning the previous left overs)

-

@olivierlambert That's the really weird part. Other than the notice on the dashboard, I'm not having any problems.

The backups are completing successfully. Which is a definite change from before I updated everything. Then the backups would fail for any VM with an attached disk.

The backups are completing successfully. Which is a definite change from before I updated everything. Then the backups would fail for any VM with an attached disk. -

@CJ we have the number of nbd connections also set to 1, did some testing with more but had issues with it and it gave no performance improvement. Maybe this is causing your issue?

-

This is odd. It seems to need to get to a certain point before backups start failing. I have the one VM with 3 disks attached to the control, the other two with only one disk each attached, and now a fourth VM with only one disk attached. However, the backup only failed the original three VMs. The backup failed with "VDI_IN_USE(OpaqueRef:UUID, destroy)".

I've changed the number of NBD connections to 1 so we'll see if that stops the attachment issue.

There appears to be a problem with the backup report email, however. It states "Success: 0/N" while the actual job report shows that only the three VMs failed and the others succeeded.