CBT: the thread to centralize your feedback

-

Hi all, I can confirm the vdi_in_use error is resolved by https://github.com/vatesfr/xen-orchestra/pull/7960; we no longer see issues there.

The only remaining issue we see is the "can't create a stream from a metadata VDI, fall back to a base" message.

-

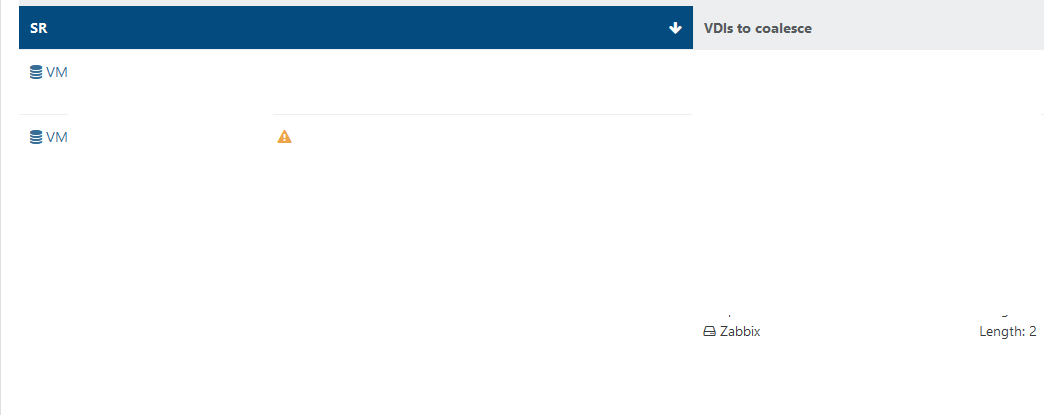

Not sure if it's CBT related, but one pool got a huge coalesce queue after I tried to migrate a VDI to another storage.

For example, this VM has been halted for months with backups disabled. How is it possible to end up with a coalesce this way?

-

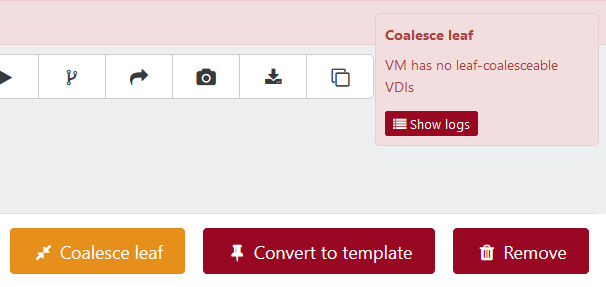

@Tristis-Oris Coalesce Leaf only applies when the VM has no snapshots; if there is one, it is just a regular coalesce and the button does not work. Maybe check your SMlog for any coalesce-related errors. Also check whether any VDIs are attached to the control domain; sometimes this can prevent coalesce from occurring.
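A minimal sketch of the control-domain check, assuming you have already fetched the VM and VBD records as dicts keyed by OpaqueRef (for example with the XenAPI Python bindings' `VM.get_all_records()` and `VBD.get_all_records()`). The helper name `vdis_attached_to_dom0` is hypothetical, not something shipped with XO or XCP-ng, and it simply treats every non-empty VBD owned by a control domain as suspicious:

```python
from typing import Dict, List, Tuple

# XenAPI uses this reference for "no object", e.g. an empty CD drive.
NULL_REF = "OpaqueRef:NULL"


def vdis_attached_to_dom0(
    vms: Dict[str, dict], vbds: Dict[str, dict]
) -> List[Tuple[str, str]]:
    """Return sorted (vbd_ref, vdi_ref) pairs for VBDs owned by any control domain.

    Assumption: records use the XenAPI field names `is_control_domain`
    (VM) and `VM` / `VDI` (VBD). A pool has one control domain per host,
    so all of them are collected.
    """
    dom0_refs = {ref for ref, vm in vms.items() if vm.get("is_control_domain")}
    hits = []
    for vbd_ref, vbd in vbds.items():
        # Skip VBDs of normal guests and empty VBDs with no VDI behind them.
        if vbd.get("VM") in dom0_refs and vbd.get("VDI", NULL_REF) != NULL_REF:
            hits.append((vbd_ref, vbd["VDI"]))
    return sorted(hits)


# Against a live pool (not run here), something like:
#   import XenAPI
#   session = XenAPI.Session("https://<pool-master>")
#   session.xenapi.login_with_password("root", "<password>")
#   print(vdis_attached_to_dom0(session.xenapi.VM.get_all_records(),
#                               session.xenapi.VBD.get_all_records()))
```

Anything this returns outside of a running backup is a candidate leftover worth inspecting before expecting coalesce to proceed.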

-

Unfortunately, I have to report that my 3 problem VMs have all attached themselves to the control domain again.

-

Things continue to get weirder. I now have three copies of one VM attached to the control domain, along with one copy each of the other two VMs, but the delta backup says that it completed successfully.

Any ideas why the one VM keeps getting additional disks attached to the control domain?

-

@CJ Do you have "Number of NBD connection per disk" set to 1 (one), or is it set higher? If it's set higher than 1, try setting it back to 1. I have the same problem when I use a value higher than 1.

-

@Andrew I have it set to 4. But it's only these 3 VMs, not all of the VMs in the backup job.

-

Try setting it to 1 first and see if you still have the same problem (after cleaning up the previous leftovers).

-

@olivierlambert That's the really weird part. Other than the notice on the dashboard, I'm not having any problems.

The backups are completing successfully, which is a definite change from before I updated everything. Back then, the backups would fail for any VM with an attached disk.

-

@CJ we also have the number of NBD connections set to 1. We did some testing with more, but had issues with it and it gave no performance improvement. Maybe this is causing your issue?

-

This is odd. It seems to need to reach a certain point before backups start failing. I have the one VM with 3 disks attached to the control domain, the other two with only one disk each attached, and now a fourth VM with only one disk attached. However, the backup only failed the original three VMs. The backup failed with "VDI_IN_USE(OpaqueRef:UUID, destroy)".

I've changed the number of NBD connections to 1 so we'll see if that stops the attachment issue.

There appears to be a problem with the backup report email, however. It states "Success: 0/N" while the actual job report shows that only the three VMs failed and the others succeeded.

-

@olivierlambert @florent @CJ Backups have been much more stable since the latest XO update on 10-Sep-2024 (XOA 5.98.1, master commit 4c7acc1).

Running CR and CBT/NBD with 2 connections no longer leaves stranded VDIs (at least, I have not seen any yet).

-

@Andrew we see the same behavior here, no strange backup issues so far!

-

No attached disks so far, but I'll wait until next week to bump up the NBD connections to make sure.

-

Unfortunately, as soon as I bumped the NBD connections up to 2 I got an attached disk. It doesn't seem like the latest changes have fixed the issue.

Xen Orchestra, commit 74e6f

-

This post is deleted! -

@florent deployed a fix last week that resolved the vdi_in_use errors; however, after updating to the latest XOA release, that problem came back. Not sure if this is a new issue or a problem with the fix.

-

@CJ seems like an issue with the NBD connections then… hope this is something that can be fixed easily.

-

Regarding the "can't create a stream from a metadata VDI, fall back to a base" issue I have been seeing after performing a VM migration from one host to another, I also notice the following in the SMlog.

Note: I also see this in the SMlog on the pool master after a VM migration even when snapshot delete is not enabled and only NBD + CBT is enabled. In that case (snapshot delete disabled), the regular delta backup proceeds anyway and works fine. With snapshot delete enabled, I see "can't create a stream from a metadata VDI, fall back to a base". Running the job again afterwards produces no error in the SMlog; the error only appears after a VM migration between hosts.

Log snippet:

Sep 17 21:07:40 xcpng-prd-03 SM: [1126578] lock: opening lock file /var/lock/sm/afd3edac-3659-4253-8d6e-76062399579c/cbtlog
Sep 17 21:07:40 xcpng-prd-03 SM: [1126578] lock: acquired /var/lock/sm/afd3edac-3659-4253-8d6e-76062399579c/cbtlog
Sep 17 21:07:40 xcpng-prd-03 SM: [1126578] ['/usr/sbin/cbt-util', 'get', '-n', '/var/run/sr-mount/16e4ecd2-583e-e2a0-5d3d-8e53ae9c1429/afd3edac-3659-4253-8d6e-76062399579c.cbtlog', '-c']
Sep 17 21:07:40 xcpng-prd-03 SM: [1126578] pread SUCCESS
Sep 17 21:07:40 xcpng-prd-03 SM: [1126578] lock: released /var/lock/sm/afd3edac-3659-4253-8d6e-76062399579c/cbtlog
Sep 17 21:07:40 xcpng-prd-03 SM: [1126578] Raising exception [460, Failed to calculate changed blocks for given VDIs. [opterr=Source and target VDI are unrelated]]
Sep 17 21:07:40 xcpng-prd-03 SM: [1126578] ***** generic exception: vdi_list_changed_blocks: EXCEPTION <class 'xs_errors.SROSError'>, Failed to calculate changed blocks for given VDIs. [opterr=Source and target VDI are unrelated]
Sep 17 21:07:40 xcpng-prd-03 SM: [1126578] File "/opt/xensource/sm/SRCommand.py", line 111, in run
Sep 17 21:07:40 xcpng-prd-03 SM: [1126578] return self._run_locked(sr)
Sep 17 21:07:40 xcpng-prd-03 SM: [1126578] File "/opt/xensource/sm/SRCommand.py", line 161, in _run_locked
Sep 17 21:07:40 xcpng-prd-03 SM: [1126578] rv = self._run(sr, target)
Sep 17 21:07:40 xcpng-prd-03 SM: [1126578] File "/opt/xensource/sm/SRCommand.py", line 326, in _run
Sep 17 21:07:40 xcpng-prd-03 SM: [1126578] return target.list_changed_blocks()
Sep 17 21:07:40 xcpng-prd-03 SM: [1126578] File "/opt/xensource/sm/VDI.py", line 757, in list_changed_blocks
Sep 17 21:07:40 xcpng-prd-03 SM: [1126578] "Source and target VDI are unrelated")
Sep 17 21:07:40 xcpng-prd-03 SM: [1126578]
Sep 17 21:07:40 xcpng-prd-03 SM: [1126578] ***** NFS VHD: EXCEPTION <class 'xs_errors.SROSError'>, Failed to calculate changed blocks for given VDIs. [opterr=Source and target VDI are unrelated]
Sep 17 21:07:40 xcpng-prd-03 SM: [1126578] File "/opt/xensource/sm/SRCommand.py", line 385, in run
Sep 17 21:07:40 xcpng-prd-03 SM: [1126578] ret = cmd.run(sr)
Sep 17 21:07:40 xcpng-prd-03 SM: [1126578] File "/opt/xensource/sm/SRCommand.py", line 111, in run
Sep 17 21:07:40 xcpng-prd-03 SM: [1126578] return self._run_locked(sr)
Sep 17 21:07:40 xcpng-prd-03 SM: [1126578] File "/opt/xensource/sm/SRCommand.py", line 161, in _run_locked
Sep 17 21:07:40 xcpng-prd-03 SM: [1126578] rv = self._run(sr, target)
Sep 17 21:07:40 xcpng-prd-03 SM: [1126578] File "/opt/xensource/sm/SRCommand.py", line 326, in _run
Sep 17 21:07:40 xcpng-prd-03 SM: [1126578] return target.list_changed_blocks()
Sep 17 21:07:40 xcpng-prd-03 SM: [1126578] File "/opt/xensource/sm/VDI.py", line 757, in list_changed_blocks
Sep 17 21:07:40 xcpng-prd-03 SM: [1126578] "Source and target VDI are unrelated")
Sep 17 21:07:40 xcpng-prd-03 SM: [1126578]
Sep 17 21:07:40 xcpng-prd-03 SM: [1126578] lock: closed /var/lock/sm/afd3edac-3659-4253-8d6e-76062399579c/cbtlog
Sep 17 21:07:40 xcpng-prd-03 SM: [1126578] lock: closed /var/lock/sm/16e4ecd2-583e-e2a0-5d3d-8e53ae9c1429/sr
Sep 17 21:07:40 xcpng-prd-03 SM: [1126556] FileVDI._snapshot for c56e5d87-1486-41da-86d4-92ede62de75a (type 2)
Sep 17 21:07:40 xcpng-prd-03 SM: [1126556] ['uuidgen', '-r']
Sep 17 21:07:40 xcpng-prd-03 SM: [1126556] pread SUCCESS

-
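To see how often this happens across migrations, a rough Python sketch that scans SMlog lines for the "Source and target VDI are unrelated" exception might help. It assumes the syslog layout visible in the snippet (`<timestamp> <host> SM: [<pid>] <message>`) and only counts the `Raising exception` line, so each failed `vdi_list_changed_blocks` call is reported once rather than once per traceback line. The function name and regex are hypothetical, not part of the SM tooling:

```python
import re
from collections import defaultdict
from typing import Dict, Iterable, List

# Assumed layout: "Sep 17 21:07:40 xcpng-prd-03 SM: [1126578] <message>".
# " +" tolerates syslog's double space before single-digit days.
LINE_RE = re.compile(r"^(\w{3} +\d+ [\d:]{8}) (\S+) SM: \[(\d+)\] ?(.*)$")
NEEDLE = "Source and target VDI are unrelated"


def unrelated_vdi_errors(lines: Iterable[str]) -> Dict[str, List[str]]:
    """Map "host/pid" to the timestamps of matching 'Raising exception' lines."""
    hits: Dict[str, List[str]] = defaultdict(list)
    for line in lines:
        m = LINE_RE.match(line.rstrip("\n"))
        if not m:
            continue  # not an SM syslog line
        ts, host, pid, msg = m.groups()
        if msg.startswith("Raising exception") and NEEDLE in msg:
            hits[f"{host}/{pid}"].append(ts)
    return dict(hits)


if __name__ == "__main__":
    # On a host, SMlog normally lives at /var/log/SMlog.
    with open("/var/log/SMlog") as f:
        for key, stamps in unrelated_vdi_errors(f).items():
            print(key, stamps)
```

Lining the reported timestamps up against the times of host-to-host migrations would show whether every occurrence really follows a migration.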

I wonder whether the migration is also migrating the VDI with it, which shouldn't be the case. What exactly are you doing to migrate the VM?