MAP_DUPLICATE_KEY error in XOA backup: VMs won't start now!

-

XOA: Current version: 5.95.2

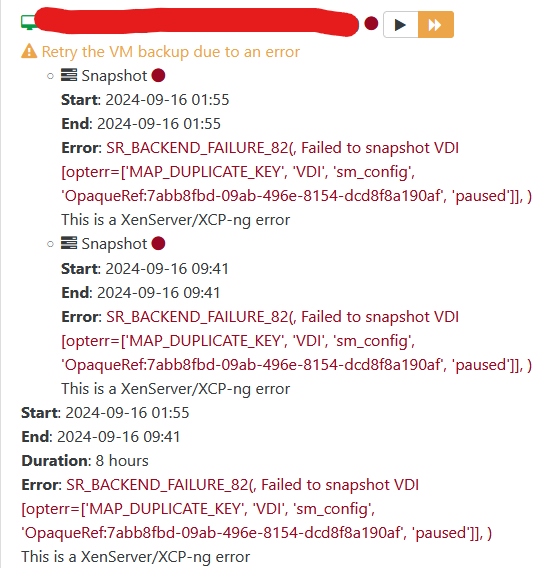

XCP-ng: Latest stable XCP-ng 8.2.1 (GPLv2)

A few days ago I started to see the following error during XOA backups:

I am running a single backup task with Delta Backup and Continuous Replication. 7 VMs are affected.

Error: SR_BACKEND_FAILURE_82(, Failed to snapshot VDI [opterr=['MAP_DUPLICATE_KEY', 'VDI', 'sm_config', 'OpaqueRef:bd76d2e6-f329-4488-ae82-c53b48ea873c', 'paused']], )

When I restart the task, I get the same error.

I removed the existing snapshot for the backup task and tried the backup again, but still got the same error.
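The `opterr` payload in that message is a Python-style list, so it can be pulled apart to see exactly which object and key XAPI rejected. Below is a minimal sketch, assuming the message always has the shape shown above; `parse_opterr` is a hypothetical helper, not part of XOA or XCP-ng. Read this way, the snapshot failed because the VDI's `sm_config` map already contained a `paused` key, which suggests (but does not prove) that an earlier pause of the VDI was never cleaned up.

```python
import ast
import re


def parse_opterr(message: str):
    """Return (SR_BACKEND_FAILURE code, opterr list) from an XOA error string.

    Assumes the format seen in this thread:
    SR_BACKEND_FAILURE_<n>(, <text> [opterr=[...]], )
    Either element is None if it cannot be found.
    """
    code_match = re.search(r"(SR_BACKEND_FAILURE_\d+)", message)
    # The opterr list contains no nested brackets, so stop at the first ']'.
    list_match = re.search(r"opterr=(\[[^\]]*\])", message)
    code = code_match.group(1) if code_match else None
    # literal_eval parses the list literal without executing any code.
    opterr = ast.literal_eval(list_match.group(1)) if list_match else None
    return code, opterr


msg = ("Error: SR_BACKEND_FAILURE_82(, Failed to snapshot VDI [opterr=['MAP_DUPLICATE_KEY', "
       "'VDI', 'sm_config', 'OpaqueRef:bd76d2e6-f329-4488-ae82-c53b48ea873c', 'paused']], )")
code, (err, cls, field, ref, key) = parse_opterr(msg)
print(code, err, f"{cls}.{field}[{key!r}]", ref)
```

The same helper returns `(code, None)` for messages whose `opterr` is plain text, such as the "not detached cleanly" failure.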

I shut down one of the affected VMs. When I tried to start it back up, I got a VDI error and the VM won't start:

SR_BACKEND_FAILURE_46(, The VDI is not available [opterr=VDI 3a4bdcc6-ce4c-4c00-aab3-2895061229f2 not detached cleanly], )

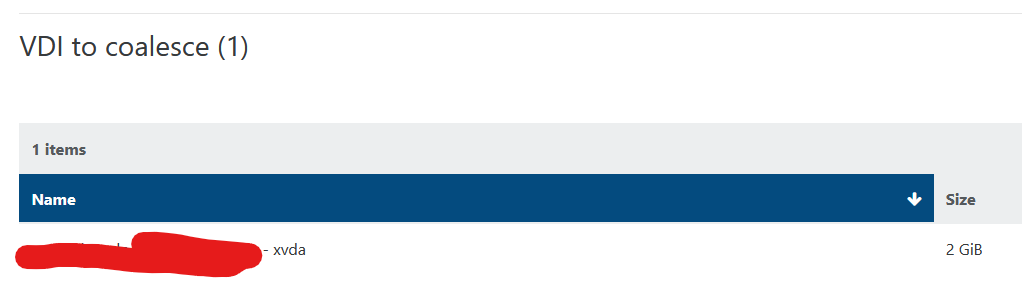

This is a very serious issue. Then I noticed that coalesce is stuck for the VDI.

SMlog shows the following:

Sep 16 01:55:28 fen-xcp-01 SM: [9450] ['/usr/sbin/td-util', 'query', 'vhd', '-vpfb', '/var/run/sr-mount/cfda3fef-663a-849b-3e47-692607e612e4/3a4bdcc6-ce4c-4c00-aab3-2895061229f2.vhd']
Sep 16 01:55:28 fen-xcp-01 SM: [9450] vdi_snapshot {'sr_uuid': 'cfda3fef-663a-849b-3e47-692607e612e4', 'subtask_of': 'DummyRef:|ea2c58ab-ed05-4070-9eb7-7466dd300894|VDI.snapshot', 'vdi_ref': 'OpaqueRef:7abb8fbd-09ab-496e-8154-dcd8f8a190af', 'vdi_on_boot': 'persist', 'args': [], 'o_direct': False, 'vdi_location': '3a4bdcc6-ce4c-4c00-aab3-2895061229f2', 'host_ref': 'OpaqueRef:a34e39e4-b42d-48d3-87a9-6eec76720259', 'session_ref': 'OpaqueRef:9f9d085f-9105-4dc5-b715-77ef059bc0c6', 'device_config': {'server': '10.20.86.80', 'options': '', 'SRmaster': 'true', 'serverpath': '/mnt/srv/xcp_lowIO', 'nfsversion': '4.1'}, 'command': 'vdi_snapshot', 'vdi_allow_caching': 'false', 'sr_ref': 'OpaqueRef:795adcc6-8c21-4853-9c39-8b1b1b095abc', 'driver_params': {'epochhint': '8248f4d4-2986-3c0f-e364-92bb317a376e'}, 'vdi_uuid': '3a4bdcc6-ce4c-4c00-aab3-2895061229f2'}
Sep 16 01:55:28 fen-xcp-01 SM: [9450] Pause request for 3a4bdcc6-ce4c-4c00-aab3-2895061229f2
Sep 16 01:59:27 fen-xcp-01 SM: [9269] ['vhd-util', 'key', '-p', '-n', '/var/run/sr-mount/cfda3fef-663a-849b-3e47-692607e612e4/3a4bdcc6-ce4c-4c00-aab3-2895061229f2.vhd']
Sep 16 02:00:30 fen-xcp-01 SM: [9852] Refresh request for 3a4bdcc6-ce4c-4c00-aab3-2895061229f2
Sep 16 02:00:30 fen-xcp-01 SM: [9852] ***** BLKTAP2:call_pluginhandler ['XENAPI_PLUGIN_FAILURE', 'refresh', 'TapdiskInvalidState', 'Tapdisk(vhd:/var/run/sr-mount/cfda3fef-663a-849b-3e47-692607e612e4/3a4bdcc6-ce4c-4c00-aab3-2895061229f2.vhd, pid=21183, minor=11, state=P)']: EXCEPTION <class 'XenAPI.Failure'>, ['XENAPI_PLUGIN_FAILURE', 'refresh', 'TapdiskInvalidState', 'Tapdisk(vhd:/var/run/sr-mount/cfda3fef-663a-849b-3e47-692607e612e4/3a4bdcc6-ce4c-4c00-aab3-2895061229f2.vhd, pid=21183, minor=11, state=P)']
Sep 16 02:01:00 fen-xcp-01 SM: [18226] ['vhd-util', 'key', '-p', '-n', '/var/run/sr-mount/cfda3fef-663a-849b-3e47-692607e612e4/3a4bdcc6-ce4c-4c00-aab3-2895061229f2.vhd']
Sep 16 02:06:05 fen-xcp-01 SM: [18920] Refresh request for 3a4bdcc6-ce4c-4c00-aab3-2895061229f2
Sep 16 02:06:05 fen-xcp-01 SM: [18920] ***** BLKTAP2:call_pluginhandler ['XENAPI_PLUGIN_FAILURE', 'refresh', 'TapdiskInvalidState', 'Tapdisk(vhd:/var/run/sr-mount/cfda3fef-663a-849b-3e47-692607e612e4/3a4bdcc6-ce4c-4c00-aab3-2895061229f2.vhd, pid=21183, minor=11, state=P)']: EXCEPTION <class 'XenAPI.Failure'>, ['XENAPI_PLUGIN_FAILURE', 'refresh', 'TapdiskInvalidState', 'Tapdisk(vhd:/var/run/sr-mount/cfda3fef-663a-849b-3e47-692607e612e4/3a4bdcc6-ce4c-4c00-aab3-2895061229f2.vhd, pid=21183, minor=11, state=P)']
Sep 16 09:41:07 fen-xcp-01 SM: [2099] ['/usr/sbin/td-util', 'query', 'vhd', '-vpfb', '/var/run/sr-mount/cfda3fef-663a-849b-3e47-692607e612e4/3a4bdcc6-ce4c-4c00-aab3-2895061229f2.vhd']
Sep 16 09:41:07 fen-xcp-01 SM: [2099] vdi_snapshot {'sr_uuid': 'cfda3fef-663a-849b-3e47-692607e612e4', 'subtask_of': 'DummyRef:|e23e33c2-d526-47ea-9e75-584912f68b44|VDI.snapshot', 'vdi_ref': 'OpaqueRef:7abb8fbd-09ab-496e-8154-dcd8f8a190af', 'vdi_on_boot': 'persist', 'args': [], 'o_direct': False, 'vdi_location': '3a4bdcc6-ce4c-4c00-aab3-2895061229f2', 'host_ref': 'OpaqueRef:a34e39e4-b42d-48d3-87a9-6eec76720259', 'session_ref': 'OpaqueRef:a20f95b7-55a1-4e6c-b0bd-8bf7eb0e2b33', 'device_config': {'server': '10.20.86.80', 'options': '', 'SRmaster': 'true', 'serverpath': '/mnt/srv/xcp_lowIO', 'nfsversion': '4.1'}, 'command': 'vdi_snapshot', 'vdi_allow_caching': 'false', 'sr_ref': 'OpaqueRef:795adcc6-8c21-4853-9c39-8b1b1b095abc', 'driver_params': {'epochhint': 'efd0cb8b-cb2f-9afc-f17e-f99e56cc8cbe'}, 'vdi_uuid': '3a4bdcc6-ce4c-4c00-aab3-2895061229f2'}
Sep 16 09:41:07 fen-xcp-01 SM: [2099] Pause request for 3a4bdcc6-ce4c-4c00-aab3-2895061229f2
Sep 16 09:44:54 fen-xcp-01 SM: [16507] ['vhd-util', 'key', '-p', '-n', '/var/run/sr-mount/cfda3fef-663a-849b-3e47-692607e612e4/3a4bdcc6-ce4c-4c00-aab3-2895061229f2.vhd']
Sep 16 09:45:07 fen-xcp-01 SM: [18149] ['vhd-util', 'key', '-p', '-n', '/var/run/sr-mount/cfda3fef-663a-849b-3e47-692607e612e4/3a4bdcc6-ce4c-4c00-aab3-2895061229f2.vhd']
Sep 16 09:46:10 fen-xcp-01 SM: [2208] Refresh request for 3a4bdcc6-ce4c-4c00-aab3-2895061229f2
Sep 16 09:46:10 fen-xcp-01 SM: [2208] ***** BLKTAP2:call_pluginhandler ['XENAPI_PLUGIN_FAILURE', 'refresh', 'TapdiskInvalidState', 'Tapdisk(vhd:/var/run/sr-mount/cfda3fef-663a-849b-3e47-692607e612e4/3a4bdcc6-ce4c-4c00-aab3-2895061229f2.vhd, pid=21183, minor=11, state=P)']: EXCEPTION <class 'XenAPI.Failure'>, ['XENAPI_PLUGIN_FAILURE', 'refresh', 'TapdiskInvalidState', 'Tapdisk(vhd:/var/run/sr-mount/cfda3fef-663a-849b-3e47-692607e612e4/3a4bdcc6-ce4c-4c00-aab3-2895061229f2.vhd, pid=21183, minor=11, state=P)']
Sep 16 09:58:42 fen-xcp-01 SM: [13090] ['vhd-util', 'key', '-p', '-n', '/var/run/sr-mount/cfda3fef-663a-849b-3e47-692607e612e4/3a4bdcc6-ce4c-4c00-aab3-2895061229f2.vhd']

When looking deeper into this at the XCP-ng log level, we are seeing a stuck tapdisk. This is what's causing the snapshot error and preventing the VDI from coalescing. I CANNOT shut down critical VMs for these tapdisk issues... The backup process should not be breaking VMs.
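The SMlog excerpt above shows every refresh after the "Pause request" failing against the same tapdisk (pid 21183, minor 11, state=P). The sketch below condenses such entries from SMlog lines (for example, lines read from `/var/log/SMlog`) into one count per tapdisk. It is a minimal sketch under assumptions: `stuck_tapdisks` is a hypothetical helper, the regex only covers the `Tapdisk(vhd:..., pid=..., minor=..., state=...)` format seen in this thread, and reading `state=P` as "paused" is an inference from the preceding Pause requests, not something the log states.

```python
import re
from collections import Counter

# Matches the tapdisk descriptor printed by SM in this thread, e.g.
# Tapdisk(vhd:/var/run/sr-mount/<sr>/<vdi>.vhd, pid=21183, minor=11, state=P)
TAPDISK_RE = re.compile(
    r"Tapdisk\(vhd:(?P<path>[^,]+), pid=(?P<pid>\d+), "
    r"minor=(?P<minor>\d+), state=(?P<state>\w+)\)"
)


def stuck_tapdisks(smlog_lines):
    """Count TapdiskInvalidState failures per (vhd path, pid, minor, state).

    Each failing line prints the descriptor twice (plugin call and exception),
    so only the first match on a line is counted.
    """
    hits = Counter()
    for line in smlog_lines:
        if "TapdiskInvalidState" not in line:
            continue
        m = TAPDISK_RE.search(line)
        if m:
            hits[(m["path"], int(m["pid"]), int(m["minor"]), m["state"])] += 1
    return hits


# Rebuild two of the failing lines (and one unrelated line) from the excerpt above.
path = ("/var/run/sr-mount/cfda3fef-663a-849b-3e47-692607e612e4/"
        "3a4bdcc6-ce4c-4c00-aab3-2895061229f2.vhd")
desc = f"Tapdisk(vhd:{path}, pid=21183, minor=11, state=P)"
failure = f"['XENAPI_PLUGIN_FAILURE', 'refresh', 'TapdiskInvalidState', '{desc}']"
sample = [
    f"Sep 16 02:00:30 fen-xcp-01 SM: [9852] ***** BLKTAP2:call_pluginhandler {failure}: "
    f"EXCEPTION <class 'XenAPI.Failure'>, {failure}",
    "Sep 16 02:06:05 fen-xcp-01 SM: [18920] Refresh request for 3a4bdcc6-ce4c-4c00-aab3-2895061229f2",
    f"Sep 16 02:06:05 fen-xcp-01 SM: [18920] ***** BLKTAP2:call_pluginhandler {failure}: "
    f"EXCEPTION <class 'XenAPI.Failure'>, {failure}",
]

for (vhd, pid, minor, state), n in stuck_tapdisks(sample).items():
    print(f"{n}x pid={pid} minor={minor} state={state} {vhd}")
```

A single key with a growing count across several backup runs is consistent with one tapdisk that never left its paused state, rather than a new failure each night.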

This is a pretty critical problem when backups are taking out production VMs.

I have several VMs that won't boot, and some I am scared to reboot.

Any advice?

-

I have what is hopefully a final update on this issue (or issues).

We upgraded to XOA version .99 a few weeks ago, and the problem has now gone away. We suspect that some timeout-related changes in XOA resolved this and a few other related problems.

-

Hi,

I would check your NFS server; I suspect a disconnection happened, causing your tapdisk to hang.

`dmesg` might provide some useful info. In any case, you can always shut down the VM, reset the VDI state, and boot it again without any issue. Feel free to open a support ticket to get assistance on your supported infrastructure.

-

No errors on the storage; I already checked those logs on the NFS server. All the other 400+ VMs are working fine, and backups ran fine for them last night. Only the ones that failed are in this state.

The VM was shut down, and in this state it will not start back up. It fails with:

SR_BACKEND_FAILURE_46(, The VDI is not available [opterr=VDI 3a4bdcc6-ce4c-4c00-aab3-2895061229f2 not detached cleanly], )

When we reset the VDI state, we get:

"vdi not available now"

-

I'm talking about the `dmesg` of the Dom0.

-

`dmesg` on the master just shows the typical output, for example hours and hours of:

[1682640.408004] block tdcf: sector-size: 512/512 capacity: 41943040 [1683000.466302] block tdcg: sector-size: 512/512 capacity: 629145600 [1683000.525800] block tdcj: sector-size: 512/512 capacity: 209715200 [1683000.565488] block tdck: sector-size: 512/512 capacity: 943718400 [1683000.638879] block tdcn: sector-size: 512/512 capacity: 314572800 [1683000.676200] block tdcs: sector-size: 512/512 capacity: 943718400 [1683001.410788] block tdcu: sector-size: 512/512 capacity: 629145600 [1683001.535529] block tdcv: sector-size: 512/512 capacity: 209715200 [1683001.565065] block tdcw: sector-size: 512/512 capacity: 943718400 [1683001.565755] block tdcy: sector-size: 512/512 capacity: 314572800 [1683001.566053] block tdcx: sector-size: 512/512 capacity: 943718400 [1683032.036059] block tdcj: sector-size: 512/512 capacity: 314572800 [1683032.074151] block tdcv: sector-size: 512/512 capacity: 209715200 [1683032.133846] block tdcz: sector-size: 512/512 capacity: 943718400 [1683032.235247] block tdda: sector-size: 512/512 capacity: 629145600 [1683032.302698] block tddb: sector-size: 512/512 capacity: 943718400 [1683404.730704] block tdbp: sector-size: 512/512 capacity: 31457280 [1683404.853315] block tdbs: sector-size: 512/512 capacity: 251658240 [1683404.908535] block tdbw: sector-size: 512/512 capacity: 4194304 [1683425.348146] block tdcb: sector-size: 512/512 capacity: 251658240 [1683425.402338] block tdcg: sector-size: 512/512 capacity: 4194304 [1683425.420468] block tdcj: sector-size: 512/512 capacity: 31457280 [1683452.196344] block tdck: sector-size: 512/512 capacity: 31457280 [1683452.226289] block tdcn: sector-size: 512/512 capacity: 104857600 [1683452.263279] block tdcp: sector-size: 512/512 capacity: 4194304 [1683471.593541] block tdct: sector-size: 512/512 capacity: 4194304 [1683471.671318] block tdcu: sector-size: 512/512 capacity: 31457280 [1683471.703155] block tdcv: sector-size: 512/512 capacity: 104857600 [1684753.546700] block tdbs: sector-size: 512/512 
capacity: 20971520 [1684754.920151] block tdbw: sector-size: 512/512 capacity: 20971520 [1686424.322960] block tdbp: sector-size: 512/512 capacity: 1048576000 [1686424.360929] block tdby: sector-size: 512/512 capacity: 1677721600 [1686424.435019] block tdca: sector-size: 512/512 capacity: 4194304 [1686424.480912] block tdcb: sector-size: 512/512 capacity: 104857600 [1686425.125871] block tdcd: sector-size: 512/512 capacity: 1048576000 [1686425.143709] block tdcf: sector-size: 512/512 capacity: 1677721600 [1686425.233555] block tdcg: sector-size: 512/512 capacity: 104857600 [1686425.240485] block tdcj: sector-size: 512/512 capacity: 4194304 [1686443.975172] block tdck: sector-size: 512/512 capacity: 104857600 [1686444.009457] block tdcn: sector-size: 512/512 capacity: 4194304 [1686444.092733] block tdcp: sector-size: 512/512 capacity: 1048576000 [1686444.149059] block tdcs: sector-size: 512/512 capacity: 1677721600 [1686503.696741] block tdbs: sector-size: 512/512 capacity: 167772160 [1686505.120749] block tdbw: sector-size: 512/512 capacity: 167772160 [1686752.003839] block tdbm: sector-size: 512/512 capacity: 20971520 [1686752.037748] block tdca: sector-size: 512/512 capacity: 41943040 [1686759.742139] block tdcc: sector-size: 512/512 capacity: 41943040 [1686759.821025] block tdcj: sector-size: 512/512 capacity: 20971520 [1687064.146613] block tdbp: sector-size: 512/512 capacity: 524288000 [1687065.695287] block tdbz: sector-size: 512/512 capacity: 524288000 [1687697.847517] block tdby: sector-size: 512/512 capacity: 4194304 [1687698.102192] block tdcb: sector-size: 512/512 capacity: 31457280 [1687708.983792] block tdcd: sector-size: 512/512 capacity: 4194304 [1687709.029127] block tdcf: sector-size: 512/512 capacity: 31457280 [1688269.978386] block tdbm: sector-size: 512/512 capacity: 18874368 [1688270.008683] block tdby: sector-size: 512/512 capacity: 209715200 [1688285.389014] block tdca: sector-size: 512/512 capacity: 18874368 [1688285.476928] block tdcc: 
sector-size: 512/512 capacity: 209715200

`dmesg` on the host the VM was running on showed the following:

[1758566.844668] device vif10.0 left promiscuous mode [1789860.670491] vif vif-11-0 vif11.0: Guest Rx stalled [1789870.913618] vif vif-11-0 vif11.0: Guest Rx ready [1789928.804604] vif vif-11-0 vif11.0: Guest Rx stalled [1789938.807814] vif vif-11-0 vif11.0: Guest Rx ready [1790000.178379] vif vif-11-0 vif11.0: Guest Rx stalled [1790010.417692] vif vif-11-0 vif11.0: Guest Rx ready [1790019.923374] device vif11.0 left promiscuous mode [1790058.769958] block tdk: sector-size: 512/512 capacity: 41943040 [1790058.825462] block tdm: sector-size: 512/512 capacity: 268435456 [1790058.876045] block tdn: sector-size: 512/512 capacity: 524288000 [1790059.376780] device vif47.0 entered promiscuous mode [1790059.639442] device tap47.0 entered promiscuous mode [1790511.811087] device vif47.0 left promiscuous mode [1790709.493476] INFO: task qemu-system-i38:2475 blocked for more than 120 seconds. [1790709.493486] Tainted: G O 4.19.0+1 #1 [1790709.493489] "echo 0 > /proc/sys/kernel/hung_task_timeout_secs" disables this message. [1790709.493493] qemu-system-i38 D 0 2475 2409 0x80000126 [1790709.493495] Call Trace: [1790709.493507] ? __schedule+0x2a6/0x880 [1790709.493508] schedule+0x32/0x80 [1790709.493518] io_schedule+0x12/0x40 [1790709.493521] __lock_page+0xf2/0x140 [1790709.493528] ? page_cache_tree_insert+0xd0/0xd0 [1790709.493532] truncate_inode_pages_range+0x46d/0x7d0 [1790709.493536] ? __brelse+0x30/0x30 [1790709.493537] ? invalidate_bh_lru+0x27/0x40 [1790709.493538] ? __brelse+0x30/0x30 [1790709.493542] ? on_each_cpu_mask+0x55/0x60 [1790709.493543] ? proc_ns_fget+0x40/0x40 [1790709.493544] ? __brelse+0x30/0x30 [1790709.493544] ? on_each_cpu_cond+0x85/0xc0 [1790709.493548] __blkdev_put+0x73/0x1e0 [1790709.493550] blkdev_close+0x21/0x30 [1790709.493553] __fput+0xe2/0x210 [1790709.493559] task_work_run+0x88/0xa0 [1790709.493563] do_exit+0x2ca/0xb20 [1790709.493567] ? 
kmem_cache_free+0x10f/0x130 [1790709.493568] do_group_exit+0x39/0xb0 [1790709.493572] get_signal+0x1d0/0x630 [1790709.493579] do_signal+0x36/0x620 [1790709.493583] ? __seccomp_filter+0x3b/0x230 [1790709.493589] exit_to_usermode_loop+0x5e/0xb8 [1790709.493590] do_syscall_64+0xcb/0x100 [1790709.493595] entry_SYSCALL_64_after_hwframe+0x44/0xa9 [1790709.493598] RIP: 0033:0x7fdff64cffcf [1790709.493604] Code: Bad RIP value. [1790709.493605] RSP: 002b:00007ffd7cd184c0 EFLAGS: 00000293 ORIG_RAX: 000000000000010f [1790709.493606] RAX: fffffffffffffdfe RBX: 00007fdff3c77e00 RCX: 00007fdff64cffcf [1790709.493607] RDX: 0000000000000000 RSI: 0000000000000001 RDI: 00007fdff115a100 [1790709.493607] RBP: 0000000000000000 R08: 0000000000000008 R09: 0000000000000000 [1790709.493608] R10: 0000000000000000 R11: 0000000000000293 R12: 0000000000000001 [1790709.493608] R13: 0000000000000000 R14: 0000000000000000 R15: 00007fdff3c77ef8 [1790830.314878] INFO: task qemu-system-i38:2475 blocked for more than 120 seconds. [1790830.314887] Tainted: G O 4.19.0+1 #1 [1790830.314890] "echo 0 > /proc/sys/kernel/hung_task_timeout_secs" disables this message. [1790830.314895] qemu-system-i38 D 0 2475 2409 0x80000126 [1790830.314898] Call Trace: [1790830.314909] ? __schedule+0x2a6/0x880 [1790830.314910] schedule+0x32/0x80 [1790830.314919] io_schedule+0x12/0x40 [1790830.314922] __lock_page+0xf2/0x140 [1790830.314930] ? page_cache_tree_insert+0xd0/0xd0 [1790830.314934] truncate_inode_pages_range+0x46d/0x7d0 [1790830.314939] ? __brelse+0x30/0x30 [1790830.314940] ? invalidate_bh_lru+0x27/0x40 [1790830.314940] ? __brelse+0x30/0x30 [1790830.314945] ? on_each_cpu_mask+0x55/0x60 [1790830.314946] ? proc_ns_fget+0x40/0x40 [1790830.314947] ? __brelse+0x30/0x30 [1790830.314948] ? 
on_each_cpu_cond+0x85/0xc0 [1790830.314951] __blkdev_put+0x73/0x1e0 [1790830.314953] blkdev_close+0x21/0x30 [1790830.314956] __fput+0xe2/0x210 [1790830.314962] task_work_run+0x88/0xa0 [1790830.314967] do_exit+0x2ca/0xb20 [1790830.314970] ? kmem_cache_free+0x10f/0x130 [1790830.314971] do_group_exit+0x39/0xb0 [1790830.314976] get_signal+0x1d0/0x630 [1790830.314983] do_signal+0x36/0x620 [1790830.314987] ? __seccomp_filter+0x3b/0x230 [1790830.314992] exit_to_usermode_loop+0x5e/0xb8 [1790830.314994] do_syscall_64+0xcb/0x100 [1790830.314999] entry_SYSCALL_64_after_hwframe+0x44/0xa9 [1790830.315002] RIP: 0033:0x7fdff64cffcf [1790830.315007] Code: Bad RIP value. [1790830.315008] RSP: 002b:00007ffd7cd184c0 EFLAGS: 00000293 ORIG_RAX: 000000000000010f [1790830.315009] RAX: fffffffffffffdfe RBX: 00007fdff3c77e00 RCX: 00007fdff64cffcf [1790830.315010] RDX: 0000000000000000 RSI: 0000000000000001 RDI: 00007fdff115a100 [1790830.315010] RBP: 0000000000000000 R08: 0000000000000008 R09: 0000000000000000 [1790830.315011] R10: 0000000000000000 R11: 0000000000000293 R12: 0000000000000001 [1790830.315012] R13: 0000000000000000 R14: 0000000000000000 R15: 00007fdff3c77ef8 [1790951.136471] INFO: task qemu-system-i38:2475 blocked for more than 120 seconds. [1790951.136482] Tainted: G O 4.19.0+1 #1 [1790951.136485] "echo 0 > /proc/sys/kernel/hung_task_timeout_secs" disables this message. [1790951.136489] qemu-system-i38 D 0 2475 2409 0x80000126 [1790951.136491] Call Trace: [1790951.136504] ? __schedule+0x2a6/0x880 [1790951.136505] schedule+0x32/0x80 [1790951.136515] io_schedule+0x12/0x40 [1790951.136518] __lock_page+0xf2/0x140 [1790951.136525] ? page_cache_tree_insert+0xd0/0xd0 [1790951.136530] truncate_inode_pages_range+0x46d/0x7d0 [1790951.136535] ? __brelse+0x30/0x30 [1790951.136536] ? invalidate_bh_lru+0x27/0x40 [1790951.136536] ? __brelse+0x30/0x30 [1790951.136541] ? on_each_cpu_mask+0x55/0x60 [1790951.136542] ? proc_ns_fget+0x40/0x40 [1790951.136542] ? 
__brelse+0x30/0x30 [1790951.136543] ? on_each_cpu_cond+0x85/0xc0 [1790951.136547] __blkdev_put+0x73/0x1e0 [1790951.136549] blkdev_close+0x21/0x30 [1790951.136553] __fput+0xe2/0x210 [1790951.136559] task_work_run+0x88/0xa0 [1790951.136563] do_exit+0x2ca/0xb20 [1790951.136567] ? kmem_cache_free+0x10f/0x130 [1790951.136568] do_group_exit+0x39/0xb0 [1790951.136572] get_signal+0x1d0/0x630 [1790951.136579] do_signal+0x36/0x620 [1790951.136584] ? __seccomp_filter+0x3b/0x230 [1790951.136589] exit_to_usermode_loop+0x5e/0xb8 [1790951.136591] do_syscall_64+0xcb/0x100 [1790951.136596] entry_SYSCALL_64_after_hwframe+0x44/0xa9 [1790951.136599] RIP: 0033:0x7fdff64cffcf [1790951.136604] Code: Bad RIP value. [1790951.136605] RSP: 002b:00007ffd7cd184c0 EFLAGS: 00000293 ORIG_RAX: 000000000000010f [1790951.136606] RAX: fffffffffffffdfe RBX: 00007fdff3c77e00 RCX: 00007fdff64cffcf [1790951.136607] RDX: 0000000000000000 RSI: 0000000000000001 RDI: 00007fdff115a100 [1790951.136607] RBP: 0000000000000000 R08: 0000000000000008 R09: 0000000000000000 [1790951.136608] R10: 0000000000000000 R11: 0000000000000293 R12: 0000000000000001 [1790951.136608] R13: 0000000000000000 R14: 0000000000000000 R15: 00007fdff3c77ef8 [1791071.958017] INFO: task qemu-system-i38:2475 blocked for more than 120 seconds. [1791071.958025] Tainted: G O 4.19.0+1 #1 [1791071.958028] "echo 0 > /proc/sys/kernel/hung_task_timeout_secs" disables this message. [1791071.958033] qemu-system-i38 D 0 2475 2409 0x80000126 [1791071.958035] Call Trace: [1791071.958045] ? __schedule+0x2a6/0x880 [1791071.958046] schedule+0x32/0x80 [1791071.958056] io_schedule+0x12/0x40 [1791071.958059] __lock_page+0xf2/0x140 [1791071.958067] ? page_cache_tree_insert+0xd0/0xd0 [1791071.958073] truncate_inode_pages_range+0x46d/0x7d0 [1791071.958077] ? __brelse+0x30/0x30 [1791071.958078] ? invalidate_bh_lru+0x27/0x40 [1791071.958078] ? __brelse+0x30/0x30 [1791071.958083] ? on_each_cpu_mask+0x55/0x60 [1791071.958084] ? 
proc_ns_fget+0x40/0x40 [1791071.958085] ? __brelse+0x30/0x30 [1791071.958086] ? on_each_cpu_cond+0x85/0xc0 [1791071.958089] __blkdev_put+0x73/0x1e0 [1791071.958091] blkdev_close+0x21/0x30 [1791071.958095] __fput+0xe2/0x210 [1791071.958101] task_work_run+0x88/0xa0 [1791071.958106] do_exit+0x2ca/0xb20 [1791071.958109] ? kmem_cache_free+0x10f/0x130 [1791071.958110] do_group_exit+0x39/0xb0 [1791071.958115] get_signal+0x1d0/0x630 [1791071.958123] do_signal+0x36/0x620 [1791071.958128] ? __seccomp_filter+0x3b/0x230 [1791071.958133] exit_to_usermode_loop+0x5e/0xb8 [1791071.958135] do_syscall_64+0xcb/0x100 [1791071.958141] entry_SYSCALL_64_after_hwframe+0x44/0xa9 [1791071.958143] RIP: 0033:0x7fdff64cffcf [1791071.958149] Code: Bad RIP value. [1791071.958150] RSP: 002b:00007ffd7cd184c0 EFLAGS: 00000293 ORIG_RAX: 000000000000010f [1791071.958151] RAX: fffffffffffffdfe RBX: 00007fdff3c77e00 RCX: 00007fdff64cffcf [1791071.958152] RDX: 0000000000000000 RSI: 0000000000000001 RDI: 00007fdff115a100 [1791071.958152] RBP: 0000000000000000 R08: 0000000000000008 R09: 0000000000000000 [1791071.958153] R10: 0000000000000000 R11: 0000000000000293 R12: 0000000000000001 [1791071.958153] R13: 0000000000000000 R14: 0000000000000000 R15: 00007fdff3c77ef8 [1791192.779645] INFO: task qemu-system-i38:2475 blocked for more than 120 seconds. [1791192.779656] Tainted: G O 4.19.0+1 #1 [1791192.779659] "echo 0 > /proc/sys/kernel/hung_task_timeout_secs" disables this message. [1791192.779663] qemu-system-i38 D 0 2475 2409 0x80000126 [1791192.779666] Call Trace: [1791192.779678] ? __schedule+0x2a6/0x880 [1791192.779680] schedule+0x32/0x80 [1791192.779689] io_schedule+0x12/0x40 [1791192.779692] __lock_page+0xf2/0x140 [1791192.779699] ? page_cache_tree_insert+0xd0/0xd0 [1791192.779704] truncate_inode_pages_range+0x46d/0x7d0 [1791192.779708] ? __brelse+0x30/0x30 [1791192.779709] ? invalidate_bh_lru+0x27/0x40 [1791192.779710] ? __brelse+0x30/0x30 [1791192.779715] ? 
on_each_cpu_mask+0x55/0x60 [1791192.779715] ? proc_ns_fget+0x40/0x40 [1791192.779716] ? __brelse+0x30/0x30 [1791192.779717] ? on_each_cpu_cond+0x85/0xc0 [1791192.779720] __blkdev_put+0x73/0x1e0 [1791192.779722] blkdev_close+0x21/0x30 [1791192.779726] __fput+0xe2/0x210 [1791192.779732] task_work_run+0x88/0xa0 [1791192.779736] do_exit+0x2ca/0xb20 [1791192.779740] ? kmem_cache_free+0x10f/0x130 [1791192.779741] do_group_exit+0x39/0xb0 [1791192.779745] get_signal+0x1d0/0x630 [1791192.779752] do_signal+0x36/0x620 [1791192.779756] ? __seccomp_filter+0x3b/0x230 [1791192.779762] exit_to_usermode_loop+0x5e/0xb8 [1791192.779764] do_syscall_64+0xcb/0x100 [1791192.779768] entry_SYSCALL_64_after_hwframe+0x44/0xa9 [1791192.779771] RIP: 0033:0x7fdff64cffcf [1791192.779777] Code: Bad RIP value. [1791192.779778] RSP: 002b:00007ffd7cd184c0 EFLAGS: 00000293 ORIG_RAX: 000000000000010f [1791192.779780] RAX: fffffffffffffdfe RBX: 00007fdff3c77e00 RCX: 00007fdff64cffcf [1791192.779780] RDX: 0000000000000000 RSI: 0000000000000001 RDI: 00007fdff115a100 [1791192.779781] RBP: 0000000000000000 R08: 0000000000000008 R09: 0000000000000000 [1791192.779781] R10: 0000000000000000 R11: 0000000000000293 R12: 0000000000000001 [1791192.779782] R13: 0000000000000000 R14: 0000000000000000 R15: 00007fdff3c77ef8 [1791313.601332] INFO: task qemu-system-i38:2475 blocked for more than 120 seconds. [1791313.601343] Tainted: G O 4.19.0+1 #1 [1791313.601346] "echo 0 > /proc/sys/kernel/hung_task_timeout_secs" disables this message. [1791313.601350] qemu-system-i38 D 0 2475 2409 0x80000126 [1791313.601352] Call Trace: [1791313.601365] ? __schedule+0x2a6/0x880 [1791313.601366] schedule+0x32/0x80 [1791313.601375] io_schedule+0x12/0x40 [1791313.601379] __lock_page+0xf2/0x140 [1791313.601386] ? page_cache_tree_insert+0xd0/0xd0 [1791313.601390] truncate_inode_pages_range+0x46d/0x7d0 [1791313.601395] ? __brelse+0x30/0x30 [1791313.601396] ? invalidate_bh_lru+0x27/0x40 [1791313.601396] ? 
__brelse+0x30/0x30 [1791313.601401] ? on_each_cpu_mask+0x55/0x60 [1791313.601402] ? proc_ns_fget+0x40/0x40 [1791313.601403] ? __brelse+0x30/0x30 [1791313.601403] ? on_each_cpu_cond+0x85/0xc0 [1791313.601407] __blkdev_put+0x73/0x1e0 [1791313.601409] blkdev_close+0x21/0x30 [1791313.601412] __fput+0xe2/0x210 [1791313.601418] task_work_run+0x88/0xa0 [1791313.601423] do_exit+0x2ca/0xb20 [1791313.601426] ? kmem_cache_free+0x10f/0x130 [1791313.601427] do_group_exit+0x39/0xb0 [1791313.601432] get_signal+0x1d0/0x630 [1791313.601439] do_signal+0x36/0x620 [1791313.601443] ? __seccomp_filter+0x3b/0x230 [1791313.601448] exit_to_usermode_loop+0x5e/0xb8 [1791313.601450] do_syscall_64+0xcb/0x100 [1791313.601455] entry_SYSCALL_64_after_hwframe+0x44/0xa9 [1791313.601457] RIP: 0033:0x7fdff64cffcf [1791313.601464] Code: Bad RIP value. [1791313.601464] RSP: 002b:00007ffd7cd184c0 EFLAGS: 00000293 ORIG_RAX: 000000000000010f [1791313.601466] RAX: fffffffffffffdfe RBX: 00007fdff3c77e00 RCX: 00007fdff64cffcf [1791313.601466] RDX: 0000000000000000 RSI: 0000000000000001 RDI: 00007fdff115a100 [1791313.601467] RBP: 0000000000000000 R08: 0000000000000008 R09: 0000000000000000 [1791313.601467] R10: 0000000000000000 R11: 0000000000000293 R12: 0000000000000001 [1791313.601468] R13: 0000000000000000 R14: 0000000000000000 R15: 00007fdff3c77ef8 [1791434.422795] INFO: task qemu-system-i38:2475 blocked for more than 120 seconds. [1791434.422805] Tainted: G O 4.19.0+1 #1 [1791434.422808] "echo 0 > /proc/sys/kernel/hung_task_timeout_secs" disables this message. [1791434.422813] qemu-system-i38 D 0 2475 2409 0x80000126 [1791434.422815] Call Trace: [1791434.422827] ? __schedule+0x2a6/0x880 [1791434.422828] schedule+0x32/0x80 [1791434.422837] io_schedule+0x12/0x40 [1791434.422840] __lock_page+0xf2/0x140 [1791434.422848] ? page_cache_tree_insert+0xd0/0xd0 [1791434.422852] truncate_inode_pages_range+0x46d/0x7d0 [1791434.422857] ? __brelse+0x30/0x30 [1791434.422858] ? 
invalidate_bh_lru+0x27/0x40 [1791434.422858] ? __brelse+0x30/0x30 [1791434.422863] ? on_each_cpu_mask+0x55/0x60 [1791434.422863] ? proc_ns_fget+0x40/0x40 [1791434.422864] ? __brelse+0x30/0x30 [1791434.422865] ? on_each_cpu_cond+0x85/0xc0 [1791434.422869] __blkdev_put+0x73/0x1e0 [1791434.422871] blkdev_close+0x21/0x30 [1791434.422874] __fput+0xe2/0x210 [1791434.422881] task_work_run+0x88/0xa0 [1791434.422885] do_exit+0x2ca/0xb20 [1791434.422889] ? kmem_cache_free+0x10f/0x130 [1791434.422890] do_group_exit+0x39/0xb0 [1791434.422894] get_signal+0x1d0/0x630 [1791434.422901] do_signal+0x36/0x620 [1791434.422905] ? __seccomp_filter+0x3b/0x230 [1791434.422910] exit_to_usermode_loop+0x5e/0xb8 [1791434.422912] do_syscall_64+0xcb/0x100 [1791434.422917] entry_SYSCALL_64_after_hwframe+0x44/0xa9 [1791434.422919] RIP: 0033:0x7fdff64cffcf [1791434.422926] Code: Bad RIP value. [1791434.422927] RSP: 002b:00007ffd7cd184c0 EFLAGS: 00000293 ORIG_RAX: 000000000000010f [1791434.422928] RAX: fffffffffffffdfe RBX: 00007fdff3c77e00 RCX: 00007fdff64cffcf [1791434.422929] RDX: 0000000000000000 RSI: 0000000000000001 RDI: 00007fdff115a100 [1791434.422929] RBP: 0000000000000000 R08: 0000000000000008 R09: 0000000000000000 [1791434.422930] R10: 0000000000000000 R11: 0000000000000293 R12: 0000000000000001 [1791434.422930] R13: 0000000000000000 R14: 0000000000000000 R15: 00007fdff3c77ef8 [1791555.244401] INFO: task qemu-system-i38:2475 blocked for more than 120 seconds. [1791555.244410] Tainted: G O 4.19.0+1 #1 [1791555.244413] "echo 0 > /proc/sys/kernel/hung_task_timeout_secs" disables this message. [1791555.244417] qemu-system-i38 D 0 2475 2409 0x80000126 [1791555.244419] Call Trace: [1791555.244431] ? __schedule+0x2a6/0x880 [1791555.244432] schedule+0x32/0x80 [1791555.244442] io_schedule+0x12/0x40 [1791555.244446] __lock_page+0xf2/0x140 [1791555.244454] ? page_cache_tree_insert+0xd0/0xd0 [1791555.244459] truncate_inode_pages_range+0x46d/0x7d0 [1791555.244464] ? 
__brelse+0x30/0x30 [1791555.244465] ? invalidate_bh_lru+0x27/0x40 [1791555.244465] ? __brelse+0x30/0x30 [1791555.244471] ? on_each_cpu_mask+0x55/0x60 [1791555.244471] ? proc_ns_fget+0x40/0x40 [1791555.244472] ? __brelse+0x30/0x30 [1791555.244473] ? on_each_cpu_cond+0x85/0xc0 [1791555.244477] __blkdev_put+0x73/0x1e0 [1791555.244479] blkdev_close+0x21/0x30 [1791555.244483] __fput+0xe2/0x210 [1791555.244489] task_work_run+0x88/0xa0 [1791555.244494] do_exit+0x2ca/0xb20 [1791555.244509] ? kmem_cache_free+0x10f/0x130 [1791555.244510] do_group_exit+0x39/0xb0 [1791555.244515] get_signal+0x1d0/0x630 [1791555.244523] do_signal+0x36/0x620 [1791555.244528] ? __seccomp_filter+0x3b/0x230 [1791555.244533] exit_to_usermode_loop+0x5e/0xb8 [1791555.244535] do_syscall_64+0xcb/0x100 [1791555.244541] entry_SYSCALL_64_after_hwframe+0x44/0xa9 [1791555.244543] RIP: 0033:0x7fdff64cffcf [1791555.244548] Code: Bad RIP value. [1791555.244549] RSP: 002b:00007ffd7cd184c0 EFLAGS: 00000293 ORIG_RAX: 000000000000010f [1791555.244550] RAX: fffffffffffffdfe RBX: 00007fdff3c77e00 RCX: 00007fdff64cffcf [1791555.244551] RDX: 0000000000000000 RSI: 0000000000000001 RDI: 00007fdff115a100 [1791555.244551] RBP: 0000000000000000 R08: 0000000000000008 R09: 0000000000000000 [1791555.244552] R10: 0000000000000000 R11: 0000000000000293 R12: 0000000000000001 [1791555.244552] R13: 0000000000000000 R14: 0000000000000000 R15: 00007fdff3c77ef8 [1791676.065872] INFO: task qemu-system-i38:2475 blocked for more than 120 seconds. [1791676.065881] Tainted: G O 4.19.0+1 #1 [1791676.065885] "echo 0 > /proc/sys/kernel/hung_task_timeout_secs" disables this message. [1791676.065889] qemu-system-i38 D 0 2475 2409 0x80000126 [1791676.065891] Call Trace: [1791676.065899] ? __schedule+0x2a6/0x880 [1791676.065901] schedule+0x32/0x80 [1791676.065906] io_schedule+0x12/0x40 [1791676.065908] __lock_page+0xf2/0x140 [1791676.065913] ? 
page_cache_tree_insert+0xd0/0xd0
[1791676.065916] truncate_inode_pages_range+0x46d/0x7d0
[1791676.065920] ? __brelse+0x30/0x30
[1791676.065921] ? invalidate_bh_lru+0x27/0x40
[1791676.065921] ? __brelse+0x30/0x30
[1791676.065924] ? on_each_cpu_mask+0x55/0x60
[1791676.065925] ? proc_ns_fget+0x40/0x40
[1791676.065926] ? __brelse+0x30/0x30
[1791676.065927] ? on_each_cpu_cond+0x85/0xc0
[1791676.065929] __blkdev_put+0x73/0x1e0
[1791676.065931] blkdev_close+0x21/0x30
[1791676.065933] __fput+0xe2/0x210
[1791676.065937] task_work_run+0x88/0xa0
[1791676.065939] do_exit+0x2ca/0xb20
[1791676.065942] ? kmem_cache_free+0x10f/0x130
[1791676.065943] do_group_exit+0x39/0xb0
[1791676.065946] get_signal+0x1d0/0x630
[1791676.065952] do_signal+0x36/0x620
[1791676.065955] ? __seccomp_filter+0x3b/0x230
[1791676.065960] exit_to_usermode_loop+0x5e/0xb8
[1791676.065962] do_syscall_64+0xcb/0x100
[1791676.065965] entry_SYSCALL_64_after_hwframe+0x44/0xa9
[1791676.065967] RIP: 0033:0x7fdff64cffcf
[1791676.065971] Code: Bad RIP value.
[1791676.065972] RSP: 002b:00007ffd7cd184c0 EFLAGS: 00000293 ORIG_RAX: 000000000000010f
[1791676.065973] RAX: fffffffffffffdfe RBX: 00007fdff3c77e00 RCX: 00007fdff64cffcf
[1791676.065974] RDX: 0000000000000000 RSI: 0000000000000001 RDI: 00007fdff115a100
[1791676.065974] RBP: 0000000000000000 R08: 0000000000000008 R09: 0000000000000000
[1791676.065975] R10: 0000000000000000 R11: 0000000000000293 R12: 0000000000000001
[1791676.065975] R13: 0000000000000000 R14: 0000000000000000 R15: 00007fdff3c77ef8
[1791796.887578] INFO: task qemu-system-i38:2475 blocked for more than 120 seconds.
[1791796.887588] Tainted: G O 4.19.0+1 #1
[1791796.887591] "echo 0 > /proc/sys/kernel/hung_task_timeout_secs" disables this message.
[1791796.887635] qemu-system-i38 D 0 2475 2409 0x80000126
[1791796.887638] Call Trace:
[1791796.887667] ? __schedule+0x2a6/0x880
[1791796.887669] schedule+0x32/0x80
[1791796.887678] io_schedule+0x12/0x40
[1791796.887695] __lock_page+0xf2/0x140
[1791796.887702] ? page_cache_tree_insert+0xd0/0xd0
[1791796.887706] truncate_inode_pages_range+0x46d/0x7d0
[1791796.887711] ? __brelse+0x30/0x30
[1791796.887712] ? invalidate_bh_lru+0x27/0x40
[1791796.887713] ? __brelse+0x30/0x30
[1791796.887717] ? on_each_cpu_mask+0x55/0x60
[1791796.887718] ? proc_ns_fget+0x40/0x40
[1791796.887718] ? __brelse+0x30/0x30
[1791796.887719] ? on_each_cpu_cond+0x85/0xc0
[1791796.887723] __blkdev_put+0x73/0x1e0
[1791796.887724] blkdev_close+0x21/0x30
[1791796.887728] __fput+0xe2/0x210
[1791796.887734] task_work_run+0x88/0xa0
[1791796.887738] do_exit+0x2ca/0xb20
[1791796.887742] ? kmem_cache_free+0x10f/0x130
[1791796.887743] do_group_exit+0x39/0xb0
[1791796.887747] get_signal+0x1d0/0x630
[1791796.887755] do_signal+0x36/0x620
[1791796.887759] ? __seccomp_filter+0x3b/0x230
[1791796.887764] exit_to_usermode_loop+0x5e/0xb8
[1791796.887766] do_syscall_64+0xcb/0x100
[1791796.887771] entry_SYSCALL_64_after_hwframe+0x44/0xa9
[1791796.887774] RIP: 0033:0x7fdff64cffcf
[1791796.887781] Code: Bad RIP value.
[1791796.887781] RSP: 002b:00007ffd7cd184c0 EFLAGS: 00000293 ORIG_RAX: 000000000000010f
[1791796.887783] RAX: fffffffffffffdfe RBX: 00007fdff3c77e00 RCX: 00007fdff64cffcf
[1791796.887783] RDX: 0000000000000000 RSI: 0000000000000001 RDI: 00007fdff115a100
[1791796.887784] RBP: 0000000000000000 R08: 0000000000000008 R09: 0000000000000000
[1791796.887784] R10: 0000000000000000 R11: 0000000000000293 R12: 0000000000000001
[1791796.887785] R13: 0000000000000000 R14: 0000000000000000 R15: 00007fdff3c77ef8
[1792495.201512] print_req_error: I/O error, dev tdl, sector 0
[1792495.201542] print_req_error: I/O error, dev tdl, sector 88
[1792495.201568] print_req_error: I/O error, dev tdl, sector 176
[1792495.203559] device tap47.0 left promiscuous mode

But nothing there indicates a problem with the VDI, or the cause of the missing VDI after the VDI reset.
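To pull the signal out of a dmesg capture like the one above (saved one entry per line), here is a minimal Python sketch that collects hung-task warnings and counts I/O errors per block device. The regular expressions are assumptions based only on the message formats visible in this excerpt, not on a kernel specification, so other kernels may word these lines differently.

```python
import re
from collections import Counter

# Patterns modelled on the lines in this thread's dmesg excerpt (an assumption,
# not a stable kernel interface).
HUNG_RE = re.compile(
    r"INFO: task (?P<task>\S+):(?P<pid>\d+) blocked for more than (?P<secs>\d+) seconds"
)
IOERR_RE = re.compile(r"I/O error, dev (?P<dev>\S+), sector (?P<sector>\d+)")


def summarize_dmesg(lines):
    """Return (list of (task, pid, seconds) hung tasks, Counter of I/O errors per device)."""
    hung = []
    io_errors = Counter()
    for line in lines:
        m = HUNG_RE.search(line)
        if m:
            hung.append((m["task"], int(m["pid"]), int(m["secs"])))
            continue
        m = IOERR_RE.search(line)
        if m:
            io_errors[m["dev"]] += 1
    return hung, io_errors


if __name__ == "__main__":
    sample = [
        "[1791796.887578] INFO: task qemu-system-i38:2475 blocked for more than 120 seconds.",
        "[1792495.201512] print_req_error: I/O error, dev tdl, sector 0",
        "[1792495.201542] print_req_error: I/O error, dev tdl, sector 88",
        "[1792495.201568] print_req_error: I/O error, dev tdl, sector 176",
    ]
    hung, io_errors = summarize_dmesg(sample)
    print(hung)       # [('qemu-system-i38', 2475, 120)]
    print(io_errors)  # Counter({'tdl': 3})
```

Keeping hung tasks and I/O errors separate makes it easier to see whether the blocked `qemu-system-i38` process lines up in time with errors on the `tdl` tapdisk device.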

This is still happening on 7 VMs. Only 2 of them are on the same XCP-ng host; the rest are spread over the other servers.

Additionally, when we start the VM, SMlog shows the following:

Sep 16 11:33:57 fen-xcp-01 SM: [24503] Adding tag to: 3a4bdcc6-ce4c-4c00-aab3-2895061229f2
Sep 16 11:33:57 fen-xcp-01 SM: [24503] lock: released /var/lock/sm/3a4bdcc6-ce4c-4c00-aab3-2895061229f2/vdi
Sep 16 11:33:58 fen-xcp-01 SM: [24503] lock: acquired /var/lock/sm/3a4bdcc6-ce4c-4c00-aab3-2895061229f2/vdi
Sep 16 11:33:58 fen-xcp-01 SM: [24503] Adding tag to: 3a4bdcc6-ce4c-4c00-aab3-2895061229f2
Sep 16 11:33:58 fen-xcp-01 SM: [24503] lock: released /var/lock/sm/3a4bdcc6-ce4c-4c00-aab3-2895061229f2/vdi
Sep 16 11:33:59 fen-xcp-01 SM: [24503] lock: acquired /var/lock/sm/3a4bdcc6-ce4c-4c00-aab3-2895061229f2/vdi
Sep 16 11:33:59 fen-xcp-01 SM: [24503] Adding tag to: 3a4bdcc6-ce4c-4c00-aab3-2895061229f2
Sep 16 11:33:59 fen-xcp-01 SM: [24503] lock: released /var/lock/sm/3a4bdcc6-ce4c-4c00-aab3-2895061229f2/vdi
Sep 16 11:34:00 fen-xcp-01 SM: [24503] lock: acquired /var/lock/sm/3a4bdcc6-ce4c-4c00-aab3-2895061229f2/vdi
Sep 16 11:34:00 fen-xcp-01 SM: [24503] Adding tag to: 3a4bdcc6-ce4c-4c00-aab3-2895061229f2
Sep 16 11:34:00 fen-xcp-01 SM: [24503] lock: released /var/lock/sm/3a4bdcc6-ce4c-4c00-aab3-2895061229f2/vdi
Sep 16 11:34:01 fen-xcp-01 SM: [24503] lock: acquired /var/lock/sm/3a4bdcc6-ce4c-4c00-aab3-2895061229f2/vdi
Sep 16 11:34:01 fen-xcp-01 SM: [24503] Adding tag to: 3a4bdcc6-ce4c-4c00-aab3-2895061229f2
Sep 16 11:34:01 fen-xcp-01 SM: [24503] lock: released /var/lock/sm/3a4bdcc6-ce4c-4c00-aab3-2895061229f2/vdi
Sep 16 11:34:02 fen-xcp-01 SM: [24503] lock: acquired /var/lock/sm/3a4bdcc6-ce4c-4c00-aab3-2895061229f2/vdi
Sep 16 11:34:02 fen-xcp-01 SM: [24503] Adding tag to: 3a4bdcc6-ce4c-4c00-aab3-2895061229f2
Sep 16 11:34:02 fen-xcp-01 SM: [24503] lock: released /var/lock/sm/3a4bdcc6-ce4c-4c00-aab3-2895061229f2/vdi
Sep 16 11:34:03 fen-xcp-01 SM: [24503] lock: acquired /var/lock/sm/3a4bdcc6-ce4c-4c00-aab3-2895061229f2/vdi
Sep 16 11:34:03 fen-xcp-01 SM: [24503] Adding tag to: 3a4bdcc6-ce4c-4c00-aab3-2895061229f2
Sep 16 11:34:03 fen-xcp-01 SM: [24503] lock: released /var/lock/sm/3a4bdcc6-ce4c-4c00-aab3-2895061229f2/vdi
Sep 16 11:34:04 fen-xcp-01 SM: [24503] lock: acquired /var/lock/sm/3a4bdcc6-ce4c-4c00-aab3-2895061229f2/vdi
Sep 16 11:34:04 fen-xcp-01 SM: [24503] Adding tag to: 3a4bdcc6-ce4c-4c00-aab3-2895061229f2
Sep 16 11:34:04 fen-xcp-01 SM: [24503] lock: released /var/lock/sm/3a4bdcc6-ce4c-4c00-aab3-2895061229f2/vdi
Sep 16 11:34:05 fen-xcp-01 SM: [24503] lock: acquired /var/lock/sm/3a4bdcc6-ce4c-4c00-aab3-2895061229f2/vdi
Sep 16 11:34:05 fen-xcp-01 SM: [24503] Adding tag to: 3a4bdcc6-ce4c-4c00-aab3-2895061229f2
Sep 16 11:34:05 fen-xcp-01 SM: [24503] lock: released /var/lock/sm/3a4bdcc6-ce4c-4c00-aab3-2895061229f2/vdi
Sep 16 11:34:06 fen-xcp-01 SM: [24503] lock: acquired /var/lock/sm/3a4bdcc6-ce4c-4c00-aab3-2895061229f2/vdi
Sep 16 11:34:06 fen-xcp-01 SM: [24503] Adding tag to: 3a4bdcc6-ce4c-4c00-aab3-2895061229f2
Sep 16 11:34:06 fen-xcp-01 SM: [24503] lock: released /var/lock/sm/3a4bdcc6-ce4c-4c00-aab3-2895061229f2/vdi
Sep 16 11:34:07 fen-xcp-01 SM: [24503] ***** vdi_activate: EXCEPTION <class 'util.SMException'>, VDI 3a4bdcc6-ce4c-4c00-aab3-2895061229f2 locked
Sep 16 11:34:07 fen-xcp-01 SM: [24503] Raising exception [46, The VDI is not available [opterr=VDI 3a4bdcc6-ce4c-4c00-aab3-2895061229f2 locked]]
Sep 16 11:34:07 fen-xcp-01 SM: [24503] ***** NFS VHD: EXCEPTION <class 'SR.SROSError'>, The VDI is not available [opterr=VDI 3a4bdcc6-ce4c-4c00-aab3-2895061229f2 locked]
Sep 16 11:34:08 fen-xcp-01 SM: [1804] ['/usr/sbin/td-util', 'query', 'vhd', '-vpfb', '/var/run/sr-mount/cfda3fef-663a-849b-3e47-692607e612e4/3a4bdcc6-ce4c-4c00-aab3-2895061229f2.vhd']
Sep 16 11:34:08 fen-xcp-01 SM: [1804] vdi_detach {'sr_uuid': 'cfda3fef-663a-849b-3e47-692607e612e4', 'subtask_of': 'DummyRef:|255a4ea2-036b-4b2c-8cfe-ee66144b5286|VDI.detach', 'vdi_ref': 'OpaqueRef:7abb8fbd-09ab-496e-8154-dcd8f8a190af', 'vdi_on_boot': 'persist', 'args': [], 'o_direct': False, 'vdi_location': '3a4bdcc6-ce4c-4c00-aab3-2895061229f2', 'host_ref': 'OpaqueRef:a34e39e4-b42d-48d3-87a9-6eec76720259', 'session_ref': 'OpaqueRef:433e29ff-9a31-497e-a831-19ef6e7478d8', 'device_config': {'server': '10.20.86.80', 'options': '', 'SRmaster': 'true', 'serverpath': '/mnt/srv/xcp_lowIO', 'nfsversion': '4.1'}, 'command': 'vdi_detach', 'vdi_allow_caching': 'false', 'sr_ref': 'OpaqueRef:795adcc6-8c21-4853-9c39-8b1b1b095abc', 'vdi_uuid': '3a4bdcc6-ce4c-4c00-aab3-2895061229f2'}
Sep 16 11:34:08 fen-xcp-01 SM: [1804] lock: opening lock file /var/lock/sm/3a4bdcc6-ce4c-4c00-aab3-2895061229f2/vdi

NOTE: running `resetvdis.py` on 3a4bdcc6-ce4c-4c00-aab3-2895061229f2 says that there's nothing to do.

-

You do have an issue, because `dmesg` is showing something that shouldn't happen. It seems to be related to I/O errors, which would explain your problems. I'm asking around to see if I can get a better interpretation than that.

-

Still doing more digging on our side too.

The issue seems to be around here:

Sep 16 11:33:40 fen-xcp-01 SM: [24503] Paused or host_ref key found [{'paused': 'true', 'read-caching-enabled-on-b3a38eb3-bdad-47f6-92c0-15c11fa4125e': 'true', 'vhd-parent': '93c10df0-ba2a-4553-8e3e-43eb9752fba1', 'read-caching-enabled-on-c305769c-bb8a-43f4-88ff-be7d9f88ad1bc305769c-bb8a-43f4-88ff-be7d9f88ad1b': 'true', 'vhd-blocks': 'eJxjYBhYAAAAgAAB'}]

b3a38eb3-bdad-47f6-92c0-15c11fa4125e is fen-xcp-01

c305769c-bb8a-43f4-88ff-be7d9f88ad1b is fen-xcp-08

I can't really see what 93c10df0-ba2a-4553-8e3e-43eb9752fba1 is. I would think it's a VDI, but it doesn't show up as one, and no tapdisk process is attached to anything with that in the name.
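The `sm_config` dump in the "Paused or host_ref key found" line still carries `'paused': 'true'`. The MAP_DUPLICATE_KEY error in the original report names the same `paused` key, so a flag that was never cleared is a plausible link between the two. That is an inference from the log lines, not something confirmed in this thread. Here is a minimal Python sketch that parses that line with `ast.literal_eval` (it prints as a Python literal) and summarizes the paused flag, the `vhd-parent`, and which hosts hold `read-caching-enabled-on-*` keys. The host names come from the mapping given above. Only the first 36-character UUID of each key is used, because the fen-xcp-08 key appears with its UUID doubled in the paste.

```python
import ast

# Host UUID -> name, as identified earlier in this thread.
KNOWN_HOSTS = {
    "b3a38eb3-bdad-47f6-92c0-15c11fa4125e": "fen-xcp-01",
    "c305769c-bb8a-43f4-88ff-be7d9f88ad1b": "fen-xcp-08",
}
CACHE_PREFIX = "read-caching-enabled-on-"
UUID_LEN = 36


def inspect_sm_config(log_line):
    """Summarize the sm_config list printed after 'key found' (raises IndexError if absent)."""
    literal = log_line.split("key found ", 1)[1]
    sm_config = ast.literal_eval(literal)[0]  # the log prints a list holding one dict
    hosts = []
    for key in sm_config:
        if key.startswith(CACHE_PREFIX):
            host_uuid = key[len(CACHE_PREFIX):len(CACHE_PREFIX) + UUID_LEN]
            hosts.append(KNOWN_HOSTS.get(host_uuid, host_uuid))
    return {
        "paused": sm_config.get("paused") == "true",
        "vhd_parent": sm_config.get("vhd-parent"),
        "read_caching_hosts": hosts,
    }


if __name__ == "__main__":
    line = (
        "Sep 16 11:33:40 fen-xcp-01 SM: [24503] Paused or host_ref key found "
        "[{'paused': 'true', "
        "'read-caching-enabled-on-b3a38eb3-bdad-47f6-92c0-15c11fa4125e': 'true', "
        "'vhd-parent': '93c10df0-ba2a-4553-8e3e-43eb9752fba1', "
        "'read-caching-enabled-on-c305769c-bb8a-43f4-88ff-be7d9f88ad1b"
        "c305769c-bb8a-43f4-88ff-be7d9f88ad1b': 'true', "
        "'vhd-blocks': 'eJxjYBhYAAAAgAAB'}]"
    )
    print(inspect_sm_config(line))
```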

-

My first feeling is an I/O issue causing the process to hang, because the `tdl` device isn't responding anymore. The reasons could be numerous (network packet loss or whatever, a process issue on the NFS server), but as a result, the underlying process attached to this VDI was dead.

To answer your question, 93c10 is the parent VHD (in a snapshot chain). You need the parent to be reachable to coalesce.
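To make "the parent must be reachable" concrete, here is a small Python sketch that walks a VHD chain from a leaf toward its root through parent links and stops at the first link that isn't present. The inputs are hypothetical: `parent_of` (VHD id to parent id) and `present` (the set of VHDs actually found on the SR) are illustration-only structures, not SM or XAPI data types. On a real host you would have to fill them from the VHD headers or `sm_config`, which this sketch does not do.

```python
def chain_to_root(leaf, parent_of, present):
    """Walk leaf -> root via parent links.

    Returns (reachable_chain, first_missing_id_or_None). Raises ValueError on a loop.
    `parent_of` and `present` are hypothetical inputs for illustration only.
    """
    chain = []
    seen = set()
    node = leaf
    while node is not None:
        if node in seen:
            raise ValueError(f"cycle in VHD chain at {node}")
        seen.add(node)
        if node not in present:
            return chain, node  # this link is missing, so coalescing cannot proceed
        chain.append(node)
        node = parent_of.get(node)  # None once we reach the base of the chain
    return chain, None


if __name__ == "__main__":
    # Hypothetical example using the short ids from this thread.
    parent_of = {"3a4bdcc6": "93c10df0"}
    print(chain_to_root("3a4bdcc6", parent_of, present={"3a4bdcc6"}))
    # (['3a4bdcc6'], '93c10df0')
```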

-

... That helps, in that I know 93c10 should be findable; but if it's not, it's not immediately apparent what to do.

I suppose I could look for any tapdisk whose attachment we can't account for.

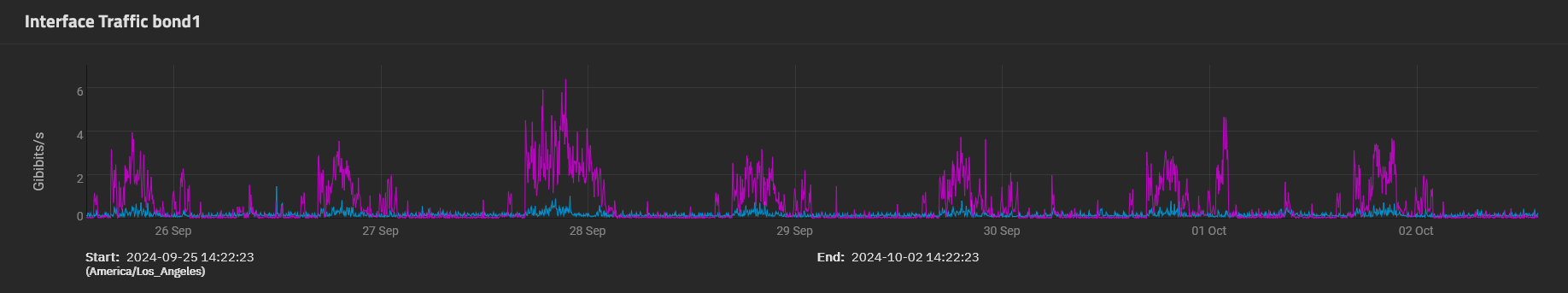

We just double-checked our storage network. Our monitoring software (SNMPc and Zabbix) shows no connectivity issues over the past 6 months: no traps, no issues, steady bandwidth usage.

A constant ping shows no drops. The entire storage network is layer 2: the NFS servers are connected via redundant 40 Gb/s links to redundant 40 Gb/s switches, with no routers involved.

387 VMs backed up correctly; 7 failed with this error, which we can't resolve.

We are not seeing any other I/O issues on the VMs.

I can create new snapshots in the pool on other VMs with no issues, and backups for all other VMs completed with no errors last night, except for the 7 we are having problems with.

My storage guy has finished combing through the logs on all 3 NFS servers and found no signs of problems with the drives, the network, or ZFS.

Also note: the 7 problem VMs are spread across different Xen hosts AND different NFS storage servers.

All 7 VMs are stuck with at least one disk in the VDIs to coalesce list, with the same error/issue on all of them.
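Rather than reading `grep` output by eye, the SMGC "Leaf-coalesce failed" entries can be grouped per VDI to see how often, and when, each one is retried. Here is a minimal Python sketch that assumes the SMGC line layout shown in this thread (short 8-character VDI id followed by the sizes in parentheses).

```python
import re
from collections import defaultdict

# Layout copied from this thread's SMlog lines (an assumption for other versions).
FAIL_RE = re.compile(
    r"^(?P<ts>\w{3} +\d+ [\d:]{8}) \S+ SMGC: \[\d+\] "
    r"Leaf-coalesce failed on (?P<vdi>[0-9a-f]{8})\((?P<size>[^)]*)\), skipping"
)


def coalesce_failures(lines):
    """Map short VDI id -> list of timestamps of 'Leaf-coalesce failed' entries."""
    failures = defaultdict(list)
    for line in lines:
        m = FAIL_RE.match(line.strip())
        if m:
            failures[m["vdi"]].append(m["ts"])
    return dict(failures)


if __name__ == "__main__":
    sample = [
        "Sep 16 01:55:53 fen-xcp-01 SMGC: [3077] Leaf-coalesce failed on 4a2f04ae(60.000G/128.000K), skipping",
        "Sep 16 04:23:05 fen-xcp-01 SMGC: [27850] Leaf-coalesce failed on 4a2f04ae(60.000G/128.000K), skipping",
        "Sep 16 10:43:08 fen-xcp-01 SMGC: [1761] Leaf-coalesce failed on 3a4bdcc6(2.000G/8.500K), skipping",
    ]
    for vdi, times in coalesce_failures(sample).items():
        print(vdi, len(times), times[-1])
    # 4a2f04ae 2 Sep 16 04:23:05
    # 3a4bdcc6 1 Sep 16 10:43:08
```

On a host, the same function could be fed `open("SMlog")` line by line; the file location depends on where you run it.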

cat SMlog | grep "Leaf-coalesce failed"

Sep 16 01:55:53 fen-xcp-01 SMGC: [3077] Leaf-coalesce failed on 4a2f04ae(60.000G/128.000K), skipping

Sep 16 04:23:05 fen-xcp-01 SMGC: [27850] Leaf-coalesce failed on 4a2f04ae(60.000G/128.000K), skipping

Sep 16 10:43:08 fen-xcp-01 SMGC: [1761] Leaf-coalesce failed on 3a4bdcc6(2.000G/8.500K), skipping

Sep 16 11:22:38 fen-xcp-01 SMGC: [26946] Leaf-coalesce failed on 4a2f04ae(60.000G/128.000K), skipping

Sep 16 12:01:00 fen-xcp-01 SMGC: [23306] Leaf-coalesce failed on 4a2f04ae(60.000G/128.000K), skipping

Sep 16 13:09:34 fen-xcp-01 SMGC: [24023] Leaf-coalesce failed on 4a2f04ae(60.000G/128.000K), skipping

[13:15 fen-xcp-01 log]# cat SMlog | grep "4a2f04ae"

Sep 16 01:55:21 fen-xcp-01 SM: [7227] ['/usr/sbin/td-util', 'query', 'vhd', '-vpfb', '/var/run/sr-mount/ba6c1ba6-c6c8-caef-5d30-7951f50bbbc1/4a2f04ae-6a1d-4bbe-bbc0-2f1a0c4db37b.vhd']
Sep 16 01:55:21 fen-xcp-01 SM: [7227] vdi_snapshot {'sr_uuid': 'ba6c1ba6-c6c8-caef-5d30-7951f50bbbc1', 'subtask_of': 'DummyRef:|477538e7-9035-4d41-9318-dfeb69248734|VDI.snapshot', 'vdi_ref': 'OpaqueRef:5527b552-e1ec-449a-912e-4eb2fe440554', 'vdi_on_boot': 'persist', 'args': [], 'o_direct': False, 'vdi_location': '4a2f04ae-6a1d-4bbe-bbc0-2f1a0c4db37b', 'host_ref': 'OpaqueRef:a34e39e4-b42d-48d3-87a9-6eec76720259', 'session_ref': 'OpaqueRef:62704cca-7fb4-4110-a84c-b7fe0f0275f2', 'device_config': {'server': '10.20.86.80', 'options': '', 'SRmaster': 'true', 'serverpath': '/mnt/srv/databases', 'nfsversion': '4.1'}, 'command': 'vdi_snapshot', 'vdi_allow_caching': 'false', 'sr_ref': 'OpaqueRef:1d92d8fc-8c8f-4bdc-9de8-6eccf499d65a', 'driver_params': {'epochhint': '475c2776-2553-daa0-00e9-a95cf0cd0ee4'}, 'vdi_uuid': '4a2f04ae-6a1d-4bbe-bbc0-2f1a0c4db37b'}
Sep 16 01:55:21 fen-xcp-01 SM: [7227] Pause request for 4a2f04ae-6a1d-4bbe-bbc0-2f1a0c4db37b
Sep 16 01:55:52 fen-xcp-01 SMGC: [3077] Got on-boot for 4a2f04ae(60.000G/128.000K): 'persist'
Sep 16 01:55:52 fen-xcp-01 SMGC: [3077] Got allow_caching for 4a2f04ae(60.000G/128.000K): False
Sep 16 01:55:52 fen-xcp-01 SMGC: [3077] Got other-config for 4a2f04ae(60.000G/128.000K): {}
Sep 16 01:55:52 fen-xcp-01 SMGC: [3077] Removed vhd-blocks from 4a2f04ae(60.000G/128.000K)
Sep 16 01:55:52 fen-xcp-01 SM: [3077] ['/usr/bin/vhd-util', 'read', '--debug', '-B', '-n', '/var/run/sr-mount/ba6c1ba6-c6c8-caef-5d30-7951f50bbbc1/4a2f04ae-6a1d-4bbe-bbc0-2f1a0c4db37b.vhd']
Sep 16 01:55:52 fen-xcp-01 SMGC: [3077] Set vhd-blocks = eJztwQENAAAAwqD3T20PBxQAAAD8Gw8AAAE= for 4a2f04ae(60.000G/128.000K)
Sep 16 01:55:53 fen-xcp-01 SMGC: [3077] Leaf-coalesce candidate: 4a2f04ae(60.000G/128.000K)
Sep 16 01:55:53 fen-xcp-01 SMGC: [3077] Got on-boot for 4a2f04ae(60.000G/128.000K): 'persist'
Sep 16 01:55:53 fen-xcp-01 SMGC: [3077] Got allow_caching for 4a2f04ae(60.000G/128.000K): False
Sep 16 01:55:53 fen-xcp-01 SMGC: [3077] Got other-config for 4a2f04ae(60.000G/128.000K): {}
Sep 16 01:55:53 fen-xcp-01 SMGC: [3077] Removed vhd-blocks from 4a2f04ae(60.000G/128.000K)
Sep 16 01:55:53 fen-xcp-01 SM: [3077] ['/usr/bin/vhd-util', 'read', '--debug', '-B', '-n', '/var/run/sr-mount/ba6c1ba6-c6c8-caef-5d30-7951f50bbbc1/4a2f04ae-6a1d-4bbe-bbc0-2f1a0c4db37b.vhd']
Sep 16 01:55:53 fen-xcp-01 SMGC: [3077] Set vhd-blocks = eJztwQENAAAAwqD3T20PBxQAAAD8Gw8AAAE= for 4a2f04ae(60.000G/128.000K)
Sep 16 01:55:53 fen-xcp-01 SMGC: [3077] Leaf-coalesce candidate: 4a2f04ae(60.000G/128.000K)
Sep 16 01:55:53 fen-xcp-01 SMGC: [3077] Leaf-coalescing 4a2f04ae(60.000G/128.000K) -> *1869c2bd(60.000G/1.114G)
Sep 16 01:55:53 fen-xcp-01 SM: [3077] Pause request for 4a2f04ae-6a1d-4bbe-bbc0-2f1a0c4db37b
Sep 16 01:55:53 fen-xcp-01 SMGC: [3077] Removed leaf-coalesce from 4a2f04ae(60.000G/128.000K)
Sep 16 01:55:53 fen-xcp-01 SMGC: [3077] Leaf-coalesce failed on 4a2f04ae(60.000G/128.000K), skipping
Sep 16 04:08:52 fen-xcp-01 SMGC: [27850] 4a2f04ae(60.000G/128.000K)
Sep 16 04:23:04 fen-xcp-01 SMGC: [27850] Got on-boot for 4a2f04ae(60.000G/128.000K): 'persist'
Sep 16 04:23:04 fen-xcp-01 SMGC: [27850] Got allow_caching for 4a2f04ae(60.000G/128.000K): False
Sep 16 04:23:04 fen-xcp-01 SMGC: [27850] Got other-config for 4a2f04ae(60.000G/128.000K): {}
Sep 16 04:23:04 fen-xcp-01 SMGC: [27850] Removed vhd-blocks from 4a2f04ae(60.000G/128.000K)
Sep 16 04:23:04 fen-xcp-01 SM: [27850] ['/usr/bin/vhd-util', 'read', '--debug', '-B', '-n', '/var/run/sr-mount/ba6c1ba6-c6c8-caef-5d30-7951f50bbbc1/4a2f04ae-6a1d-4bbe-bbc0-2f1a0c4db37b.vhd']
Sep 16 04:23:04 fen-xcp-01 SMGC: [27850] Set vhd-blocks = eJztwQENAAAAwqD3T20PBxQAAAD8Gw8AAAE= for 4a2f04ae(60.000G/128.000K)
Sep 16 04:23:04 fen-xcp-01 SMGC: [27850] Leaf-coalesce candidate: 4a2f04ae(60.000G/128.000K)
Sep 16 04:23:05 fen-xcp-01 SMGC: [27850] Got on-boot for 4a2f04ae(60.000G/128.000K): 'persist'
Sep 16 04:23:05 fen-xcp-01 SMGC: [27850] Got allow_caching for 4a2f04ae(60.000G/128.000K): False
Sep 16 04:23:05 fen-xcp-01 SMGC: [27850] Got other-config for 4a2f04ae(60.000G/128.000K): {}
Sep 16 04:23:05 fen-xcp-01 SMGC: [27850] Removed vhd-blocks from 4a2f04ae(60.000G/128.000K)
Sep 16 04:23:05 fen-xcp-01 SM: [27850] ['/usr/bin/vhd-util', 'read', '--debug', '-B', '-n', '/var/run/sr-mount/ba6c1ba6-c6c8-caef-5d30-7951f50bbbc1/4a2f04ae-6a1d-4bbe-bbc0-2f1a0c4db37b.vhd']
Sep 16 04:23:05 fen-xcp-01 SMGC: [27850] Set vhd-blocks = eJztwQENAAAAwqD3T20PBxQAAAD8Gw8AAAE= for 4a2f04ae(60.000G/128.000K)
Sep 16 04:23:05 fen-xcp-01 SMGC: [27850] Leaf-coalesce candidate: 4a2f04ae(60.000G/128.000K)
Sep 16 04:23:05 fen-xcp-01 SMGC: [27850] Leaf-coalescing 4a2f04ae(60.000G/128.000K) -> *1869c2bd(60.000G/1.114G)
Sep 16 04:23:05 fen-xcp-01 SM: [27850] Pause request for 4a2f04ae-6a1d-4bbe-bbc0-2f1a0c4db37b
Sep 16 04:23:05 fen-xcp-01 SMGC: [27850] Removed leaf-coalesce from 4a2f04ae(60.000G/128.000K)
Sep 16 04:23:05 fen-xcp-01 SMGC: [27850] Leaf-coalesce failed on 4a2f04ae(60.000G/128.000K), skipping
Sep 16 09:41:02 fen-xcp-01 SM: [973] ['/usr/sbin/td-util', 'query', 'vhd', '-vpfb', '/var/run/sr-mount/ba6c1ba6-c6c8-caef-5d30-7951f50bbbc1/4a2f04ae-6a1d-4bbe-bbc0-2f1a0c4db37b.vhd']
Sep 16 09:41:02 fen-xcp-01 SM: [973] vdi_snapshot {'sr_uuid': 'ba6c1ba6-c6c8-caef-5d30-7951f50bbbc1', 'subtask_of': 'DummyRef:|6677fd65-d9b2-4f85-a6ad-c2871f013368|VDI.snapshot', 'vdi_ref': 'OpaqueRef:5527b552-e1ec-449a-912e-4eb2fe440554', 'vdi_on_boot': 'persist', 'args': [], 'o_direct': False, 'vdi_location': '4a2f04ae-6a1d-4bbe-bbc0-2f1a0c4db37b', 'host_ref': 'OpaqueRef:a34e39e4-b42d-48d3-87a9-6eec76720259', 'session_ref': 'OpaqueRef:776ddeef-9758-4885-93a4-32e7fa0d2473', 'device_config': {'server': '10.20.86.80', 'options': '', 'SRmaster': 'true', 'serverpath': '/mnt/srv/databases', 'nfsversion': '4.1'}, 'command': 'vdi_snapshot', 'vdi_allow_caching': 'false', 'sr_ref': 'OpaqueRef:1d92d8fc-8c8f-4bdc-9de8-6eccf499d65a', 'driver_params': {'epochhint': 'b31e09c1-c512-f823-1a3e-fa37586fe962'}, 'vdi_uuid': '4a2f04ae-6a1d-4bbe-bbc0-2f1a0c4db37b'}
Sep 16 09:41:02 fen-xcp-01 SM: [973] Pause request for 4a2f04ae-6a1d-4bbe-bbc0-2f1a0c4db37b
Sep 16 11:17:33 fen-xcp-01 SM: [26745] ['vhd-util', 'key', '-p', '-n', '/var/run/sr-mount/ba6c1ba6-c6c8-caef-5d30-7951f50bbbc1/4a2f04ae-6a1d-4bbe-bbc0-2f1a0c4db37b.vhd']
Sep 16 11:17:37 fen-xcp-01 SMGC: [26946] 4a2f04ae(60.000G/128.000K)
Sep 16 11:17:37 fen-xcp-01 SMGC: [26946] Got on-boot for 4a2f04ae(60.000G/128.000K): 'persist'
Sep 16 11:17:37 fen-xcp-01 SMGC: [26946] Got allow_caching for 4a2f04ae(60.000G/128.000K): False
Sep 16 11:17:37 fen-xcp-01 SMGC: [26946] Got other-config for 4a2f04ae(60.000G/128.000K): {}
Sep 16 11:17:37 fen-xcp-01 SMGC: [26946] Removed vhd-blocks from 4a2f04ae(60.000G/128.000K)
Sep 16 11:17:37 fen-xcp-01 SM: [26946] ['/usr/bin/vhd-util', 'read', '--debug', '-B', '-n', '/var/run/sr-mount/ba6c1ba6-c6c8-caef-5d30-7951f50bbbc1/4a2f04ae-6a1d-4bbe-bbc0-2f1a0c4db37b.vhd']
Sep 16 11:17:37 fen-xcp-01 SMGC: [26946] Set vhd-blocks = eJztwQENAAAAwqD3T20PBxQAAAD8Gw8AAAE= for 4a2f04ae(60.000G/128.000K)
Sep 16 11:17:37 fen-xcp-01 SMGC: [26946] Leaf-coalesce candidate: 4a2f04ae(60.000G/128.000K)
Sep 16 11:22:37 fen-xcp-01 SMGC: [26946] Got on-boot for 4a2f04ae(60.000G/128.000K): 'persist'
Sep 16 11:22:37 fen-xcp-01 SMGC: [26946] Got allow_caching for 4a2f04ae(60.000G/128.000K): False
Sep 16 11:22:37 fen-xcp-01 SMGC: [26946] Got other-config for 4a2f04ae(60.000G/128.000K): {}
Sep 16 11:22:37 fen-xcp-01 SMGC: [26946] Removed vhd-blocks from 4a2f04ae(60.000G/128.000K)
Sep 16 11:22:37 fen-xcp-01 SM: [26946] ['/usr/bin/vhd-util', 'read', '--debug', '-B', '-n', '/var/run/sr-mount/ba6c1ba6-c6c8-caef-5d30-7951f50bbbc1/4a2f04ae-6a1d-4bbe-bbc0-2f1a0c4db37b.vhd']
Sep 16 11:22:37 fen-xcp-01 SMGC: [26946] Set vhd-blocks = eJztwQENAAAAwqD3T20PBxQAAAD8Gw8AAAE= for 4a2f04ae(60.000G/128.000K)
Sep 16 11:22:37 fen-xcp-01 SMGC: [26946] Leaf-coalesce candidate: 4a2f04ae(60.000G/128.000K)
Sep 16 11:22:37 fen-xcp-01 SMGC: [26946] Got on-boot for 4a2f04ae(60.000G/128.000K): 'persist'
Sep 16 11:22:37 fen-xcp-01 SMGC: [26946] Got allow_caching for 4a2f04ae(60.000G/128.000K): False
Sep 16 11:22:37 fen-xcp-01 SMGC: [26946] Got other-config for 4a2f04ae(60.000G/128.000K): {}
Sep 16 11:22:37 fen-xcp-01 SMGC: [26946] Removed vhd-blocks from 4a2f04ae(60.000G/128.000K)
Sep 16 11:22:37 fen-xcp-01 SM: [26946] ['/usr/bin/vhd-util', 'read', '--debug', '-B', '-n', '/var/run/sr-mount/ba6c1ba6-c6c8-caef-5d30-7951f50bbbc1/4a2f04ae-6a1d-4bbe-bbc0-2f1a0c4db37b.vhd']
Sep 16 11:22:37 fen-xcp-01 SMGC: [26946] Set vhd-blocks = eJztwQENAAAAwqD3T20PBxQAAAD8Gw8AAAE= for 4a2f04ae(60.000G/128.000K)
Sep 16 11:22:37 fen-xcp-01 SMGC: [26946] Leaf-coalesce candidate: 4a2f04ae(60.000G/128.000K)
Sep 16 11:22:37 fen-xcp-01 SMGC: [26946] Leaf-coalescing 4a2f04ae(60.000G/128.000K) -> *1869c2bd(60.000G/1.114G)
Sep 16 11:22:38 fen-xcp-01 SM: [26946] Pause request for 4a2f04ae-6a1d-4bbe-bbc0-2f1a0c4db37b
Sep 16 11:22:38 fen-xcp-01 SMGC: [26946] Removed leaf-coalesce from 4a2f04ae(60.000G/128.000K)
Sep 16 11:22:38 fen-xcp-01 SMGC: [26946] Leaf-coalesce failed on 4a2f04ae(60.000G/128.000K), skipping
Sep 16 11:55:55 fen-xcp-01 SM: [23125] ['vhd-util', 'key', '-p', '-n', '/var/run/sr-mount/ba6c1ba6-c6c8-caef-5d30-7951f50bbbc1/4a2f04ae-6a1d-4bbe-bbc0-2f1a0c4db37b.vhd']
Sep 16 11:55:59 fen-xcp-01 SMGC: [23306] 4a2f04ae(60.000G/128.000K)
Sep 16 11:55:59 fen-xcp-01 SMGC: [23306] Got on-boot for 4a2f04ae(60.000G/128.000K): 'persist'
Sep 16 11:55:59 fen-xcp-01 SMGC: [23306] Got allow_caching for 4a2f04ae(60.000G/128.000K): False
Sep 16 11:55:59 fen-xcp-01 SMGC: [23306] Got other-config for 4a2f04ae(60.000G/128.000K): {}
Sep 16 11:55:59 fen-xcp-01 SMGC: [23306] Removed vhd-blocks from 4a2f04ae(60.000G/128.000K)
Sep 16 11:55:59 fen-xcp-01 SM: [23306] ['/usr/bin/vhd-util', 'read', '--debug', '-B', '-n', '/var/run/sr-mount/ba6c1ba6-c6c8-caef-5d30-7951f50bbbc1/4a2f04ae-6a1d-4bbe-bbc0-2f1a0c4db37b.vhd']
Sep 16 11:55:59 fen-xcp-01 SMGC: [23306] Set vhd-blocks = eJztwQENAAAAwqD3T20PBxQAAAD8Gw8AAAE= for 4a2f04ae(60.000G/128.000K)
Sep 16 11:55:59 fen-xcp-01 SMGC: [23306] Leaf-coalesce candidate: 4a2f04ae(60.000G/128.000K)
Sep 16 11:56:00 fen-xcp-01 SM: [23842] ['vhd-util', 'key', '-p', '-n', '/var/run/sr-mount/ba6c1ba6-c6c8-caef-5d30-7951f50bbbc1/4a2f04ae-6a1d-4bbe-bbc0-2f1a0c4db37b.vhd']
Sep 16 11:56:31 fen-xcp-01 SM: [26211] ['/usr/sbin/td-util', 'query', 'vhd', '-vpfb', '/var/run/sr-mount/ba6c1ba6-c6c8-caef-5d30-7951f50bbbc1/4a2f04ae-6a1d-4bbe-bbc0-2f1a0c4db37b.vhd']
Sep 16 11:56:31 fen-xcp-01 SM: [26211] vdi_update {'sr_uuid': 'ba6c1ba6-c6c8-caef-5d30-7951f50bbbc1', 'subtask_of': 'DummyRef:|efb1535c-5bf0-454c-a213-7a2be0f0a177|VDI.stat', 'vdi_ref': 'OpaqueRef:5527b552-e1ec-449a-912e-4eb2fe440554', 'vdi_on_boot': 'persist', 'args': [], 'o_direct': False, 'vdi_location': '4a2f04ae-6a1d-4bbe-bbc0-2f1a0c4db37b', 'host_ref': 'OpaqueRef:a34e39e4-b42d-48d3-87a9-6eec76720259', 'session_ref': 'OpaqueRef:aa44b900-f6a7-4ee2-aab0-504a8b11cddc', 'device_config': {'server': '10.20.86.80', 'options': '', 'SRmaster': 'true', 'serverpath': '/mnt/srv/databases', 'nfsversion': '4.1'}, 'command': 'vdi_update', 'vdi_allow_caching': 'false', 'sr_ref': 'OpaqueRef:1d92d8fc-8c8f-4bdc-9de8-6eccf499d65a', 'vdi_uuid': '4a2f04ae-6a1d-4bbe-bbc0-2f1a0c4db37b'}
Sep 16 11:56:31 fen-xcp-01 SM: [26211] ['/usr/sbin/td-util', 'query', 'vhd', '-vpfb', '/var/run/sr-mount/ba6c1ba6-c6c8-caef-5d30-7951f50bbbc1/4a2f04ae-6a1d-4bbe-bbc0-2f1a0c4db37b.vhd']
Sep 16 11:56:58 fen-xcp-01 SM: [27737] ['vhd-util', 'key', '-p', '-n', '/var/run/sr-mount/ba6c1ba6-c6c8-caef-5d30-7951f50bbbc1/4a2f04ae-6a1d-4bbe-bbc0-2f1a0c4db37b.vhd']
Sep 16 12:01:00 fen-xcp-01 SMGC: [23306] Got on-boot for 4a2f04ae(60.000G/128.000K): 'persist'
Sep 16 12:01:00 fen-xcp-01 SMGC: [23306] Got allow_caching for 4a2f04ae(60.000G/128.000K): False
Sep 16 12:01:00 fen-xcp-01 SMGC: [23306] Got other-config for 4a2f04ae(60.000G/128.000K): {}
Sep 16 12:01:00 fen-xcp-01 SMGC: [23306] Removed vhd-blocks from 4a2f04ae(60.000G/128.000K)
Sep 16 12:01:00 fen-xcp-01 SM: [23306] ['/usr/bin/vhd-util', 'read', '--debug', '-B', '-n', '/var/run/sr-mount/ba6c1ba6-c6c8-caef-5d30-7951f50bbbc1/4a2f04ae-6a1d-4bbe-bbc0-2f1a0c4db37b.vhd']
Sep 16 12:01:00 fen-xcp-01 SMGC: [23306] Set vhd-blocks = eJztwQENAAAAwqD3T20PBxQAAAD8Gw8AAAE= for 4a2f04ae(60.000G/128.000K)
Sep 16 12:01:00 fen-xcp-01 SMGC: [23306] Leaf-coalesce candidate: 4a2f04ae(60.000G/128.000K)
Sep 16 12:01:00 fen-xcp-01 SMGC: [23306] Got on-boot for 4a2f04ae(60.000G/128.000K): 'persist'
Sep 16 12:01:00 fen-xcp-01 SMGC: [23306] Got allow_caching for 4a2f04ae(60.000G/128.000K): False
Sep 16 12:01:00 fen-xcp-01 SMGC: [23306] Got other-config for 4a2f04ae(60.000G/128.000K): {}
Sep 16 12:01:00 fen-xcp-01 SMGC: [23306] Removed vhd-blocks from 4a2f04ae(60.000G/128.000K)
Sep 16 12:01:00 fen-xcp-01 SM: [23306] ['/usr/bin/vhd-util', 'read', '--debug', '-B', '-n', '/var/run/sr-mount/ba6c1ba6-c6c8-caef-5d30-7951f50bbbc1/4a2f04ae-6a1d-4bbe-bbc0-2f1a0c4db37b.vhd']
Sep 16 12:01:00 fen-xcp-01 SMGC: [23306] Set vhd-blocks = eJztwQENAAAAwqD3T20PBxQAAAD8Gw8AAAE= for 4a2f04ae(60.000G/128.000K)
Sep 16 12:01:00 fen-xcp-01 SMGC: [23306] Leaf-coalesce candidate: 4a2f04ae(60.000G/128.000K)
Sep 16 12:01:00 fen-xcp-01 SMGC: [23306] Leaf-coalescing 4a2f04ae(60.000G/128.000K) -> *1869c2bd(60.000G/1.114G)
Sep 16 12:01:00 fen-xcp-01 SM: [23306] Pause request for 4a2f04ae-6a1d-4bbe-bbc0-2f1a0c4db37b
Sep 16 12:01:00 fen-xcp-01 SMGC: [23306] Removed leaf-coalesce from 4a2f04ae(60.000G/128.000K)
Sep 16 12:01:00 fen-xcp-01 SMGC: [23306] Leaf-coalesce failed on 4a2f04ae(60.000G/128.000K), skipping
Sep 16 13:04:29 fen-xcp-01 SM: [23853] ['vhd-util', 'key', '-p', '-n', '/var/run/sr-mount/ba6c1ba6-c6c8-caef-5d30-7951f50bbbc1/4a2f04ae-6a1d-4bbe-bbc0-2f1a0c4db37b.vhd']
Sep 16 13:04:33 fen-xcp-01 SMGC: [24023] 4a2f04ae(60.000G/128.000K)
Sep 16 13:04:33 fen-xcp-01 SMGC: [24023] Got on-boot for 4a2f04ae(60.000G/128.000K): 'persist'
Sep 16 13:04:33 fen-xcp-01 SMGC: [24023] Got allow_caching for 4a2f04ae(60.000G/128.000K): False
Sep 16 13:04:33 fen-xcp-01 SMGC: [24023] Got other-config for 4a2f04ae(60.000G/128.000K): {}
Sep 16 13:04:33 fen-xcp-01 SMGC: [24023] Removed vhd-blocks from 4a2f04ae(60.000G/128.000K)
Sep 16 13:04:33 fen-xcp-01 SM: [24023] ['/usr/bin/vhd-util', 'read', '--debug', '-B', '-n', '/var/run/sr-mount/ba6c1ba6-c6c8-caef-5d30-7951f50bbbc1/4a2f04ae-6a1d-4bbe-bbc0-2f1a0c4db37b.vhd']
Sep 16 13:04:33 fen-xcp-01 SMGC: [24023] Set vhd-blocks = eJztwQENAAAAwqD3T20PBxQAAAD8Gw8AAAE= for 4a2f04ae(60.000G/128.000K)
Sep 16 13:04:33 fen-xcp-01 SMGC: [24023] Leaf-coalesce candidate: 4a2f04ae(60.000G/128.000K)
Sep 16 13:09:33 fen-xcp-01 SMGC: [24023] Got on-boot for 4a2f04ae(60.000G/128.000K): 'persist'
Sep 16 13:09:33 fen-xcp-01 SMGC: [24023] Got allow_caching for 4a2f04ae(60.000G/128.000K): False
Sep 16 13:09:33 fen-xcp-01 SMGC: [24023] Got other-config for 4a2f04ae(60.000G/128.000K): {}
Sep 16 13:09:33 fen-xcp-01 SMGC: [24023] Removed vhd-blocks from 4a2f04ae(60.000G/128.000K)
Sep 16 13:09:33 fen-xcp-01 SM: [24023] ['/usr/bin/vhd-util', 'read', '--debug', '-B', '-n', '/var/run/sr-mount/ba6c1ba6-c6c8-caef-5d30-7951f50bbbc1/4a2f04ae-6a1d-4bbe-bbc0-2f1a0c4db37b.vhd']
Sep 16 13:09:33 fen-xcp-01 SMGC: [24023] Set vhd-blocks = eJztwQENAAAAwqD3T20PBxQAAAD8Gw8AAAE= for 4a2f04ae(60.000G/128.000K)
Sep 16 13:09:33 fen-xcp-01 SMGC: [24023] Leaf-coalesce candidate: 4a2f04ae(60.000G/128.000K)
Sep 16 13:09:34 fen-xcp-01 SMGC: [24023] Got on-boot for 4a2f04ae(60.000G/128.000K): 'persist'
Sep 16 13:09:34 fen-xcp-01 SMGC: [24023] Got allow_caching for 4a2f04ae(60.000G/128.000K): False
Sep 16 13:09:34 fen-xcp-01 SMGC: [24023] Got other-config for 4a2f04ae(60.000G/128.000K): {}
Sep 16 13:09:34 fen-xcp-01 SMGC: [24023] Removed vhd-blocks from 4a2f04ae(60.000G/128.000K)
Sep 16 13:09:34 fen-xcp-01 SM: [24023] ['/usr/bin/vhd-util', 'read', '--debug', '-B', '-n', '/var/run/sr-mount/ba6c1ba6-c6c8-caef-5d30-7951f50bbbc1/4a2f04ae-6a1d-4bbe-bbc0-2f1a0c4db37b.vhd']
Sep 16 13:09:34 fen-xcp-01 SMGC: [24023] Set vhd-blocks = eJztwQENAAAAwqD3T20PBxQAAAD8Gw8AAAE= for 4a2f04ae(60.000G/128.000K)
Sep 16 13:09:34 fen-xcp-01 SMGC: [24023] Leaf-coalesce candidate: 4a2f04ae(60.000G/128.000K)
Sep 16 13:09:34 fen-xcp-01 SMGC: [24023] Leaf-coalescing 4a2f04ae(60.000G/128.000K) -> *1869c2bd(60.000G/1.114G)
Sep 16 13:09:34 fen-xcp-01 SM: [24023] Pause request for 4a2f04ae-6a1d-4bbe-bbc0-2f1a0c4db37b
Sep 16 13:09:34 fen-xcp-01 SMGC: [24023] Removed leaf-coalesce from 4a2f04ae(60.000G/128.000K)
Sep 16 13:09:34 fen-xcp-01 SMGC: [24023] Leaf-coalesce failed on 4a2f04ae(60.000G/128.000K), skipping

cat SMlog | grep "3a4bdcc6"

Sep 16 11:33:51 fen-xcp-01 SM: [24503] lock: released /var/lock/sm/3a4bdcc6-ce4c-4c00-aab3-2895061229f2/vdi
Sep 16 11:33:52 fen-xcp-01 SM: [24503] lock: acquired /var/lock/sm/3a4bdcc6-ce4c-4c00-aab3-2895061229f2/vdi
Sep 16 11:33:52 fen-xcp-01 SM: [24503] Adding tag to: 3a4bdcc6-ce4c-4c00-aab3-2895061229f2
Sep 16 11:33:52 fen-xcp-01 SM: [24503] lock: released /var/lock/sm/3a4bdcc6-ce4c-4c00-aab3-2895061229f2/vdi
Sep 16 11:33:53 fen-xcp-01 SM: [24503] lock: acquired /var/lock/sm/3a4bdcc6-ce4c-4c00-aab3-2895061229f2/vdi
Sep 16 11:33:53 fen-xcp-01 SM: [24503] Adding tag to: 3a4bdcc6-ce4c-4c00-aab3-2895061229f2
Sep 16 11:33:53 fen-xcp-01 SM: [24503] lock: released /var/lock/sm/3a4bdcc6-ce4c-4c00-aab3-2895061229f2/vdi
Sep 16 11:33:54 fen-xcp-01 SM: [24503] lock: acquired /var/lock/sm/3a4bdcc6-ce4c-4c00-aab3-2895061229f2/vdi
Sep 16 11:33:54 fen-xcp-01 SM: [24503] Adding tag to: 3a4bdcc6-ce4c-4c00-aab3-2895061229f2
Sep 16 11:33:54 fen-xcp-01 SM: [24503] lock: released /var/lock/sm/3a4bdcc6-ce4c-4c00-aab3-2895061229f2/vdi
Sep 16 11:33:55 fen-xcp-01 SM: [24503] lock: acquired /var/lock/sm/3a4bdcc6-ce4c-4c00-aab3-2895061229f2/vdi
Sep 16 11:33:55 fen-xcp-01 SM: [24503] Adding tag to: 3a4bdcc6-ce4c-4c00-aab3-2895061229f2
Sep 16 11:33:55 fen-xcp-01 SM: [24503] lock: released /var/lock/sm/3a4bdcc6-ce4c-4c00-aab3-2895061229f2/vdi
Sep 16 11:33:56 fen-xcp-01 SM: [24503] lock: acquired /var/lock/sm/3a4bdcc6-ce4c-4c00-aab3-2895061229f2/vdi
Sep 16 11:33:56 fen-xcp-01 SM: [24503] Adding tag to: 3a4bdcc6-ce4c-4c00-aab3-2895061229f2
Sep 16 11:33:56 fen-xcp-01 SM: [24503] lock: released /var/lock/sm/3a4bdcc6-ce4c-4c00-aab3-2895061229f2/vdi
Sep 16 11:33:57 fen-xcp-01 SM: [24503] lock: acquired /var/lock/sm/3a4bdcc6-ce4c-4c00-aab3-2895061229f2/vdi
Sep 16 11:33:57 fen-xcp-01 SM: [24503] Adding tag to: 3a4bdcc6-ce4c-4c00-aab3-2895061229f2
Sep 16 11:33:57 fen-xcp-01 SM: [24503] lock: released /var/lock/sm/3a4bdcc6-ce4c-4c00-aab3-2895061229f2/vdi
Sep 16 11:33:58 fen-xcp-01 SM: [24503] lock: acquired /var/lock/sm/3a4bdcc6-ce4c-4c00-aab3-2895061229f2/vdi
Sep 16 11:33:58 fen-xcp-01 SM: [24503] Adding tag to: 3a4bdcc6-ce4c-4c00-aab3-2895061229f2
Sep 16 11:33:58 fen-xcp-01 SM: [24503] lock: released /var/lock/sm/3a4bdcc6-ce4c-4c00-aab3-2895061229f2/vdi
Sep 16 11:33:59 fen-xcp-01 SM: [24503] lock: acquired /var/lock/sm/3a4bdcc6-ce4c-4c00-aab3-2895061229f2/vdi
Sep 16 11:33:59 fen-xcp-01 SM: [24503] Adding tag to: 3a4bdcc6-ce4c-4c00-aab3-2895061229f2
Sep 16 11:33:59 fen-xcp-01 SM: [24503] lock: released /var/lock/sm/3a4bdcc6-ce4c-4c00-aab3-2895061229f2/vdi
Sep 16 11:34:00 fen-xcp-01 SM: [24503] lock: acquired /var/lock/sm/3a4bdcc6-ce4c-4c00-aab3-2895061229f2/vdi
Sep 16 11:34:00 fen-xcp-01 SM: [24503] Adding tag to: 3a4bdcc6-ce4c-4c00-aab3-2895061229f2
Sep 16 11:34:00 fen-xcp-01 SM: [24503] lock: released /var/lock/sm/3a4bdcc6-ce4c-4c00-aab3-2895061229f2/vdi
Sep 16 11:34:01 fen-xcp-01 SM: [24503] lock: acquired /var/lock/sm/3a4bdcc6-ce4c-4c00-aab3-2895061229f2/vdi
Sep 16 11:34:01 fen-xcp-01 SM: [24503] Adding tag to: 3a4bdcc6-ce4c-4c00-aab3-2895061229f2
Sep 16 11:34:01 fen-xcp-01 SM: [24503] lock: released /var/lock/sm/3a4bdcc6-ce4c-4c00-aab3-2895061229f2/vdi
Sep 16 11:34:02 fen-xcp-01 SM: [24503] lock: acquired /var/lock/sm/3a4bdcc6-ce4c-4c00-aab3-2895061229f2/vdi
Sep 16 11:34:02 fen-xcp-01 SM: [24503] Adding tag to: 3a4bdcc6-ce4c-4c00-aab3-2895061229f2
Sep 16 11:34:02 fen-xcp-01 SM: [24503] lock: released /var/lock/sm/3a4bdcc6-ce4c-4c00-aab3-2895061229f2/vdi
Sep 16 11:34:03 fen-xcp-01 SM: [24503] lock: acquired /var/lock/sm/3a4bdcc6-ce4c-4c00-aab3-2895061229f2/vdi
Sep 16 11:34:03 fen-xcp-01 SM: [24503] Adding tag to: 3a4bdcc6-ce4c-4c00-aab3-2895061229f2
Sep 16 11:34:03 fen-xcp-01 SM: [24503] lock: released /var/lock/sm/3a4bdcc6-ce4c-4c00-aab3-2895061229f2/vdi
Sep 16 11:34:04 fen-xcp-01 SM: [24503] lock: acquired /var/lock/sm/3a4bdcc6-ce4c-4c00-aab3-2895061229f2/vdi
Sep 16 11:34:04 fen-xcp-01 SM: [24503] Adding tag to: 3a4bdcc6-ce4c-4c00-aab3-2895061229f2
Sep 16 11:34:04 fen-xcp-01 SM: [24503] lock: released /var/lock/sm/3a4bdcc6-ce4c-4c00-aab3-2895061229f2/vdi
Sep 16 11:34:05 fen-xcp-01 SM: [24503] lock: acquired /var/lock/sm/3a4bdcc6-ce4c-4c00-aab3-2895061229f2/vdi
Sep 16 11:34:05 fen-xcp-01 SM: [24503] Adding tag to: 3a4bdcc6-ce4c-4c00-aab3-2895061229f2
Sep 16 11:34:05 fen-xcp-01 SM: [24503] lock: released /var/lock/sm/3a4bdcc6-ce4c-4c00-aab3-2895061229f2/vdi
Sep 16 11:34:06 fen-xcp-01 SM: [24503] lock: acquired /var/lock/sm/3a4bdcc6-ce4c-4c00-aab3-2895061229f2/vdi
Sep 16 11:34:06 fen-xcp-01 SM: [24503] Adding tag to: 3a4bdcc6-ce4c-4c00-aab3-2895061229f2
Sep 16 11:34:06 fen-xcp-01 SM: [24503] lock: released /var/lock/sm/3a4bdcc6-ce4c-4c00-aab3-2895061229f2/vdi
Sep 16 11:34:07 fen-xcp-01 SM: [24503] ***** vdi_activate: EXCEPTION <class 'util.SMException'>, VDI 3a4bdcc6-ce4c-4c00-aab3-2895061229f2 locked
Sep 16 11:34:07 fen-xcp-01 SM: [24503] Raising exception [46, The VDI is not available [opterr=VDI 3a4bdcc6-ce4c-4c00-aab3-2895061229f2 locked]]
Sep 16 11:34:07 fen-xcp-01 SM: [24503] ***** NFS VHD: EXCEPTION <class 'SR.SROSError'>, The VDI is not available [opterr=VDI 3a4bdcc6-ce4c-4c00-aab3-2895061229f2 locked]
Sep 16 11:34:08 fen-xcp-01 SM: [1804] ['/usr/sbin/td-util', 'query', 'vhd', '-vpfb', '/var/run/sr-mount/cfda3fef-663a-849b-3e47-692607e612e4/3a4bdcc6-ce4c-4c00-aab3-2895061229f2.vhd']
Sep 16 11:34:08 fen-xcp-01 SM: [1804] vdi_detach {'sr_uuid': 'cfda3fef-663a-849b-3e47-692607e612e4', 'subtask_of': 'DummyRef:|255a4ea2-036b-4b2c-8cfe-ee66144b5286|VDI.detach', 'vdi_ref': 'OpaqueRef:7abb8fbd-09ab-496e-8154-dcd8f8a190af', 'vdi_on_boot': 'persist', 'args': [], 'o_direct': False, 'vdi_location': '3a4bdcc6-ce4c-4c00-aab3-2895061229f2', 'host_ref': 'OpaqueRef:a34e39e4-b42d-48d3-87a9-6eec76720259', 'session_ref': 'OpaqueRef:433e29ff-9a31-497e-a831-19ef6e7478d8', 'device_config': {'server': '10.20.86.80', 'options': '', 'SRmaster': 'true', 'serverpath': '/mnt/srv/xcp_lowIO', 'nfsversion': '4.1'}, 'command': 'vdi_detach', 'vdi_allow_caching': 'false', 'sr_ref': 'OpaqueRef:795adcc6-8c21-4853-9c39-8b1b1b095abc', 'vdi_uuid': '3a4bdcc6-ce4c-4c00-aab3-2895061229f2'}
Sep 16 11:34:08 fen-xcp-01 SM: [1804] lock: opening lock file /var/lock/sm/3a4bdcc6-ce4c-4c00-aab3-2895061229f2/vdi

-

Again, as I said, for an investigation this advanced you should open a support ticket. Hopefully all the hosts involved are under support, so our storage team can investigate in more depth what's going on.

-

Was a solution to this issue ever found? I am now running into the same thing in my home lab, after my nightly delta backup and my monthly full backup ended up running at the same time. I have 2 VMs, each with a disk in the same state as the original poster's.

-

@SeanMiller Have you tried running the `resetvdis.py` command on the affected VDIs? You can read more about it here.

-

@Danp Sure did, it was no help. We ended up having to restore the affected VMs from backup.

There was no sign of any storage network issues when this started: no TX/RX errors anywhere, no traps, nothing in the ZFS server logs. Nothing. We have hundreds of VMs running, and only 7 were affected, at random times during the backup process. It's a little concerning.

-

@Danp I did, I got the same results as @jshiells.

I was hoping to avoid restoring from backup, as the backup is 24 hours old and some data has changed since then.

-

@SeanMiller No, sorry, we ended up having to restore the VDIs from a known good backup.

-

I'm restoring from backup.

This does have me a bit concerned. This type of issue should not be caused by a backup.

-

The root cause is not clear; I don't think it's the backup directly. It might be that creating snapshots and then reading them causes a lot of sudden I/O on the storage.

This would require an intensive investigation: gathering the logs from all hosts and inspecting what's going on. Each installation is unique (hardware, network, disks, CPUs, memory; there's an infinite number of combinations), so finding a solution on a forum without spending days asking tons of questions seems complicated. What concerns me is that this kind of infrastructure shouldn't be left unsupported, because spending time to understand the problem is part of what we do. I'm not saying we'd magically find a solution or the root cause, but at least it could be investigated in detail and compared against similar reports to go deeper.

-

@olivierlambert I can provide information on the infrastructure if it would help. The issue has not happened again, though, and I cannot recreate it in my lab. Even when backups are running, we are REALLY underutilizing our NFS/ZFS NVMe/SSD-based storage with 40 Gb/s links. I'm not saying it's not possible, but I would be shocked. We have no recorded TX/RX errors on the TrueNAS/switch/hosts and no record of any SNMP traps coming in for any issues with any of the equipment involved.

-

And it's happening again tonight.

I have 55 failed backups tonight with a mix of the following errors:

Error: INTERNAL_ERROR(Invalid argument: chop)

Error: SR_BACKEND_FAILURE_82(, Failed to snapshot VDI [opterr=failed to pause VDI 346298a8-cfad-4e9b-84fe-6185fd5e7fbb], )

Zero TX/RX errors on the SFPs on the Xen hosts and storage.

ZERO TX/RX errors on the switch ports.

No errors on the ZFS/NFS storage devices.

No traps in monitoring for any networking or storage issues.

-

Well, obviously something is wrong somewhere, otherwise you wouldn't get this error. I don't think I've ever seen `Error: INTERNAL_ERROR(Invalid argument: chop)` in my life.

Something is triggering storage issues. Having no TX/RX errors doesn't protect you from other problems, like MTU problems, a bad switch config, or simply latency issues from the Dom0 perspective; you name it. I'd love to make it reproducible at some point, but as you can see, there's "something" that makes it happen, and now the question is "what". These problems are really hard to diagnose because they're often a combination of the environment and tipping points such as timeouts.
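A first step toward that "what" can be lining up, per VDI, when SM operations started failing. Below is a minimal sketch (not an XCP-ng tool) that splits SMlog text into entries and keeps the failure-looking ones for a single VDI UUID. It assumes the entry prefix matches the excerpts quoted in this thread (`Sep 16 11:34:07 fen-xcp-01 SM: [24503] ...`). The helper names `split_entries` and `vdi_problems`, as well as the list of failure markers, are hypothetical choices for illustration.

```python
import re
import sys
from typing import Iterator, List, Tuple

# Assumed SMlog entry prefix, based on the excerpts in this thread:
# "Sep 16 11:34:07 fen-xcp-01 SM: [24503] <message>"
ENTRY_RE = re.compile(
    r"(?P<ts>[A-Z][a-z]{2} +\d{1,2} \d{2}:\d{2}:\d{2}) (?P<host>\S+) SM: \[(?P<pid>\d+)\] "
)

# Hypothetical substrings treated as "this entry looks like a failure".
FAILURE_MARKERS = ("EXCEPTION", "Raising exception", "TapdiskInvalidState", "not detached cleanly")


def split_entries(text: str) -> Iterator[Tuple[str, str, int, str]]:
    """Yield (timestamp, host, pid, message) for each SM entry.

    Splitting on the entry prefix (not on newlines) also works on log text
    that lost its line breaks when pasted, and keeps multi-line messages whole.
    """
    matches = list(ENTRY_RE.finditer(text))
    for i, m in enumerate(matches):
        end = matches[i + 1].start() if i + 1 < len(matches) else len(text)
        yield m["ts"], m["host"], int(m["pid"]), text[m.end():end].strip()


def vdi_problems(text: str, vdi_uuid: str) -> List[Tuple[str, int, str]]:
    """Return (timestamp, pid, message) for failure-looking entries that mention vdi_uuid."""
    return [
        (ts, pid, msg)
        for ts, _host, pid, msg in split_entries(text)
        if vdi_uuid in msg and any(marker in msg for marker in FAILURE_MARKERS)
    ]


def main(argv: List[str]) -> None:
    if len(argv) != 3:
        print(f"usage: {argv[0]} SMLOG_PATH VDI_UUID")
        return
    # Reads the whole file into memory; fine for a one-off look at a single SMlog.
    with open(argv[1], encoding="utf-8", errors="replace") as f:
        for ts, pid, msg in vdi_problems(f.read(), argv[2]):
            print(f"{ts} [{pid}] {msg}")


if __name__ == "__main__":
    main(sys.argv)
```

Run against `/var/log/SMlog` on each host (for example `python3 smlog_vdi.py /var/log/SMlog 3a4bdcc6-ce4c-4c00-aab3-2895061229f2`, with the script name being arbitrary), this gives one timeline per host that can be compared with backup job start times and with logs from the storage side.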