MAP_DUPLICATE_KEY error in XOA backup - VM's wont START now!

-

Again as I said, for such advanced investigation, you should open a support ticket. Hopefully, all your involved hosts are under support so our storage team could investigate more in depth what's going on.

-

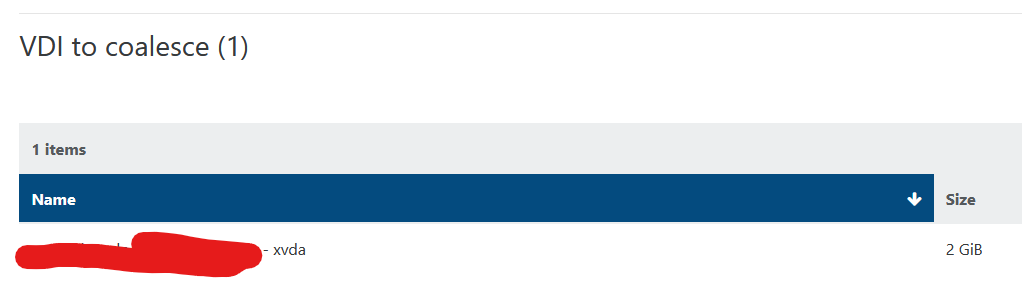

Was a solution to this issue found? I am not running into the same thing in my home lab after my nightly delta and monthly full backup ended up running at the same time. i have 2 VM's each with a disk in the same state as the original poster.

-

@SeanMiller Have you tried running the

resetvdis.pycommand on the affected VDIs? You can read more about it here. -

@Danp sure did, it was no help. we ended up having to restore the effected VM's from backup

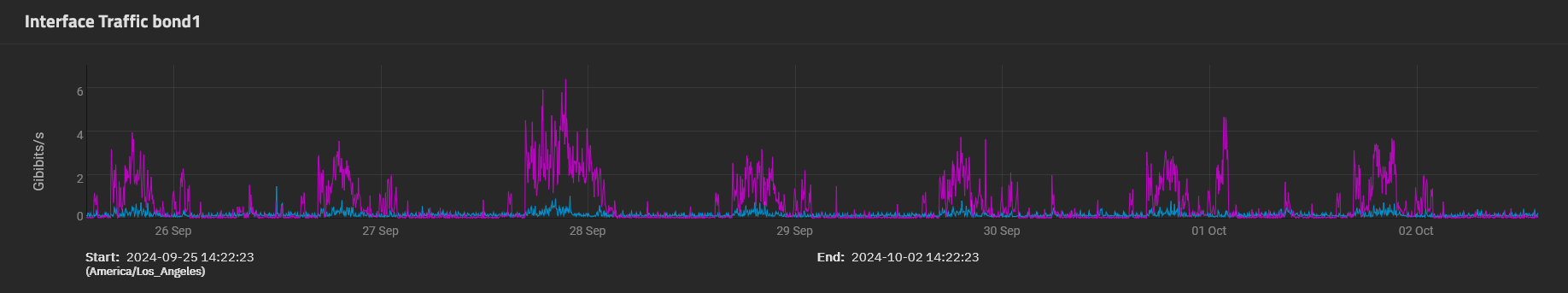

there was no sign of any storage network issues during the time this started, no RT,RX errors anywhere, no traps, nothing in the zfs server logs.. nothing.... we have hundreds of VM's running , only 7 were effected and at random times during the backup process. its a little concerning..

-

@Danp I did, I got the same results as @jshiells.

I was hoping to avoid restoring from backup as the backup is 24 hours old and changed some data between now and then..

-

@SeanMiller no sorry, we ended up having to restore the VDI's form a known good backup.

-

Im restoring from backup.

This does have me a bit concerned, This type of issue should not be caused by a backup..

-

The root cause is not clear, I don't think it's the backup directly. It might be the generation of snapshots and reading them that cause a lot of sudden I/O on the storage.

This would require some intensive investigations, gather all the logs of all hosts and inspecting what's going on. Each installation is unique (hardware, network, disks, CPUs, memory, there's an infinite number of combinations), so finding a solution on a forum without spending days asking tons of questions seems complicated. What's concerning to me is that type of infrastructure should not be unsupported, because spending time to understand the problem is part of what we do. I'm not saying we'll find magically a solution or the root cause, but at least it could be investigated in details and compare if we have similar reports to go deeper.

-

@olivierlambert I can provide information on infrastructure if it would help. the issue has not happened again though , and i cannot re create the issue in my lab. even when backups are running we are REALLY under utilizing our NFS/ZFS NVME/SSD based storage with 40gb/s links. i'm not saying its not possible, but i would be shocked. we have no recorded tx/rx errors on truenas/swtich/hosts and no record of any snmp traps coming in for any issues with all the equipment involved.

-

and its happening again tonight.

I have 55 failed backups tonight with a mix of the following errors.

Error: INTERNAL_ERROR(Invalid argument: chop)

Error: SR_BACKEND_FAILURE_82(, Failed to snapshot VDI [opterr=failed to pause VDI 346298a8-cfad-4e9b-84fe-6185fd5e7fbb], )Zero TX/RX errors on SFP's at on XEN hosts and Storage

ZERO TX/RX errors on switch ports

no errors on the ZFS/NFS storage devices

no traps in monitoring for any networking or storage issues. -

Well, obviously something is wrong somewhere, otherwise you wouldn't have this error. I think I never saw

Error: INTERNAL_ERROR(Invalid argument: chop)in my life.There's something triggering storage issues, not having TX/RX errors doesn't save you for other problems, like MTU problems, bad switch config or simply latency issue from the Dom0 perspective, you name it. I'd love to get it reproducible at some point but as you can see, there's "something" that makes it happen, now the question is "what". Those problems are really hard to diagnose because it's often a combo of environment and tipping points from timeouts or similar things.

-

@jshiells Did you also check

/var/log/kern.logfor hardware errors? I'm seeing qemu process crashing with bad RIP (Instruction pointer) value which screams for a hardware issue, IMO. Just a 1-bit flipping in memory is enough to cause unpleasant surprises. I hope the servers are using ECC memory. I'd run a memtest and some CPU stress test on that server.Some years ago, I had a two-socket Dell server with one bad core (no errors reported at boot). When the Xen scheduler ran a task on that core... Boom. Host crash.

-

@olivierlambert digging more into this today, we did find this error in xensource.log related to that "CHOP" message

xensource.log.11.gz:Oct 20 10:22:08 xxx-xxx-01 xapi: [error||115 pool_db_backup_thread|Pool DB sync D:d79f115776bd|pool_db_sync] Failed to synchronise DB with host OpaqueRef:a87f2682-dd77-4a2d-aa1a-b831b1d5107f: Server_error(INTERNAL_ERROR, [ Invalid argument: chop ])

xensource.log.22.gz:Oct 19 06:06:03 fen-xcp-01 xapi: [error||27967996 :::80||backtrace] host.get_servertime D:61ad83a0cd72 failed with exception (Invalid_argument chop)

-

@tuxen no sorry, great idea but we are not seeing any errors like that in kern.log. this problem when it happens is across several xen hosts all at the same time. it would be wild if all of the xen hosts were having hardware issues during the small window of time this problem happened in. if it was one xen server then i would look at hardware but its all of them, letting me believe its XOA, a BUG in xcp-ng or a storage problem (even though we have seen no errors or monitoring blips at all on the truenas server)

-

@jshiells said in MAP_DUPLICATE_KEY error in XOA backup - VM's wont START now!:

@tuxen no sorry, great idea but we are not seeing any errors like that in kern.log. this problem when it happens is across several xen hosts all at the same time. it would be wild if all of the xen hosts were having hardware issues during the small window of time this problem happened in. if it was one xen server then i would look at hardware but its all of them, letting me believe its XOA, a BUG in xcp-ng or a storage problem (even though we have seen no errors or monitoring blips at all on the truenas server)

In a cluster with shared resources, It only takes one unstable host or a crashed PID left with an open shared resource to cause some unclean state cluster-wide. If the hosts and VMs aren't protected by a HA/STONITH mechanism to automatically release the resources, a qemu crash might keep one or more VDIs in an invalid, blocked state and affect SR scans done by the master. Failed SR scans may prevent SR-dependent actions (eg. VM boot, snapshots, GC kicking etc).

I'm not saying there isn't a bug somewhere but running MEMTEST/CPU tests on the host that triggered the bad RIP error would be my starting point of investigation. Just to be sure.

-

After much digging I traced the following formal exceptions below from

XEN. For most of them, the "chop" error is burried in the bowels of XAPI.ie

Oct 18 21:46:29 fen-xcp-01 SMGC: [28249] File "/usr/lib/python2.7/site-packages/XenAPI.py", line 238, in _parse_result Oct 18 21:46:29 fen-xcp-01 SMGC: [28249] raise Failure(result['ErrorDescription'])It's worth noting due to the time involved in this, the only things

considered in play where nfs-01/02 and lesser degree 03. The one XCP

pool (fen-xcp), and even lesser degree XOA itself.'I could not find any obvious issues with storage, neither hardware nor

data. Scrubs were fine, no cpu/hardware errors.I could not find any obvious issues with xcp hosts, neither hardware nor

data. No cpu/hardware errors.The only real change made was to correct the clock on nfs-01. I don't

see how that could affect this since most if not all locking is done

with flock.There is a valid argument to be made that Xen technically was responding

to an issue, though not entirely clear what nor how. Most of the other

errors / wtfbbq states are either directly related (in call path), or

indirectly (xen wanted a thing, didn't get it). Those are some deep

rabbit holes.There is more pre/post context to these, tried to include what I thought

made them a bit more easier to understand.

./SMlog.4.gz:Oct 19 00:19:36 fen-xcp-01 SMGC: [3729] * E X C E P T I O N * Oct 19 00:19:36 fen-xcp-01 SMGC: [3729] leaf-coalesce: EXCEPTION <class 'XenAPI.Failure'>, ['INTERNAL_ERROR', 'Invalid argument: chop'] Oct 19 00:19:36 fen-xcp-01 SMGC: [3729] File "/opt/xensource/sm/cleanup.py", line 1774, in coalesceLeaf Oct 19 00:19:36 fen-xcp-01 SMGC: [3729] self._coalesceLeaf(vdi) Oct 19 00:19:36 fen-xcp-01 SMGC: [3729] File "/opt/xensource/sm/cleanup.py", line 2048, in _coalesceLeaf Oct 19 00:19:36 fen-xcp-01 SMGC: [3729] if not self._snapshotCoalesce(vdi): Oct 19 00:19:36 fen-xcp-01 SMGC: [3729] File "/opt/xensource/sm/cleanup.py", line 2153, in _snapshotCoalesce Oct 19 00:19:36 fen-xcp-01 SMGC: [3729] self._coalesce(tempSnap) Oct 19 00:19:36 fen-xcp-01 SMGC: [3729] File "/opt/xensource/sm/cleanup.py", line 1962, in _coalesce Oct 19 00:19:36 fen-xcp-01 SMGC: [3729] self.deleteVDI(vdi) Oct 19 00:19:36 fen-xcp-01 SMGC: [3729] File "/opt/xensource/sm/cleanup.py", line 2469, in deleteVDI Oct 19 00:19:36 fen-xcp-01 SMGC: [3729] self._checkSlaves(vdi) Oct 19 00:19:36 fen-xcp-01 SMGC: [3729] File "/opt/xensource/sm/cleanup.py", line 2482, in _checkSlaves Oct 19 00:19:36 fen-xcp-01 SMGC: [3729] self._checkSlave(hostRef, vdi) Oct 19 00:19:36 fen-xcp-01 SMGC: [3729] File "/opt/xensource/sm/cleanup.py", line 2491, in _checkSlave Oct 19 00:19:36 fen-xcp-01 SMGC: [3729] text = _host.call_plugin(*call) Oct 19 00:19:36 fen-xcp-01 SMGC: [3729] File "/usr/lib/python2.7/site-packages/XenAPI.py", line 264, in __call__ Oct 19 00:19:36 fen-xcp-01 SMGC: [3729] return self.__send(self.__name, args) Oct 19 00:19:36 fen-xcp-01 SMGC: [3729] File "/usr/lib/python2.7/site-packages/XenAPI.py", line 160, in xenapi_request Oct 19 00:19:36 fen-xcp-01 SMGC: [3729] result = _parse_result(getattr(self, methodname)(*full_params)) Oct 19 00:19:36 fen-xcp-01 SMGC: [3729] File "/usr/lib/python2.7/site-packages/XenAPI.py", line 238, in _parse_result Oct 19 00:19:36 fen-xcp-01 SMGC: [3729] raise Failure(result['ErrorDescription']) ./SMlog.4.gz:Oct 19 00:22:04 fen-xcp-01 SMGC: [3729] * E X C E P T I O N * Oct 19 00:22:04 fen-xcp-01 SMGC: [3729] leaf-coalesce: EXCEPTION <class 'XenAPI.Failure'>, ['INTERNAL_ERROR', 'Invalid argument: chop'] Oct 19 00:22:04 fen-xcp-01 SMGC: [3729] File "/opt/xensource/sm/cleanup.py", line 1774, in coalesceLeaf Oct 19 00:22:04 fen-xcp-01 SMGC: [3729] self._coalesceLeaf(vdi) Oct 19 00:22:04 fen-xcp-01 SMGC: [3729] File "/opt/xensource/sm/cleanup.py", line 2048, in _coalesceLeaf Oct 19 00:22:04 fen-xcp-01 SMGC: [3729] if not self._snapshotCoalesce(vdi): Oct 19 00:22:04 fen-xcp-01 SMGC: [3729] File "/opt/xensource/sm/cleanup.py", line 2153, in _snapshotCoalesce Oct 19 00:22:04 fen-xcp-01 SMGC: [3729] self._coalesce(tempSnap) Oct 19 00:22:04 fen-xcp-01 SMGC: [3729] File "/opt/xensource/sm/cleanup.py", line 1962, in _coalesce Oct 19 00:22:04 fen-xcp-01 SMGC: [3729] self.deleteVDI(vdi) Oct 19 00:22:04 fen-xcp-01 SMGC: [3729] File "/opt/xensource/sm/cleanup.py", line 2469, in deleteVDI Oct 19 00:22:04 fen-xcp-01 SMGC: [3729] self._checkSlaves(vdi) Oct 19 00:22:04 fen-xcp-01 SMGC: [3729] File "/opt/xensource/sm/cleanup.py", line 2482, in _checkSlaves Oct 19 00:22:04 fen-xcp-01 SMGC: [3729] self._checkSlave(hostRef, vdi) Oct 19 00:22:04 fen-xcp-01 SMGC: [3729] File "/opt/xensource/sm/cleanup.py", line 2491, in _checkSlave Oct 19 00:22:04 fen-xcp-01 SMGC: [3729] text = _host.call_plugin(*call) Oct 19 00:22:04 fen-xcp-01 SMGC: [3729] File "/usr/lib/python2.7/site-packages/XenAPI.py", line 264, in __call__ Oct 19 00:22:04 fen-xcp-01 SMGC: [3729] return self.__send(self.__name, args) Oct 19 00:22:04 fen-xcp-01 SMGC: [3729] File "/usr/lib/python2.7/site-packages/XenAPI.py", line 160, in xenapi_request Oct 19 00:22:04 fen-xcp-01 SMGC: [3729] result = _parse_result(getattr(self, methodname)(*full_params)) Oct 19 00:22:04 fen-xcp-01 SMGC: [3729] File "/usr/lib/python2.7/site-packages/XenAPI.py", line 238, in _parse_result Oct 19 00:22:04 fen-xcp-01 SMGC: [3729] raise Failure(result['ErrorDescription']) ./SMlog.4.gz:Oct 19 00:22:11 fen-xcp-01 SMGC: [3729] * E X C E P T I O N * Oct 19 00:22:11 fen-xcp-01 SMGC: [3729] gc: EXCEPTION <class 'XenAPI.Failure'>, ['INTERNAL_ERROR', 'Invalid argument: chop'] Oct 19 00:22:11 fen-xcp-01 SMGC: [3729] File "/opt/xensource/sm/cleanup.py", line 3388, in gc Oct 19 00:22:11 fen-xcp-01 SMGC: [3729] _gc(None, srUuid, dryRun) Oct 19 00:22:11 fen-xcp-01 SMGC: [3729] File "/opt/xensource/sm/cleanup.py", line 3273, in _gc Oct 19 00:22:11 fen-xcp-01 SMGC: [3729] _gcLoop(sr, dryRun) Oct 19 00:22:11 fen-xcp-01 SMGC: [3729] File "/opt/xensource/sm/cleanup.py", line 3214, in _gcLoop Oct 19 00:22:11 fen-xcp-01 SMGC: [3729] sr.garbageCollect(dryRun) Oct 19 00:22:11 fen-xcp-01 SMGC: [3729] File "/opt/xensource/sm/cleanup.py", line 1794, in garbageCollect Oct 19 00:22:11 fen-xcp-01 SMGC: [3729] self.deleteVDIs(vdiList) Oct 19 00:22:11 fen-xcp-01 SMGC: [3729] File "/opt/xensource/sm/cleanup.py", line 2374, in deleteVDIs Oct 19 00:22:11 fen-xcp-01 SMGC: [3729] SR.deleteVDIs(self, vdiList) Oct 19 00:22:11 fen-xcp-01 SMGC: [3729] File "/opt/xensource/sm/cleanup.py", line 1808, in deleteVDIs Oct 19 00:22:11 fen-xcp-01 SMGC: [3729] self.deleteVDI(vdi) Oct 19 00:22:11 fen-xcp-01 SMGC: [3729] File "/opt/xensource/sm/cleanup.py", line 2469, in deleteVDI Oct 19 00:22:11 fen-xcp-01 SMGC: [3729] self._checkSlaves(vdi) Oct 19 00:22:11 fen-xcp-01 SMGC: [3729] File "/opt/xensource/sm/cleanup.py", line 2482, in _checkSlaves Oct 19 00:22:11 fen-xcp-01 SMGC: [3729] self._checkSlave(hostRef, vdi) Oct 19 00:22:11 fen-xcp-01 SMGC: [3729] File "/opt/xensource/sm/cleanup.py", line 2491, in _checkSlave Oct 19 00:22:11 fen-xcp-01 SMGC: [3729] text = _host.call_plugin(*call) Oct 19 00:22:11 fen-xcp-01 SMGC: [3729] File "/usr/lib/python2.7/site-packages/XenAPI.py", line 264, in __call__ Oct 19 00:22:11 fen-xcp-01 SMGC: [3729] return self.__send(self.__name, args) Oct 19 00:22:11 fen-xcp-01 SMGC: [3729] File "/usr/lib/python2.7/site-packages/XenAPI.py", line 160, in xenapi_request Oct 19 00:22:11 fen-xcp-01 SMGC: [3729] result = _parse_result(getattr(self, methodname)(*full_params)) Oct 19 00:22:11 fen-xcp-01 SMGC: [3729] File "/usr/lib/python2.7/site-packages/XenAPI.py", line 238, in _parse_result Oct 19 00:22:11 fen-xcp-01 SMGC: [3729] raise Failure(result['ErrorDescription']) Oct 19 00:22:11 fen-xcp-01 SMGC: [3729] ./SMlog.5.gz:Oct 18 21:51:23 fen-xcp-01 SMGC: [30714] * E X C E P T I O N * Oct 18 21:51:23 fen-xcp-01 SMGC: [30714] GC process exiting, no work left Oct 18 21:51:23 fen-xcp-01 SM: [30714] lock: released /var/lock/sm/0cff5362-5c89-2241-2207-a1d736d9ef5e/gc_active Oct 18 21:51:23 fen-xcp-01 SMGC: [30714] In cleanup Oct 18 21:51:23 fen-xcp-01 SMGC: [30714] SR 0cff ('fen-nfs-03 - DR (Diaster Recovery Storage ZFS/NFS)') (608 VDIs in 524 VHD trees): no changes Oct 18 21:51:23 fen-xcp-01 SMGC: [30714] *~*~*~*~*~*~*~*~*~*~*~*~*~*~*~*~*~*~*~*~* Oct 18 21:51:23 fen-xcp-01 SMGC: [30714] *********************** Oct 18 21:51:23 fen-xcp-01 SMGC: [30714] * E X C E P T I O N * Oct 18 21:51:23 fen-xcp-01 SMGC: [30714] *********************** Oct 18 21:51:23 fen-xcp-01 SMGC: [30714] gc: EXCEPTION <class 'XenAPI.Failure'>, ['INTERNAL_ERROR', 'Invalid argument: chop'] Oct 18 21:51:23 fen-xcp-01 SMGC: [30714] File "/opt/xensource/sm/cleanup.py", line 3388, in gc Oct 18 21:51:23 fen-xcp-01 SMGC: [30714] _gc(None, srUuid, dryRun) Oct 18 21:51:23 fen-xcp-01 SMGC: [30714] File "/opt/xensource/sm/cleanup.py", line 3273, in _gc Oct 18 21:51:23 fen-xcp-01 SMGC: [30714] _gcLoop(sr, dryRun) Oct 18 21:51:23 fen-xcp-01 SMGC: [30714] File "/opt/xensource/sm/cleanup.py", line 3214, in _gcLoop Oct 18 21:51:23 fen-xcp-01 SMGC: [30714] sr.garbageCollect(dryRun) Oct 18 21:51:23 fen-xcp-01 SMGC: [30714] File "/opt/xensource/sm/cleanup.py", line 1794, in garbageCollect Oct 18 21:51:23 fen-xcp-01 SMGC: [30714] self.deleteVDIs(vdiList) Oct 18 21:51:23 fen-xcp-01 SMGC: [30714] File "/opt/xensource/sm/cleanup.py", line 2374, in deleteVDIs Oct 18 21:51:23 fen-xcp-01 SMGC: [30714] SR.deleteVDIs(self, vdiList) Oct 18 21:51:23 fen-xcp-01 SMGC: [30714] File "/opt/xensource/sm/cleanup.py", line 1808, in deleteVDIs Oct 18 21:51:23 fen-xcp-01 SMGC: [30714] self.deleteVDI(vdi) Oct 18 21:51:23 fen-xcp-01 SMGC: [30714] File "/opt/xensource/sm/cleanup.py", line 2469, in deleteVDI Oct 18 21:51:23 fen-xcp-01 SMGC: [30714] self._checkSlaves(vdi) Oct 18 21:51:23 fen-xcp-01 SMGC: [30714] File "/opt/xensource/sm/cleanup.py", line 2482, in _checkSlaves Oct 18 21:51:23 fen-xcp-01 SMGC: [30714] self._checkSlave(hostRef, vdi) Oct 18 21:51:23 fen-xcp-01 SMGC: [30714] File "/opt/xensource/sm/cleanup.py", line 2491, in _checkSlave Oct 18 21:51:23 fen-xcp-01 SMGC: [30714] text = _host.call_plugin(*call) Oct 18 21:51:23 fen-xcp-01 SMGC: [30714] File "/usr/lib/python2.7/site-packages/XenAPI.py", line 264, in __call__ Oct 18 21:51:23 fen-xcp-01 SMGC: [30714] return self.__send(self.__name, args) Oct 18 21:51:23 fen-xcp-01 SMGC: [30714] File "/usr/lib/python2.7/site-packages/XenAPI.py", line 160, in xenapi_request Oct 18 21:51:23 fen-xcp-01 SMGC: [30714] result = _parse_result(getattr(self, methodname)(*full_params)) Oct 18 21:51:23 fen-xcp-01 SMGC: [30714] File "/usr/lib/python2.7/site-packages/XenAPI.py", line 238, in _parse_result Oct 18 21:51:23 fen-xcp-01 SMGC: [30714] raise Failure(result['ErrorDescription']) Oct 18 21:51:23 fen-xcp-01 SMGC: [30714] Oct 18 21:51:23 fen-xcp-01 SMGC: [30714] *~*~*~*~*~*~*~*~*~*~*~*~*~*~*~*~*~*~*~*~* Oct 18 21:51:23 fen-xcp-01 SMGC: [30714] * * * * * SR 0cff5362-5c89-2241-2207-a1d736d9ef5e: ERROR Oct 18 21:51:23 fen-xcp-01 SMGC: [30714] Oct 18 21:51:28 fen-xcp-01 SM: [26746] lock: opening lock file /var/lock/sm/894e5d0d-c100-be00-4fc4-b0c6db478a26/sr -

Well, changing time on the NFS server could have an impact, since file access time attributes are used to do many checks in general. I'm not saying that's 100% the issue, but it tells how deep a small change could cause havoc.

-

Just an update on this:

we made sure all our server times were synced. issue happened again the next run.

just for shits and giggles we restarted toolstack on the all the hosts yesterday and the problem went away. no issues with the backup last night. maybe just a coincidence, we are continuing to monitor.

we also noticed that even though this CHOP error is coming up, snapshots are getting created

-

I have, what is hopefully a final update to this issue(s).

we upgraded to xoa version .99 a few weeks ago and the problem has now gone away. we suspect that some changes were made for timeouts in xoa that have resolved this , and a few other related problems.

-

O olivierlambert marked this topic as a question on

O olivierlambert marked this topic as a question on

-

O olivierlambert has marked this topic as solved on

O olivierlambert has marked this topic as solved on

-

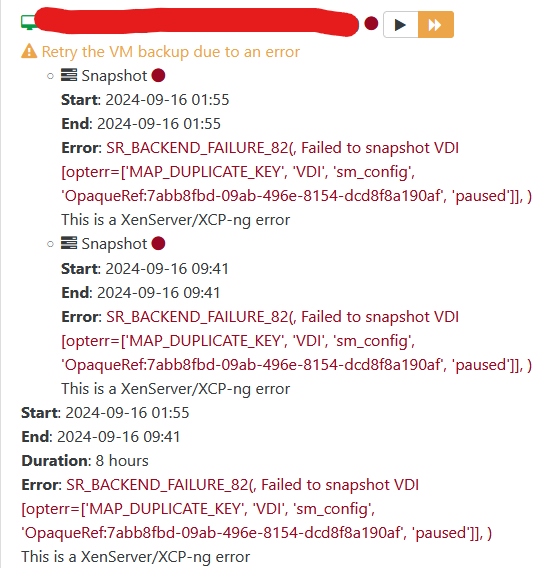

This problem is back with vengeance. after almost a year of no issues, we have upgraded to version 109.2 of XOA and boom... probably is back

XCP-NG servers are on 8.2.1 May 6th update

we get this following error in XOA

Error: SR_BACKEND_FAILURE_82(, Failed to snapshot VDI [opterr=failed to pause VDI 7d213264-1f89-488f-b273-eb91c186eaab], )

cant make snapshots of the effected VM anymore...

manual snapshots we get same error:

SR_BACKEND_FAILURE_82(, Failed to snapshot VDI [opterr=failed to pause VDI c51f22e2-ba5c-413b-8afc-9208a2b944ef],

but there is NO issues with the SR.failed 17 backups last night,

failed 3 backups tonight.

across 3 different storage arraysall those VM's we had to shut down and fix.

tapdisk connections to the disk have to be manually killed.we will double check all our interfaces again but i suspect it will be the same as last time:, no errors on networking or storage side.

something has changed, reverted.